
Self-Attention Mechanism in Large Language Models (LLMs)

The attention mechanism is a technique for improving model performance. It lets the model selectively focus on the important parts of the input data, strengthening its ability to understand and process key information.

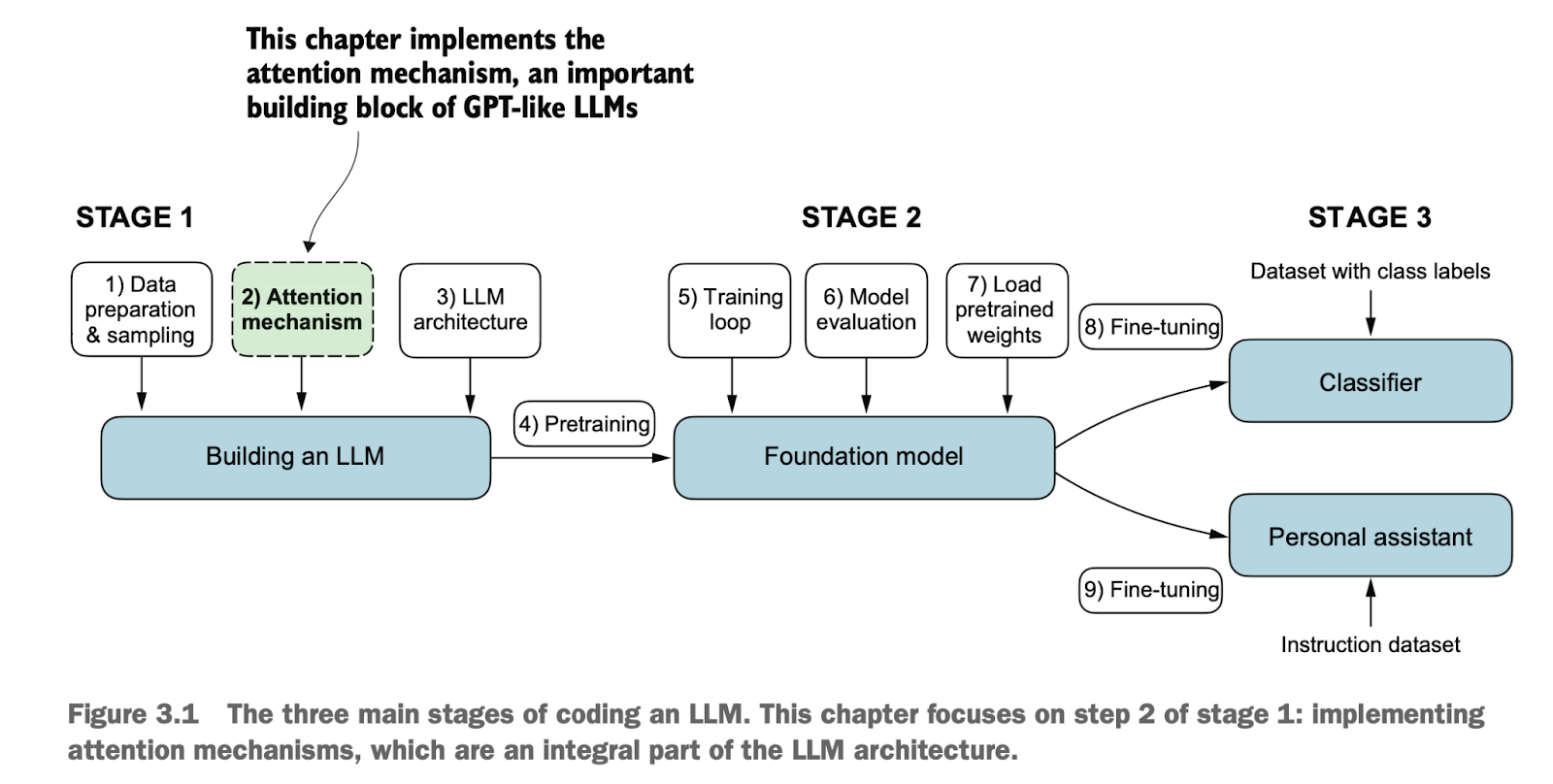

3.0 Overview


3. Coding attention mechanisms.ipynb

在神经网络中使用注意力机制(Attention Mechanisms)的原因

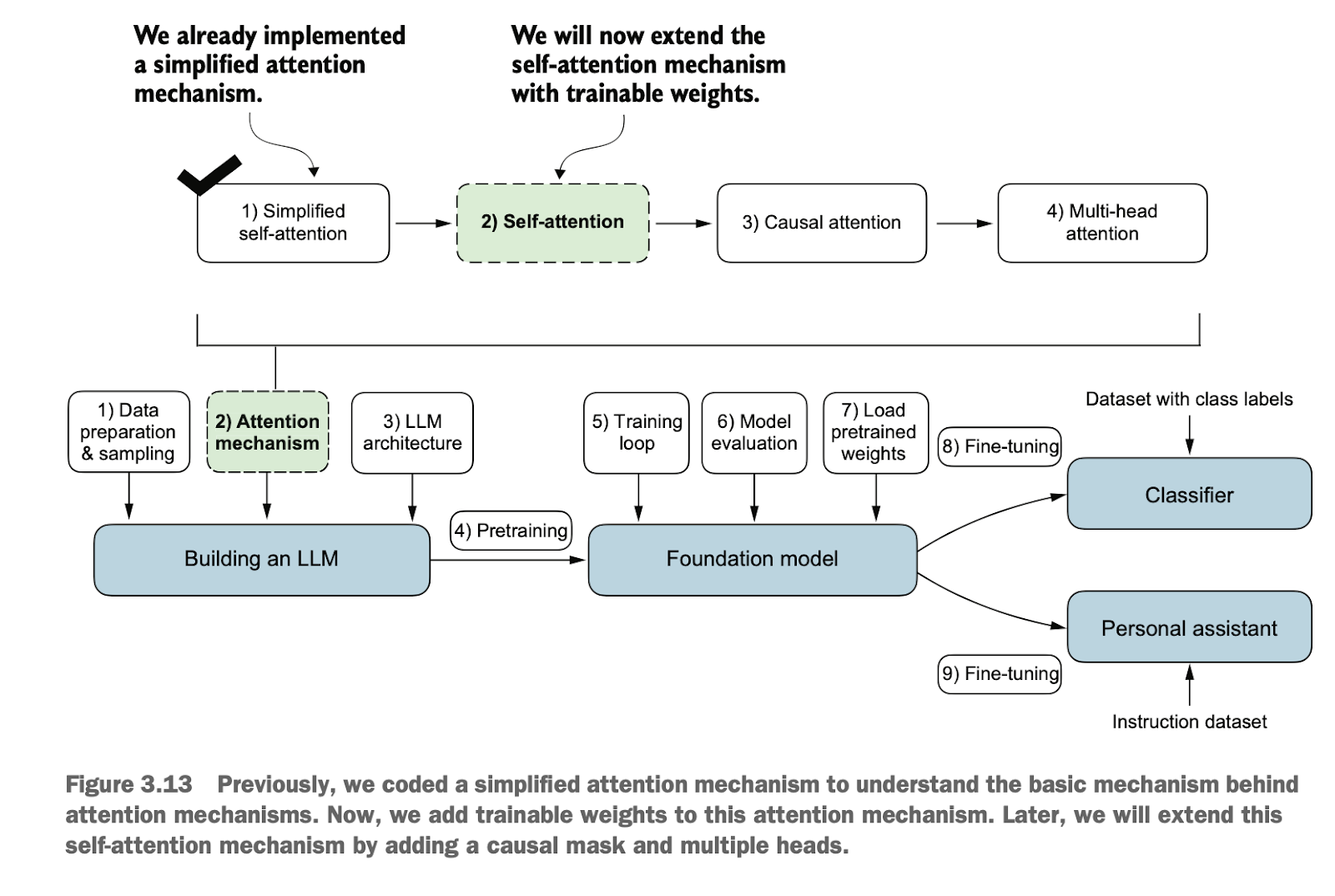

从基础的自注意力(Self-Attention)框架逐步扩展到可训练的增强型自注意力机制(Enhanced Self-Attention Mechanism)

因果注意力模块(Causal Attention Module),保证注意力训练只用之前的信息,确保生成的顺序性

使用 Dropout 随机屏蔽部分注意力权重(Attention Weights)以减少过拟合(Overfitting)

将多个因果注意力模块堆叠为多头注意力模块(Multi-Head Attention Module),并合并到一个矩阵中进行优化

Reasons for Using Attention Mechanisms in Neural Networks

From the basic Self-Attention framework, gradually extend to trainable Enhanced Self-Attention Mechanisms to improve model capacity and representation learning.

Causal Attention Module ensures that attention during training only leverages prior information, maintaining the sequential order required for autoregressive tasks.

Apply Dropout to randomly mask portions of attention weights, effectively reducing the risk of overfitting during training.

Stack multiple Causal Attention Modules into a Multi-Head Attention Module, combining their outputs into a single matrix for optimization and improving the model's ability to capture diverse relationships.

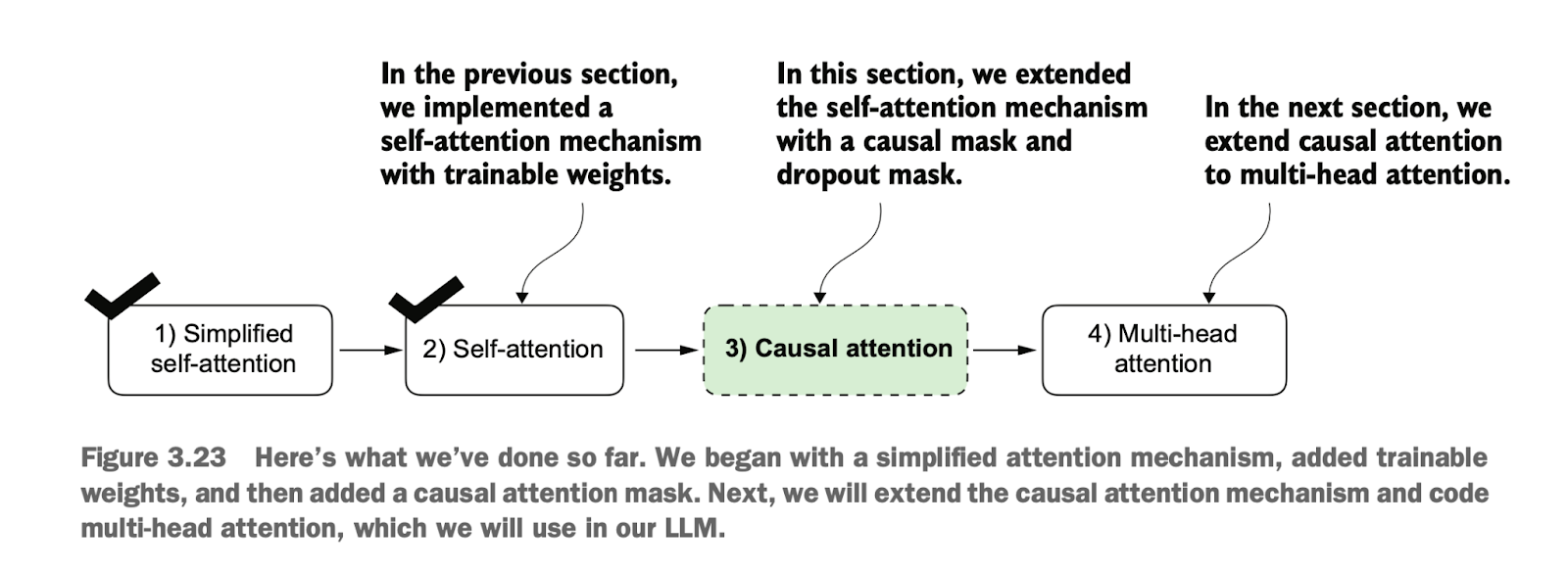

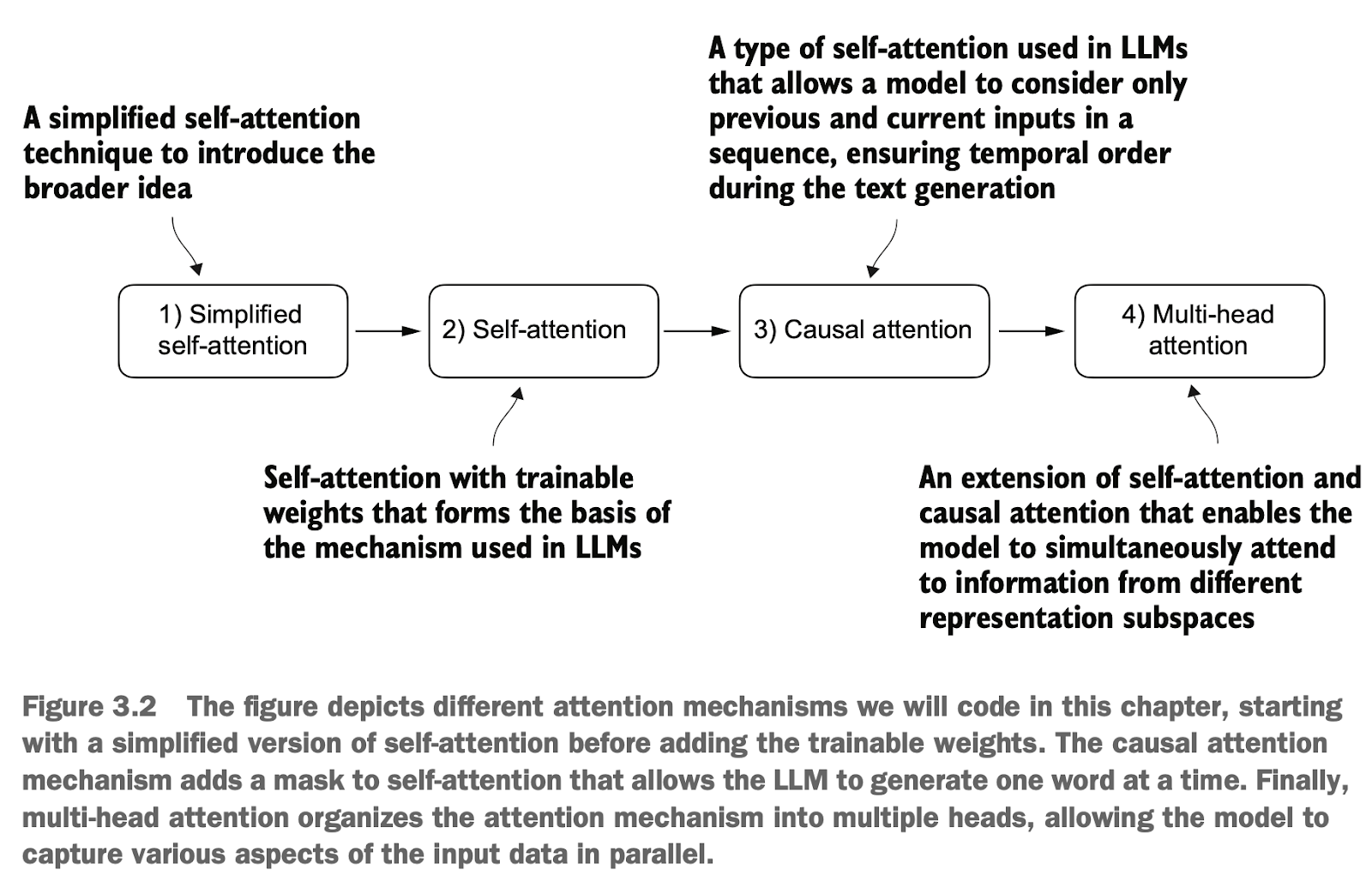

We will study the following four attention mechanisms step by step.

3.1 Challenges in Modeling Long Sequences

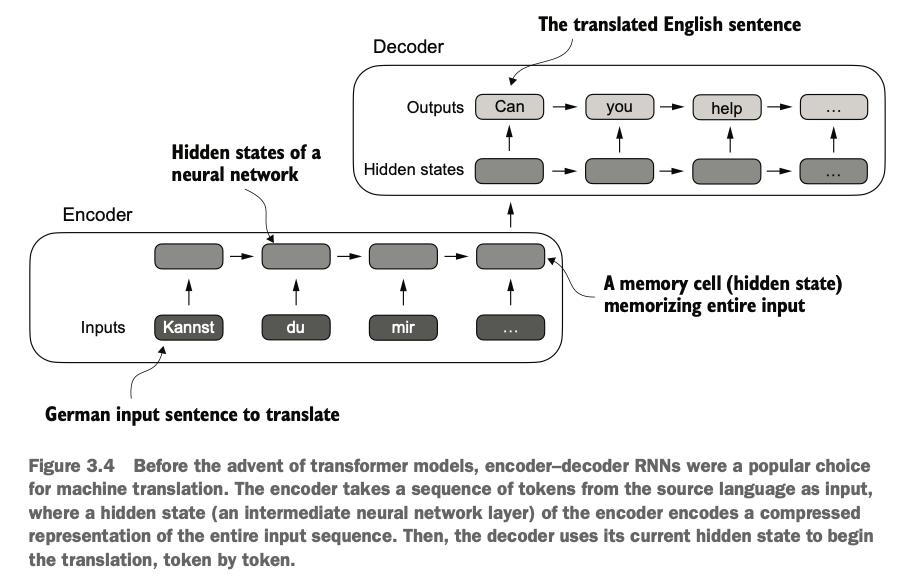

Before Transformers were developed, recurrent neural networks (RNNs) were used for tasks such as machine translation. Because grammar differs between languages, a sentence cannot be translated word by word. In an RNN, the output produced for each word is fed back into the network together with the next word, so the network's state is updated in order as the sequence is read.

The encoder reads the input sequence and updates its hidden layer (internal state) at each step.

The decoder generates the output and likewise updates its hidden layer (internal state).
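To make the recurrence concrete, here is a minimal sketch of an RNN encoder loop in PyTorch. It assumes a plain tanh RNN cell; the sizes and the names W_x, W_h, and b are hypothetical and not taken from the notebook. Every word is folded into one fixed-size hidden state, which is all the decoder receives.

```python
import torch

torch.manual_seed(0)

# Hypothetical sizes: 5 words, 3-dim embeddings, 4-dim hidden state
seq_len, d_in, d_hidden = 5, 3, 4
inputs = torch.randn(seq_len, d_in)

# Parameters of a plain tanh RNN cell (illustrative names)
W_x = torch.randn(d_hidden, d_in) * 0.1
W_h = torch.randn(d_hidden, d_hidden) * 0.1
b = torch.zeros(d_hidden)

h = torch.zeros(d_hidden)  # initial hidden state
states = []
for x_t in inputs:
    # The previous state is fed back in together with the next word
    h = torch.tanh(W_x @ x_t + W_h @ h + b)
    states.append(h)

# The encoder hands the decoder only this last, fixed-size vector
encoder_state = states[-1]
print(encoder_state.shape)  # torch.Size([4])
```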

Although RNN-based encoder-decoder models work well for short sentences, they face the following problems:

Compressing the whole input into a hidden state causes information loss.

The decoder cannot directly access the input sequence, so it lacks contextual detail.

Long-distance dependencies are hard to handle, because RNNs struggle to retain information over long sequences.

These limitations motivated researchers to design attention mechanisms, which help the model capture the context and dependencies within the input sentence during translation and thereby improve translation quality.

3.2 Using Attention Mechanisms to Capture Data Dependencies

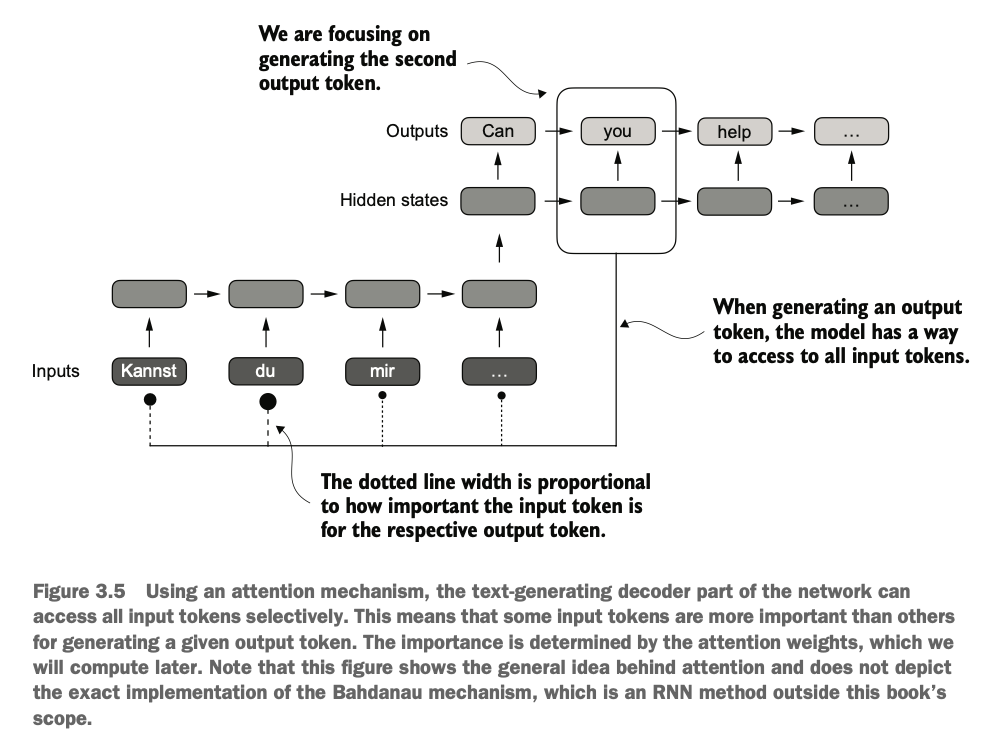

To address these limitations of RNNs, including the vanishing and exploding gradients that make long-range information hard to preserve, Bahdanau et al. modified the encoder-decoder RNN so that the decoder can selectively access different parts of the input sequence at each decoding step, which improved performance.

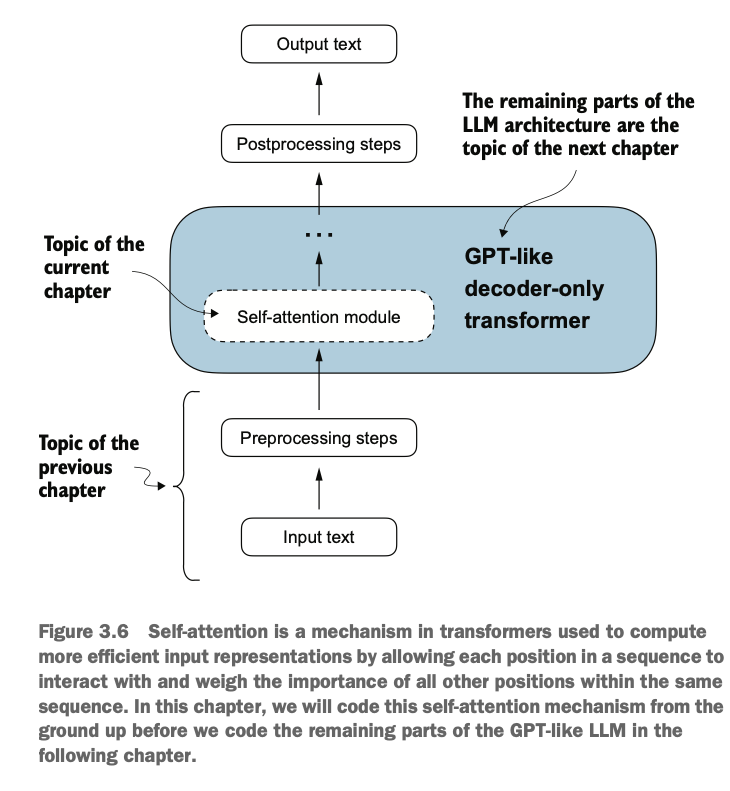

Later, researchers found that the RNN architecture could be dropped altogether in favor of attention. The core idea is that, when computing the representation of one position, the model considers every other position in the same sequence, weighted by its relevance.

3.3 Components of the Self-Attention Mechanism

3.3.1 Obtaining Non-Trainable Attention Weights

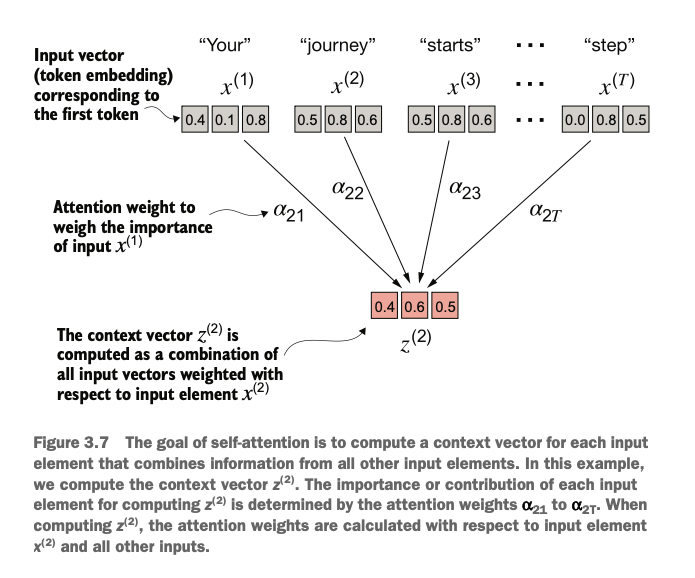

When constructing each output vector, the model takes the other input vectors into account. As shown in the figure, each input xᵢ is turned into an enhanced context vector zᵢ. To compute a particular zⱼ, the model weighs the influence of every input through a set of attention weights aⱼₖ, where aⱼₖ is the weight assigned to input xₖ.

To compute the attention weights aⱼₖ, we first take the dot product of xⱼ with each xₖ, because the dot product measures how similar two vectors are: the larger the value, the more closely the two vectors are aligned.
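A minimal sketch of this step, assuming a hypothetical 6-token sentence with made-up 3-dimensional embeddings and the second token x₂ as the query. It computes the score for every token with an explicit loop and checks that a single matrix-vector product gives the same result.

```python
import torch

# Hypothetical toy embeddings: N = 6 tokens, D = 3 dimensions each
inputs = torch.tensor([
    [0.43, 0.15, 0.89],
    [0.55, 0.87, 0.66],
    [0.57, 0.85, 0.64],
    [0.22, 0.58, 0.33],
    [0.77, 0.25, 0.10],
    [0.05, 0.80, 0.55],
])

query = inputs[1]  # use the 2nd token (x_2) as the query

# Attention score for each token k: the dot product of x_2 and x_k
scores = torch.empty(inputs.shape[0])
for k, x_k in enumerate(inputs):
    scores[k] = torch.dot(query, x_k)

# The same scores as one matrix-vector product
assert torch.allclose(scores, inputs @ query)
print(scores)
```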

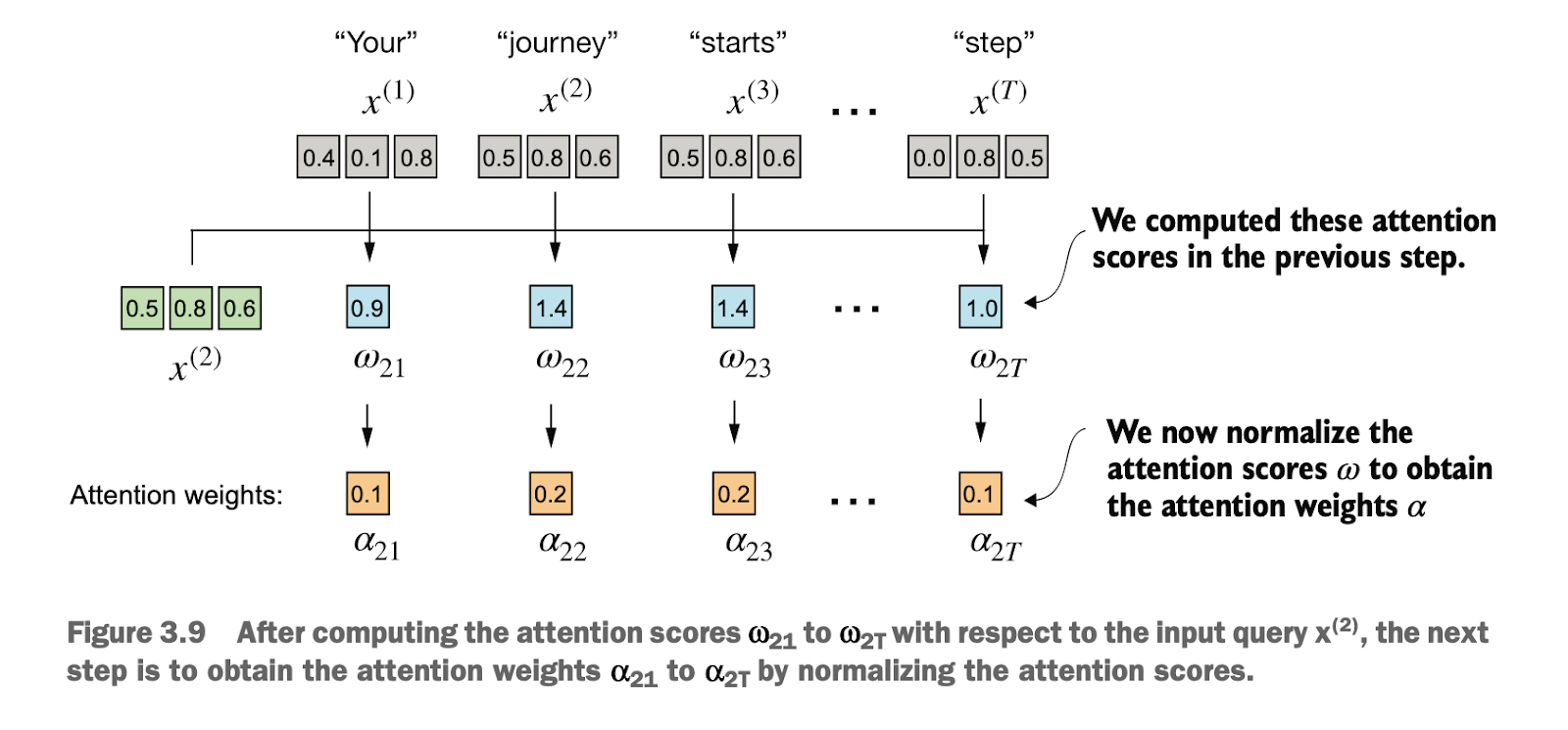

After computing the dot products (the raw attention scores) for xⱼ, we normalize them so that the weights sum to 1. This makes the weights interpretable as proportions and keeps the scale of the result stable.

(In practice, softmax is used for normalization instead of simply dividing by the sum, because softmax guarantees that all weights are positive and amplifies the relative differences between scores.)
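The following sketch, using hypothetical scores for one query, contrasts the two normalization options. With a negative score, dividing by the sum produces a negative "weight", while softmax keeps every weight positive and widens the gap between the largest scores.

```python
import torch

# Hypothetical raw attention scores for one query (one is negative)
scores = torch.tensor([2.0, 1.0, 0.5, -1.0])

# Option 1: divide by the sum (only sensible if all scores are positive)
by_sum = scores / scores.sum()

# Option 2: softmax, i.e. exponentiate, then divide by the sum of exponentials
by_softmax = torch.exp(scores) / torch.exp(scores).sum()
assert torch.allclose(by_softmax, torch.softmax(scores, dim=0))

print(by_sum)      # contains a negative "weight" for the last token
print(by_softmax)  # all weights positive, still summing to 1
```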

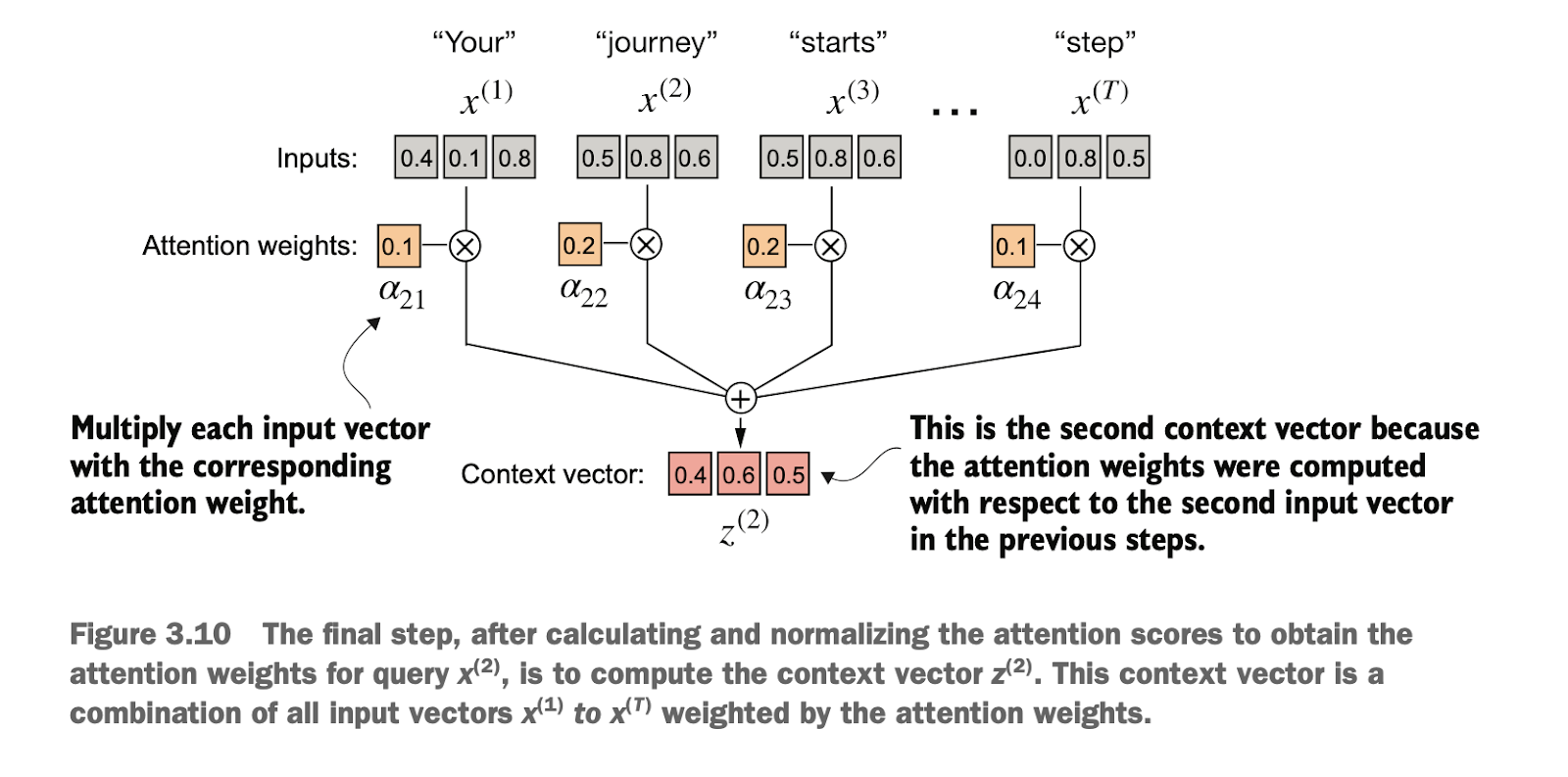

Once the normalized weights aⱼₖ are available, each input xₖ is multiplied by its weight, and the weighted sum of these vectors yields zⱼ.
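A minimal sketch of the weighted sum, assuming a hypothetical 6-token sentence with made-up 3-dimensional embeddings and x₂ as the query. It builds z₂ term by term and checks it against the equivalent vector-matrix product.

```python
import torch

# Hypothetical toy embeddings: 6 tokens, 3 dimensions
inputs = torch.tensor([
    [0.43, 0.15, 0.89],
    [0.55, 0.87, 0.66],
    [0.57, 0.85, 0.64],
    [0.22, 0.58, 0.33],
    [0.77, 0.25, 0.10],
    [0.05, 0.80, 0.55],
])

query = inputs[1]                                    # x_2
attn_weights = torch.softmax(inputs @ query, dim=0)  # a_2k for k = 1..6

# z_2 = sum over k of a_2k * x_k
z_2 = torch.zeros(inputs.shape[1])
for a_2k, x_k in zip(attn_weights, inputs):
    z_2 += a_2k * x_k

# Vectorized form: (N,) @ (N, D) -> (D,)
assert torch.allclose(z_2, attn_weights @ inputs)
print(z_2)
```

Because the weights are positive and sum to 1, z₂ is a blend of the inputs: each of its components lies between the smallest and largest value of that component across all tokens.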

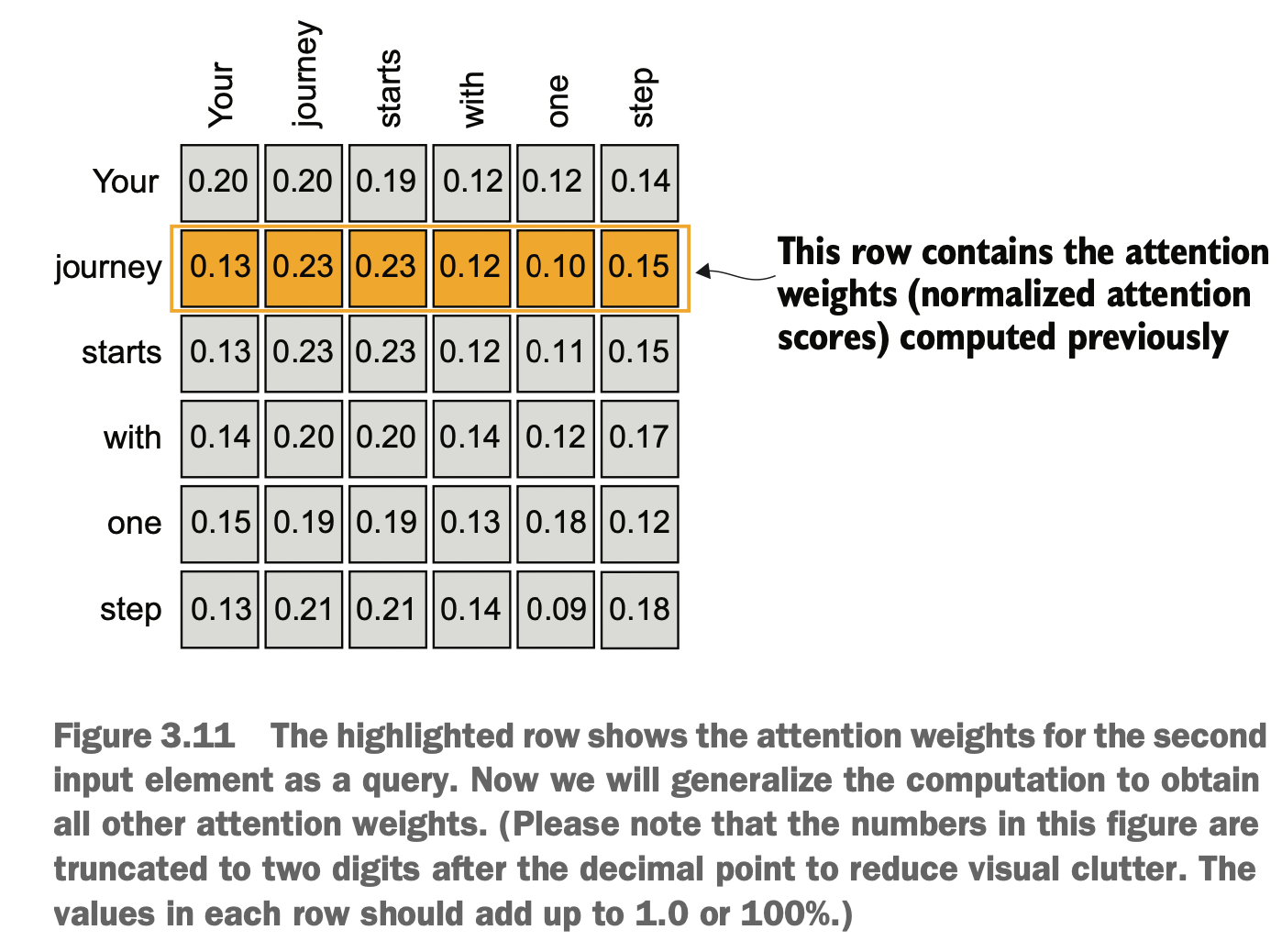

3.3.2 Using the Attention Weights to Compute Results for All Tokens

Assume a sentence of N words, each represented by a D-dimensional vector.

1. Compute the attention score matrix from the dot products between every pair of words, giving an N×N matrix.

2. Normalize each row of the score matrix, which still gives an N×N matrix.

3. Treat each row of the normalized matrix as N weights and use them to combine the embeddings of all N words (each D-dimensional). The weighted sum is a single D-dimensional vector that reflects how much attention the word of that row pays to every word in the sentence.

Shape trace for one row: the N weights scale the N×D embedding matrix, and summing over the N words leaves a 1×D vector. For all rows at once, this is the matrix product (N×N) @ (N×D) → N×D.

Each row therefore yields one D-dimensional embedding, and the final result for all N rows has shape N×D.
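The three steps map directly onto matrix operations. The sketch below assumes a hypothetical toy input (N = 6 words, D = 3) and uses softmax for the row normalization.

```python
import torch

# Hypothetical toy embeddings: N = 6 words, D = 3
inputs = torch.tensor([
    [0.43, 0.15, 0.89],
    [0.55, 0.87, 0.66],
    [0.57, 0.85, 0.64],
    [0.22, 0.58, 0.33],
    [0.77, 0.25, 0.10],
    [0.05, 0.80, 0.55],
])

# Step 1: all pairwise dot products -> (N, N) attention scores
attn_scores = inputs @ inputs.T

# Step 2: normalize each row (softmax over the last dimension)
attn_weights = torch.softmax(attn_scores, dim=-1)

# Step 3: each row of weights mixes all N embeddings -> (N, D) context vectors
context_vecs = attn_weights @ inputs

print(attn_scores.shape, attn_weights.shape, context_vecs.shape)
# torch.Size([6, 6]) torch.Size([6, 6]) torch.Size([6, 3])
```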

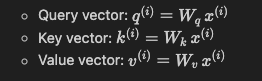

3.4 Implementing Trainable Attention Weights

3.4.1 Query, Key, and Value Weight Matrices

In practice, the attention computation contains parameters that are trained and updated so that the model produces better context vectors zᵢ.

We do this by introducing three trainable weight matrices, W_q, W_k, and W_v. They are parameters of the model and project each input vector x⁽ⁱ⁾ into query, key, and value vectors.

W_q (query weight matrix): the query vector q⁽ⁱ⁾ represents what the input vector is "asking for" in the attention mechanism. It is matched against the key vectors k⁽ʲ⁾ of the other inputs to determine their relevance.

W_k (key weight matrix): the key vector k⁽ʲ⁾ represents the "features" or "label" of an input vector. The dot product between a query q⁽ⁱ⁾ and a key k⁽ʲ⁾ gives the attention score, which measures how strongly input i depends on input j.

W_v (value weight matrix): the value vector v⁽ʲ⁾ carries the actual information content of the input vector. The attention mechanism uses the normalized attention scores to compute a weighted sum of the value vectors, which becomes the final output.

These matrices are not computed in advance; their values are learned during training through backpropagation and gradient descent.

Note that these trainable weight matrices are model parameters that are shared across all inputs and updated during training. This is fundamentally different from the attention weights in section 3.3.1, which are computed directly from a specific sentence and involve nothing to learn.
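A minimal sketch of the projections, assuming hypothetical sizes d_in = 3 and d_out = 2, random inputs, and randomly initialized matrices. Wrapping the matrices in torch.nn.Parameter marks them as trainable, and a dummy loss (not a real training objective) shows that backpropagation produces a gradient for each of them.

```python
import torch

torch.manual_seed(123)
inputs = torch.rand(6, 3)      # hypothetical: 6 tokens, d_in = 3
d_in, d_out = 3, 2             # hypothetical projection size

# nn.Parameter sets requires_grad=True, so backpropagation can update these
W_query = torch.nn.Parameter(torch.rand(d_in, d_out))
W_key = torch.nn.Parameter(torch.rand(d_in, d_out))
W_value = torch.nn.Parameter(torch.rand(d_in, d_out))

queries = inputs @ W_query     # (6, 2): what each token is "asking for"
keys = inputs @ W_key          # (6, 2): what each token offers for matching
values = inputs @ W_value      # (6, 2): the content each token contributes

# A dummy loss shows that gradients flow back into all three matrices
loss = (queries @ keys.T).sum() + values.sum()
loss.backward()
print(W_query.grad.shape, W_key.grad.shape, W_value.grad.shape)
```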

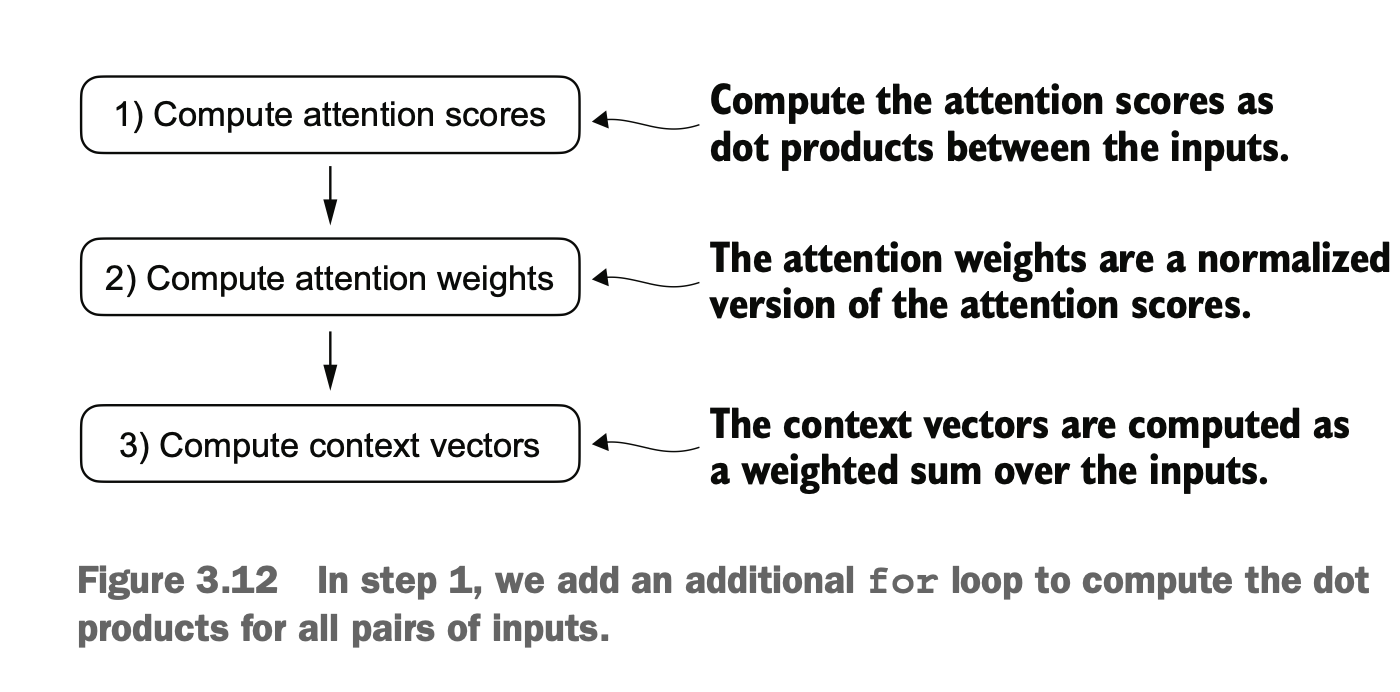

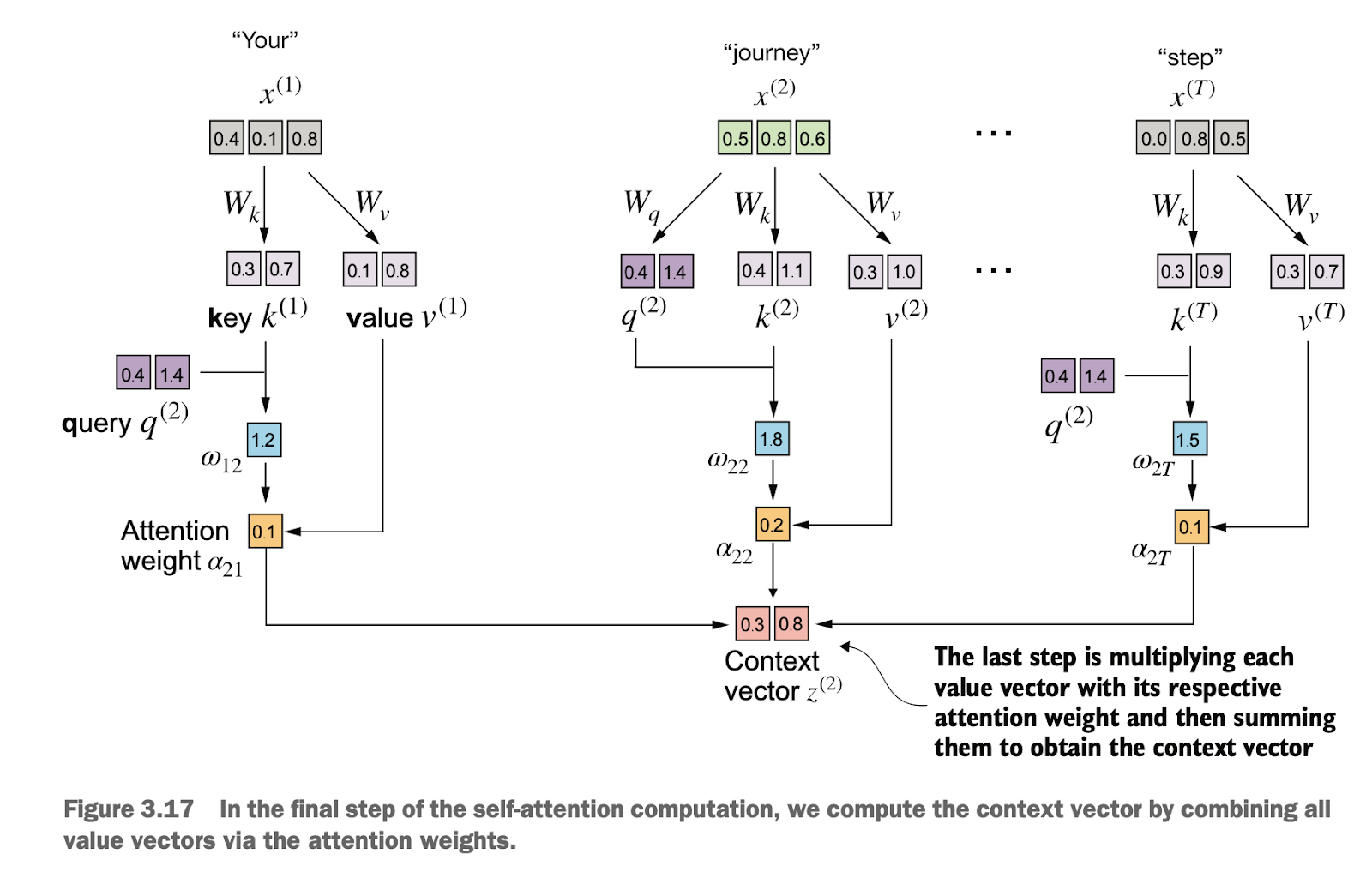

Computation steps:

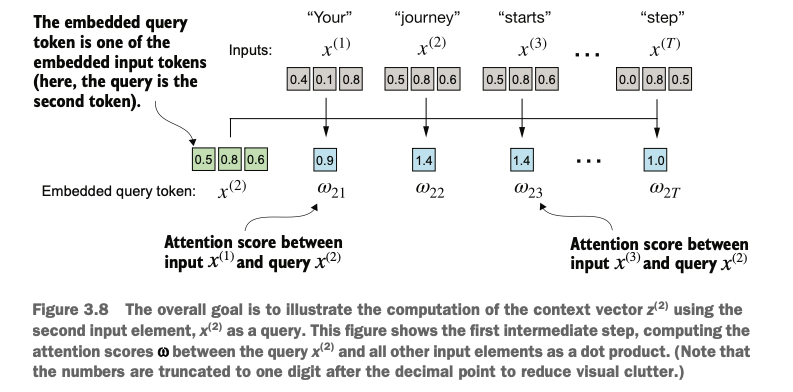

(1) With the query and key vectors in place, the attention scores for token 2 are obtained by taking the dot product of its query vector q₂ with the key vector kⱼ of every token: ω₂ⱼ = q₂ · kⱼ.

(2) The scores ω₂ are then normalized into attention weights α₂. Before applying softmax, the scores are divided by the square root of the key dimension d_k, i.e. α₂ⱼ = softmax over j of (ω₂ⱼ / √d_k). This scaling keeps the scores from growing too large as the dimension increases; very large scores would push softmax into a saturated region where gradients become vanishingly small. The technique is called scaled dot-product attention, a refinement of plain dot-product attention.

(3) Finally, each attention weight α₂ⱼ multiplies the corresponding value vector vⱼ, and the results are summed to give the context vector: z₂ = Σⱼ α₂ⱼ vⱼ.
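The three steps for token 2 can be sketched as follows. The input values and random weight matrices are hypothetical; only the sequence of operations (dot product, scaling by √d_k, softmax, weighted sum of values) follows the description above.

```python
import math
import torch

torch.manual_seed(123)
inputs = torch.rand(6, 3)              # hypothetical: 6 tokens, d_in = 3
d_in, d_out = 3, 2
W_query = torch.rand(d_in, d_out)
W_key = torch.rand(d_in, d_out)
W_value = torch.rand(d_in, d_out)

queries, keys, values = inputs @ W_query, inputs @ W_key, inputs @ W_value
q_2 = queries[1]                       # query vector of token 2

# (1) attention scores: omega_2j = q_2 . k_j
omega_2 = keys @ q_2                   # shape (6,)

# (2) scale by sqrt(d_k), then softmax -> attention weights alpha_2j
d_k = keys.shape[-1]
alpha_2 = torch.softmax(omega_2 / math.sqrt(d_k), dim=-1)

# (3) context vector: z_2 = sum_j alpha_2j * v_j
z_2 = alpha_2 @ values                 # shape (2,)
print(z_2)
```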

Summary

Query (Q): represents the item currently in focus and is used to probe the relevance of the other parts of the input sequence.

Key (K): acts as an index that is matched against the query to determine which parts of the input are most relevant.

Value (V): represents the actual content of the input; once relevance has been determined, the model retrieves the corresponding values.

In the attention mechanism, the match between queries and keys (computed with a dot product or another similarity measure) determines how the values are weighted to produce the output. The design is inspired by search and retrieval in databases: a query is compared with keys and the associated values are returned, except that attention returns a weighted blend of all values rather than a single exact match.

3.4.2 Implementing the Full Process with a Python Class

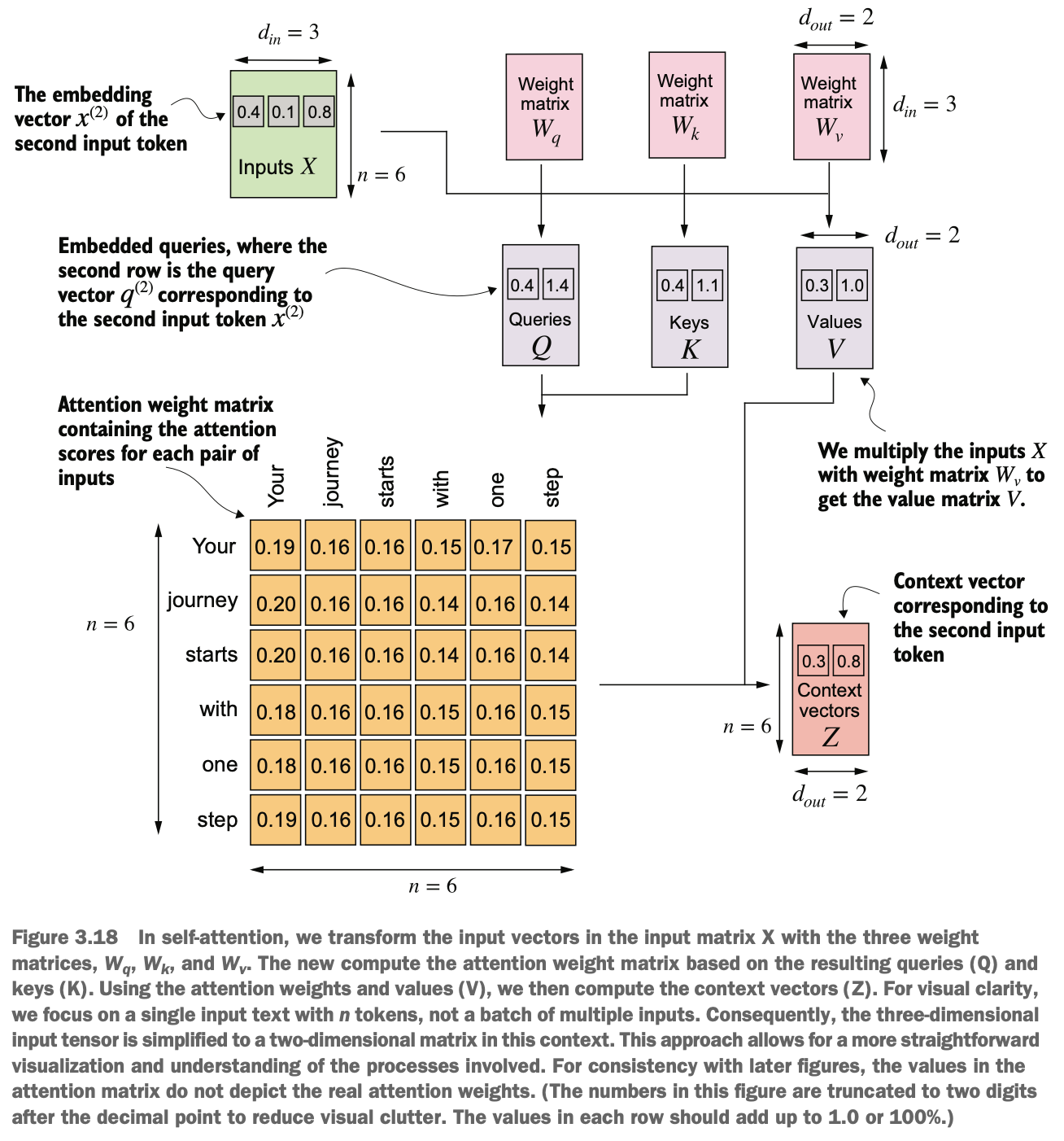

The overall data flow is as follows:

Input mapping: the three weight matrices W_q, W_k, and W_v map the input vectors to queries (Q), keys (K), and values (V). X (6×3) @ W_q, W_k, W_v (3×2) → Q, K, V (6×2)

Attention scores: multiplying Q by Kᵀ measures the relevance between every pair of tokens. Q (6×2) @ Kᵀ (2×6) → scores (6×6)

Weight normalization: the scores are scaled by 1/√d_k and passed through softmax to obtain the attention weights. weights (6×6)

Weighted sum: the attention weights combine the rows of V into context vectors. weights (6×6) @ V (6×2) → Z (6×2)

Output: each row of Z is the new, context-aware representation of the corresponding token. Z (6×2)
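The same data flow in matrix form, as a sketch with random hypothetical inputs and weights. The printed shapes follow the 6×3 → 6×2 → 6×6 → 6×2 trace above.

```python
import math
import torch

torch.manual_seed(123)
X = torch.rand(6, 3)                                   # 6 tokens, d_in = 3 (hypothetical)
W_q, W_k, W_v = (torch.rand(3, 2) for _ in range(3))  # d_out = 2

Q, K, V = X @ W_q, X @ W_k, X @ W_v                    # each (6, 2)
scores = Q @ K.T                                       # (6, 2) @ (2, 6) -> (6, 6)
weights = torch.softmax(scores / math.sqrt(K.shape[-1]), dim=-1)  # (6, 6)
Z = weights @ V                                        # (6, 6) @ (6, 2) -> (6, 2)

for name, t in [("Q", Q), ("scores", scores), ("weights", weights), ("Z", Z)]:
    print(name, tuple(t.shape))
```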

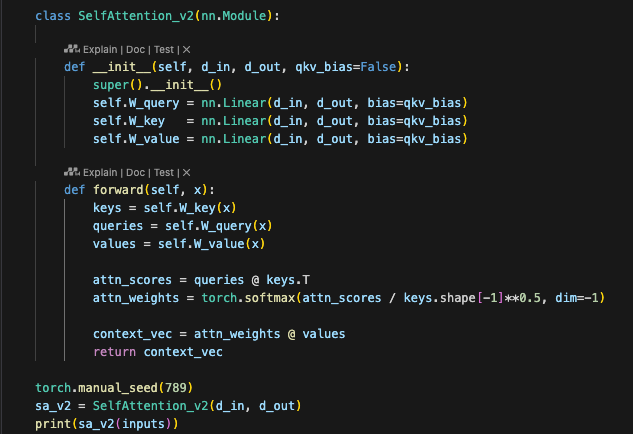

This process can be implemented as a Python class:

SelfAttention_v1 inherits from PyTorch's nn.Module, the base class for all neural network models.

forward is a special method in PyTorch that defines the model's forward pass. Calling model(inputs) invokes the module's __call__, which in turn runs forward.

As data is fed into the model during training, the query, key, and value weight matrices are updated accordingly.

The weights can also be created with nn.Linear, whose default initialization scheme tends to make subsequent training more stable and effective.
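The notes describe SelfAttention_v1 but do not reproduce its code, so the following is a sketch of what such a class can look like, plus a hypothetical SelfAttention_v2 that uses nn.Linear instead. Because nn.Linear stores its weight as (d_out, d_in) and computes x @ weight.T, copying the transposed weights into the first class makes both produce the same output.

```python
import torch
import torch.nn as nn

class SelfAttention_v1(nn.Module):
    def __init__(self, d_in, d_out):
        super().__init__()
        # Raw weight matrices registered as trainable parameters
        self.W_query = nn.Parameter(torch.rand(d_in, d_out))
        self.W_key = nn.Parameter(torch.rand(d_in, d_out))
        self.W_value = nn.Parameter(torch.rand(d_in, d_out))

    def forward(self, x):  # x: (num_tokens, d_in)
        queries = x @ self.W_query
        keys = x @ self.W_key
        values = x @ self.W_value
        attn_scores = queries @ keys.T
        attn_weights = torch.softmax(attn_scores / keys.shape[-1] ** 0.5, dim=-1)
        return attn_weights @ values

class SelfAttention_v2(nn.Module):
    def __init__(self, d_in, d_out, qkv_bias=False):
        super().__init__()
        # nn.Linear stores its weight as (d_out, d_in) with PyTorch's default init
        self.W_query = nn.Linear(d_in, d_out, bias=qkv_bias)
        self.W_key = nn.Linear(d_in, d_out, bias=qkv_bias)
        self.W_value = nn.Linear(d_in, d_out, bias=qkv_bias)

    def forward(self, x):
        queries, keys, values = self.W_query(x), self.W_key(x), self.W_value(x)
        attn_scores = queries @ keys.T
        attn_weights = torch.softmax(attn_scores / keys.shape[-1] ** 0.5, dim=-1)
        return attn_weights @ values

torch.manual_seed(789)
x = torch.rand(6, 3)
sa_v2 = SelfAttention_v2(d_in=3, d_out=2)
sa_v1 = SelfAttention_v1(d_in=3, d_out=2)
# Copy v2's weights into v1 (transposed) to show both compute the same thing
with torch.no_grad():
    sa_v1.W_query.copy_(sa_v2.W_query.weight.T)
    sa_v1.W_key.copy_(sa_v2.W_key.weight.T)
    sa_v1.W_value.copy_(sa_v2.W_value.weight.T)
print(torch.allclose(sa_v1(x), sa_v2(x), atol=1e-6))  # True
```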

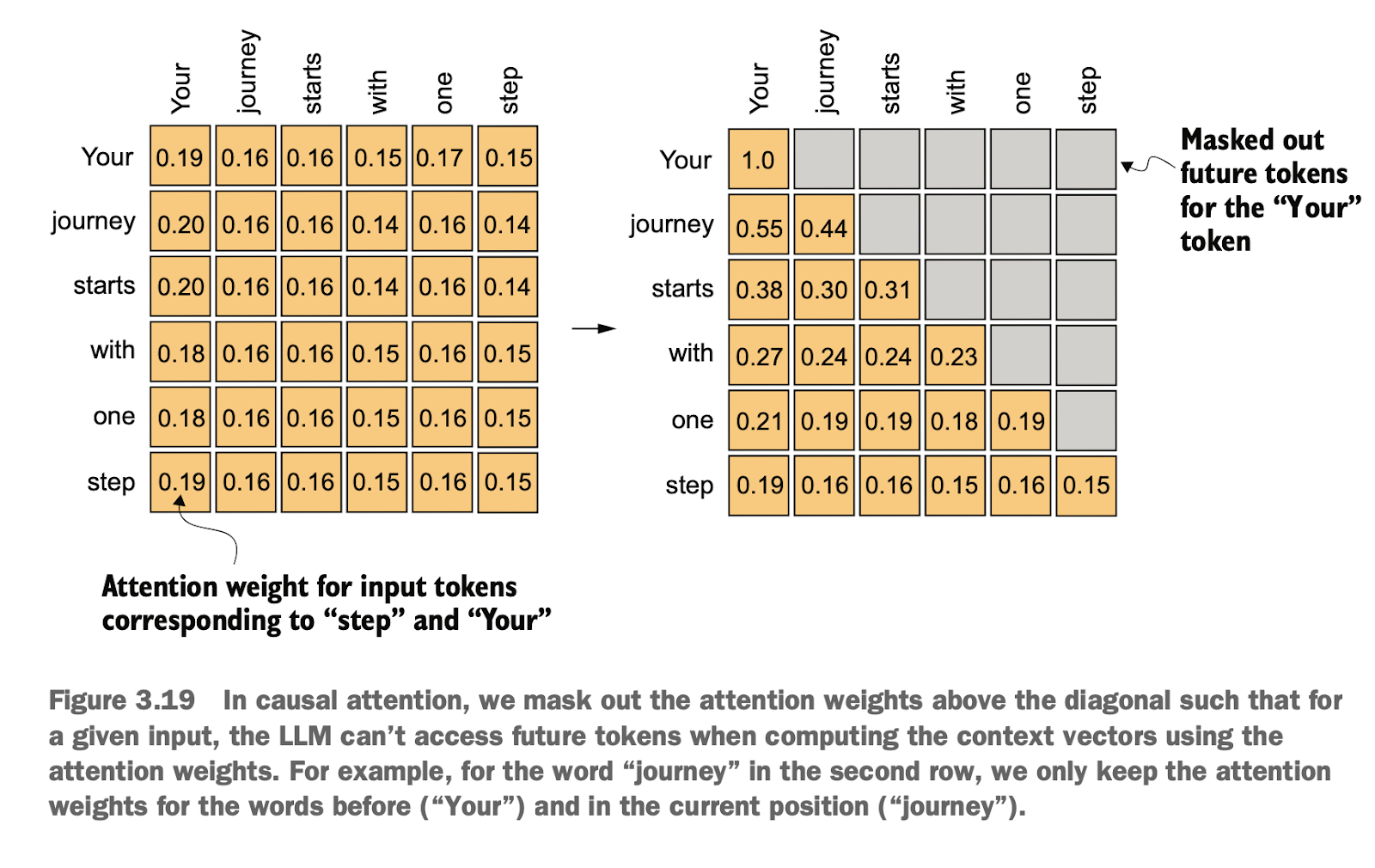

3.5 Using Causal Attention to Mask Future Tokens

When predicting the next token, many LLMs may only use the tokens that precede it and must not see tokens that come later. This is typically implemented with causal attention (also called masked attention), which restricts which positions contribute to the attention scores.

3.5.1 Causal Attention Mask

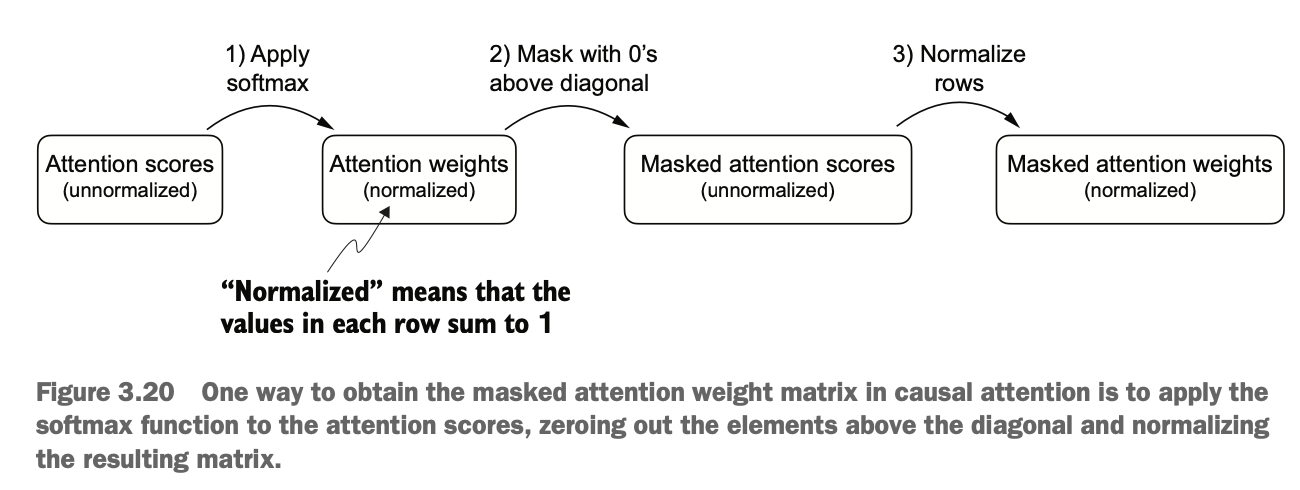

After the raw attention scores have been normalized, they are multiplied by a mask matrix that sets every value above the diagonal to 0, and each row of the remaining matrix is then re-normalized so that it sums to 1 again.

In short, after masking and re-normalization, the attention weights are exactly what we would have obtained by applying softmax over only the unmasked positions from the start. No information from future (or otherwise masked) tokens leaks in, which is the goal.

Note that we mask the full attention score matrix instead of dynamically restricting the computation to the preceding tokens of each position. Restricting the range per position would split the work into many variable-length pieces and greatly complicate the computation, whereas masking keeps everything as one dense matrix operation. Masking is chosen for efficiency, parallelism, simplicity of implementation, and scalability.

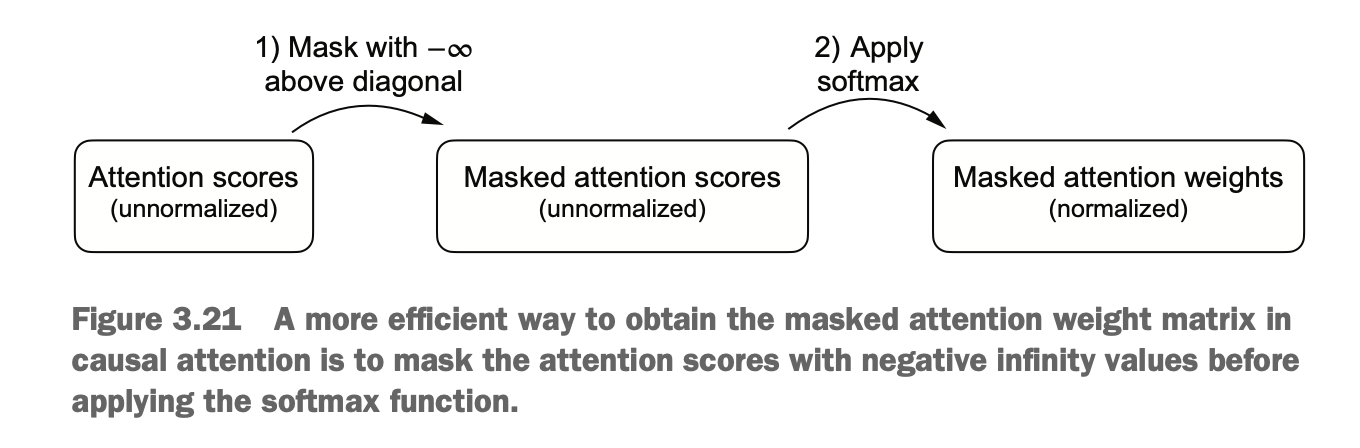

The steps above can also be simplified: set the entries above the diagonal of the raw attention score matrix to -∞ and then apply softmax. Since e^(-∞) = 0, softmax assigns those positions a weight of exactly 0, and each row is already normalized over the remaining positions.
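A short sketch, assuming random hypothetical scores for 6 tokens, that checks the two approaches agree: softmax followed by zeroing and re-normalizing, versus filling the upper triangle with -∞ before a single softmax. It uses torch.tril, torch.triu, and Tensor.masked_fill.

```python
import torch

torch.manual_seed(123)
attn_scores = torch.rand(6, 6)   # hypothetical raw scores for 6 tokens
n = attn_scores.shape[0]

# Way 1: softmax, zero out entries above the diagonal, renormalize each row
weights = torch.softmax(attn_scores, dim=-1)
mask_lower = torch.tril(torch.ones(n, n))          # 1 on/below diagonal, 0 above
masked = weights * mask_lower
weights_1 = masked / masked.sum(dim=-1, keepdim=True)

# Way 2: set entries above the diagonal to -inf, then a single softmax
mask_upper = torch.triu(torch.ones(n, n), diagonal=1).bool()
weights_2 = torch.softmax(attn_scores.masked_fill(mask_upper, float("-inf")), dim=-1)

print(torch.allclose(weights_1, weights_2))  # True
print(weights_2[0])  # the first token can only attend to itself
```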

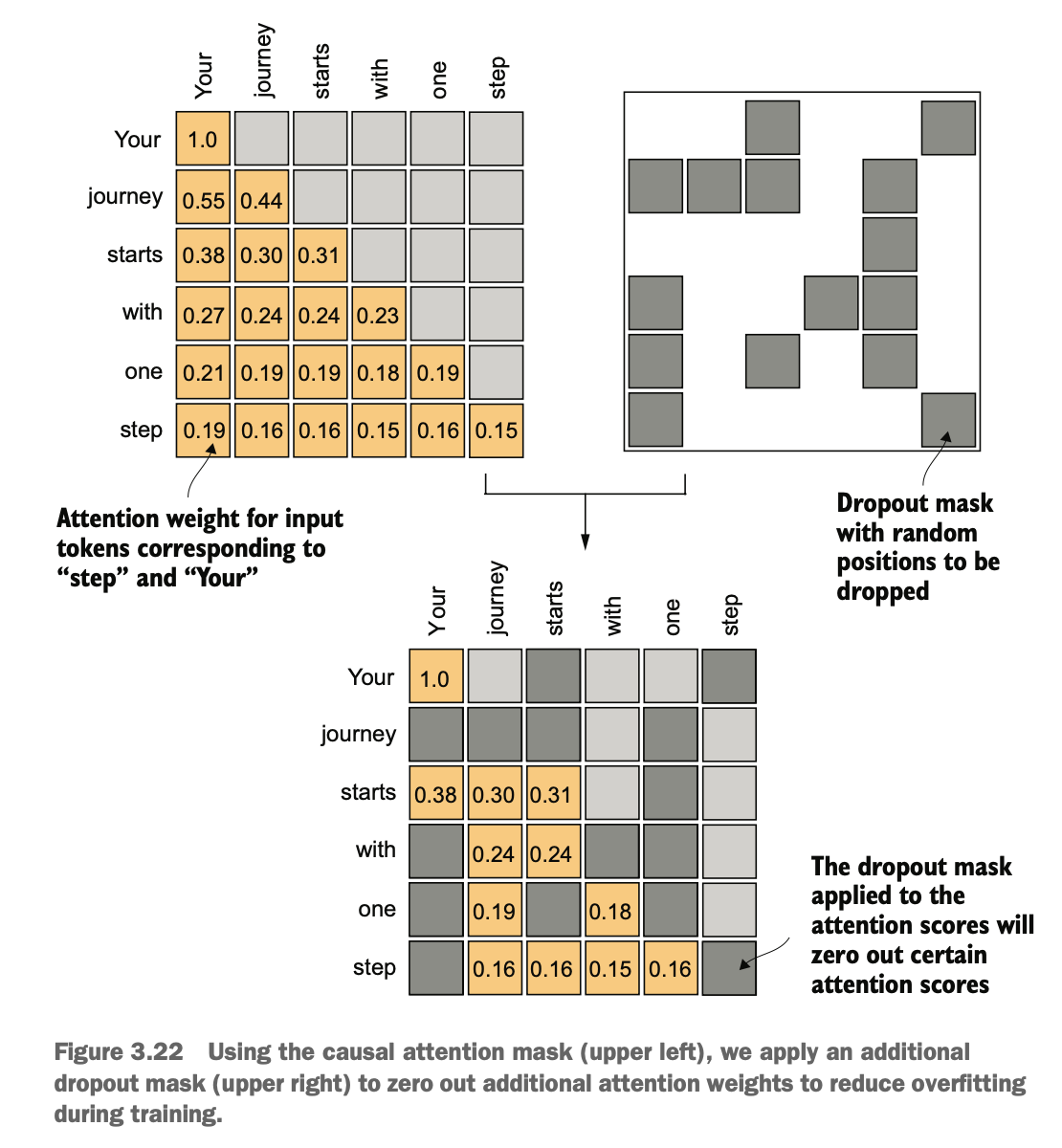

3.5.2 Using Dropout to Mask Additional Attention Weights

Dropout randomly zeros out a fraction of the values (here, attention weights) during training to keep the model from overfitting. When training GPT-style models, dropout is typically applied either to the attention weight matrix after softmax or to the context vectors obtained by multiplying the attention weights with the value vectors. Dropout is only active during training and is disabled at inference time.

Dropout not only sets some values to zero at random but also scales the remaining values by 1/(1 - p), where p is the drop rate. This keeps the expected value of each output the same during training and inference (when dropout is off), so the dropout operation does not distort the model's behavior.
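A quick illustration with torch.nn.Dropout and a hypothetical drop rate of 0.5, applied to a matrix of ones standing in for attention weights. In training mode the kept values are scaled by 1/(1 - 0.5) = 2; in evaluation mode the layer passes its input through unchanged.

```python
import torch

torch.manual_seed(123)
dropout = torch.nn.Dropout(p=0.5)   # hypothetical 50% drop rate
example = torch.ones(6, 6)

dropout.train()          # dropout is only active in training mode
out_train = dropout(example)
# Kept entries are scaled by 1 / (1 - p) = 2; dropped entries become 0
print(out_train)

dropout.eval()           # at inference, dropout is a no-op
out_eval = dropout(example)
print(torch.equal(out_eval, example))  # True
```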

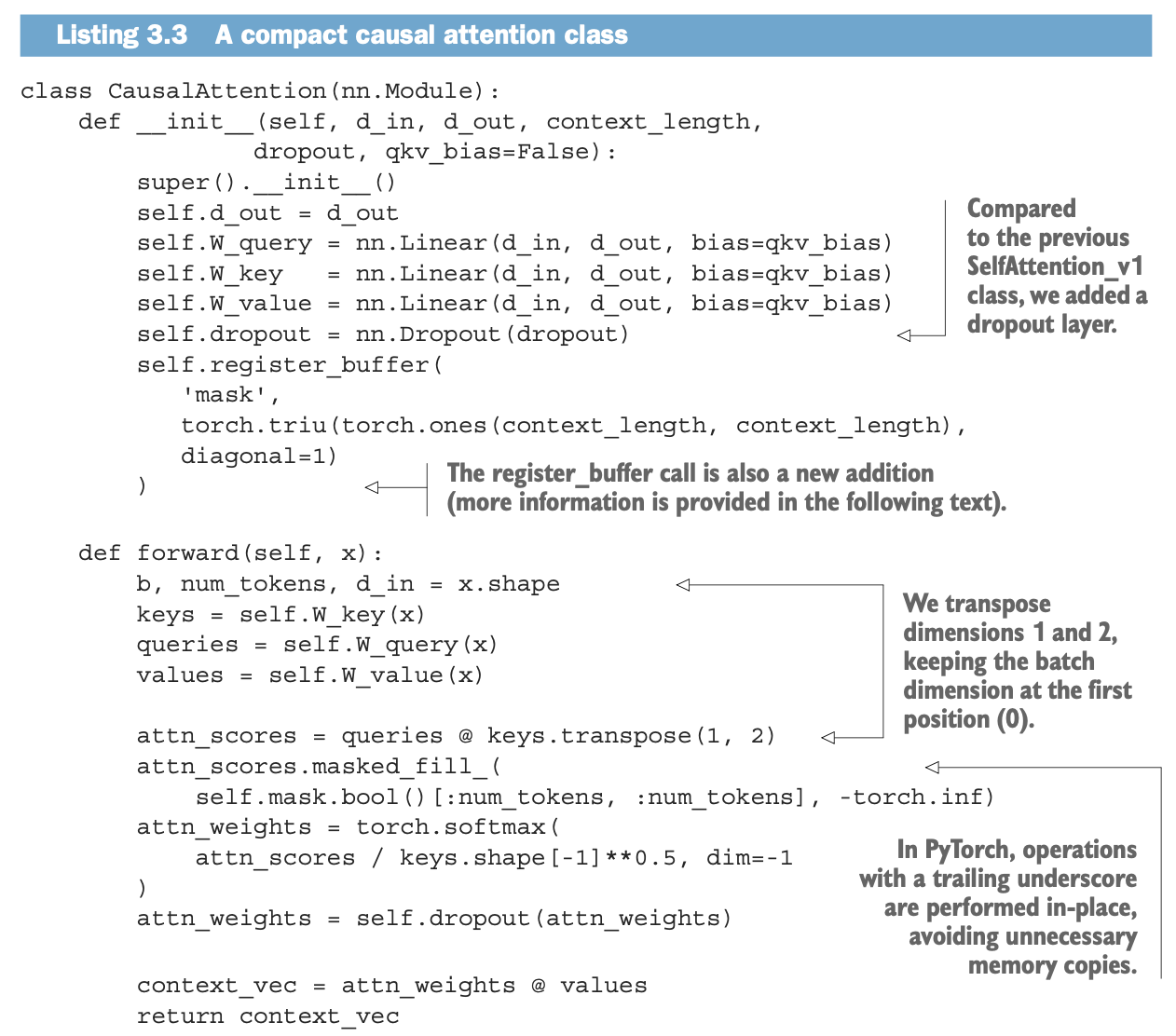

3.5.3 Python Implementation of a Complete Causal Attention Class

Overall functionality:

Implements a causal attention mechanism suitable for autoregressive tasks such as language modeling.

Uses a mask so that the current time step cannot access future time steps, preventing information leakage.

Applies dropout to the attention weights as random regularization, improving the model's generalization.

Uses register_buffer to predefine the mask matrix, so the mask is not recreated on every call; as a buffer, it also moves to the same device as the model's parameters.
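A sketch of such a class, assuming a batched input of shape (batch, num_tokens, d_in). The class and argument names (CausalAttention, context_length, qkv_bias) are illustrative choices rather than something these notes define. The last lines check causality: changing the final token leaves the outputs for all earlier tokens unchanged.

```python
import torch
import torch.nn as nn

class CausalAttention(nn.Module):
    def __init__(self, d_in, d_out, context_length, dropout, qkv_bias=False):
        super().__init__()
        self.W_query = nn.Linear(d_in, d_out, bias=qkv_bias)
        self.W_key = nn.Linear(d_in, d_out, bias=qkv_bias)
        self.W_value = nn.Linear(d_in, d_out, bias=qkv_bias)
        self.dropout = nn.Dropout(dropout)
        # Upper-triangular mask (1 = future position). A buffer is saved in
        # state_dict and moved by .to(device), but it is not trainable.
        self.register_buffer(
            "mask", torch.triu(torch.ones(context_length, context_length), diagonal=1)
        )

    def forward(self, x):  # x: (batch, num_tokens, d_in)
        _, num_tokens, _ = x.shape
        queries, keys, values = self.W_query(x), self.W_key(x), self.W_value(x)
        attn_scores = queries @ keys.transpose(1, 2)           # (b, T, T)
        # Slice the mask in case the input is shorter than context_length
        attn_scores = attn_scores.masked_fill(
            self.mask.bool()[:num_tokens, :num_tokens], float("-inf")
        )
        attn_weights = torch.softmax(attn_scores / keys.shape[-1] ** 0.5, dim=-1)
        attn_weights = self.dropout(attn_weights)
        return attn_weights @ values                           # (b, T, d_out)

torch.manual_seed(123)
batch = torch.rand(2, 6, 3)   # 2 sequences, 6 tokens, d_in = 3 (hypothetical)
ca = CausalAttention(d_in=3, d_out=2, context_length=6, dropout=0.0)
out = ca(batch)
print(out.shape)  # torch.Size([2, 6, 2])

# Causality check: changing the last token must not affect earlier outputs
changed = batch.clone()
changed[:, -1] = 5.0
print(torch.allclose(ca(changed)[:, :-1], out[:, :-1]))  # True
```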

So far, we have started from a simple self-attention mechanism, added trainable weights, and then added a causal mask and a dropout mask. Next, we implement the multi-head mechanism, which runs multiple causal attention mechanisms in parallel.

3.6 Extending Single-Head Attention to Multi-Head Attention

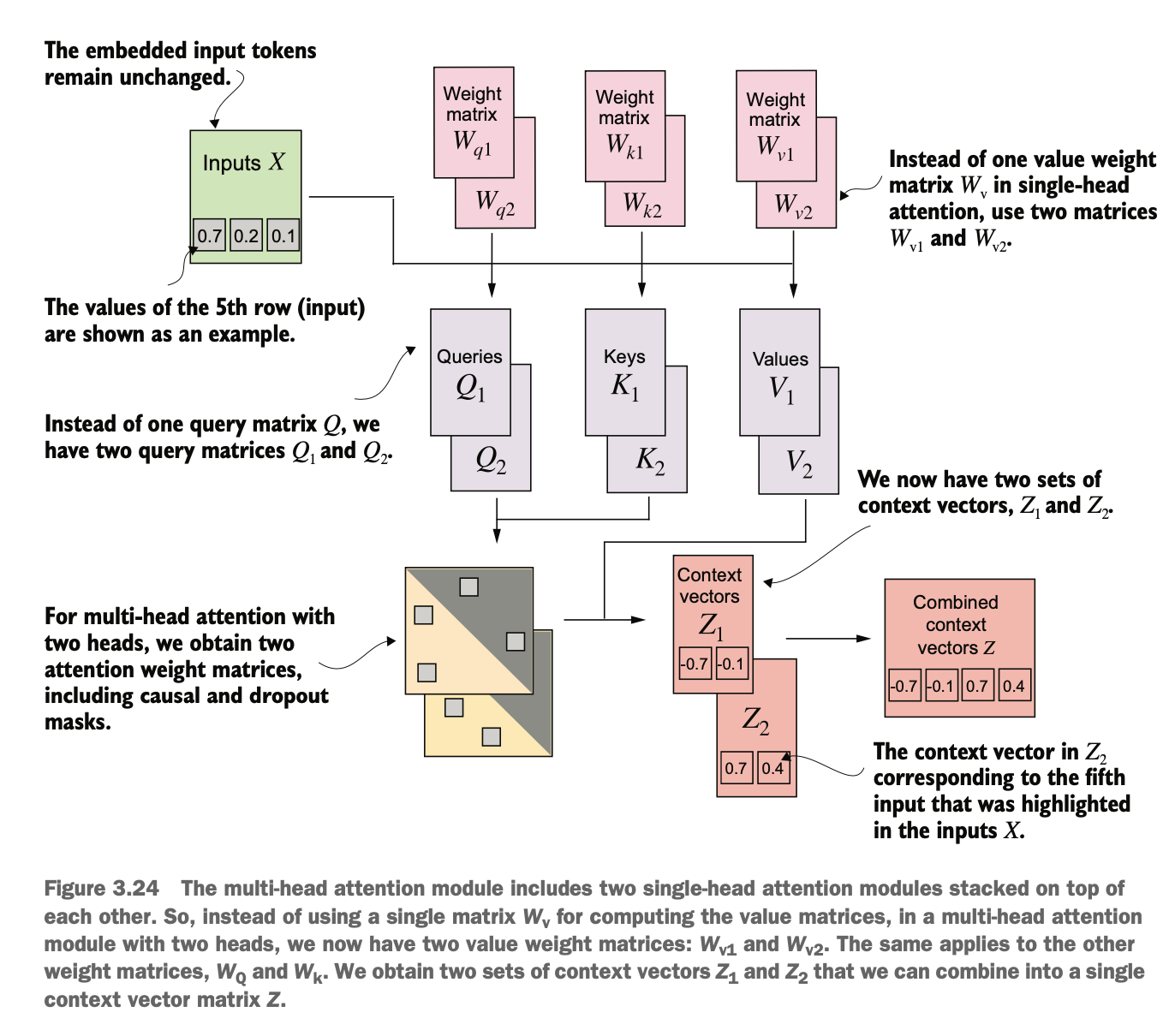

The term "multi-head" refers to dividing the attention mechanism into multiple "heads," each operating independently with its own learnable weights. In this terminology, a single causal attention module can be viewed as single-head attention: it has only one set of attention weights processing the input.

Each head can learn a different attention pattern; for example, one head might focus on syntactic relationships between words while another captures semantic ones.

3.6.1 Stacking Multiple Single-Head Attention Layers

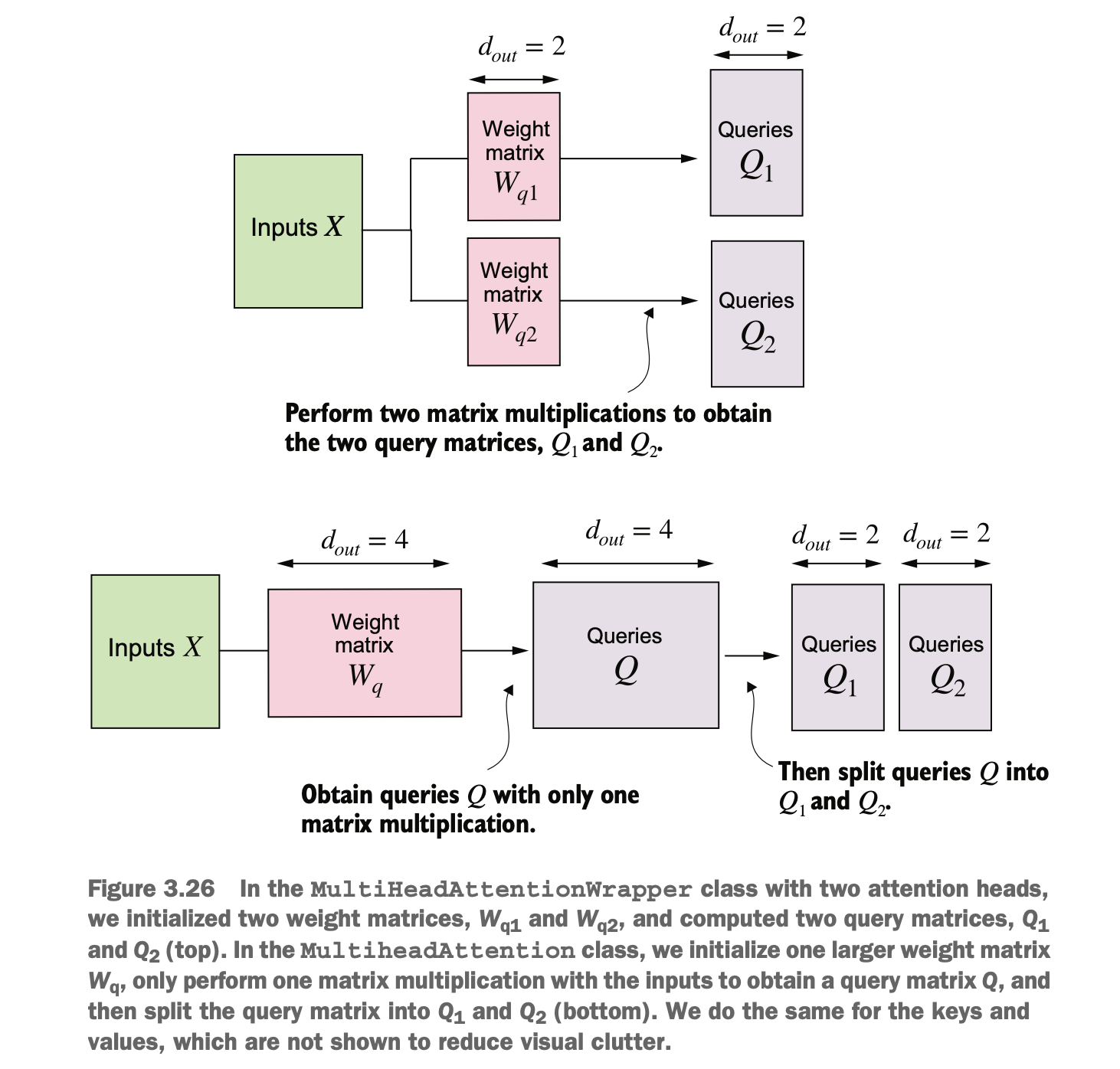

Multi-head attention is essentially several single-head attention modules computed in parallel, each with its own weights. Their outputs are concatenated so that the information from every head is preserved.
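Stacking heads can be sketched with an nn.ModuleList of independent causal attention modules whose outputs are concatenated along the last dimension. A compact CausalAttention class is included so the snippet runs on its own; the class names, argument names, and sizes are hypothetical.

```python
import torch
import torch.nn as nn

class CausalAttention(nn.Module):
    # Compact single-head causal attention (illustrative)
    def __init__(self, d_in, d_out, context_length, dropout, qkv_bias=False):
        super().__init__()
        self.W_query = nn.Linear(d_in, d_out, bias=qkv_bias)
        self.W_key = nn.Linear(d_in, d_out, bias=qkv_bias)
        self.W_value = nn.Linear(d_in, d_out, bias=qkv_bias)
        self.dropout = nn.Dropout(dropout)
        self.register_buffer(
            "mask", torch.triu(torch.ones(context_length, context_length), diagonal=1).bool()
        )

    def forward(self, x):  # x: (batch, num_tokens, d_in)
        T = x.shape[1]
        q, k, v = self.W_query(x), self.W_key(x), self.W_value(x)
        scores = (q @ k.transpose(1, 2)).masked_fill(self.mask[:T, :T], float("-inf"))
        weights = self.dropout(torch.softmax(scores / k.shape[-1] ** 0.5, dim=-1))
        return weights @ v

class MultiHeadAttentionWrapper(nn.Module):
    def __init__(self, d_in, d_out, context_length, dropout, num_heads, qkv_bias=False):
        super().__init__()
        # Each head is a separate module with its own query/key/value weights
        self.heads = nn.ModuleList(
            [CausalAttention(d_in, d_out, context_length, dropout, qkv_bias)
             for _ in range(num_heads)]
        )

    def forward(self, x):
        # Run all heads on the same input, concatenate along the feature dimension
        return torch.cat([head(x) for head in self.heads], dim=-1)

torch.manual_seed(123)
batch = torch.rand(2, 6, 3)   # hypothetical: 2 sequences, 6 tokens, d_in = 3
mha = MultiHeadAttentionWrapper(d_in=3, d_out=2, context_length=6, dropout=0.0, num_heads=2)
context_vecs = mha(batch)
print(context_vecs.shape)  # torch.Size([2, 6, 4]): num_heads * d_out features
```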

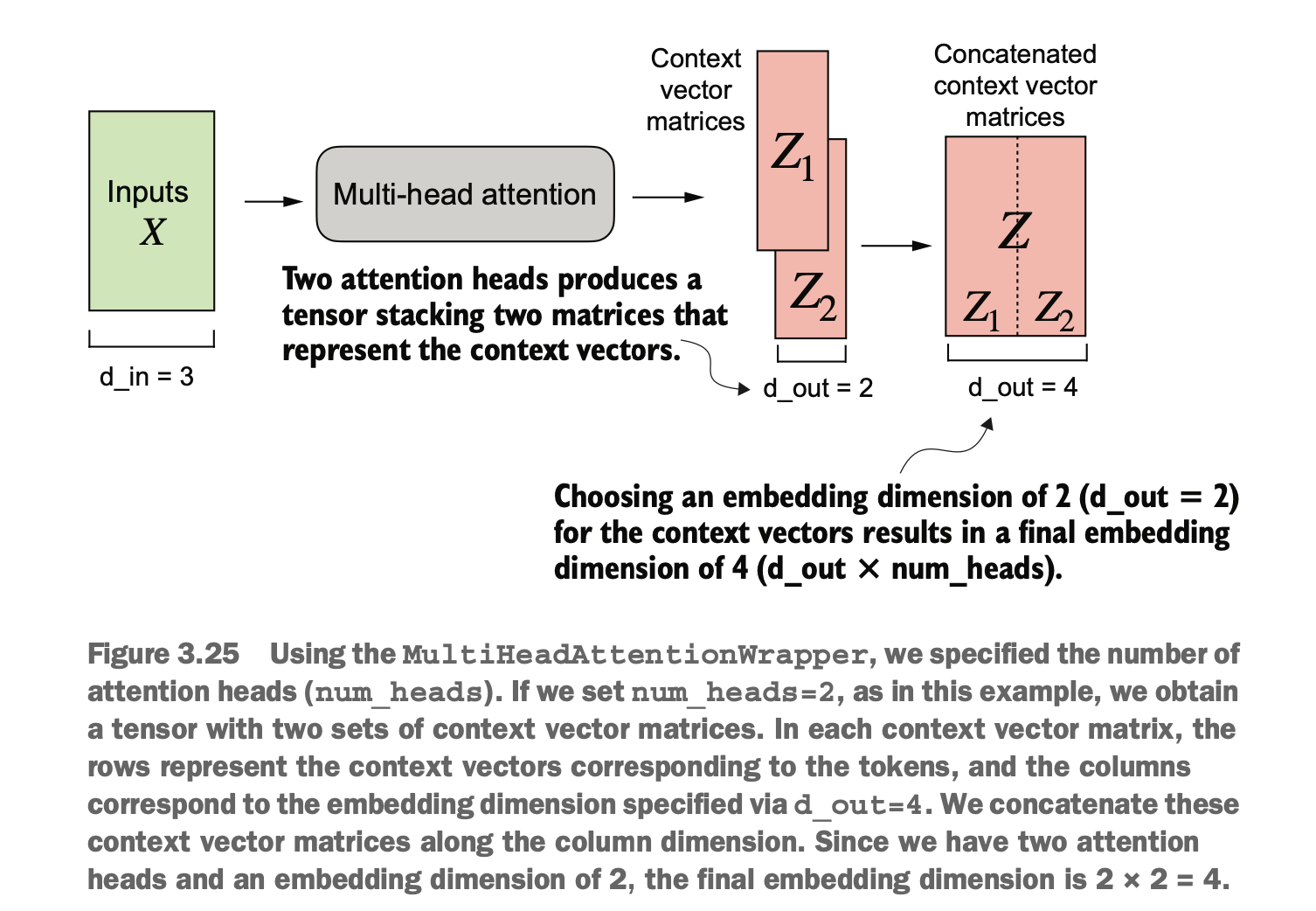

3.6.2 Implementing Multi-Head Attention via Splitting

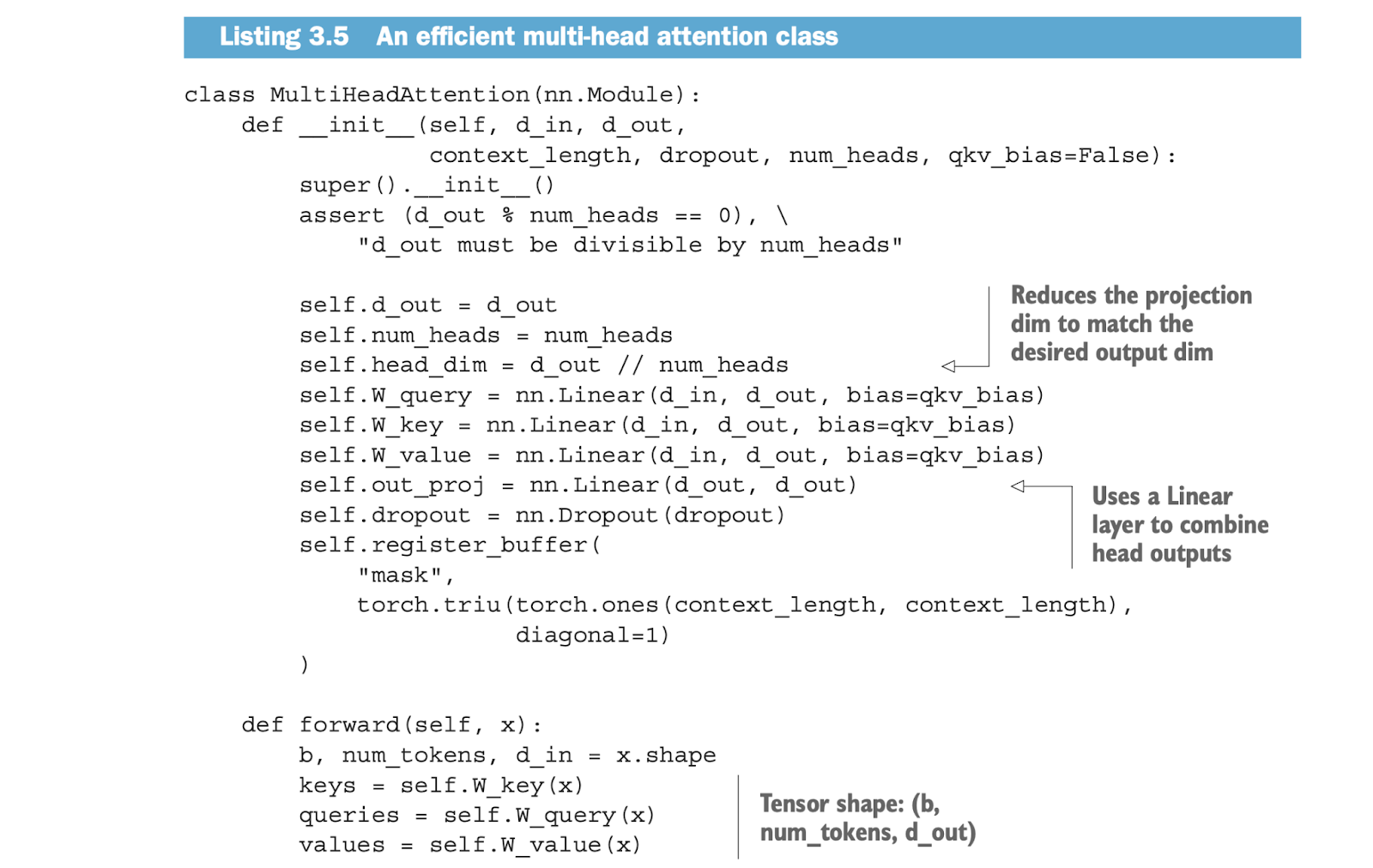

Next, we improve the class implementation: instead of combining several separate single-head modules, we compute all heads inside a single module, which is more computationally efficient.

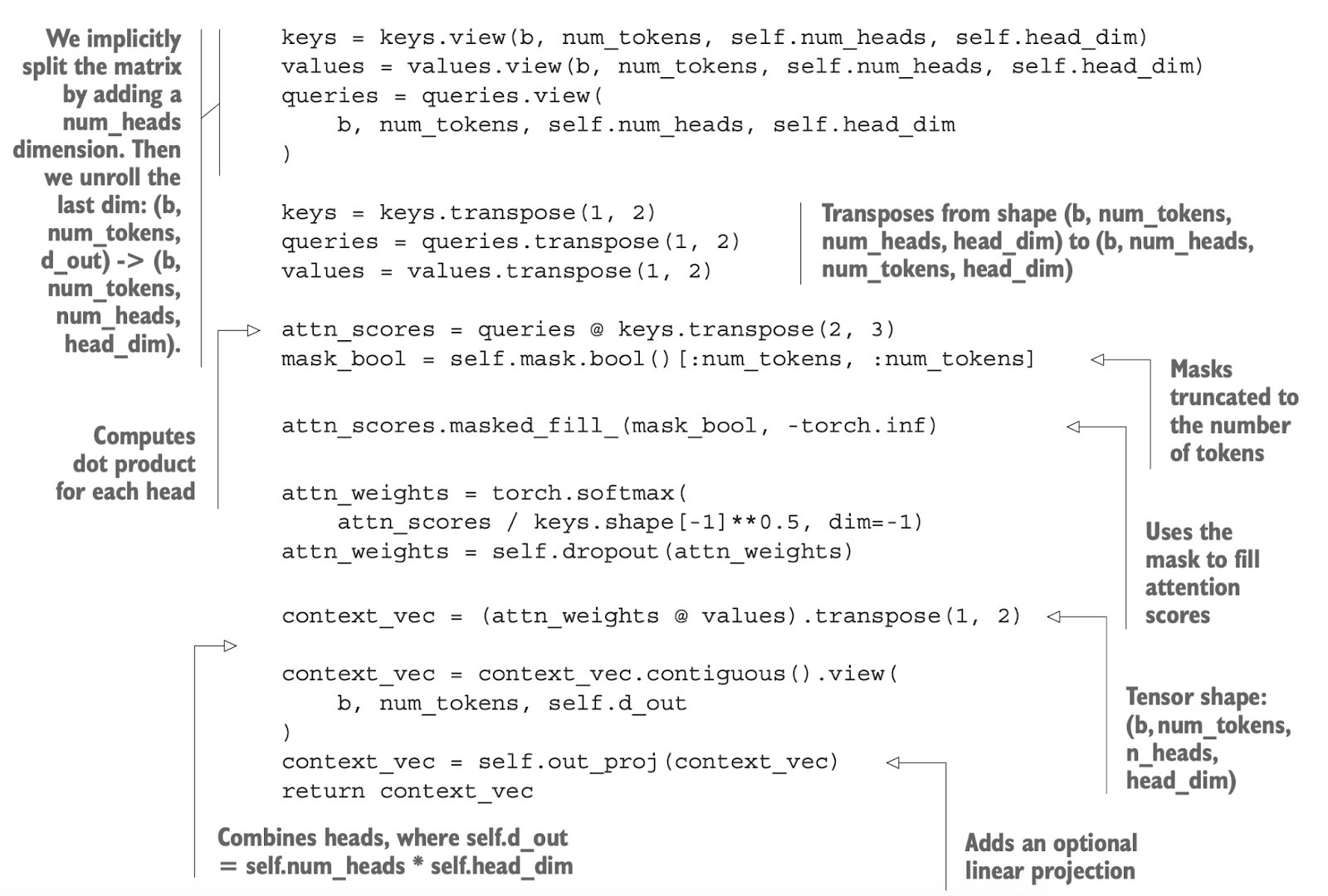

The key change is that a tensor of shape (b, num_tokens, d_out) is reshaped into (b, num_tokens, num_heads, head_dim). For this to work, the per-head dimension head_dim must satisfy d_out = num_heads × head_dim.

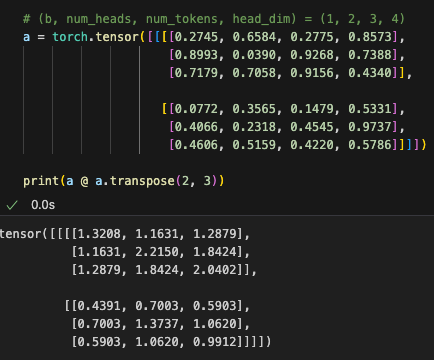

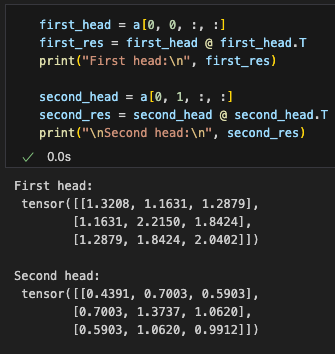

As shown in the figure, transposing to (b, num_heads, num_tokens, head_dim) lets all heads be computed in parallel, improving efficiency.

In the code, a @ a.transpose(2, 3) multiplies the matrices formed by the last two dimensions, leaving the batch and head dimensions unchanged. For every batch index i and head index j, it is equivalent to a[i, j, :, :] @ a[i, j, :, :].T, so it computes one attention score matrix for each head of each batch element.
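A small sketch with hypothetical sizes (b = 1, num_tokens = 3, num_heads = 2, head_dim = 4) that performs the reshape and transpose, shows which slice of the features each head receives, and verifies that the batched product a @ a.transpose(2, 3) matches explicit loops over batches and heads.

```python
import torch

b, num_tokens, num_heads, head_dim = 1, 3, 2, 4   # hypothetical sizes
d_out = num_heads * head_dim                      # must hold: d_out = num_heads * head_dim

torch.manual_seed(0)
x = torch.rand(b, num_tokens, d_out)

# (b, num_tokens, d_out) -> (b, num_tokens, num_heads, head_dim)
a = x.view(b, num_tokens, num_heads, head_dim)
# -> (b, num_heads, num_tokens, head_dim): heads become a batch-like dimension
a = a.transpose(1, 2)

# Head j sees feature columns j*head_dim .. (j+1)*head_dim - 1
assert torch.equal(a[0, 1], x[0, :, head_dim:2 * head_dim])

# Batched matmul over the last two dims: one (num_tokens x num_tokens) matrix per head
scores = a @ a.transpose(2, 3)   # (b, num_heads, num_tokens, num_tokens)

# Same result with explicit loops over batches and heads
loop = torch.empty_like(scores)
for i in range(b):
    for j in range(num_heads):
        loop[i, j] = a[i, j] @ a[i, j].T
print(torch.allclose(scores, loop))  # True
```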

For reference, the smallest GPT-2 model has 12 attention heads and an embedding dimension d_in of 768, while the largest GPT-2 model (GPT-2 XL, about 1.5B parameters) has 25 attention heads and an embedding dimension of 1600. In both cases head_dim = 64.

Parameter summary:

batch: batch size, the number of samples processed at once (can vary during training).

num_tokens: the number of tokens in the input sequence (sequence length, up to the context length).

d_in: input embedding dimension (also the model's hidden dimension).

d_out: output embedding dimension (usually equal to d_in).

num_heads: number of attention heads.

head_dim: dimension of each attention head, d_out / num_heads.

In a Transformer, the attention input dimension d_in and output dimension d_out are usually set to the same value (d_model). This keeps the model structure symmetric and makes residual connections and layer normalization straightforward, because x and Attention(x) must have the same shape to be added:

output = LayerNorm(x + Attention(x))

(This is the post-LN arrangement of the original Transformer; GPT-2 applies LayerNorm before attention instead: output = x + Attention(LayerNorm(x)).)
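To illustrate why matching dimensions matter for the residual connection, here is a sketch that uses PyTorch's built-in nn.MultiheadAttention (not the class developed in these notes) with hypothetical sizes and no causal mask, in the post-LN arrangement LayerNorm(x + Attention(x)).

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
d_model, num_heads = 8, 2   # hypothetical sizes; d_in == d_out == d_model
attn = nn.MultiheadAttention(embed_dim=d_model, num_heads=num_heads, batch_first=True)
norm = nn.LayerNorm(d_model)

x = torch.rand(2, 5, d_model)   # (batch, num_tokens, d_model)
attn_out, _ = attn(x, x, x)     # self-attention: query = key = value = x
# The residual sum x + attn_out only works because both are (2, 5, d_model)
output = norm(x + attn_out)     # post-LN: LayerNorm(x + Attention(x))
print(output.shape)             # torch.Size([2, 5, 8])
```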

Each head has its own Q, K, and V weight matrices (W^Q, W^K, W^V), so it can learn a different way of attending to the same input.

head_dim = d_out / num_heads is the output dimension of a single head. The outputs of all heads are concatenated and then fused (through a linear transformation) before contributing to the final prediction.
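Putting the pieces of this section together, here is a sketch of a single-module multi-head attention class. It assumes the split-by-reshape approach described above, a causal mask stored as a buffer, and a final linear layer (called out_proj here, a hypothetical name) that fuses the concatenated heads. With d_in = d_out = 768 and num_heads = 12, the GPT-2 small configuration, head_dim works out to 64.

```python
import torch
import torch.nn as nn

class MultiHeadAttention(nn.Module):
    def __init__(self, d_in, d_out, context_length, dropout, num_heads, qkv_bias=False):
        super().__init__()
        assert d_out % num_heads == 0, "d_out must be divisible by num_heads"
        self.d_out = d_out
        self.num_heads = num_heads
        self.head_dim = d_out // num_heads
        # One large projection each for Q/K/V; columns are later split across heads
        self.W_query = nn.Linear(d_in, d_out, bias=qkv_bias)
        self.W_key = nn.Linear(d_in, d_out, bias=qkv_bias)
        self.W_value = nn.Linear(d_in, d_out, bias=qkv_bias)
        self.out_proj = nn.Linear(d_out, d_out)  # fuses the concatenated heads
        self.dropout = nn.Dropout(dropout)
        self.register_buffer(
            "mask", torch.triu(torch.ones(context_length, context_length), diagonal=1)
        )

    def _split(self, t, b, num_tokens):
        # (b, T, d_out) -> (b, T, num_heads, head_dim) -> (b, num_heads, T, head_dim)
        return t.view(b, num_tokens, self.num_heads, self.head_dim).transpose(1, 2)

    def forward(self, x):  # x: (b, num_tokens, d_in)
        b, num_tokens, _ = x.shape
        queries = self._split(self.W_query(x), b, num_tokens)
        keys = self._split(self.W_key(x), b, num_tokens)
        values = self._split(self.W_value(x), b, num_tokens)

        attn_scores = queries @ keys.transpose(2, 3)   # (b, num_heads, T, T)
        mask = self.mask.bool()[:num_tokens, :num_tokens]
        attn_scores = attn_scores.masked_fill(mask, float("-inf"))
        attn_weights = torch.softmax(attn_scores / self.head_dim ** 0.5, dim=-1)
        attn_weights = self.dropout(attn_weights)

        context = (attn_weights @ values).transpose(1, 2)  # (b, T, num_heads, head_dim)
        # Concatenate heads back into d_out, then mix them with the output projection
        context = context.contiguous().view(b, num_tokens, self.d_out)
        return self.out_proj(context)

torch.manual_seed(123)
batch = torch.rand(2, 6, 3)   # hypothetical: 2 sequences, 6 tokens, d_in = 3
mha = MultiHeadAttention(d_in=3, d_out=4, context_length=6, dropout=0.0, num_heads=2)
print(mha(batch).shape)       # torch.Size([2, 6, 4])

gpt2_small = MultiHeadAttention(d_in=768, d_out=768, context_length=1024,
                                dropout=0.0, num_heads=12)
print(gpt2_small.head_dim)    # 64
```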

We can think of each head as an "expert" and the entire embedding as a "complex problem." Then:

Each expert examines the problem using its own methods (its own Q/K/V projections).

Although each expert only produces a summary of dimension head_dim = d_out / num_heads, every summary is derived from the full input information.

These summaries are then concatenated and processed jointly.

So why use multiple small heads instead of one large head? This design motivation is given by Vaswani et al. in the paper "Attention Is All You Need":

Multiple small heads can attend to different representation subspaces of the input, allowing the model to capture diverse attention patterns from different perspectives, which makes them more effective than a single large head.

In other words:

The core idea of multi-head attention is not simply to "widen the dimension" but to divide and conquer.

Each head processes a different feature subspace in parallel.

The outputs are then concatenated and fused (through a linear transformation) into a unified representation.

Summary

The attention mechanism transforms input elements into enhanced context vector representations that incorporate information about all inputs.

Self-attention computes each context vector as a weighted sum over the inputs, where the weights are determined by the inputs themselves.

In the simplified attention mechanism, the attention weights are computed from dot products.

A dot product is a concise operation that multiplies the corresponding elements of two vectors and sums the results.

Matrix multiplication is not strictly necessary, but it replaces nested loops and makes the computation more efficient and compact.

In the self-attention mechanism used in LLMs, also called scaled dot-product attention, we introduce trainable weight matrices to compute intermediate transformations of the inputs: queries, keys, and values.

When working with LLMs that generate text from left to right, we add a causal attention mask to prevent the model from accessing future tokens.

In addition to the causal mask, which sets attention weights to zero, we can add a dropout mask to reduce overfitting in LLMs.

The attention modules in Transformer-based LLMs contain multiple instances of causal attention; this is called multi-head attention.

A multi-head attention module can be created by stacking multiple causal attention modules.

A more efficient way to implement multi-head attention is to use batched matrix multiplications.