博客内容Blog Content

PyTorch与深度学习 PyTorch & Deep Learning

本章介绍了PyTorch的基础知识,包括张量、前向传播、反向传播等概念,以及如何构建神经网络、优化数据加载、使用GPU训练模型等操作,为进行深度学习奠定基础。

This chapter introduces the fundamentals of PyTorch, including concepts such as tensors, forward propagation, and backpropagation. It also covers how to build neural networks, optimize data loading, and train models using GPUs, laying the foundation for deep learning.

A.1 PyTorch概念 PyTorch Concepts

PyTorch 是一个主流且开源的基于 Python 的深度学习库,其提供了一个在可用性和功能性之间平衡良好的工具。

PyTorch is a mainstream, open-source deep learning library based on Python, offering a well-balanced tool between usability and functionality.

其优点包括:

用户友好的界面:PyTorch 以其用户友好的界面而闻名,使得初学者和专业人士都可以轻松上手。

高效:PyTorch 在效率方面表现出色,适合需要快速迭代和实验的任务。

灵活性:尽管易于使用,PyTorch 并没有在灵活性上妥协,允许高级用户对模型的低级别细节进行调整和优化。

广泛应用于研究:由于其在研究领域的广泛使用,PyTorch 积累了大量的资源和社区支持。

缺点:

有时可能导致在某些特定任务上不如一些更加优化但灵活性较低的框架(如 TensorFlow)那样高效。

Advantages:

User-Friendly Interface: PyTorch is known for its user-friendly interface, making it easy to use for both beginners and professionals.

Efficiency: PyTorch performs excellently in terms of efficiency, making it suitable for tasks that require rapid iteration and experimentation.

Flexibility: Despite being easy to use, PyTorch does not compromise on flexibility, allowing advanced users to fine-tune and optimize low-level details of models.

Widely Used in Research: Due to its extensive use in research, PyTorch has accumulated a wealth of resources and strong community support.

Disadvantages:

In some specific tasks, PyTorch may not be as optimized or efficient as certain frameworks (such as TensorFlow) that prioritize optimization over flexibility.

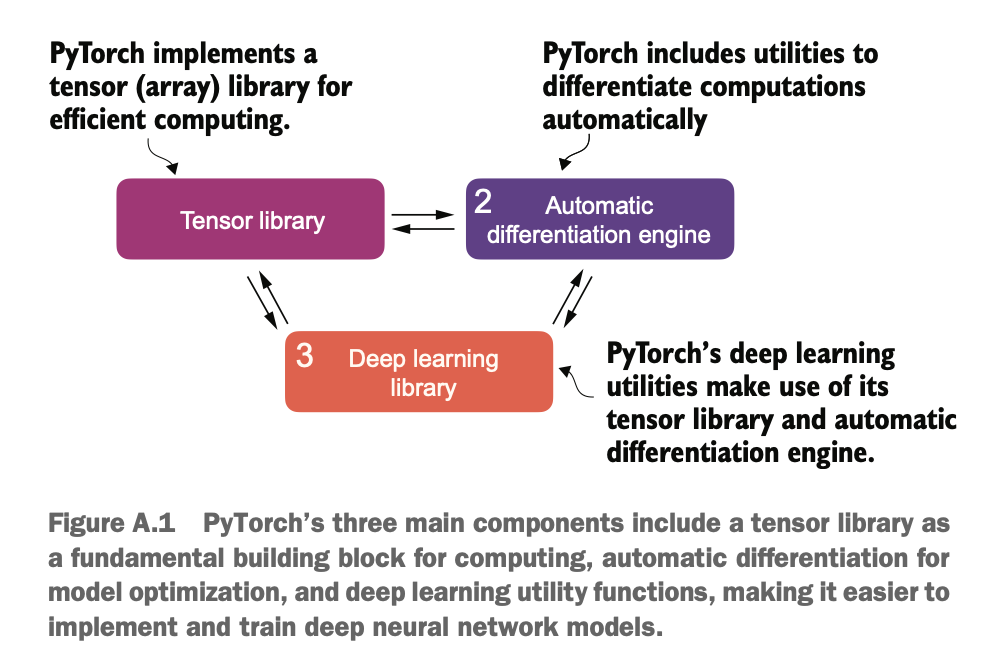

A.1.1 PyTorch三大核心组件 Three Core Components of PyTorch

张量库(Tensor Library):PyTorch 实现了一个通用的张量库,用于高效计算。这类似于 NumPy,但增加了对 GPU 加速计算的支持,提供 CPU 和 GPU 之间的无缝切换。

自动微分(Automatic Differentiation):PyTorch 包含自动微分工具,可以自动计算计算图中的梯度。这简化了反向传播过程,帮助优化模型。

深度学习库(Deep Learning Library):PyTorch 提供了一系列模块化、灵活且高效的构建块,包括预训练模型、损失函数和优化器,方便用户设计和训练各种深度学习模型,适用于研究人员和开发者。

Tensor Library: PyTorch implements a general-purpose tensor library for efficient computation. This is similar to NumPy but includes support for GPU-accelerated computation, enabling seamless switching between CPU and GPU.

Automatic Differentiation: PyTorch includes an automatic differentiation tool that can compute gradients within a computation graph. This simplifies the backpropagation process and helps optimize models.

Deep Learning Library: PyTorch provides a set of modular, flexible, and efficient building blocks, including pre-trained models, loss functions, and optimizers. This allows users to design and train various deep learning models, making it suitable for both researchers and developers.

A.1.2 深度学习相关的定义 Definitions Related to Deep Learning

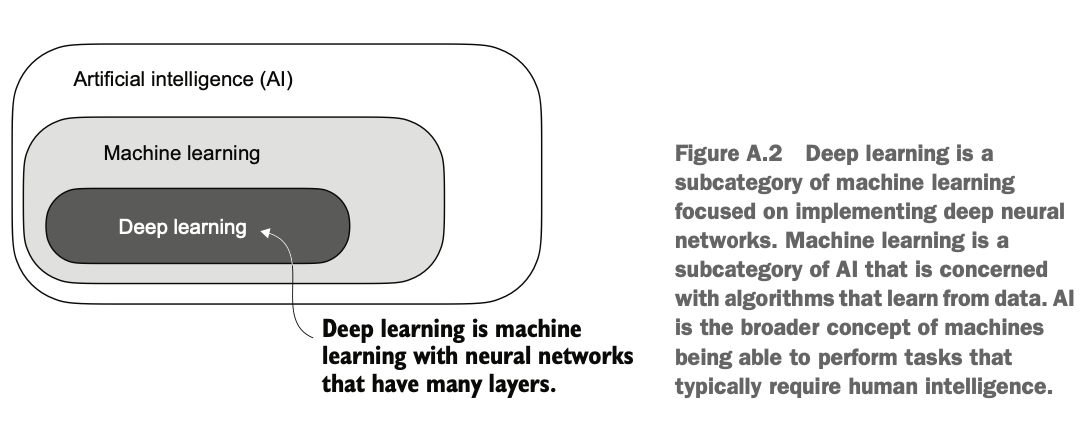

总结来说,AI是最广泛的概念,ML是AI的一部分,而DL则是ML的一个特定分支,专注于深度神经网络的应用和发展。

In summary, AI is the broadest concept, ML is a subset of AI, and DL is a specific branch of ML focused on deep neural networks.

人工智能(AI):AI 是最广泛的概念,指的是创建能够执行通常需要人类智能的任务的计算机或机器的能力。这些任务包括理解自然语言、识别模式和做出决策。尽管在某些特定领域取得了显著进展,但AI在实现通用智能方面仍有很长的路要走。

机器学习(ML):ML 是AI的一个子领域,专注于开发和改进学习算法。ML的核心思想是让计算机从数据中学习,从而在没有明确编程的情况下做出预测或决策。这涉及到开发能够识别模式、从历史数据中学习并随着时间、更多数据和反馈而改进性能的算法。

深度学习(DL):DL 是ML的一个子类别,专注于训练和应用深度神经网络。深度神经网络受到人类大脑工作原理的启发,特别是神经元之间的相互连接。DL中的“深度”指的是人工神经网络中的多层隐藏层,这些层允许模型处理复杂的非线性关系。与传统的机器学习技术相比,DL在处理非结构化数据(如图像、音频或文本)方面表现出色,因此特别适用于大型语言模型(LLMs)。

Artificial Intelligence (AI): AI is the broadest concept, referring to the ability of computers or machines to perform tasks that typically require human intelligence. These tasks include natural language understanding, pattern recognition, and decision-making. Although AI has made significant progress in specific fields, achieving general intelligence remains a long-term challenge.

Machine Learning (ML): ML is a subfield of AI that focuses on developing and improving learning algorithms. The core idea of ML is to enable computers to learn from data, allowing them to make predictions or decisions without explicit programming. This involves creating algorithms that recognize patterns, learn from historical data, and improve performance over time with more data and feedback.

Deep Learning (DL): DL is a subset of ML that focuses on training and applying deep neural networks. These networks are inspired by the way the human brain works, particularly the interconnections between neurons. The "deep" in deep learning refers to the multiple hidden layers in artificial neural networks, which allow models to process complex nonlinear relationships. Compared to traditional machine learning techniques, DL excels at handling unstructured data such as images, audio, and text, making it particularly useful for large language models (LLMs).

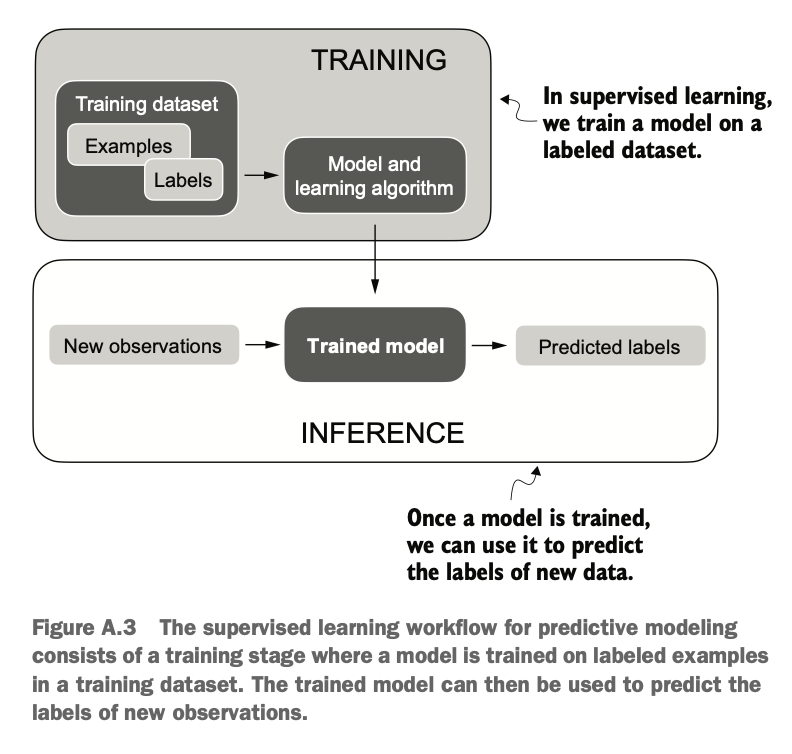

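监督学习先根据带标签的样本训练模型,然后使用训练好的模型对新数据进行预测。LLM 的预训练通常采用自监督学习(标签即下一个词,来自文本本身),而分类等微调任务则使用显式标注的数据。

In supervised learning, the model is first trained on labeled samples and then used to make predictions on new data. LLM pretraining is usually self-supervised (the labels, i.e. the next tokens, come from the text itself), while fine-tuning tasks such as classification use explicitly labeled data.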

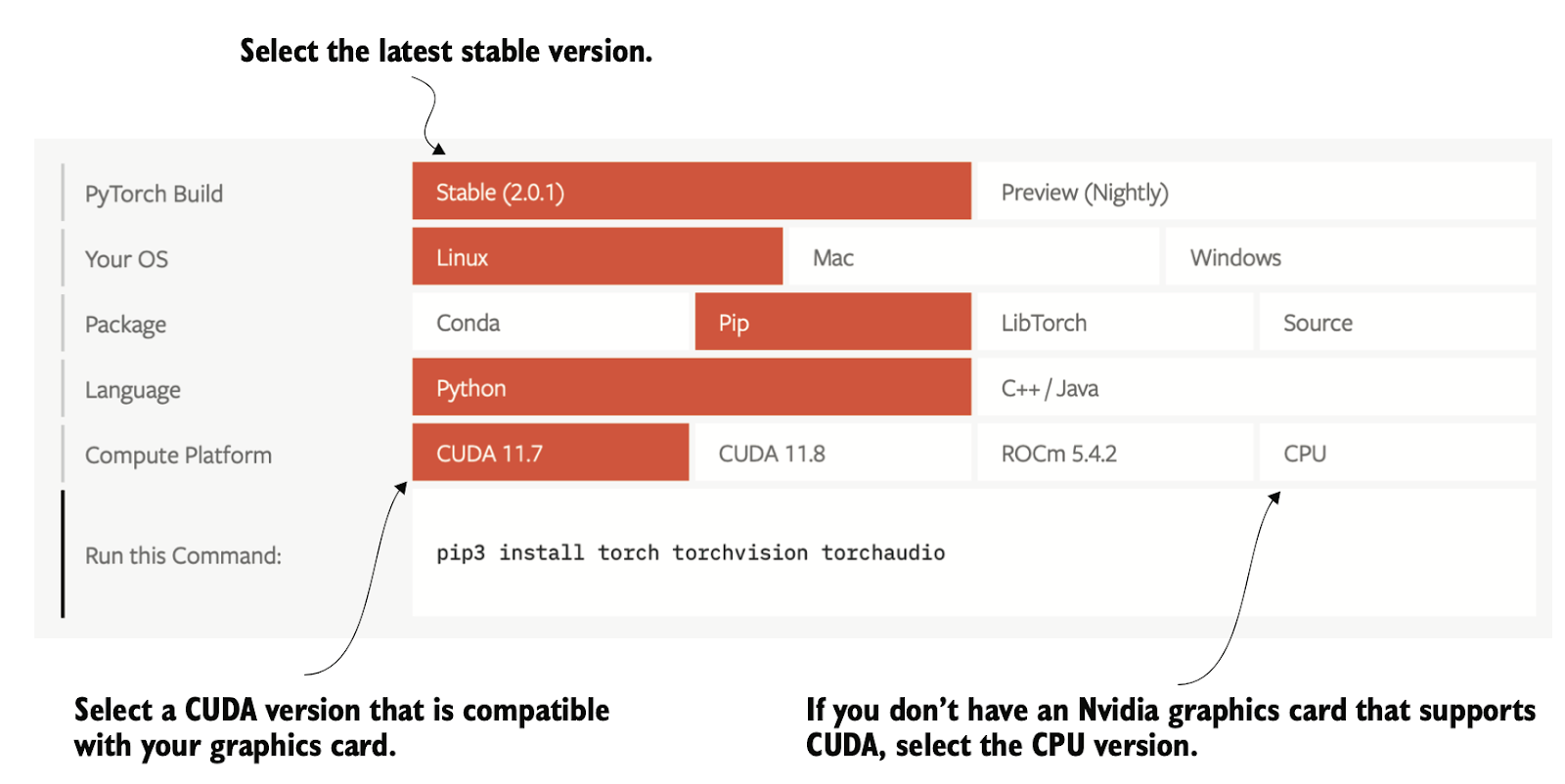

A.1.3 PyTorch安装版本选择 Choosing the Right PyTorch Installation Version

选择合适的Python版本:由于许多库并不立刻支持最新的Python版本,所以要选择早1~2的版本,比如最新是3.13那推荐使用3.12或3.11

Selecting the Appropriate Python Version: Since many libraries do not immediately support the latest Python version, it is recommended to use a version that is one or two releases behind the latest. For example, if the latest version is 3.13, it is advisable to use 3.12 or 3.11.

根据硬件选择:PyTorch有仅支持CPU计算的leaner版本,和支持GPU+CPU的完整版本,如果有CUDA兼容的显卡GPU则推荐使用完整版(使用pip3 install torch会自动根据硬件安装对应的依赖,当然也可以手动指定版本如pip install torch==2.4.0)

Choosing Based on Hardware: PyTorch offers a leaner version that supports only CPU computation and a full version that supports both GPU and CPU. If you have a CUDA-compatible GPU, it is recommended to install the full version. Running the command pip3 install torch will automatically install the appropriate dependencies based on your hardware. You can also manually specify a version, e.g., pip install torch==2.4.0.

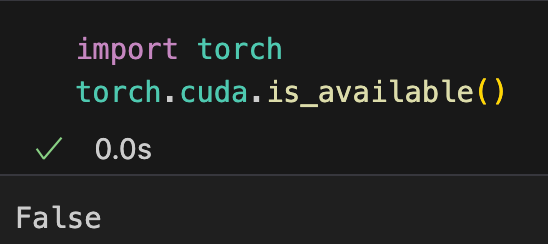

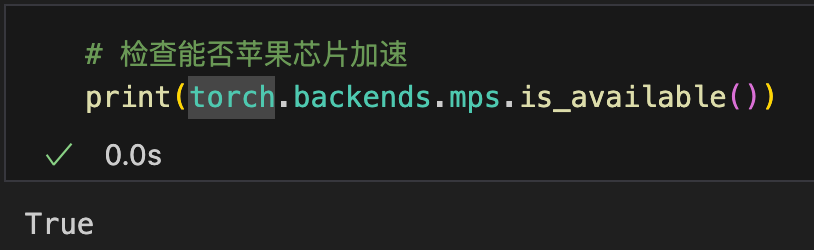

安装完成后,使用api查看是否有cuda兼容的GPU,或者苹果M系列芯片进行加速

After installation, use PyTorch's API to check whether your system has a CUDA-compatible GPU or an Apple M-series chip for acceleration.
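The check described above can be sketched as follows. This is a minimal sketch, not necessarily the code shown in the figure; the helper name `pick_device` is made up for illustration, and the MPS check is guarded because `torch.backends.mps` is assumed to be missing in older PyTorch builds.

```python
import torch


def pick_device() -> torch.device:
    """Return the fastest available device: CUDA GPU, Apple MPS, or CPU."""
    if torch.cuda.is_available():
        return torch.device("cuda")
    # torch.backends.mps may not exist in older builds, so look it up defensively.
    mps = getattr(torch.backends, "mps", None)
    if mps is not None and mps.is_available():
        return torch.device("mps")
    return torch.device("cpu")


device = pick_device()
print("PyTorch version:", torch.__version__)
print("Selected device:", device)
```

If this prints `cpu` on a machine that has a GPU, the installed build is probably the CPU-only one.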

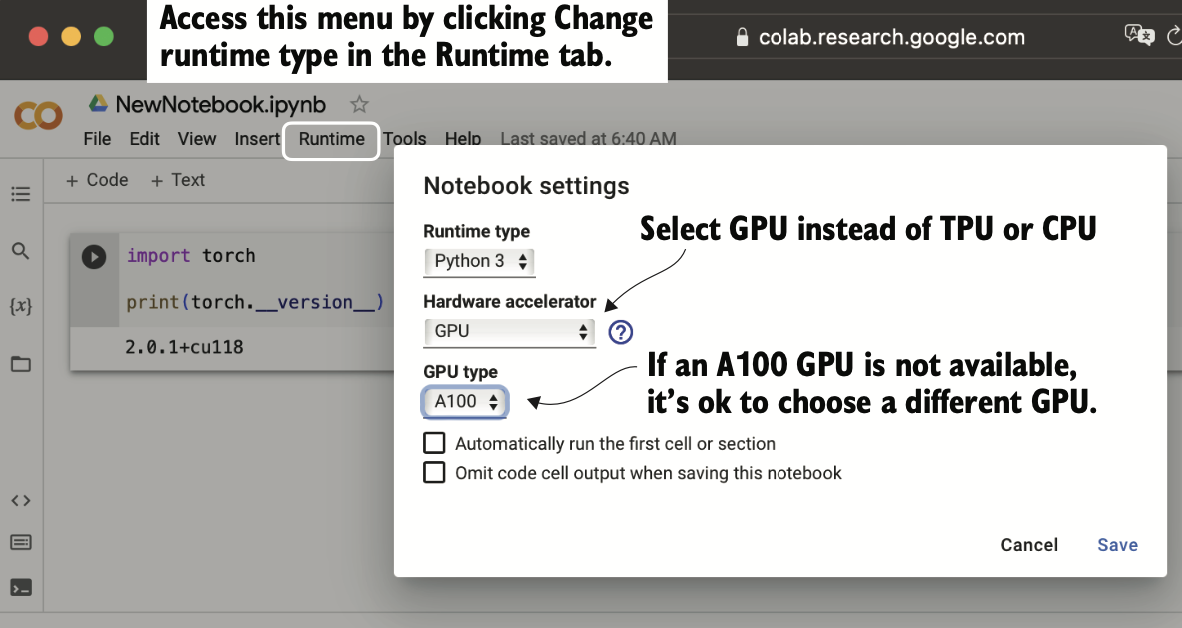

如果没有GPU,可以使用远程算力,比如谷歌colab

If you don't have a GPU, you can use remote computing resources such as Google Colab for running deep learning tasks.

A.2 张量概念 Tensor Concept

张量(Tensor)是一个数学概念,用于推广向量(vector)和矩阵(matrix)到更高维度的数据结构

A tensor is a mathematical concept used to generalize vectors and matrices to higher-dimensional data structures.

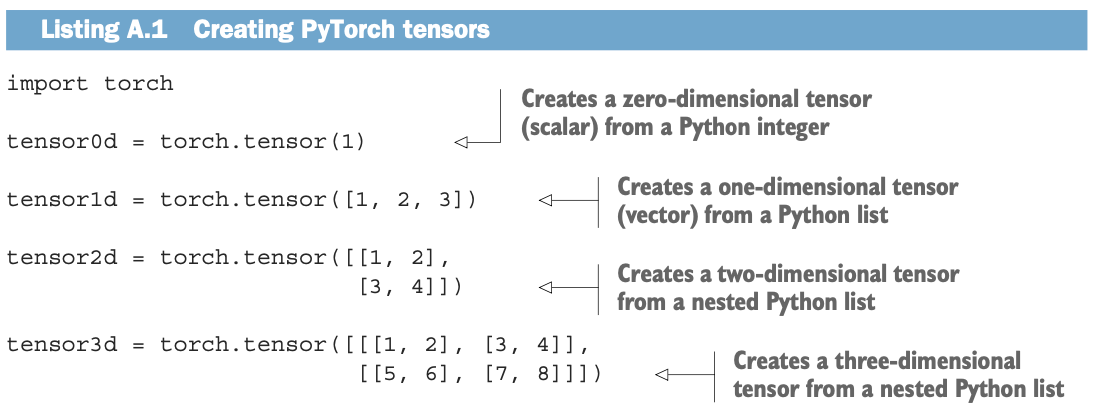

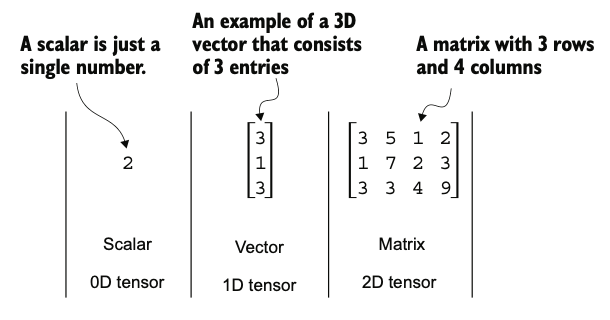

图展示了不同阶的张量:

标量(0D张量):单一数值。

向量(1D张量):一维数组。

矩阵(2D张量):二维数组。

高阶张量(如3D张量):三维或更高维度的数组。

The diagram illustrates tensors of different orders:

Scalar (0D tensor): A single numerical value.

Vector (1D tensor): A one-dimensional array.

Matrix (2D tensor): A two-dimensional array.

Higher-order tensors (e.g., 3D tensor): Arrays of three or more dimensions.

张量的重要性在于它们可以表示复杂的数据结构,并且可以进行各种数学运算,如加法、乘法、卷积等,这在深度学习和计算机视觉等领域尤其重要。相比Numpy,PyTorch可以轻松地将张量从CPU转移到GPU上进行计算,有更好的性能和硬件加速

Tensors are important because they can represent complex data structures and support various mathematical operations such as addition, multiplication, and convolution. These operations are especially crucial in fields like deep learning and computer vision. Compared to NumPy, PyTorch allows tensors to be easily transferred from the CPU to the GPU for computation, offering better performance and hardware acceleration.

A.2.1 标量、向量、矩阵和张量 Scalars, Vectors, Matrices, and Tensors

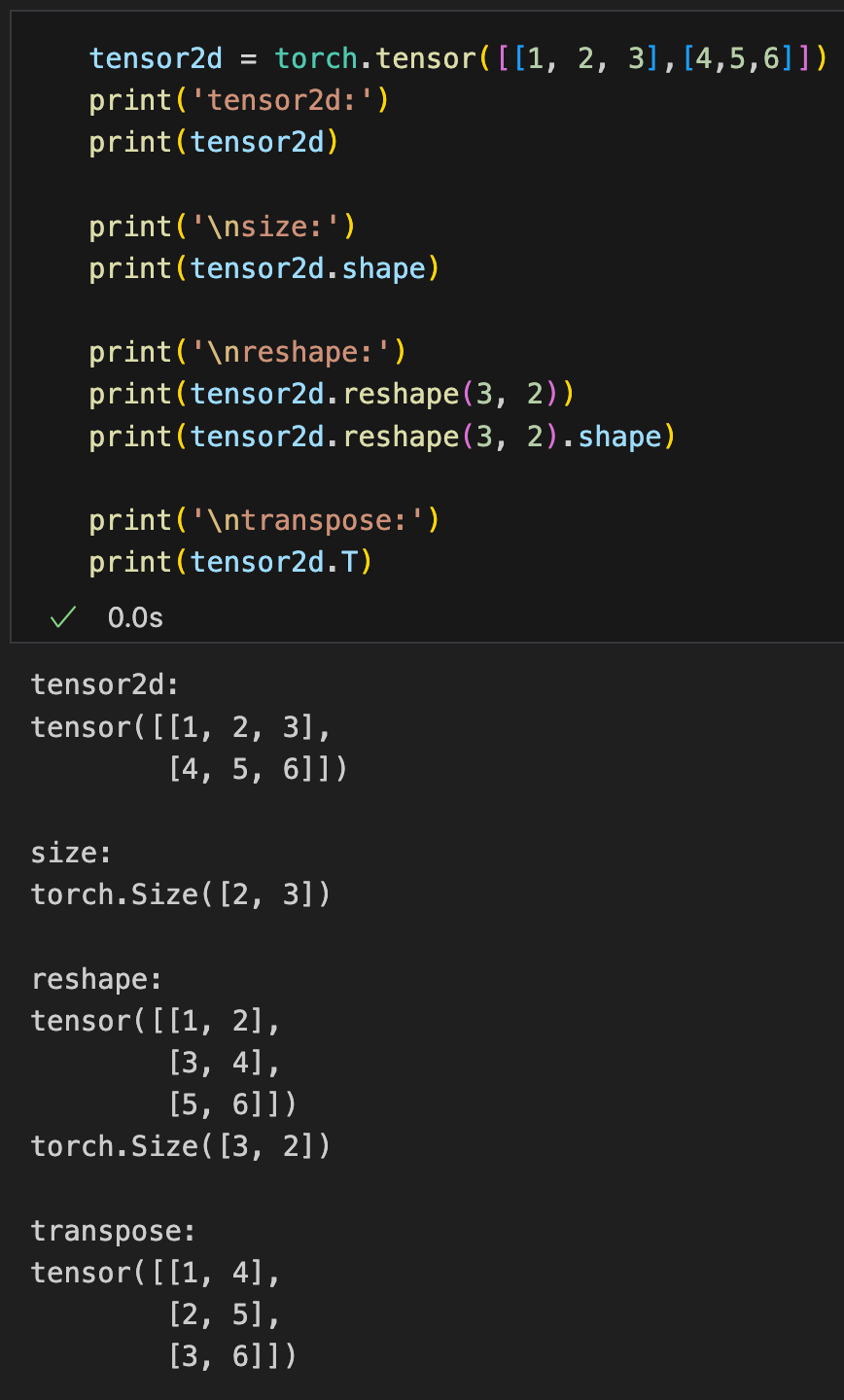

对于高维张量没有特定的术语,所以我们通常将三维张量称为3D张量,以此类推。我们可以使用torch.tensor函数创建PyTorch的Tensor类对象,如代码所示。

There are no specific terms for high-dimensional tensors, so we usually refer to a three-dimensional tensor as a 3D tensor, and so on. We can use the torch.tensor function to create PyTorch Tensor objects, as shown in the code.
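As a rough illustration of `torch.tensor`, the sketch below builds one tensor of each order from plain Python values. The variable names and values are arbitrary and chosen only for this example.

```python
import torch

# 0D tensor (scalar) from a Python integer
tensor0d = torch.tensor(1)

# 1D tensor (vector) from a Python list
tensor1d = torch.tensor([1, 2, 3])

# 2D tensor (matrix) from a nested list
tensor2d = torch.tensor([[1, 2], [3, 4]])

# 3D tensor from a doubly nested list
tensor3d = torch.tensor([[[1, 2], [3, 4]], [[5, 6], [7, 8]]])

# .ndim reports the number of dimensions, .shape the size of each one
for t in (tensor0d, tensor1d, tensor2d, tensor3d):
    print(t.ndim, tuple(t.shape))
```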

A.2.2 张量数据类型 Tensor Data Types

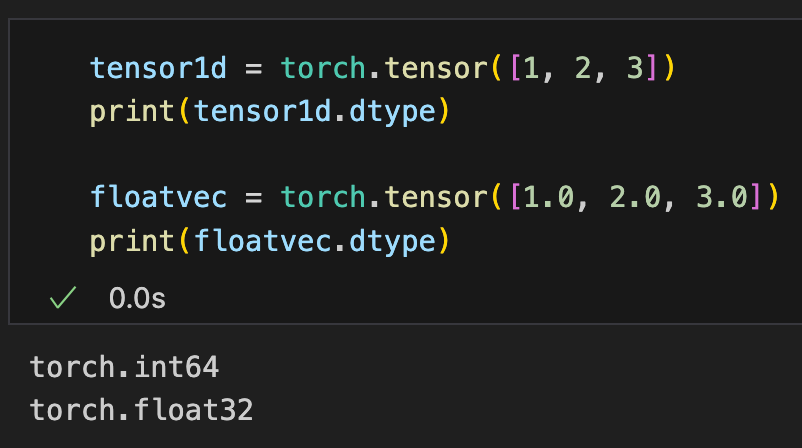

可使用.dtype访问张量的类型

The .dtype attribute can be used to access the data type of a tensor.

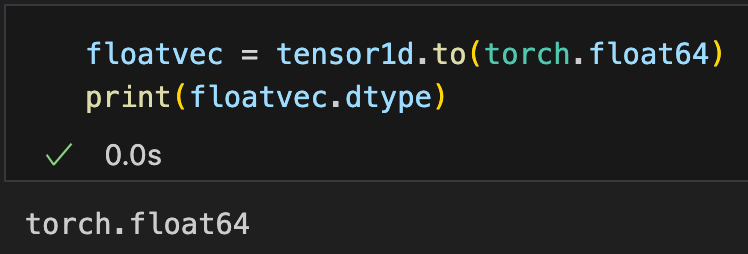

张量默认的是使用64位int和32位float,因为使用32位浮点运算效率高且GPU有优化,当然我们也可以手动进行转换

By default, tensors use 64-bit integers and 32-bit floating-point numbers because 32-bit floating-point operations are more efficient and optimized for GPUs. However, we can manually convert the data type if needed.
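The defaults and a manual conversion can be checked with a short sketch like this one (the variable names are illustrative only):

```python
import torch

# Python integers become 64-bit integer tensors
int_tensor = torch.tensor([1, 2, 3])
print(int_tensor.dtype)

# Python floats become 32-bit floating-point tensors
float_tensor = torch.tensor([1.0, 2.0, 3.0])
print(float_tensor.dtype)

# .to() returns a new tensor with the requested data type
converted = int_tensor.to(torch.float32)
print(converted.dtype)
```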

A.2.3 张量基本操作 Basic Tensor Operations

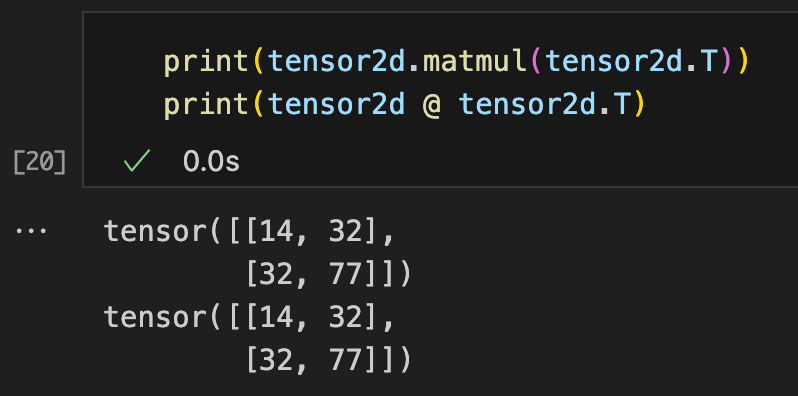

矩阵相乘,可使用.matmul或者@

Matrix multiplication can be performed using .matmul or the @ operator.
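A few of the common operations, including both spellings of matrix multiplication, might look like this. It is a small sketch with arbitrary example values, not necessarily the operations shown in the figure.

```python
import torch

tensor2d = torch.tensor([[1, 2, 3],
                         [4, 5, 6]])

print(tensor2d.shape)        # size of each dimension
print(tensor2d.reshape(3, 2))  # same six values laid out as 3 rows x 2 columns
print(tensor2d.view(3, 2))     # like reshape, but requires contiguous memory
print(tensor2d.T)              # transpose: rows become columns

# Two equivalent ways to multiply a (2, 3) matrix by its (3, 2) transpose
product_method = tensor2d.matmul(tensor2d.T)
product_operator = tensor2d @ tensor2d.T
print(product_operator)
```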

A.3 计算图和正向传播 Computational Graph and Forward Propagation

计算图是一个有向图,它允许我们表达和可视化数学表达式,并列出了计算神经网络输出的所需计算序列。神经网络算法需要使用先正向传播,计算出损失值,然后再根据损失值使用反向传播所需的梯度,进而调整模型参数最小化损失。

前向传播: 输入数据通过权重和偏置经过线性变换和激活函数,生成预测值。

损失计算: 预测值与真实值比较,计算损失。

计算图: PyTorch 的动态计算图记录了每一步操作,便于进行自动微分。

A computational graph is a directed graph that allows us to express and visualize mathematical expressions while listing the sequence of computations required to calculate the neural network output. Neural network algorithms first use forward propagation to compute the loss value, then use backpropagation to derive the necessary gradients, adjusting model parameters to minimize the loss.

Forward propagation: Input data passes through weights and biases, undergoing linear transformations and activation functions to generate predictions.

Loss calculation: The predicted value is compared with the actual value to compute the loss.

Computational graph: PyTorch's dynamic computational graph records each step of the operation, facilitating automatic differentiation.

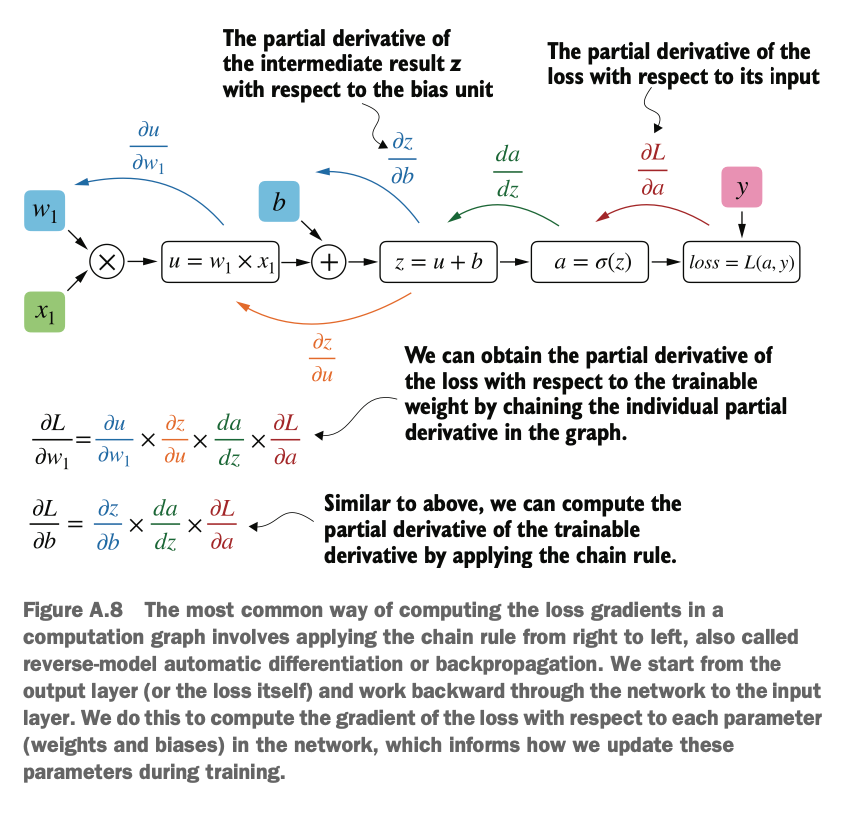

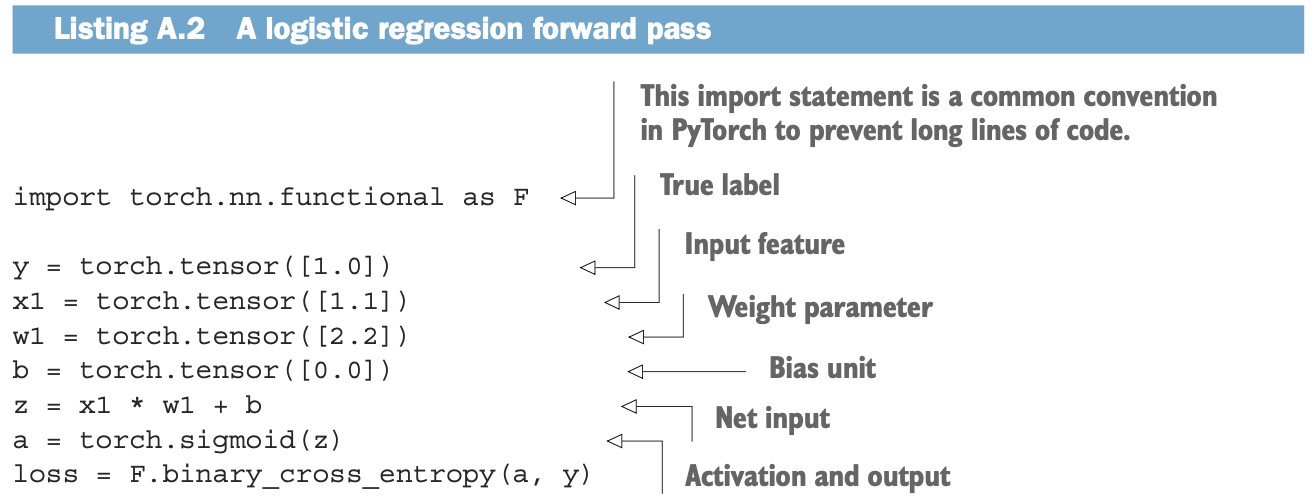

如图,我们定义如下一系列操作

As shown in the diagram, we define a series of operations.

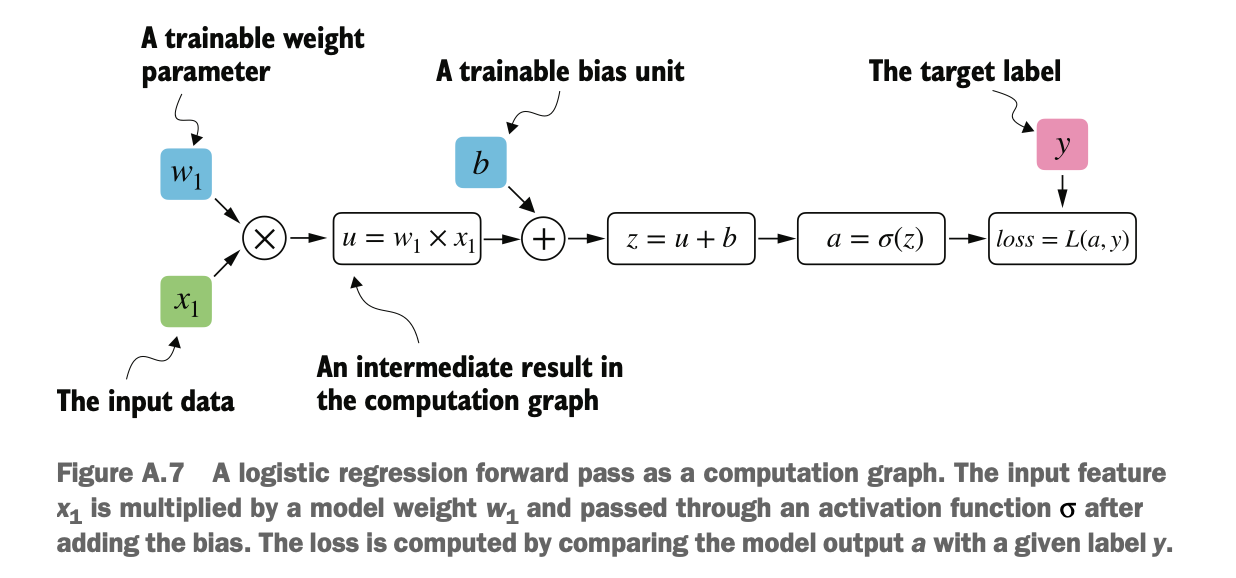

这些操作可得到对应如下的计算图,之后可利用损失函数的梯度来求模型参数(w1和b)

These operations generate a corresponding computational graph, which can then be used to compute gradients for the loss function to optimize model parameters (w1 and b).
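A forward pass of this kind can be sketched as a single-feature logistic regression. The concrete numbers below are illustrative assumptions and may differ from those in the figure; only the structure (linear step, activation, loss) is taken from the text.

```python
import torch
import torch.nn.functional as F

y = torch.tensor([1.0])    # true label
x1 = torch.tensor([1.1])   # input feature
w1 = torch.tensor([2.2])   # weight parameter
b = torch.tensor([0.0])    # bias parameter

z = x1 * w1 + b            # net input (linear transformation)
a = torch.sigmoid(z)       # activation, interpreted as a probability
loss = F.binary_cross_entropy(a, y)  # compares the prediction with the label

print(loss)
```

Each line corresponds to one node of the computational graph: the multiplication and addition, the sigmoid, and the loss.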

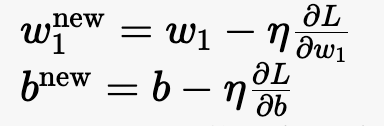

A.4 反向传播与自动微分 Backpropagation and Automatic Differentiation

反向传播求偏导数的主要目的是得到计算梯度(即求出每个方向参数如权重 w 和偏置 b 对损失函数 L 的偏导数),有了梯度我们就可以用梯度来调整这些参数以减少损失(梯度下降法),最终达到最小化损失函数的目的,从而让模型的预测更加准确。

(梯度是一个向量,表示函数在某一点沿各个方向的偏导数或变化率,这个向量的方向相当于函数在该点增加最快的方向,模长表示变化率的大小)

The main purpose of backpropagation in computing partial derivatives is to obtain gradients (i.e., the partial derivatives of the loss function L with respect to each parameter, such as weight w and bias b). With these gradients, we can adjust the parameters using gradient descent to reduce the loss, ultimately minimizing the loss function and improving the accuracy of the model's predictions.

(A gradient is a vector that represents the partial derivatives or rate of change of a function at a given point. The direction of this vector indicates the direction in which the function increases the fastest, while its magnitude represents the rate of change.)

我们在 PyTorch 中进行计算,当其终端节点之一的 requires_grad 属性设置为 True 时,PyTorch 会默认在内部构建计算图。这在我们需要计算梯度时非常有用。

When performing computations in PyTorch, if the requires_grad attribute of any terminal node is set to True, PyTorch will automatically construct a computational graph internally. This is particularly useful when we need to compute gradients.

反向传播或自动微分的具体步骤如图,在计算图中计算损失梯度的最常用方法是从右到左应用链式法则。我们从输出层(或损失本身)开始,向后通过网络直到输入层。我们这样做是为了计算损失函数相对于网络中每个参数(权重和偏置)的梯度,从而使用梯度下降法指导我们在训练过程中更新这些参数。

The specific steps of backpropagation or automatic differentiation are shown in the diagram. The most common method to compute loss gradients in a computational graph is to apply the chain rule from right to left. We start from the output layer (or the loss itself) and move backward through the network to the input layer. This process calculates the gradients of the loss function with respect to each parameter (weights and biases) in the network, which are then used to update these parameters during training using gradient descent.

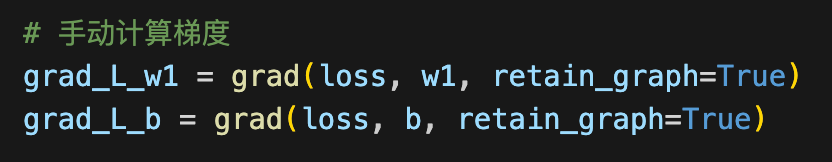

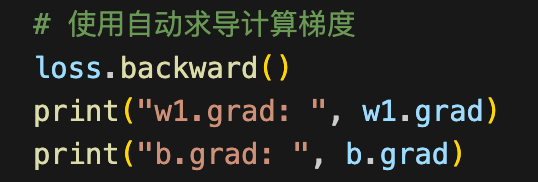

我们可以手动计算各个方向上的偏导数,在debug或者演示时有用

We can manually compute the partial derivatives in different directions, which can be useful for debugging or demonstration purposes.

实际开发也可以使用pytorch自带的高阶函数,调用backward之后可以直接计算好梯度,我们直接查看各个方向上的偏导数结果

In actual development, we can also use PyTorch's built-in high-level functions. By calling backward(), gradients are automatically computed, allowing us to directly inspect the partial derivative results in different directions.
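Both routes can be compared in a short sketch: `torch.autograd.grad` requests one gradient at a time, while `loss.backward()` fills the `.grad` attribute of every leaf tensor that has `requires_grad=True`. The input values are illustrative assumptions and not necessarily those in the figures.

```python
import torch
import torch.nn.functional as F
from torch.autograd import grad

y = torch.tensor([1.0])
x1 = torch.tensor([1.1])
w1 = torch.tensor([2.2], requires_grad=True)  # track gradients for the weight
b = torch.tensor([0.0], requires_grad=True)   # track gradients for the bias

z = x1 * w1 + b
a = torch.sigmoid(z)
loss = F.binary_cross_entropy(a, y)

# Explicit route: ask autograd for each partial derivative.
# retain_graph=True keeps the graph alive so it can be reused below.
grad_L_w1 = grad(loss, w1, retain_graph=True)
grad_L_b = grad(loss, b, retain_graph=True)

# Convenient route: compute all gradients at once and store them in .grad
loss.backward()

print(grad_L_w1, w1.grad)
print(grad_L_b, b.grad)
```

For this loss, the chain rule gives dL/dw1 = (a - y) * x1 and dL/db = a - y, which is a handy way to sanity-check the printed values by hand.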

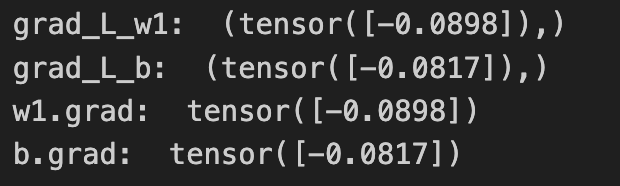

如图在我们训练LLM的代码里,由于pytorch对计算梯度,更新权重等步骤都进行了封装,所以我们使用很方便

As shown in the diagram, in our LLM training code, PyTorch has encapsulated steps like gradient computation and weight updates, making it very convenient to use.

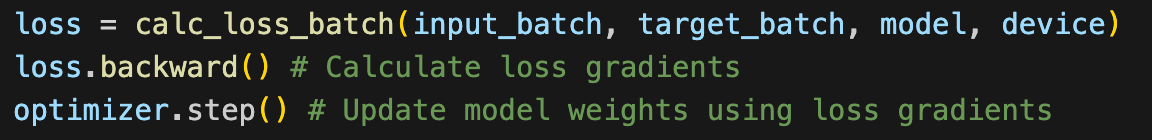

A.5 实现多层神经网络 Implementing a Multi-Layer Neural Network

神经网络是一种模拟人脑神经元连接模式的机器学习模型,用于处理复杂的模式识别和预测任务。它由多个层(layers)组成,每层包含若干节点(神经元),这些节点通过权重和偏置相连。

A neural network is a machine learning model that mimics the connection patterns of neurons in the human brain. It is used for complex pattern recognition and prediction tasks. The network consists of multiple layers, each containing several nodes (neurons) that are connected through weights and biases.

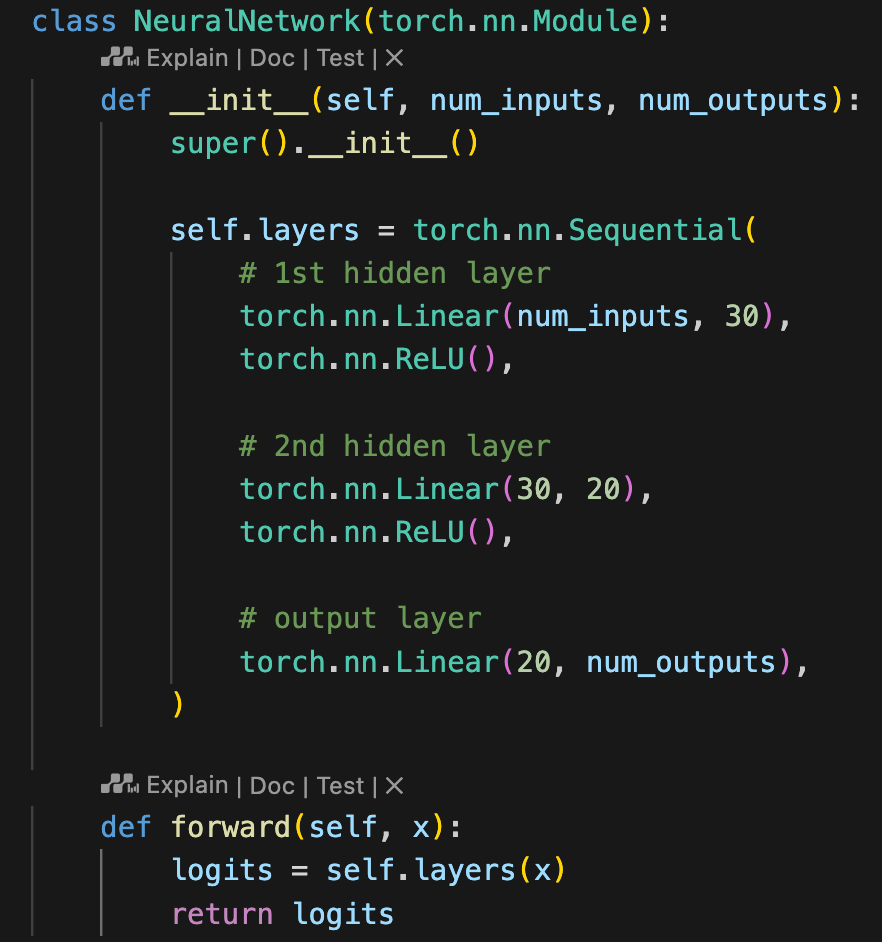

在 PyTorch 中实现神经网络时,我们通常通过继承 torch.nn.Module 类来定义自定义的网络结构。torch.nn.Module 提供了丰富的功能,帮助开发者封装各层以及操作,同时自动管理和跟踪模型的参数。

__init__ 构造函数

在子类的构造函数中定义网络中所有需要的层(如全连接层、卷积层等)。

负责初始化模型所需的参数和结构。

forward 方法

定义输入数据如何在模型各层之间传递。

构建了一个计算图,描述了数据从输入到输出的流动过程。

backward(反向传播):

torch.nn.Module 并不需要我们实现 backward 方法;自动微分(autograd)会根据 forward 中记录的操作自动推导,因此通常无需手动实现。

训练时调用 loss.backward() 即可计算损失函数关于模型参数的梯度,优化器再利用这些梯度调整模型参数以减少误差。

When implementing a neural network in PyTorch, we typically define a custom network structure by inheriting from the torch.nn.Module class. torch.nn.Module provides a rich set of functionalities that help developers encapsulate layers and operations while automatically managing and tracking model parameters.

__init__ constructor:

Defines all necessary layers in the network (such as fully connected layers, convolutional layers, etc.).

Initializes the parameters and structure required for the model.

forward method:

Defines how input data propagates through the layers of the model.

Constructs a computational graph that describes the flow of data from input to output.

backward pass:

torch.nn.Module does not require us to write a backward method; autograd derives it from the operations recorded in forward, so it usually does not need to be implemented manually.

During training, calling loss.backward() computes the gradients of the loss function with respect to the model parameters, and the optimizer then uses these gradients to adjust the parameters and reduce the error.

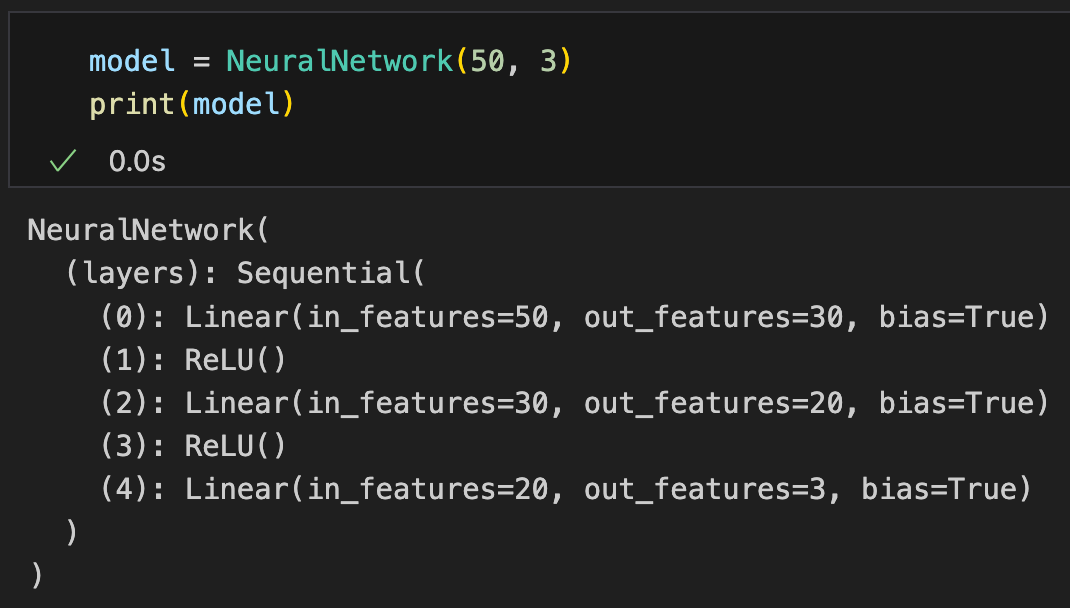

根据这个架构,我们可以定义一个简单的神经网络,可利用Sequential将其串行

Based on this architecture, we can define a simple neural network and use Sequential to chain the layers together.
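A minimal sketch of such a network is shown below. The class name `NeuralNetwork` and the attribute name `layers` are chosen for illustration; the layer sizes (50 inputs, hidden layers of 30 and 20 units, 3 outputs) follow the parameter counts discussed in this section, and ReLU is assumed as the activation.

```python
import torch


class NeuralNetwork(torch.nn.Module):
    def __init__(self, num_inputs, num_outputs):
        super().__init__()
        # Sequential runs the layers in the order they are listed
        self.layers = torch.nn.Sequential(
            torch.nn.Linear(num_inputs, 30),   # 1st hidden layer
            torch.nn.ReLU(),
            torch.nn.Linear(30, 20),           # 2nd hidden layer
            torch.nn.ReLU(),
            torch.nn.Linear(20, num_outputs),  # output layer (returns logits)
        )

    def forward(self, x):
        # Defines how the input flows through the layers
        return self.layers(x)


model = NeuralNetwork(num_inputs=50, num_outputs=3)
print(model)
```

Sequential is not required, but it keeps `forward` to a single line when the layers are simply applied one after another.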

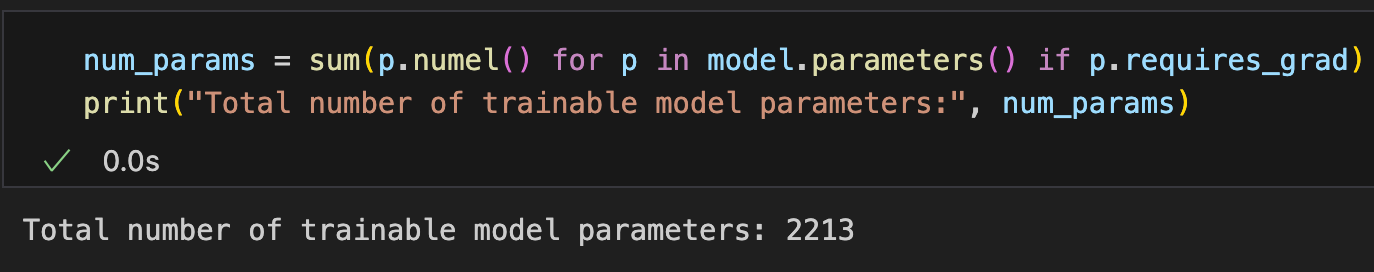

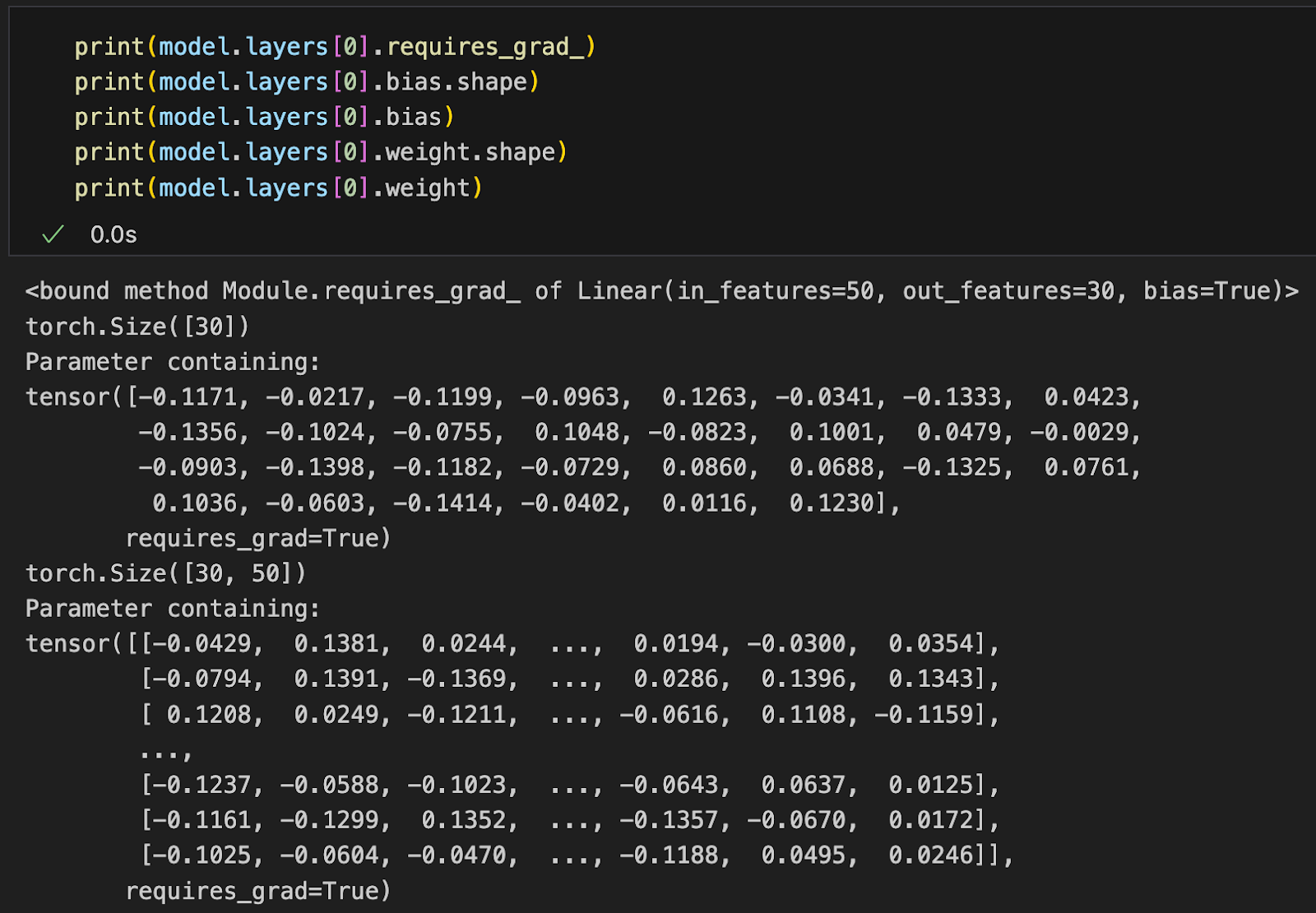

我们可以使用model.parameters()方法查看参数

输入层到第一层:50*30矩阵+30偏置=1530

第一层到第二层:30*20矩阵+20偏置=620

第二层到输出层:20*3矩阵+3偏置=63

We can use the model.parameters() method to view the parameters:

From input layer to the first layer: 50×30 matrix + 30 biases = 1530

From the first layer to the second layer: 30×20 matrix + 20 biases = 620

From the second layer to the output layer: 20×3 matrix + 3 biases = 63

可以打印具体参数,注意计算时权重是乘W^T,即W本身表示[输出数量,输入数量]

We can print specific parameters, keeping in mind that the weights are multiplied by W^T, meaning W itself represents [output size, input size].
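The arithmetic can be checked directly by counting elements with `numel()`. This sketch rebuilds the same layer stack with `torch.nn.Sequential` for brevity; the variable names are illustrative.

```python
import torch

model = torch.nn.Sequential(
    torch.nn.Linear(50, 30),
    torch.nn.ReLU(),
    torch.nn.Linear(30, 20),
    torch.nn.ReLU(),
    torch.nn.Linear(20, 3),
)

# Weights plus biases for each Linear layer (ReLU has no parameters)
per_layer = [
    sum(p.numel() for p in layer.parameters())
    for layer in model
    if isinstance(layer, torch.nn.Linear)
]

# Total number of trainable parameters in the whole model
total = sum(p.numel() for p in model.parameters() if p.requires_grad)

# Linear stores its weight as [output size, input size]
first_weight_shape = tuple(model[0].weight.shape)

print(per_layer, total, first_weight_shape)
```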

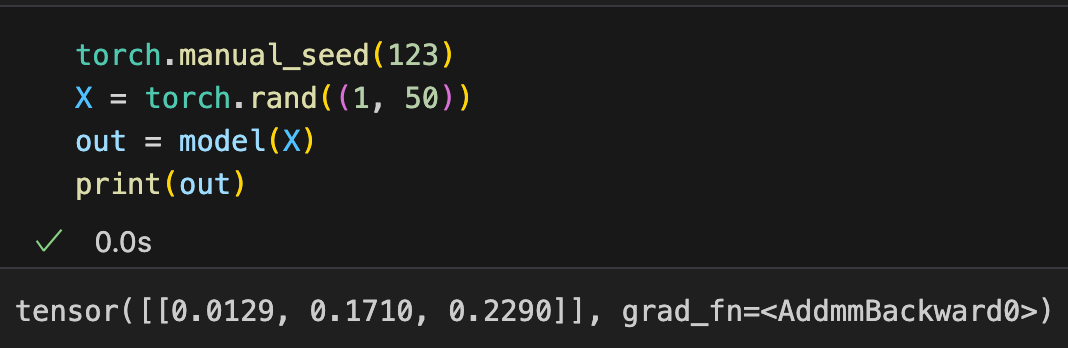

调用模型计算,我们只需要将输入参数传入模型即可

To perform a computation with the model, we simply pass the input parameters into the model.

这里grad_fn 是 PyTorch 中一个张量的属性,它存储了计算该张量的最后一步操作的信息。这些信息在 反向传播(backpropagation) 中非常重要,因为 PyTorch 需要利用它来计算梯度。

Here, grad_fn is an attribute of a PyTorch tensor that stores information about the last operation applied to the tensor. This information is crucial for backpropagation because PyTorch uses it to compute gradients.

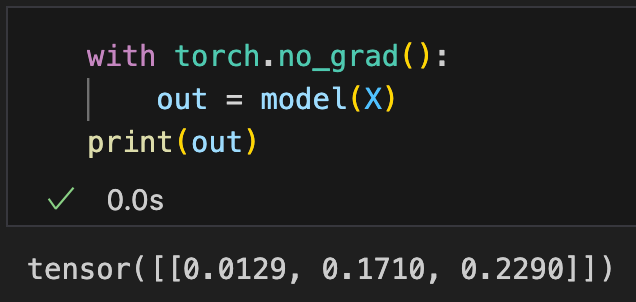

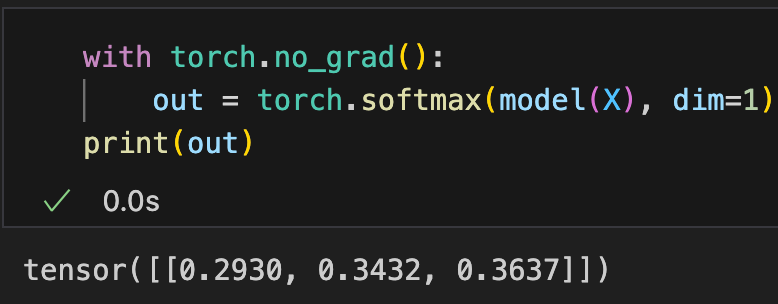

如果在推理或其他不需要梯度的场景中(比如推理和训练完成后的预测),可以通过禁用梯度跟踪如 torch.no_grad()来节省内存和计算资源。

If we are performing inference or other tasks that do not require gradients (such as predictions after training), we can disable gradient tracking using torch.no_grad() to save memory and computational resources.

在PyTorch中,通常的做法是直接返回最后一层原生未处理的输出(logits),而不将其传递给非线性激活函数。因为这样效率更高,也更灵活,可以给下游使用softmax或者sigmoid等方式进行处理

In PyTorch, a common practice is to return the raw, unprocessed output (logits) from the last layer instead of passing it through a nonlinear activation function. This approach is more efficient and flexible, allowing downstream processes to apply softmax or sigmoid as needed.

当然,我们也可以手动将输出的logits转为概率,作为多分类问题这些概率和为1,且在初始化时各概率值差不多

Of course, we can also manually convert the output logits into probabilities. For multi-class classification problems, the sum of these probabilities equals 1, and at initialization, each probability value is approximately equal.
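The points above about `torch.no_grad()` and converting logits can be sketched together. The random input and the seed are arbitrary assumptions for this example, and the model is the same illustrative 50-30-20-3 stack.

```python
import torch

torch.manual_seed(123)  # arbitrary seed so the example is repeatable

model = torch.nn.Sequential(
    torch.nn.Linear(50, 30),
    torch.nn.ReLU(),
    torch.nn.Linear(30, 20),
    torch.nn.ReLU(),
    torch.nn.Linear(20, 3),
)

X = torch.rand((1, 50))  # one random example with 50 features

# Inside no_grad, no computational graph is recorded
with torch.no_grad():
    logits = model(X)
    probas = torch.softmax(logits, dim=1)  # normalize across the 3 classes

print(logits)   # no grad_fn attached
print(probas)   # each row sums to 1
```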

A.6 构建高效数据加载器 Building an Efficient Data Loader

在训练模型之前,我们需要定义数据加载器,数据加载器(DataLoader)是用于从数据集中高效加载数据的工具,负责实现数据的分批(batching)、打乱(shuffling)、多进程加载(multi-process loading)、以及自动化数据流管理,常用于深度学习训练和评估中。

Before training a model, we need to define a data loader. A data loader is a tool used to efficiently load data from a dataset. It is responsible for batching, shuffling, multi-process loading, and automated data flow management, and is commonly used in deep learning training and evaluation.

我们可以使用pytorch自带的数据加载器(DataLoader),但涉及数据本身的初始化和读取的具体逻辑,需要配合数据集(Dataset)一起使用

We can use PyTorch's built-in DataLoader, but the logic for initializing and reading the data itself is handled by a dataset class (Dataset) that is used together with it.

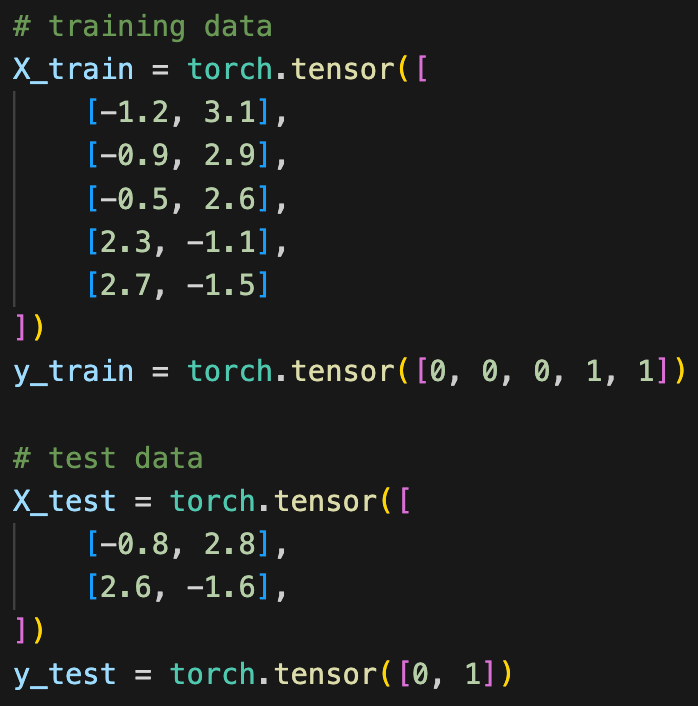

对于数据,我们先简单定义训练集和测试集

For the data, we first define the training set and test set.

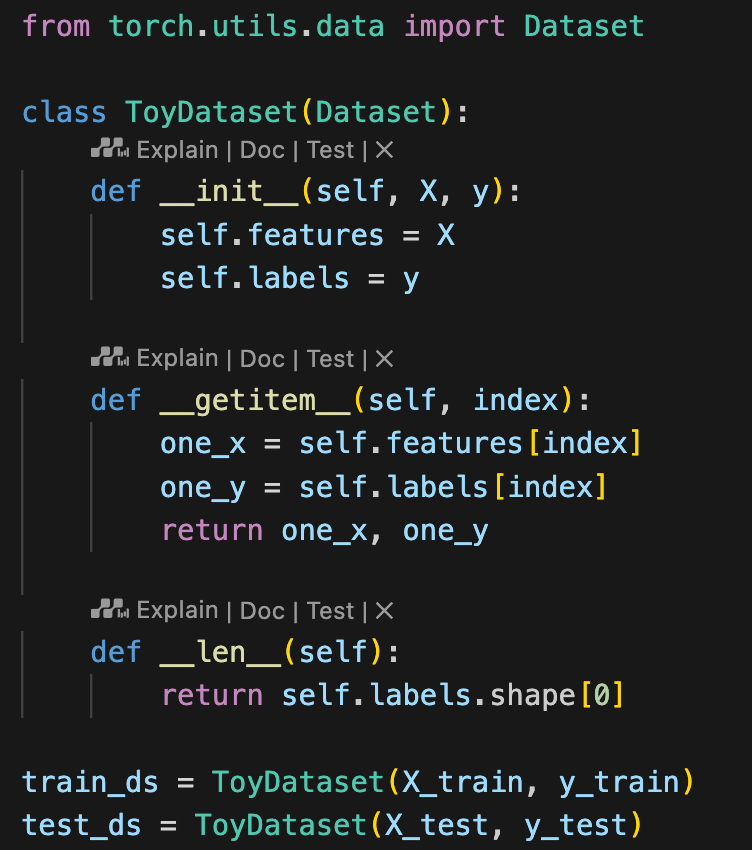

之后,我们先定义一个数据集类 ToyDataset ,用于从不同的数据源中加载数据(实际可以是内存数据、数据库或网络连接等)。数据集其内部主要有三个方法:

构造函数__init__:初始化数据集,将特征(X)和标签(y)存储为类属性。

__getitem__ 方法:根据索引返回单个数据样本及其对应的标签。

__len__ 方法:返回数据集的总长度。

Next, we define a dataset class, ToyDataset, which is used to load data from different sources (which can be in-memory data, a database, or a network connection, etc.). The dataset mainly contains three methods:

Constructor __init__: Initializes the dataset and stores the features (X) and labels (y) as class attributes.

__getitem__ method: Returns a single data sample and its corresponding label based on the index.

__len__ method: Returns the total length of the dataset.

通过实现方法并实例化该数据集类,可以将训练数据和测试数据封装为 PyTorch 数据集对象,方便后续与数据加载器DataLoader配合使用

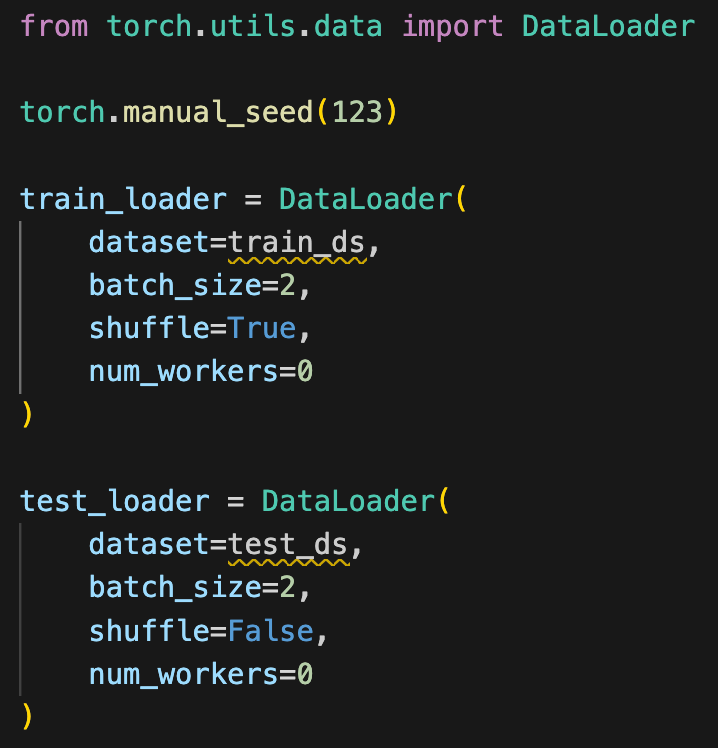

有了数据集之后,我们将其套入数据加载器DataLoader中,便可以按pytorch规范实现了一个通用的数据加载器

By implementing these methods and instantiating the dataset class, we can encapsulate the training and test data as PyTorch dataset objects, making it easier to use them with the DataLoader later.

Once we have the dataset, we wrap it in a DataLoader, which gives us a general-purpose data loader that follows PyTorch's conventions.
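Putting the dataset class and the loader together might look like the sketch below. The toy values (five training and two test examples with two features each), the batch size and the seed are assumptions made for illustration and may differ from the figures.

```python
import torch
from torch.utils.data import Dataset, DataLoader

# Toy data: 2 features per example, binary class labels
X_train = torch.tensor([[-1.2, 3.1], [-0.9, 2.9], [-0.5, 2.6],
                        [2.3, -1.1], [2.7, -1.5]])
y_train = torch.tensor([0, 0, 0, 1, 1])
X_test = torch.tensor([[-0.8, 2.8], [2.6, -1.6]])
y_test = torch.tensor([0, 1])


class ToyDataset(Dataset):
    def __init__(self, X, y):
        # Store features and labels as attributes
        self.features = X
        self.labels = y

    def __getitem__(self, index):
        # Return exactly one example and its label
        return self.features[index], self.labels[index]

    def __len__(self):
        # Total number of examples
        return self.labels.shape[0]


train_ds = ToyDataset(X_train, y_train)
test_ds = ToyDataset(X_test, y_test)

torch.manual_seed(123)  # makes the shuffling order repeatable
train_loader = DataLoader(train_ds, batch_size=2, shuffle=True, num_workers=0)
test_loader = DataLoader(test_ds, batch_size=2, shuffle=False, num_workers=0)

for idx, (x, y) in enumerate(train_loader):
    print(f"Batch {idx + 1}:", x, y)
```

The test loader is left unshuffled because the order of examples does not matter for evaluation.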

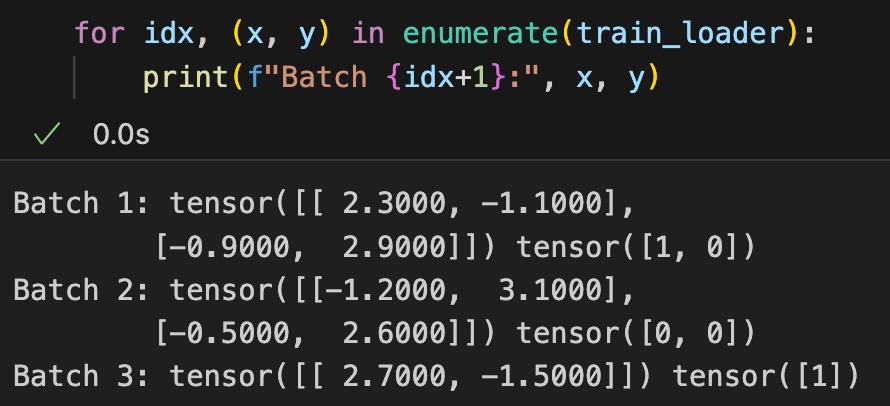

我们可以使用enumerate方法枚举数据加载器的数据:

We can use the enumerate method to iterate over the data from the DataLoader.

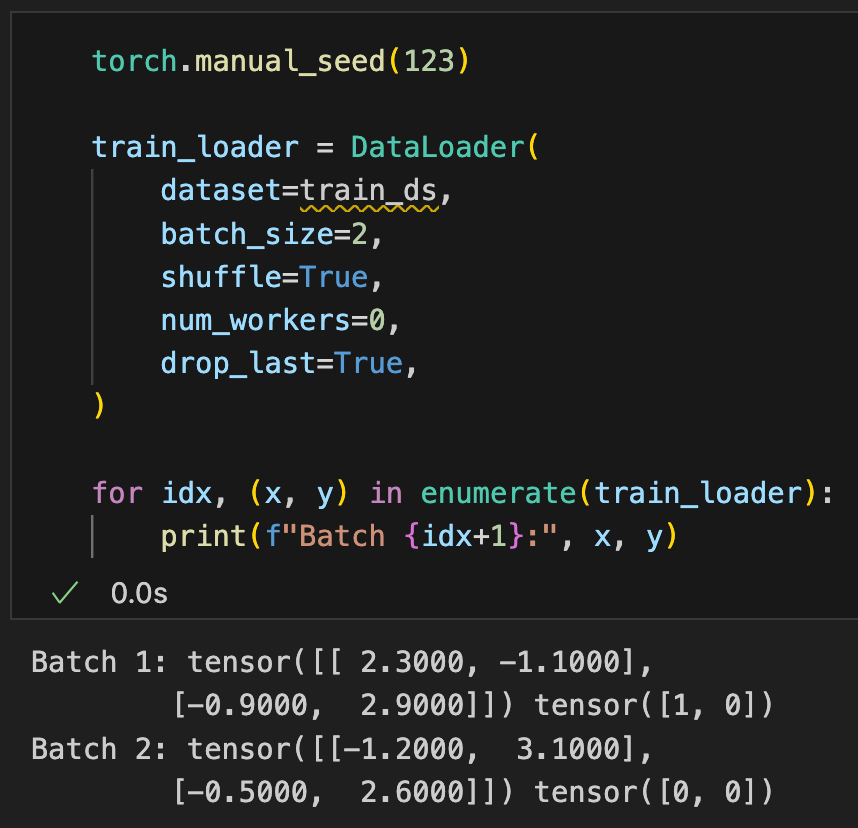

有时我们想让每个批次里的数量一样,避免最后一个批次因为无法整除有不够数量的数据,可以设置drop_last=True

Sometimes, we want each batch to have the same number of samples, avoiding situations where the last batch has fewer samples due to non-divisibility. In such cases, we can set drop_last=True.
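The effect of `drop_last` is easy to see by listing batch sizes. This sketch uses `TensorDataset` with five placeholder examples instead of a custom dataset class, purely to keep it short.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Five examples with two features each, so batches of 2 do not divide evenly
features = torch.arange(10, dtype=torch.float32).reshape(5, 2)
labels = torch.tensor([0, 0, 0, 1, 1])
dataset = TensorDataset(features, labels)

keep_loader = DataLoader(dataset, batch_size=2, shuffle=False, drop_last=False)
drop_loader = DataLoader(dataset, batch_size=2, shuffle=False, drop_last=True)

sizes_keep = [len(y) for _, y in keep_loader]  # last batch is smaller
sizes_drop = [len(y) for _, y in drop_loader]  # incomplete batch is skipped

print(sizes_keep, sizes_drop)
```

When shuffling is enabled, a different example is dropped in each epoch, so no example is excluded from training permanently.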

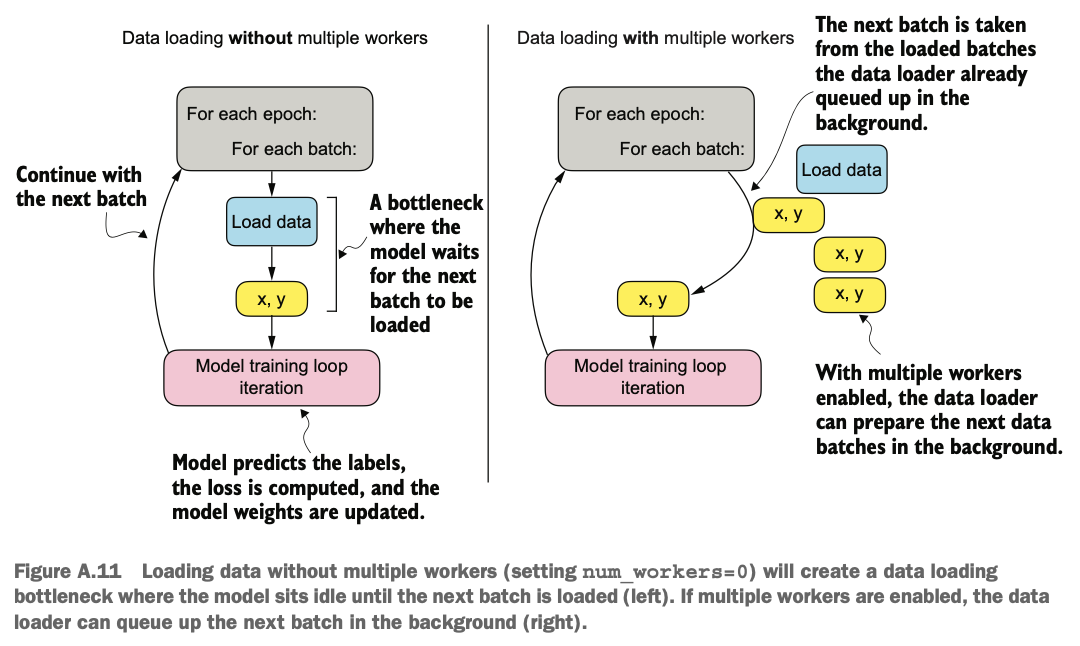

注意,当设置num_workers=0时,只有一个主进程,CPU需要花时间从存储设备(如硬盘)读取数据,然后对数据进行预处理(例如,调整大小、归一化等)。这些任务在处理大数据集时可能会占用CPU大量的时间变成瓶颈,因为GPU的速度通常比CPU快得多,当CPU还在忙于加载和预处理数据时,GPU可能已经完成了当前批次的训练任务。这就导致了GPU空闲并且降低了效率。

Note that when num_workers=0, there is only a single main process. The CPU needs time to read data from storage devices (such as a hard drive) and preprocess the data (e.g., resizing, normalization, etc.). When handling large datasets, these tasks can occupy a significant amount of CPU time and become a bottleneck because GPUs are usually much faster than CPUs. If the CPU is still busy loading and preprocessing data, the GPU may have already finished training the current batch, leading to idle GPU time and reduced efficiency.

当num_workers设置为大于0的数值时,PyTorch会启动多个工作进程来并行处理数据加载和预处理任务。主进程可以更快地获取到预处理好的数据,从而减少GPU等待的时间。这样,GPU可以更充分地利用,提高整体训练效率。但数据量较小时开启并不会有明显效果,并且可能会有额外开销或在Jupyter笔记本出现共享资源的问题

When num_workers is set to a value greater than 0, PyTorch will launch multiple worker processes to parallelize data loading and preprocessing tasks. The main process can obtain preprocessed data more quickly, reducing GPU waiting time. This allows the GPU to be utilized more fully, improving overall training efficiency. However, when the dataset is small, enabling multiple workers may not have a noticeable effect and could introduce additional overhead or cause shared resource issues in Jupyter Notebook.

A.7 一个经典训练循环 A Classic Training Loop

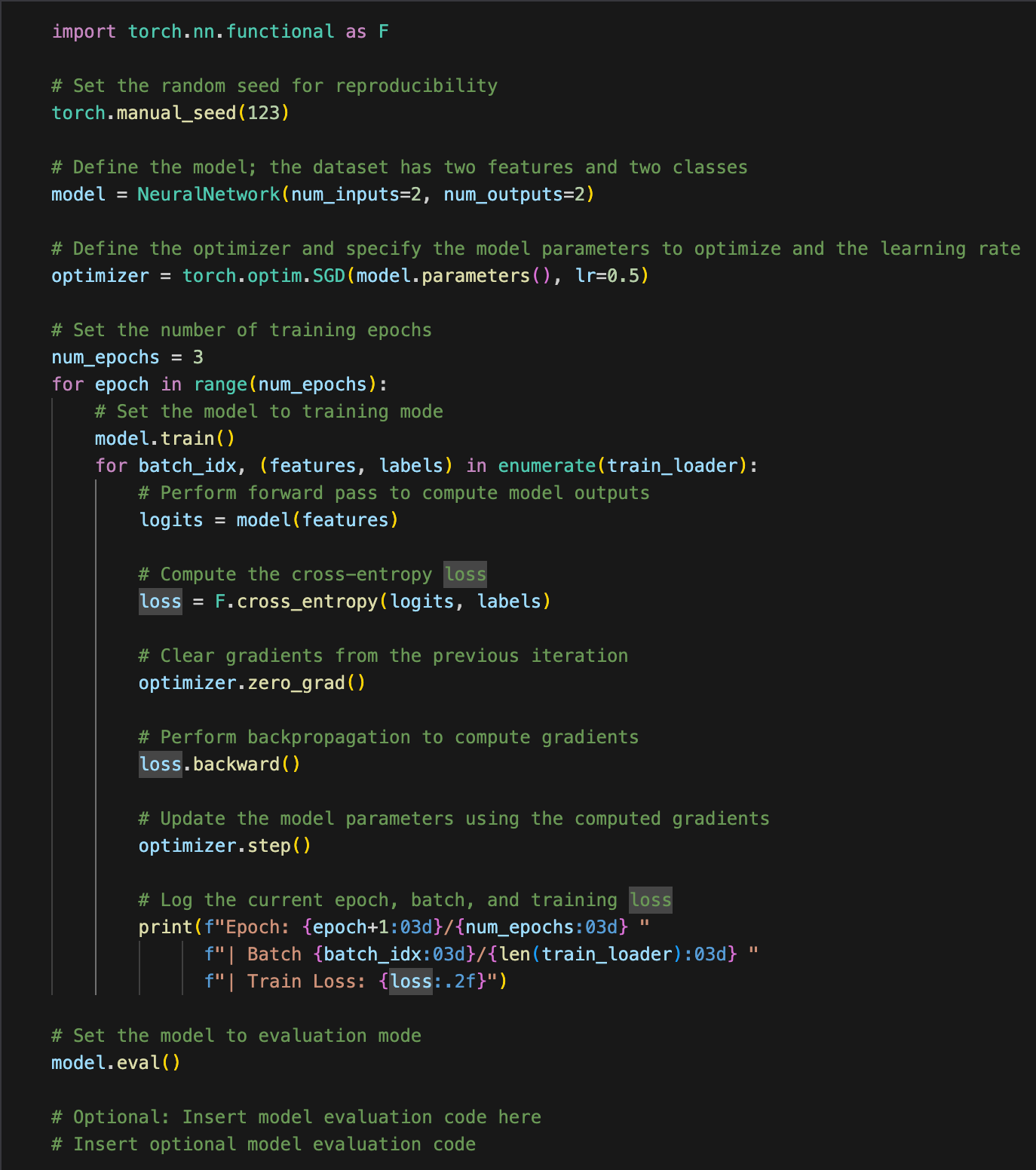

实现一个神经网络的循环的代码如下:

外层循环(epoch)负责多次遍历数据集,逐步改进模型性能。

内层循环(batch)在每次迭代中:

输入数据 ➡️ 前向传播 ➡️ 计算损失 ➡️ 反向传播 ➡️ 更新参数。

每个步骤环环相扣,训练的核心目标是通过优化模型参数,使训练样本的损失函数值尽可能小,从而提高模型的预测能力

The code for implementing a neural network training loop is as follows:

The outer loop (epoch) is responsible for iterating over the dataset multiple times to gradually improve model performance.

The inner loop (batch) in each iteration:

Input data ➡️ Forward propagation ➡️ Compute loss ➡️ Backward propagation ➡️ Update parameters.

Each step is interconnected, and the ultimate goal of training is to optimize the model parameters to minimize the loss function value on training samples, thereby improving the model's predictive capability.

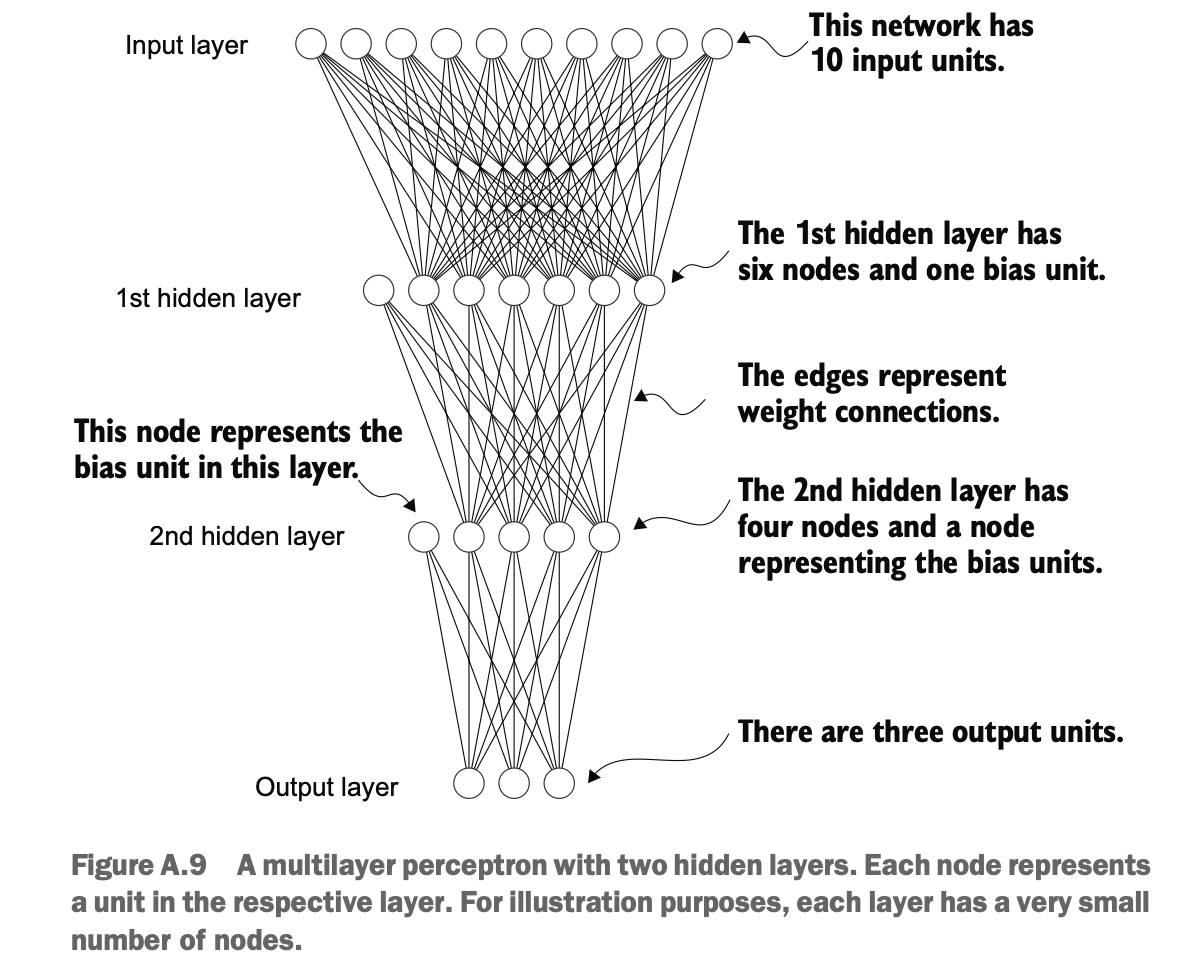

注意:

学习率是一种超参数,需要通过实验调整以观察损失的变化,目标是选择一个能让损失适时收敛的学习率。训练轮数(epochs)也是需要选择的超参数。

实际中,通常引入一个验证集(validation dataset)来调整超参数。验证集类似于测试集,但可以多次使用以优化模型设置,而测试集只用于最终评估。

model.train() 和 model.eval() 用于切换模型的训练模式和评估模式,区别在于一些组件(如 dropout 或批归一化)在两种模式下的行为不同。在评估或推理阶段,Dropout 会完全关闭,不会丢弃任何神经元。模型会使用所有的神经元进行推理,以确保预测的稳定性和一致性。

loss.backward() 计算损失相对于模型参数的梯度。optimizer.step() 使用这些梯度更新参数,在 SGD 中,通过将梯度乘以学习率后按负梯度方向更新参数。

每次参数更新之前,必须调用 optimizer.zero_grad() 将梯度清零,否则梯度会累积,导致意外行为。

Note:

The learning rate is a hyperparameter that needs to be adjusted through experiments to observe changes in loss. The goal is to choose a learning rate that allows the loss to converge appropriately. The number of training epochs is also a hyperparameter that needs to be selected.

In practice, a validation dataset is often introduced to tune hyperparameters. The validation dataset is similar to the test set but can be used multiple times to optimize model settings, whereas the test set is only used for final evaluation.

model.train() and model.eval() are used to switch between training mode and evaluation mode. The difference lies in the behavior of certain components (such as dropout or batch normalization) in these two modes. During evaluation or inference, dropout is completely turned off, meaning no neurons are dropped. The model uses all neurons for inference to ensure prediction stability and consistency.

loss.backward() computes the gradient of the loss with respect to the model parameters. optimizer.step() updates the parameters using these gradients. In SGD, parameters are updated in the negative gradient direction after multiplying the gradient by the learning rate.

Before each parameter update, optimizer.zero_grad() must be called to reset the gradients. Otherwise, gradients will accumulate, leading to unexpected behavior.
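The notes above map onto a loop like the following sketch. The toy data, the 2-30-20-2 layer sizes, the learning rate of 0.5 and the three epochs are illustrative assumptions rather than tuned values, and `TensorDataset` stands in for a custom dataset class.

```python
import torch
import torch.nn.functional as F
from torch.utils.data import DataLoader, TensorDataset

torch.manual_seed(123)

X_train = torch.tensor([[-1.2, 3.1], [-0.9, 2.9], [-0.5, 2.6],
                        [2.3, -1.1], [2.7, -1.5]])
y_train = torch.tensor([0, 0, 0, 1, 1])
train_loader = DataLoader(TensorDataset(X_train, y_train),
                          batch_size=2, shuffle=True, drop_last=True)

model = torch.nn.Sequential(
    torch.nn.Linear(2, 30), torch.nn.ReLU(),
    torch.nn.Linear(30, 20), torch.nn.ReLU(),
    torch.nn.Linear(20, 2),
)
optimizer = torch.optim.SGD(model.parameters(), lr=0.5)

num_epochs = 3
losses = []
for epoch in range(num_epochs):          # outer loop: passes over the dataset
    model.train()                        # training mode (matters for dropout etc.)
    for batch_idx, (features, labels) in enumerate(train_loader):
        logits = model(features)                 # forward pass
        loss = F.cross_entropy(logits, labels)   # softmax is applied internally

        optimizer.zero_grad()            # clear gradients from the previous step
        loss.backward()                  # backward pass: compute gradients
        optimizer.step()                 # update the parameters

        losses.append(loss.item())
        print(f"Epoch {epoch + 1:03d}/{num_epochs:03d} | "
              f"Batch {batch_idx:03d}/{len(train_loader):03d} | "
              f"Train loss: {loss.item():.2f}")

model.eval()                             # switch to evaluation mode afterwards
```

`F.cross_entropy` expects raw logits, which is one reason the model's last layer has no activation function.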

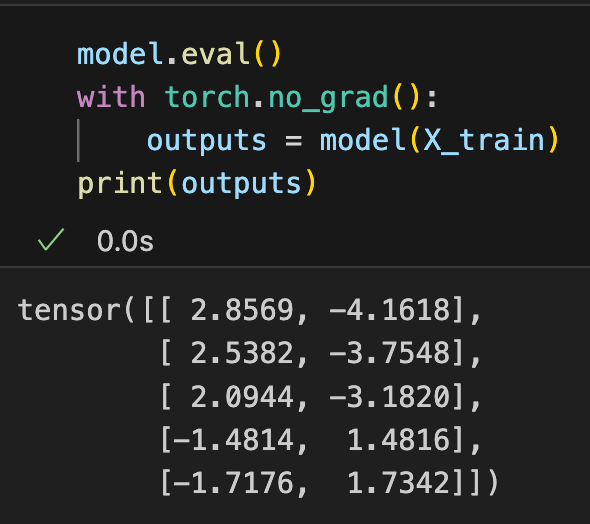

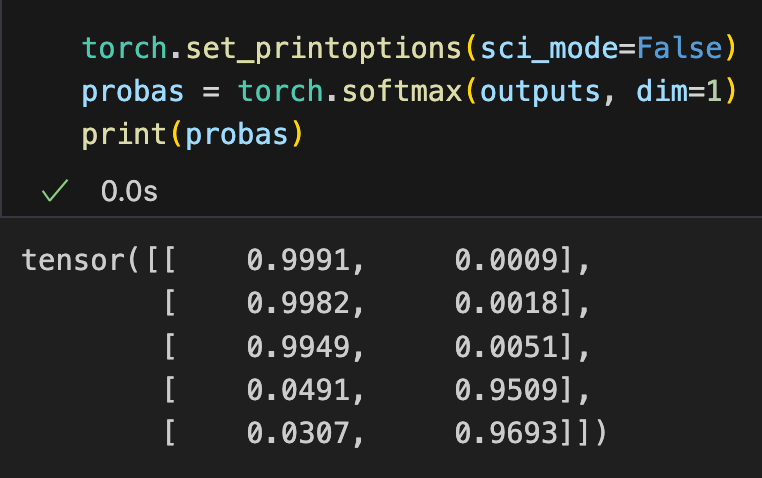

训练完成后,模型可用于进行预测。由于我们定义了输出维度=2,所以输出了一个维度2的logits,可以使用softmax进行转换成概率(也可以不进行转换)

After training is complete, the model can be used for predictions. Since we defined an output dimension of 2, the model outputs a 2-dimensional logits vector, which can be converted into probabilities using softmax (or left unconverted).

然后使用argmax选出对应的分类值

Then, we use argmax to select the corresponding classification value.

然后我们用预测结果和真实值比较看看

Finally, we compare the predicted results with the actual values.

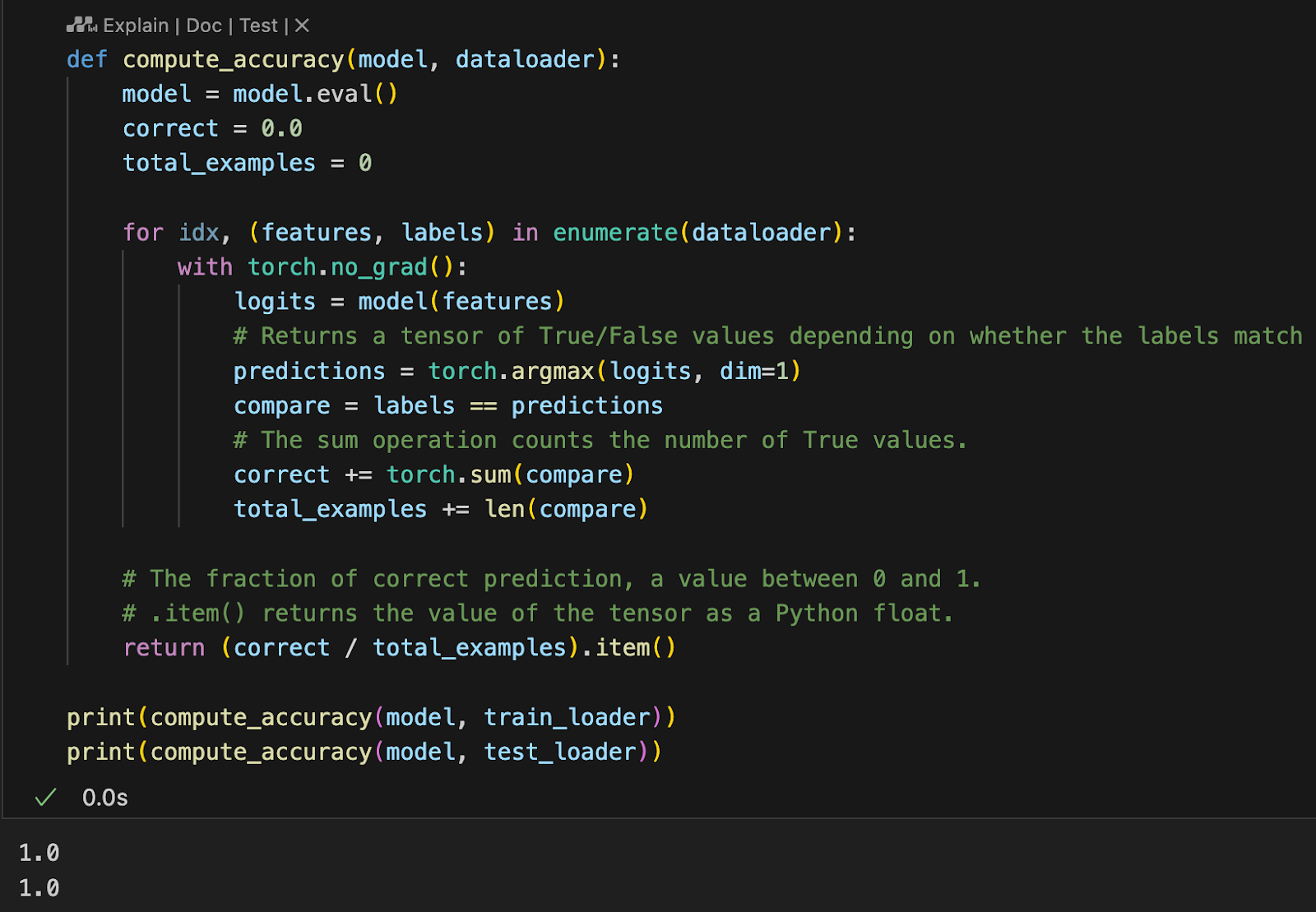

我们可以封装一个评估方法,对训练集和测试集进行评估(实际对于海量数据,我们只能抽样)

We can encapsulate an evaluation method to assess the model on the training and test sets (for large datasets, we can only sample a subset for evaluation).
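An evaluation helper along these lines could be sketched as follows. The function name `compute_accuracy` is illustrative. To keep the example self-contained and deterministic, the "model" here is a single linear layer with hand-set weights standing in for a trained network (an assumption made only for this sketch): it predicts class 1 whenever the first feature is positive.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset


def compute_accuracy(model, dataloader):
    """Fraction of examples whose argmax prediction matches the label."""
    model.eval()
    correct, total = 0, 0
    for features, labels in dataloader:
        with torch.no_grad():                      # no gradients needed here
            logits = model(features)
        predictions = torch.argmax(logits, dim=1)  # most likely class per row
        correct += (predictions == labels).sum().item()
        total += len(labels)
    return correct / total


X_train = torch.tensor([[-1.2, 3.1], [-0.9, 2.9], [-0.5, 2.6],
                        [2.3, -1.1], [2.7, -1.5]])
y_train = torch.tensor([0, 0, 0, 1, 1])
loader = DataLoader(TensorDataset(X_train, y_train), batch_size=2)

# Stand-in for a trained model: logits are [-x0, x0]
model = torch.nn.Linear(2, 2)
with torch.no_grad():
    model.weight.copy_(torch.tensor([[-1.0, 0.0], [1.0, 0.0]]))
    model.bias.zero_()

accuracy = compute_accuracy(model, loader)
print(f"Accuracy: {accuracy:.2%}")
```

Counting correct predictions batch by batch, rather than calling the model on the whole dataset at once, keeps memory use bounded for larger datasets.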

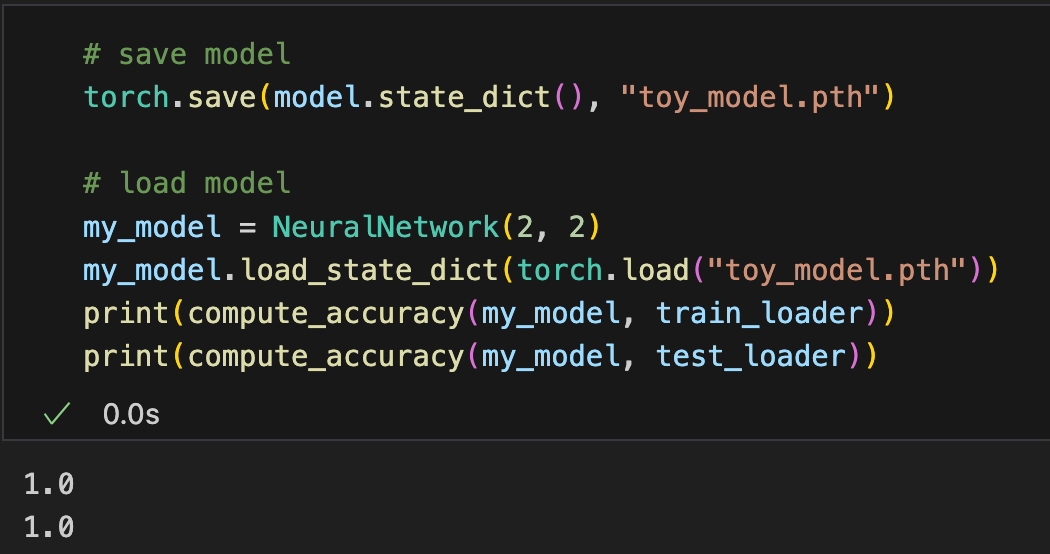

A.8 保存和加载模型 Saving and Loading Models

使用pytorch提供的方法我们可以轻松保存和加载模型,注意加载的模型和保存的模型对应的参数权重等尺寸应该要对应才行

Using the methods provided by PyTorch, we can easily save and load models. Note that the loaded model and the saved model should have corresponding parameter weights and dimensions.
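One common pattern is to save only the `state_dict` (the parameter tensors) and load it into a freshly constructed model with the same architecture. The sketch below assumes that pattern; the file name `model.pth`, the helper `make_model` and the use of a temporary directory are choices made for this example.

```python
import os
import tempfile

import torch


def make_model():
    # The architecture must match between saving and loading
    return torch.nn.Sequential(
        torch.nn.Linear(2, 30), torch.nn.ReLU(),
        torch.nn.Linear(30, 20), torch.nn.ReLU(),
        torch.nn.Linear(20, 2),
    )


torch.manual_seed(123)
model = make_model()

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "model.pth")

    # Save only the parameters, not the Python class
    torch.save(model.state_dict(), path)

    # Recreate the architecture, then copy the saved parameters into it
    restored = make_model()
    restored.load_state_dict(torch.load(path))

same = all(
    torch.equal(a, b)
    for a, b in zip(model.state_dict().values(),
                    restored.state_dict().values())
)
print("Parameters identical after reload:", same)
```

If the layer sizes of the new model differ from the saved ones, `load_state_dict` raises an error, which is the size mismatch the note above warns about.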

A.9 对使用GPU训练进行调优 Optimizing GPU Training

接下来我们看看如何尽可能压榨GPU性能(远快于CPU),来提高神经网络的性能

Next, let's look at how to make the most of the GPU, which is usually much faster than the CPU for this kind of workload, to speed up neural network training.

A.9.1 使用PyTorch在GPU设备上计算 Using PyTorch for Computation on GPU Devices

PyTorch支持GPU计算,我们可以通过 torch.cuda.is_available()检查是否可用,使用GPU 训练模型只需要修改少量代码,非常方便

设备(device):是指 计算发生的地方,可以是 CPU 或 GPU

PyTorch 的张量(tensor) 存放在某个设备上,所有计算必须在同一设备上进行

PyTorch supports GPU computation. We can check availability using torch.cuda.is_available(). Training a model on a GPU requires only minor code modifications, making it very convenient.

Device: This refers to the location where computation occurs, which can be either a CPU or a GPU.

PyTorch tensors: Stored on a specific device, and all computations must be performed on the same device.

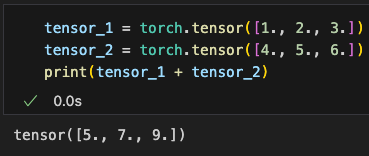

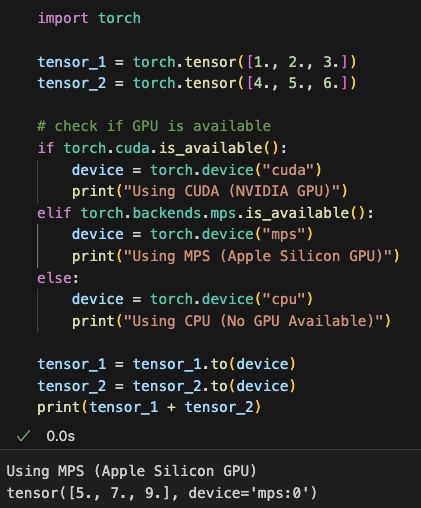

比如两个向量相加,默认是在CPU上计算

For example, adding two vectors by default is computed on the CPU.

我们也可以根据现有设备(GPU/苹果芯片/CPU),把数据放到对应设备进行计算(所有相关计算需要放到同一个设备中)

We can also place data on the appropriate device (GPU/Apple chip/CPU) based on the available hardware for computation (all related computations must be performed on the same device).

这时计算结果就会显示其所在的设备信息device='mps:0'

In this case, the computation result will display its device information as device='mps:0'.
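Moving tensors to whichever accelerator is present can be sketched like this. The printed device string depends on the machine (for example `cuda:0`, `mps:0`, or none at all on CPU), so no particular output is assumed.

```python
import torch

# Pick the best available device, falling back to the CPU
if torch.cuda.is_available():
    device = torch.device("cuda")
elif getattr(torch.backends, "mps", None) is not None and torch.backends.mps.is_available():
    device = torch.device("mps")
else:
    device = torch.device("cpu")

tensor_1 = torch.tensor([1.0, 2.0, 3.0]).to(device)
tensor_2 = torch.tensor([4.0, 5.0, 6.0]).to(device)

# Both operands live on the same device, so the addition runs there
result = tensor_1 + tensor_2
print(result)
```

Adding a tensor on the CPU to one on a GPU raises an error, which is why both operands are moved first.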

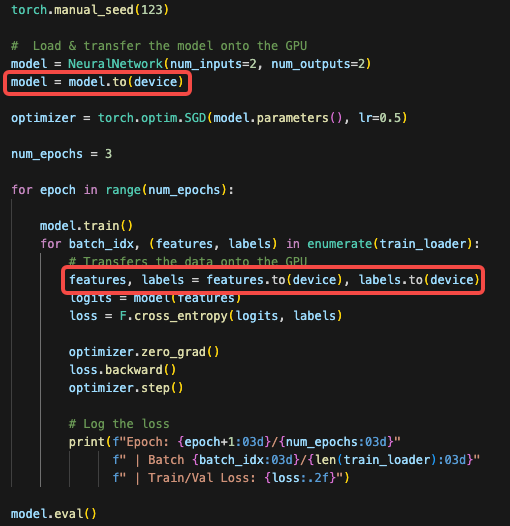

A.9.2 使用单GPU训练 Training with a Single GPU

如图,一个将数据和模型放到GPU训练的例子,使用to(device)放入GPU提升性能

As shown in the example, placing data and models on a GPU using to(device) can improve performance.

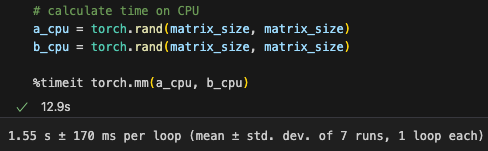

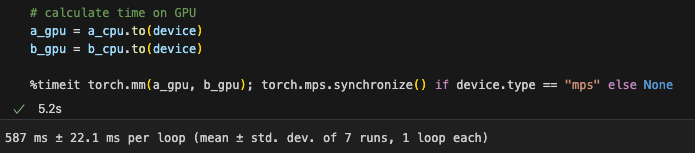

我们可以使用矩阵相乘比较数据放入GPU前后的计算性能耗时,虽然数据从CPU拷贝到GPU需要时间,但当矩阵规模变大后性能提升明显

We can compare computation time before and after moving data to the GPU using matrix multiplication. Although copying data from the CPU to the GPU takes time, performance improvements become significant as matrix size increases.
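A rough timing comparison could be sketched as below. The helper name `time_matmul`, the matrix size and the repeat count are arbitrary assumptions. GPU kernels run asynchronously, so the sketch forces completion by copying a scalar back to the CPU before stopping the clock, which adds a small overhead of its own. On a CPU-only machine both timings simply measure the CPU.

```python
import time

import torch


def time_matmul(matrix, device, repeats=3):
    """Average seconds per matrix multiplication on the given device."""
    m = matrix.to(device)          # includes nothing in the timing yet
    _ = (m @ m).sum().item()       # warm-up run; .item() waits for the device
    start = time.perf_counter()
    for _ in range(repeats):
        out = m @ m
    _ = out.sum().item()           # forces all queued work to finish
    return (time.perf_counter() - start) / repeats


if torch.cuda.is_available():
    fast_device = torch.device("cuda")
elif getattr(torch.backends, "mps", None) is not None and torch.backends.mps.is_available():
    fast_device = torch.device("mps")
else:
    fast_device = torch.device("cpu")

matrix = torch.rand(512, 512)
cpu_time = time_matmul(matrix, torch.device("cpu"))
fast_time = time_matmul(matrix, fast_device)

print(f"cpu: {cpu_time:.6f}s | {fast_device.type}: {fast_time:.6f}s")
```

Increasing the matrix size is the easiest way to see where the accelerator starts to pay off despite the transfer cost.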

A.9.3 使用多GPU训练 Training with Multiple GPUs

分布式训练指的是将模型训练任务划分到多个GPU或计算机上进行,这样做的目的是加速训练过程,减少模型训练时间

Distributed training refers to dividing the model training task across multiple GPUs or computers. This approach aims to speed up training and reduce model training time.

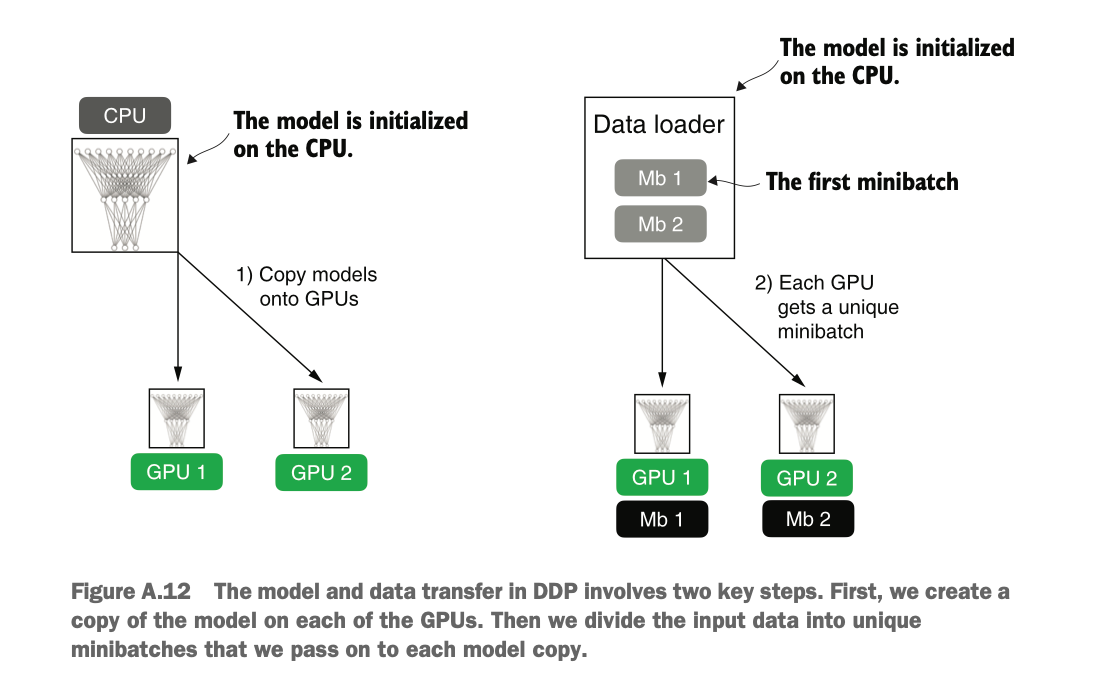

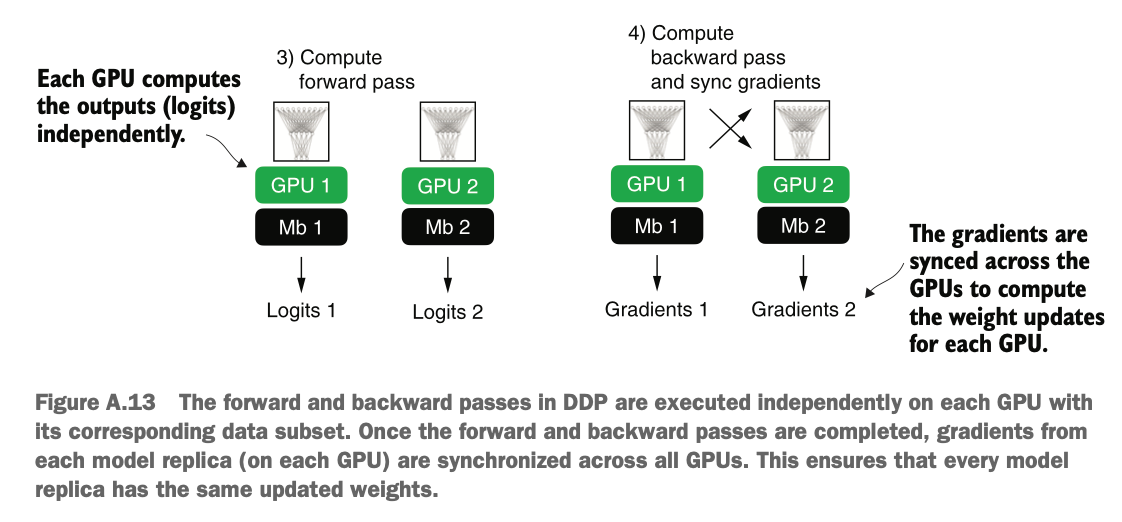

介绍一下常用的分布式训练方法 DistributedDataParallel (DDP) 。它的核心思想是:

模型复制:在每张GPU上启动一个独立进程,并复制模型,使每个GPU都有一份相同的模型副本。

数据划分:使用 DistributedSampler 确保每个GPU接收到的训练数据不重叠,即每个GPU处理不同的小批量数据(minibatch)。

梯度计算与同步:由于每个模型副本处理不同的数据,它们会计算不同的梯度。在反向传播时,这些梯度会被平均并同步,以确保模型参数保持一致,不会出现分歧。

这样,通过让多个GPU并行处理不同数据的方式,可以加速训练并提高计算效率。但同时要注意,GPU之间的通信有开销,且不能直接在Jupyter上运行DDP(需要独立脚本)

One commonly used distributed training method is DistributedDataParallel (DDP). Its core concepts are:

Model Replication: A separate process is launched on each GPU, and the model is replicated so that each GPU has an identical copy.

Data Partitioning: DistributedSampler ensures that each GPU receives distinct training data, meaning each GPU processes different mini-batches.

Gradient Computation and Synchronization: Since each model replica processes different data, they compute different gradients. During backpropagation, these gradients are averaged and synchronized to ensure model parameters remain consistent.

By allowing multiple GPUs to process different data in parallel, this method accelerates training and improves computational efficiency. However, it is important to note that communication between GPUs introduces overhead, and DDP cannot be run directly in Jupyter (it requires a standalone script).

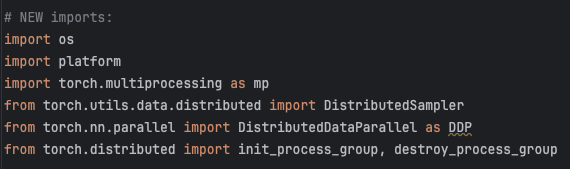

我们写一个具体的python脚本实现GPU分布式训练,首先需要导入相关依赖:

We write a specific Python script to implement GPU distributed training. First, we need to import the necessary dependencies:

multiprocessing.spawn 创建多个训练进程:每个 GPU 运行一个进程,实现并行计算

DistributedSampler 处理数据分配:确保每个 GPU 处理不同的数据,避免数据重复计算

init_process_group 初始化分布式训练:设置 backend、world_size 和 rank,确保进程通信

destroy_process_group 结束分布式训练:释放资源,防止进程残留

multiprocessing.spawn: Creates multiple training processes, with each GPU running one process to achieve parallel computation.

DistributedSampler: Manages data distribution, ensuring that each GPU processes different data and avoids redundant computations.

init_process_group: Initializes distributed training, setting the backend, world_size, and rank to ensure process communication.

destroy_process_group: Ends distributed training, freeing resources and preventing residual processes.

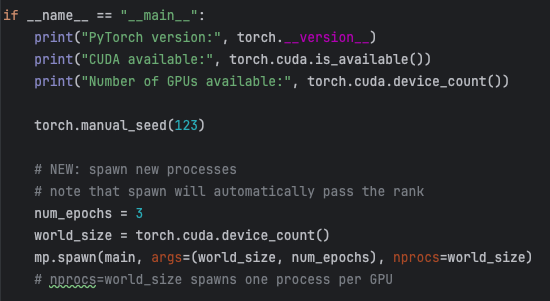

主程序入口里,我们首先使用 torch.cuda.device_count() 打印可用 GPU 的数量,接着设置一个随机种子以保证可复现性,然后使用 PyTorch 的 multiprocessing.spawn 函数启动多个新进程。

In the main program entry, we first print the number of available GPUs using torch.cuda.device_count(). Then, we set a random seed to ensure reproducibility and use PyTorch’s multiprocessing.spawn function to launch multiple new processes.

在这里,spawn 函数会根据 nprocs=world_size(其中 world_size 是可用 GPU 的数量)为每块 GPU 启动一个进程,spawn 函数会在同一脚本中调用 main 函数,并传递一些额外的参数(通过 args 传递)。

Here, the spawn function starts one process per GPU based on nprocs=world_size (where world_size is the number of available GPUs). The spawn function calls the main function within the same script and passes some additional arguments (via args).

需要注意的是,main 函数会自带一个 rank 参数,但我们在 mp.spawn() 调用时并没有显式传递它。 这是因为 rank(即进程 ID,它同时作为 GPU ID)会被自动传递。

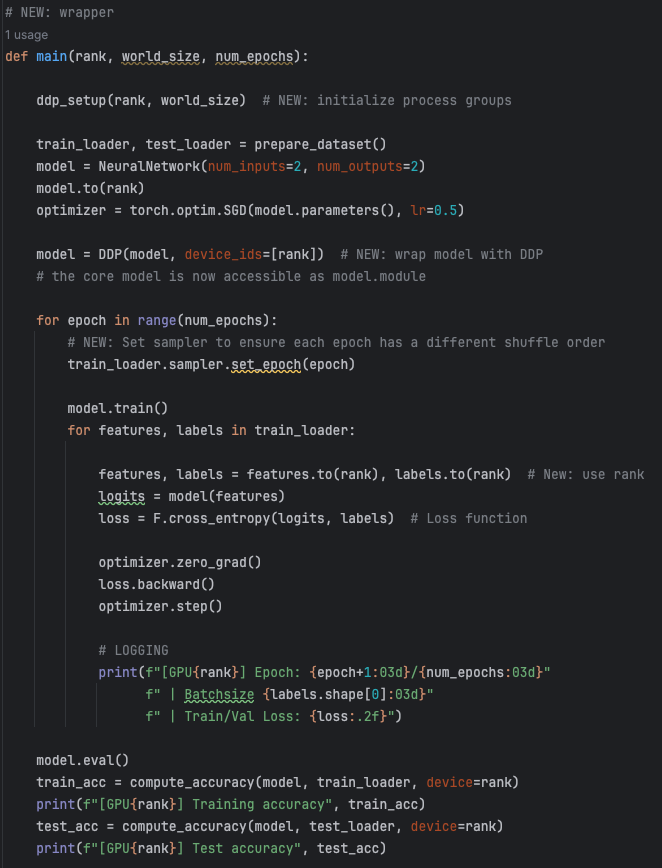

main 函数的作用如下:

通过 ddp_setup(我们定义的另一个函数)初始化分布式环境

加载训练集和测试集

设置模型

执行训练

清理资源并退出

It is important to note that the main function takes rank as its first parameter, even though we do not explicitly pass it when calling mp.spawn(). This is because spawn automatically passes the process index as the first argument, and this rank serves as both the process ID and the GPU ID.

The main function performs the following tasks:

Initializes the distributed environment using ddp_setup (a function we define).

Loads the training and test datasets.

Sets up the model.

Executes training.

Cleans up resources and exits.

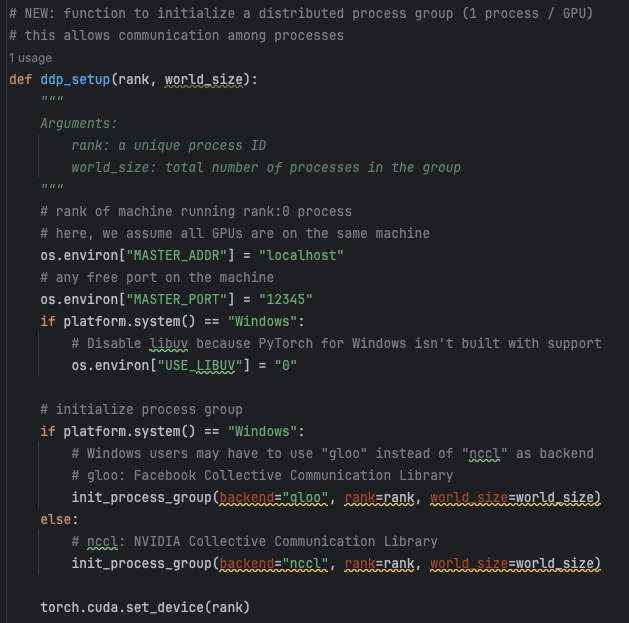

对于main执行的第一步,是一个ddp_setup函数用于初始化:

它设置主节点的地址和端口,使不同进程之间能够通信,

使用 NCCL 后端(专为 GPU 之间的通信设计)初始化进程组,

设定 rank(进程 ID)和 world_size(总进程数),

确保每个训练进程绑定到正确的 GPU 设备。

For the first step in executing main, a ddp_setup function is used for initialization:

It sets the address and port of the master node to enable communication between different processes,

initializes the process group using the NCCL backend (designed specifically for communication between GPUs),

sets the rank (process ID) and world_size (total number of processes),

and ensures that each training process is bound to the correct GPU device.
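A setup function with these four steps might be sketched as follows. The address `localhost` and port `12345` are placeholder assumptions for single-machine training, and the fallback to the `gloo` backend on machines without CUDA is an addition made here so the sketch is not tied to GPUs; the text itself describes the NCCL case.

```python
import os

import torch
from torch.distributed import init_process_group


def ddp_setup(rank, world_size):
    """Initialize the process group for one training process.

    rank:       unique ID of this process (also used as the GPU index)
    world_size: total number of processes taking part in training
    """
    # Address and port of the master node; every process must use the same values
    os.environ["MASTER_ADDR"] = "localhost"
    os.environ["MASTER_PORT"] = "12345"

    # NCCL is designed for GPU-to-GPU communication; gloo also works on CPUs
    backend = "nccl" if torch.cuda.is_available() else "gloo"
    init_process_group(backend=backend, rank=rank, world_size=world_size)

    # Bind this process to its own GPU so tensors land on the right device
    if torch.cuda.is_available():
        torch.cuda.set_device(rank)
```

The function is meant to be called once at the start of each spawned process, with a matching `destroy_process_group()` call at the end of training.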

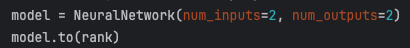

训练时相比单 GPU ,我们现在需要使用 .to(rank) 将模型和数据传输到目标设备,其中 rank 指的是 GPU 设备 ID

During training, compared to using a single GPU, we now need to use .to(rank) to transfer the model and data to the target device, where rank refers to the GPU device ID.

并且,我们使用 DDP(DistributedDataParallel)封装模型进行信息关联,使不同 GPU 之间的梯度能够在训练过程中同步

Additionally, we wrap the model with DDP (DistributedDataParallel) to enable gradient synchronization between different GPUs during training.

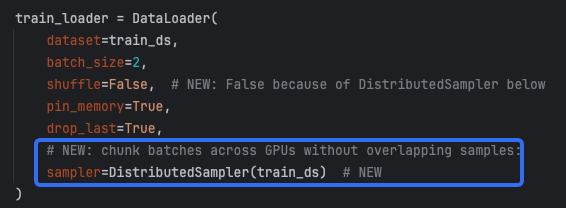

另外,为了实现每块 GPU 都会接收训练数据的不同子集,我们要在数据加载器中设置 sampler=DistributedSampler(train_ds)以进行数据划分

Furthermore, to ensure that each GPU receives a different subset of the training data, we need to set sampler=DistributedSampler(train_ds) in the data loader to partition the dataset.

训练完成并评估模型后,我们调用 destroy_process_group() 以干净地退出分布式训练,并释放分配的资源。

After training is completed and the model is evaluated, we call destroy_process_group() to cleanly exit distributed training and release allocated resources.

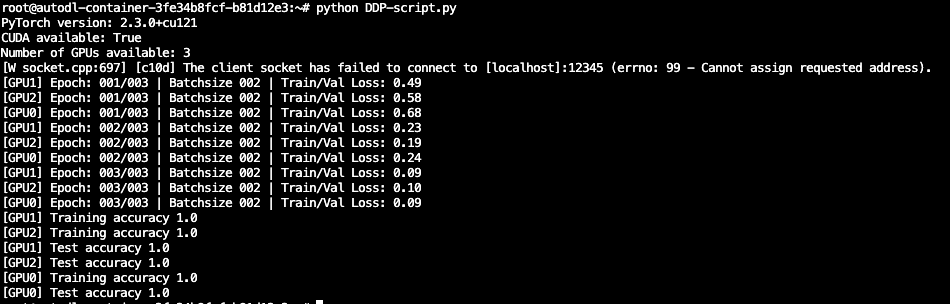

使用AutoDL提供的Jupyter笔记,用三个GPU运行试试,得到如下结果

Using AutoDL's Jupyter notebook, we ran the training on three GPUs and obtained the following results.

梳理一下,数据划分并行计算涉及的关键代码:

mp.spawn(main, args=(world_size, num_epochs), nprocs=world_size) —— 启动多个进程,每个 GPU 运行一个训练进程。

torch.cuda.set_device(rank) —— 绑定指定GPU进程。

DistributedSampler(train_ds) —— 负责数据集划分到不同 GPU。

DDP(model, device_ids=[rank]) —— 让模型关联多个 GPU 间并行训练。

train_loader.sampler.set_epoch(epoch) —— 让 DistributedSampler 在每个 epoch 使用不同的 shuffle 顺序,同时保证各进程使用同一个排列,数据划分不重叠。

To summarize, the key code involved in data partitioning and parallel computation includes:

mp.spawn(main, args=(world_size, num_epochs), nprocs=world_size): Launches multiple processes, with each GPU running a training process.

torch.cuda.set_device(rank): Binds the specified GPU to the process.

DistributedSampler(train_ds): Handles dataset partitioning across different GPUs.

DDP(model, device_ids=[rank]): Enables parallel training of the model across multiple GPUs.

train_loader.sampler.set_epoch(epoch): Reseeds DistributedSampler so that each epoch uses a different shuffle order, while all processes still agree on the same permutation and therefore receive non-overlapping data.
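How DistributedSampler splits the data can be inspected without launching several processes by passing `num_replicas` and `rank` explicitly. The six-element dataset, the two simulated ranks and the helper name `indices_for` are assumptions made for this sketch; in a real DDP run these values come from the process group.

```python
import torch
from torch.utils.data import TensorDataset
from torch.utils.data.distributed import DistributedSampler

dataset = TensorDataset(torch.arange(6))  # six placeholder examples
world_size = 2                            # pretend there are two GPUs


def indices_for(rank, epoch):
    """Dataset indices that the given rank would process in the given epoch."""
    sampler = DistributedSampler(
        dataset, num_replicas=world_size, rank=rank, shuffle=True, seed=0
    )
    sampler.set_epoch(epoch)  # the shuffle order is derived from seed + epoch
    return list(sampler)


for epoch in range(2):
    rank0 = indices_for(0, epoch)
    rank1 = indices_for(1, epoch)
    print(f"epoch {epoch}: rank 0 -> {rank0}, rank 1 -> {rank1}")
```

Within one epoch the two ranks receive disjoint halves of the dataset because both derive the same permutation from the shared seed and epoch number.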

另外,我们可以在多 GPU 机器上限制训练使用的 GPU 数量,方法是使用 CUDA_VISIBLE_DEVICES 环境变量例如

CUDA_VISIBLE_DEVICES=0 python some_script.py 表示仅使用 GPU 0。

CUDA_VISIBLE_DEVICES=0,2 python some_script.py 表示仅使用 GPU 0 和 GPU 2。

这样可以在 不修改 PyTorch 代码的情况下,有效地管理 GPU 资源

Additionally, we can limit the number of GPUs used for training on a multi-GPU machine by setting the CUDA_VISIBLE_DEVICES environment variable. For example:

CUDA_VISIBLE_DEVICES=0 python some_script.py means only GPU 0 will be used.

CUDA_VISIBLE_DEVICES=0,2 python some_script.py means only GPU 0 and GPU 2 will be used.

This allows effective GPU resource management without modifying the PyTorch code.

总结 Summary

PyTorch 是一个开源库,包含三个核心组件:张量库、自动求导函数和深度学习工具。

PyTorch 的张量库类似于 NumPy 等数组库。

在 PyTorch 中,张量(tensor)是一种类似数组的数据结构,可用于表示 标量、向量、矩阵以及高维数组。

PyTorch 的张量可以在 CPU 上执行,但其主要优势在于 支持 GPU 加速计算。

PyTorch 的 自动求导(autograd) 机制使我们能够 通过反向传播轻松训练神经网络,而无需手动计算梯度。

PyTorch 提供的 深度学习工具 能够作为 构建自定义深度神经网络的基础模块。

PyTorch 提供了 Dataset 和 DataLoader 类,用于 高效地加载数据。

在 CPU 或单个 GPU 上训练模型最简单。

DistributedDataParallel(DDP) 是 PyTorch 中加速多 GPU 训练的最简单方式。

PyTorch is an open-source library that consists of three core components: a tensor library, an automatic differentiation engine, and deep learning tools.

PyTorch's tensor library is similar to array libraries like NumPy.

In PyTorch, a tensor is an array-like data structure that can represent scalars, vectors, matrices, and high-dimensional arrays.

PyTorch tensors can be executed on a CPU, but their main advantage is support for GPU-accelerated computation.

PyTorch's automatic differentiation (autograd) mechanism allows us to easily train neural networks using backpropagation without manually computing gradients.

PyTorch provides deep learning tools that serve as fundamental modules for building custom deep neural networks.

PyTorch offers the Dataset and DataLoader classes for efficient data loading.

Training a model on a CPU or a single GPU is the simplest approach.

DistributedDataParallel (DDP) is the easiest way to accelerate multi-GPU training in PyTorch.