Blog Content

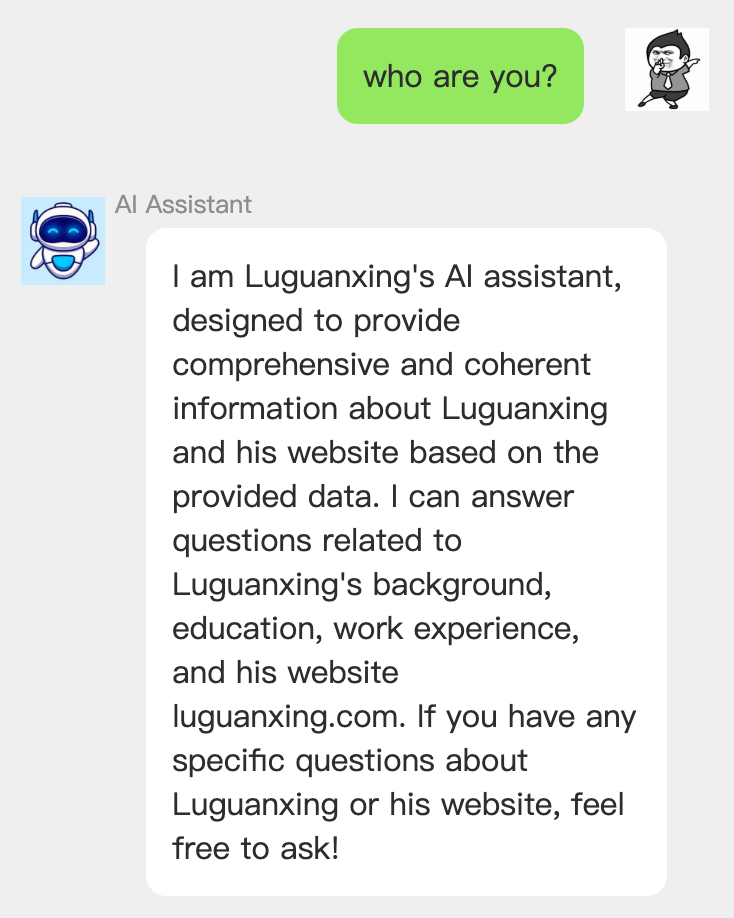

Building a Website AI Assistant

I built an AI customer service bot on top of my own knowledge base. It answers each question using the material in that knowledge base, based on a RAG architecture with local vector retrieval.

Overview

AI customer service bot entry point: here

I built the AI customer service bot because it improves efficiency on both sides. Users get answers quickly instead of digging through cumbersome material, and I no longer need to reformat and lay out the material I maintain; I just put it into the knowledge base.

Principle

RAG (Retrieval-Augmented Generation) combines two techniques: a retrieval model and a generation model. First, the retrieval model converts the input query into a vector and retrieves relevant documents from an external knowledge base. Then the generation model (e.g., GPT or BART) produces an answer or text based on the retrieved documents. The advantage of RAG is that it pulls information from an external knowledge base and combines it with generation to produce more accurate and more informative responses.
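The retrieval step described above can be sketched in a few lines of plain Python. This is only an illustration, not the code used by the bot: the three-dimensional vectors stand in for real embeddings, and the names `cosine_similarity`, `retrieve_top_k`, and `KNOWLEDGE_BASE` are made up for this example.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def retrieve_top_k(query_vec, knowledge_base, k=2):
    """Return the k texts most similar to query_vec, best match first.

    knowledge_base is a list of (text, vector) pairs.
    """
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in knowledge_base]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]

# Toy 3-dimensional "embeddings", invented for illustration only.
KNOWLEDGE_BASE = [
    ("How to reset a password", [0.9, 0.1, 0.0]),
    ("Site opening hours", [0.0, 0.8, 0.6]),
    ("How to change an email address", [0.7, 0.3, 0.1]),
]

if __name__ == "__main__":
    print(retrieve_top_k([1.0, 0.0, 0.0], KNOWLEDGE_BASE, k=2))
```

In a real setup the query vector would come from the same embedding model that produced the stored vectors; otherwise the similarity scores are meaningless.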

Compared with a fine-tuned model, however, RAG has some drawbacks. First, it depends on the quality of the retrieval model: inaccurate retrieval results degrade the generated output. In addition, RAG requires ongoing maintenance of an external knowledge base, which adds complexity and maintenance cost, and the retrieval step makes responses slower. In contrast, a fine-tuned model learns directly from task-specific data, so it can respond faster and, within the scope of that data, more precisely, without depending on an external knowledge base.

Architecture and Details

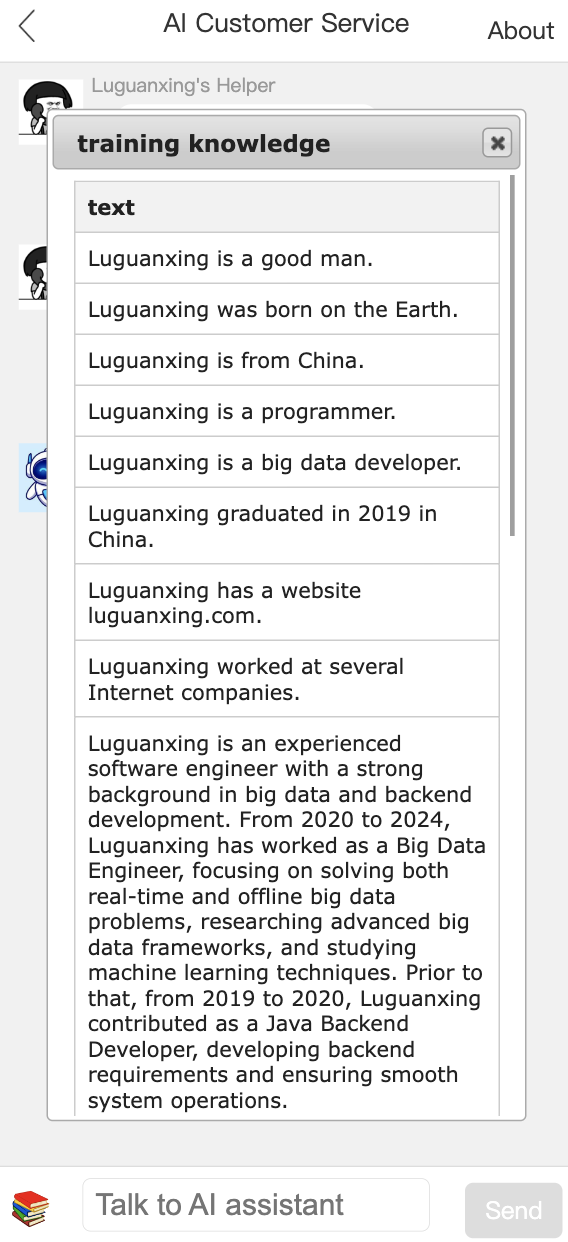

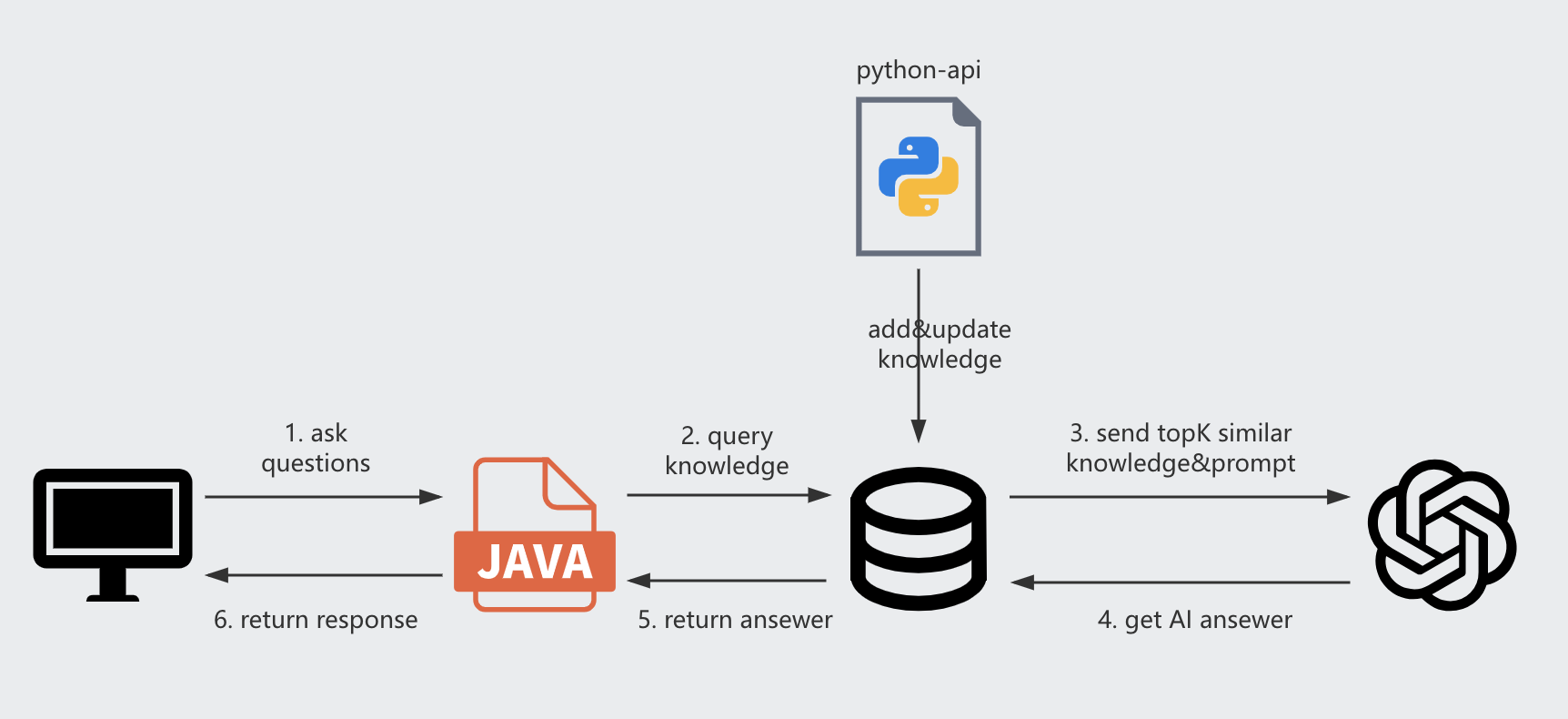

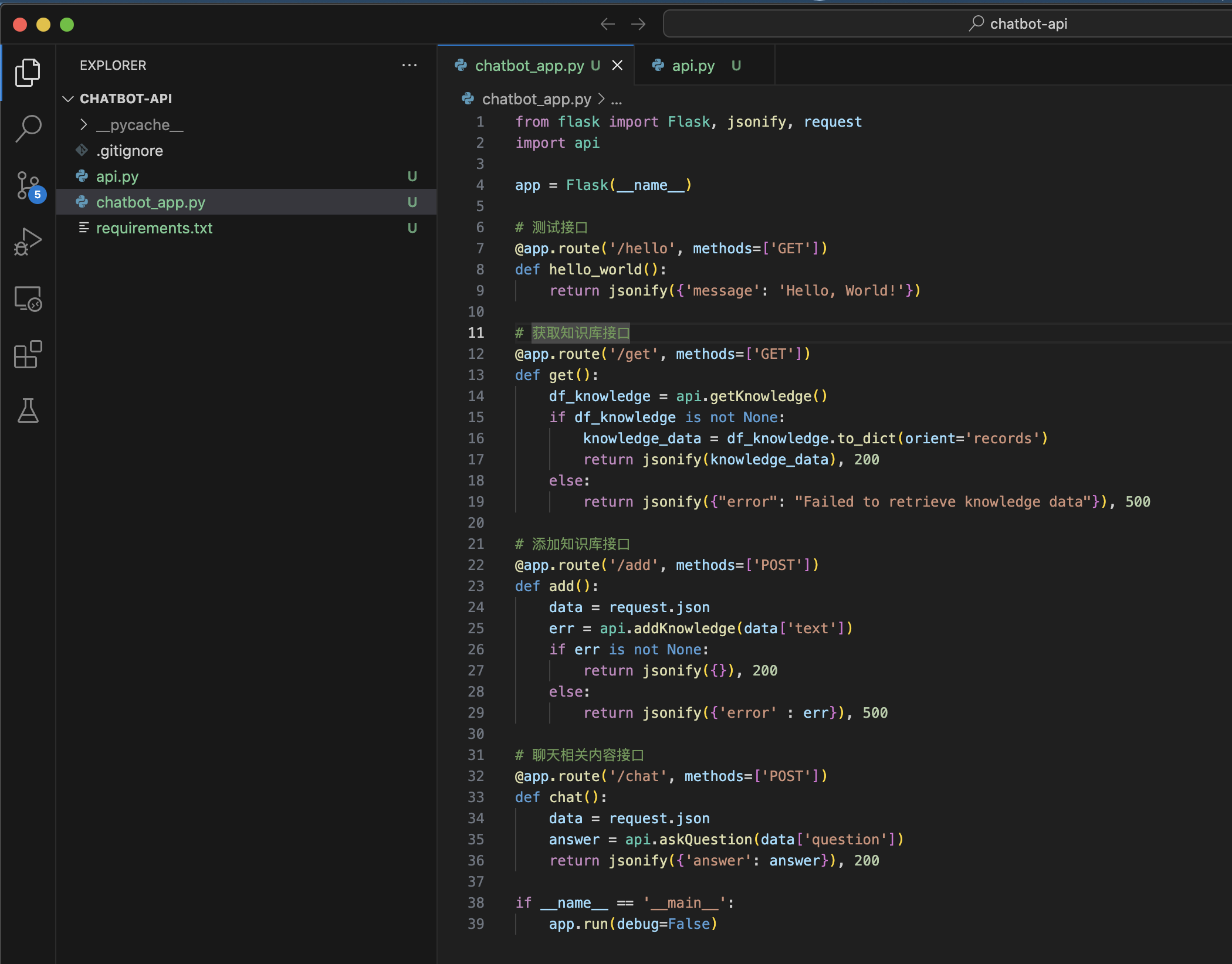

First, I built a service called chatbot-api with Python Flask and deployed it on the internal network. It maintains the knowledge base and implements RAG. The code can be found here; it restricts the instructions (only questions within the relevant scope are allowed) and sets the relevant GPT parameters.

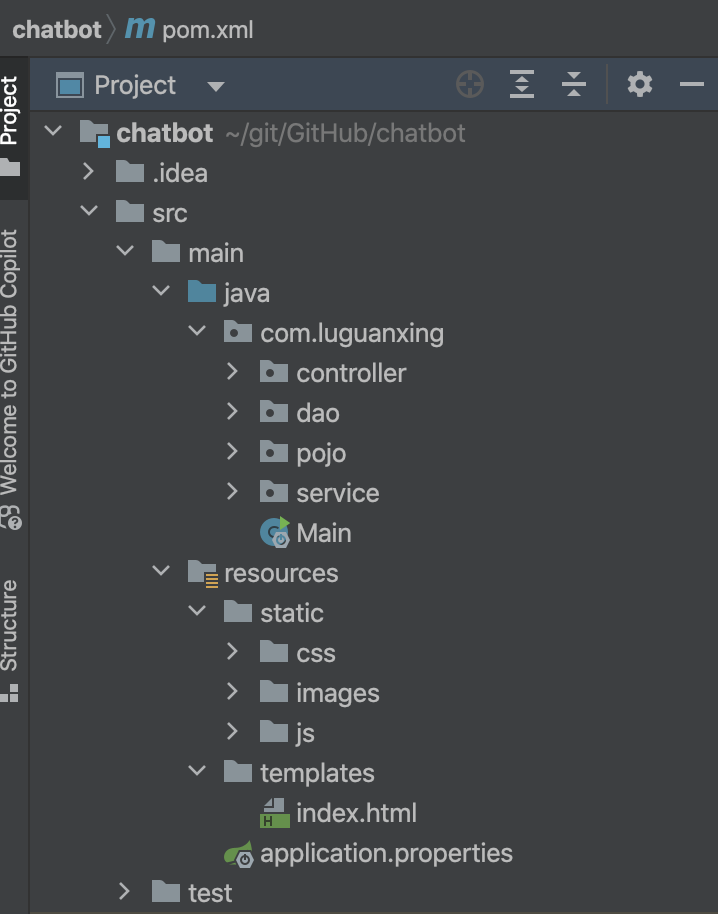

Next comes the external Spring Boot web application. The overall architecture is not complex: the front end is a chat window for interaction, and the back end queries the knowledge database directly to find similar knowledge entries. It then sends the top-K results together with the prompt to the GPT interface, which composes the final answer.
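As a rough sketch of that last step, the snippet below combines the top-K results with a scope-limiting prompt into a request payload in the common chat-completions message format. The prompt wording, the function name `build_chat_request`, the placeholder model name, and the parameter values are all assumptions made for illustration; the original code may differ.

```python
# Hypothetical system prompt; the wording actually used by the bot is not shown in this post.
SYSTEM_PROMPT = (
    "You are the assistant for this website. Answer only from the context "
    "below. If the question is outside that scope, politely decline."
)

def build_chat_request(question, top_k_chunks, model="MODEL_NAME_PLACEHOLDER"):
    """Assemble a chat-completions style payload from the question and retrieved chunks."""
    # Number the chunks so the model can tell them apart.
    context = "\n\n".join(
        f"[{i}] {chunk}" for i, chunk in enumerate(top_k_chunks, start=1)
    )
    return {
        "model": model,
        "temperature": 0.2,  # assumed value: low temperature for factual answers
        "max_tokens": 512,   # assumed value: cap on the answer length
        "messages": [
            {"role": "system", "content": f"{SYSTEM_PROMPT}\n\nContext:\n{context}"},
            {"role": "user", "content": question},
        ],
    }

if __name__ == "__main__":
    request = build_chat_request(
        "How do I reset my password?",
        ["How to reset a password", "How to change an email address"],
    )
    print(request["messages"][0]["content"])
```

Putting the retrieved chunks in the system message keeps the user's question untouched, which makes it easier to log and debug what was actually asked.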

Summary and Optimization

So far I am fairly satisfied with the overall result. The prompt successfully limits the scope of the conversation, and the embedding-based similarity matching also works well.

Areas for improvement:

The setup currently combines two technology stacks, Java's Spring Boot and Python's Flask, which makes maintenance fairly complex. Because the project was previously deployed on Tomcat, the front-end pages are still served by Spring Boot. However, Java's support for the OpenAI libraries is not very convenient, so Python is used to call the OpenAI library. Implementing everything in a single language would be ideal.

Because each request is an independent API call, the bot has no memory of earlier turns, which sometimes leads to poor answers. This still needs work: the conversation context has to be included in every request, which consumes a lot of tokens.
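One possible way to add context without letting token usage grow without bound is a sliding window over recent turns. The sketch below is an assumption about how this could be done, not the bot's current behaviour; `ConversationMemory` is a hypothetical helper, and it uses character count as a crude stand-in for token count.

```python
class ConversationMemory:
    """Keeps the most recent turns that fit inside a rough size budget."""

    def __init__(self, max_chars=2000):
        self.max_chars = max_chars
        self.turns = []  # list of {"role": ..., "content": ...} dicts

    def add(self, role, content):
        self.turns.append({"role": role, "content": content})
        self._trim()

    def _size(self):
        return sum(len(turn["content"]) for turn in self.turns)

    def _trim(self):
        # Drop the oldest turns until the total fits the budget,
        # but always keep the latest turn.
        while len(self.turns) > 1 and self._size() > self.max_chars:
            self.turns.pop(0)

    def as_messages(self, system_prompt):
        """Return the system prompt followed by the remembered turns."""
        return [{"role": "system", "content": system_prompt}] + list(self.turns)

if __name__ == "__main__":
    memory = ConversationMemory(max_chars=10)
    memory.add("user", "12345")
    memory.add("assistant", "67890")
    memory.add("user", "abc")  # pushes the total over budget, oldest turn is dropped
    print(memory.as_messages("system prompt goes here"))
```

A real implementation would count tokens with the model's tokenizer and might summarise older turns instead of discarding them.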

Relevant content is currently matched by scanning every stored embedding (brute-force matching), which becomes inefficient as the data grows. A vector index or a vector database should be used instead.
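To illustrate what an index buys over a full scan, here is a minimal random-hyperplane LSH sketch in plain Python. It is a toy, not a recommendation: the class name `HyperplaneLSHIndex` and its parameters are invented for this example, and a production system would more likely rely on an existing vector index library or database.

```python
import math
import random

def _cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

class HyperplaneLSHIndex:
    """Approximate index: vectors with the same sign pattern share a bucket."""

    def __init__(self, dim, num_planes=8, seed=0):
        rng = random.Random(seed)
        self.planes = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_planes)]
        self.buckets = {}

    def _key(self, vec):
        # One bit per hyperplane: which side of the plane the vector lies on.
        return tuple(
            sum(p * v for p, v in zip(plane, vec)) >= 0 for plane in self.planes
        )

    def add(self, item_id, vec):
        self.buckets.setdefault(self._key(vec), []).append((item_id, vec))

    def query(self, vec, k=3):
        # Only the query's own bucket is scanned, not the whole collection.
        candidates = self.buckets.get(self._key(vec), [])
        ranked = sorted(candidates, key=lambda c: _cosine(vec, c[1]), reverse=True)
        return [item_id for item_id, _ in ranked[:k]]

if __name__ == "__main__":
    index = HyperplaneLSHIndex(dim=3)
    index.add("a", [1.0, 0.0, 0.0])
    index.add("b", [-1.0, 0.0, 0.0])
    print(index.query([2.0, 0.0, 0.0], k=1))
```

The trade-off is recall: a near neighbour that falls just across a hyperplane lands in a different bucket and is missed, which is why practical indexes use several hash tables or probe neighbouring buckets.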

Python writes the blob data in little-endian byte order, whereas Java reads big-endian by default (for example through ByteBuffer), so the two sides disagree unless the byte order is set explicitly. This is easy to overlook.
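One way to avoid the mismatch is to fix the byte order explicitly on the Python side. The sketch below assumes the embeddings are stored as 32-bit floats, which this post does not state; the helper names are hypothetical. The `>` prefix in the `struct` format string forces big-endian output, which matches the default order of Java's `ByteBuffer`.

```python
import struct

def pack_embedding_big_endian(values):
    """Serialize floats as 32-bit big-endian values."""
    return struct.pack(f">{len(values)}f", *values)

def unpack_embedding_big_endian(blob):
    """Inverse of pack_embedding_big_endian."""
    count = len(blob) // 4  # 4 bytes per 32-bit float
    return list(struct.unpack(f">{count}f", blob))

if __name__ == "__main__":
    blob = pack_embedding_big_endian([1.0, 2.0])
    print(blob.hex())                  # big-endian bytes
    print(struct.pack("<f", 1.0).hex())  # little-endian bytes of 1.0, for comparison
    print(unpack_embedding_big_endian(blob))
```

The alternative is to leave the Python side little-endian and switch the Java reader with `ByteBuffer.order(ByteOrder.LITTLE_ENDIAN)`; either works as long as both sides agree.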

Store the prompts in SQL so they are easy to modify.

Have the bot relate unrelated questions back to luguanxing.

Introduce multiple models and build a group-chat feature.

Use Spring AI to streamline the implementation, with MySQL recording the conversation context.