
Building a Custom GPT Knowledge Base Bot

I built a knowledge base bot on top of embeddings. Given a question, it finds the relevant content in the knowledge base and uses it to compose an answer.

Background

When people learn a particular field, contributors have to organize the documentation, and anyone looking for an answer has to read through it. Finding even a small piece of information can mean digging through a lot of material, which is tedious. Contributors sometimes compile FAQs, but that is still not very efficient. This is where GPT can act as a capable AI assistant: it can learn from and organize the contents of a knowledge base and answer questions about it, which greatly improves efficiency for both contributors and readers.

I wanted a knowledge base bot of my own, one that acts as a dedicated customer-service agent and answers all kinds of questions, so I looked into the possible approaches:

Fine-tuning

Fine-tuning means taking a pre-trained large language model and training it further on an additional, domain-specific dataset. This focuses the model on a particular knowledge area and makes it better suited to specific tasks or scenarios.

Advantages

High specialization: after fine-tuning, the model fits the target domain more closely, so it understands industry terminology better and handles domain-specific problems better.

Higher accuracy: on a specific domain or task, a fine-tuned model usually outperforms a general-purpose model.

Disadvantages

High computational cost: fine-tuning requires substantial compute, especially for large models.

Data sensitivity: fine-tuning needs a large amount of domain data, and collecting, processing, and storing that data may raise privacy or compliance issues.

Hard to update: once fine-tuning is done, the model's knowledge cannot be updated in real time; adding new knowledge means retraining or further adjusting the model.

Knowledge Base Integration with Retrieval-Augmented Generation (RAG)

An embedding represents a piece of text as a high-dimensional vector, so that texts with similar meanings end up with nearby vectors. When a knowledge base is built on embeddings, the model itself is not fine-tuned. Instead, the system first retrieves the knowledge base content relevant to the question, and the model then generates an answer from that retrieved context. The advantage of this approach is that the knowledge base can be updated dynamically without retraining the model.
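The post does not say which similarity measure the retrieval step uses; cosine similarity is a common choice for embeddings. Under that assumption, with a query vector $\mathbf{q}$ and knowledge base vectors $\mathbf{d}_1, \dots, \mathbf{d}_n$ (notation introduced here for illustration), each passage is scored and the top k are kept:

$$
\operatorname{sim}(\mathbf{q}, \mathbf{d}_i) = \frac{\mathbf{q} \cdot \mathbf{d}_i}{\lVert \mathbf{q} \rVert \, \lVert \mathbf{d}_i \rVert}
$$

$$
\mathcal{C}_k(\mathbf{q}) = \text{the } k \text{ passages } i \text{ with the largest } \operatorname{sim}(\mathbf{q}, \mathbf{d}_i)
$$

If every vector is normalized to unit length, the denominator equals 1 and the score reduces to a dot product, which is why normalizing vectors once at indexing time is a common optimization.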

Advantages

Real-time updates: the knowledge base can be updated or extended at any time without retraining the model. For example, a new document can be converted directly into vectors and added to the vector database.

Lower computational cost: unlike fine-tuning, the embedding approach involves no model training. It only needs to generate and store vectors, so it requires far less compute.

High flexibility: content can easily be added to or removed from the knowledge base, which suits knowledge bases that change frequently.

Disadvantages

Shallow domain understanding: retrieval works by similarity matching, so the model's answers depend mainly on the retrieved content rather than on a deep understanding of the domain.

Answer quality is bounded by retrieval: if the retrieved content is inaccurate or incomplete, the generated answer suffers accordingly.

Because my resources are limited (read: I'm broke) and I have no GPU, I implemented the solution with embeddings plus an external GPT API.

Principle

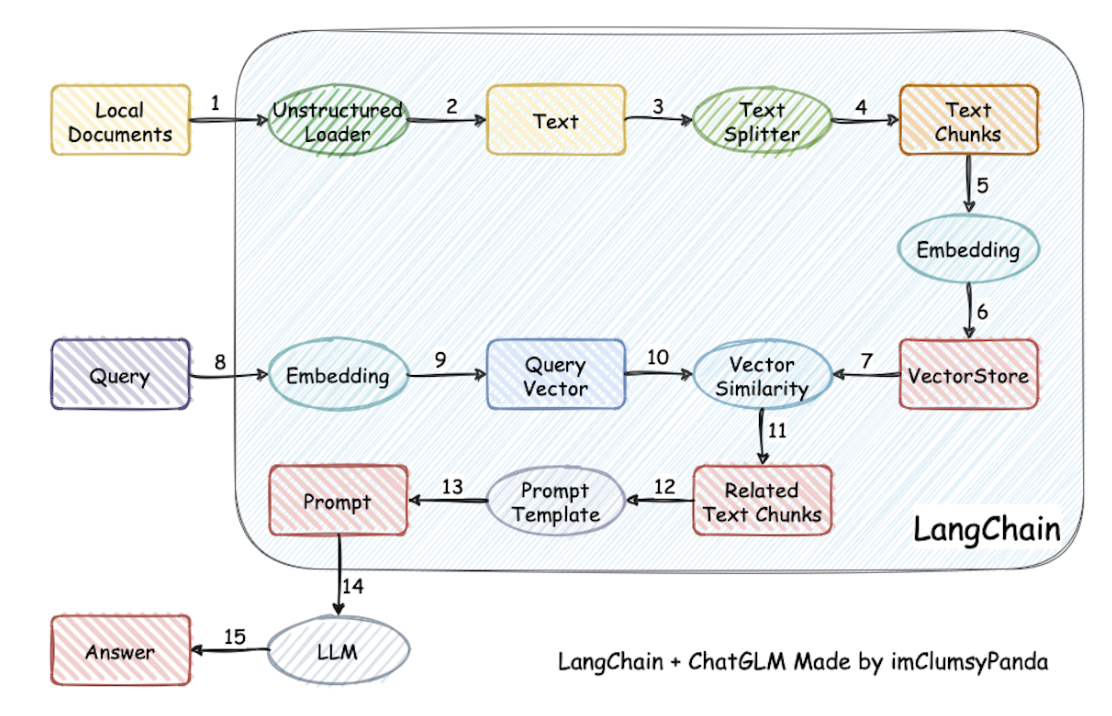

Following the ideas behind LangChain, I implemented a framework for a Q&A application over a local knowledge base. The core principle is as follows:

Knowledge Base Preparation

Load files -> Read text -> Split text -> Convert text to vectors
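The first steps of this pipeline (loading files, reading text, and splitting it) could look like the sketch below. It assumes the knowledge base is a folder of UTF-8 `.txt` files and splits on Chinese and English sentence-ending punctuation; `load_texts` and `split_sentences` are hypothetical names, not taken from the author's code.

```python
import re
from pathlib import Path

# Split after Chinese full-width terminators (。！？；) unconditionally, and after
# ASCII terminators (.!?;) only when whitespace follows, so "3.14" stays intact.
_SENTENCE_BOUNDARY = re.compile(r"(?<=[。！？；])|(?<=[.!?;])\s+")


def load_texts(folder: str, pattern: str = "*.txt") -> list[str]:
    """Load files -> read text: return the contents of every matching file, sorted by path."""
    return [p.read_text(encoding="utf-8") for p in sorted(Path(folder).glob(pattern))]


def split_sentences(text: str) -> list[str]:
    """Split text into sentences, dropping empty or whitespace-only pieces."""
    return [s.strip() for s in _SENTENCE_BOUNDARY.split(text) if s.strip()]
```

Splitting by sentence keeps each retrieved unit small and focused; for longer documents, fixed-size chunks with some overlap are a common alternative.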

Answering Questions

Convert the question to a vector -> Find the top k text vectors most similar to the question vector -> Add the matched text to the prompt as context, together with the question -> Submit the prompt to the LLM to generate an answer
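The prompt-assembly step can be sketched as a plain string template. The instruction wording and the name `build_prompt` are assumptions for illustration; the author's actual prompt appears only in the screenshots.

```python
def build_prompt(question: str, contexts: list[str]) -> str:
    """Combine the retrieved top-k passages and the user's question into one prompt."""
    # Number the passages so the model (and a human reader) can tell them apart.
    numbered = "\n".join(f"{i}. {c}" for i, c in enumerate(contexts, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say you don't know.\n\n"
        f"Context:\n{numbered}\n\n"
        f"Question: {question}\nAnswer:"
    )
```

Telling the model to admit when the context lacks an answer is one way to limit answers that are not backed by the knowledge base.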

Code

First, configure the relevant parameters. Because the official GPT API is not accessible from mainland China, I used a third-party API provider.

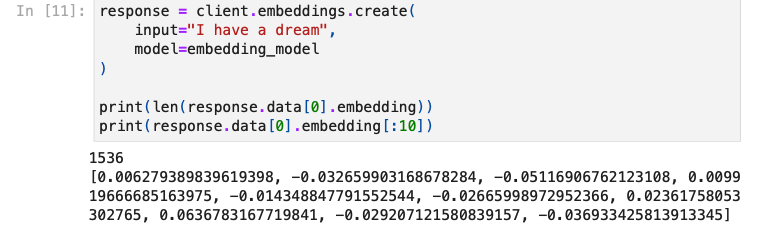

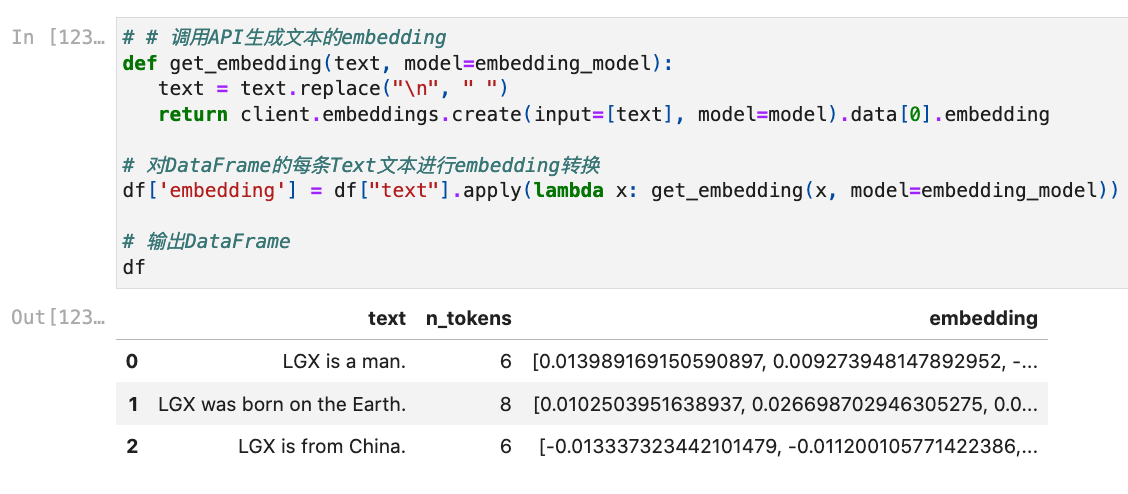
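The screenshots show the author's configuration. A minimal sketch of the same idea, assuming the credentials come from environment variables: `OPENAI_API_KEY` and `OPENAI_BASE_URL` are the variables the official `openai` Python SDK (v1 and later) reads by default, while `EMBEDDING_MODEL`, `CHAT_MODEL`, and the default model names are hypothetical choices.

```python
import os
from dataclasses import dataclass

# Placeholder model names (assumptions); use whatever the third-party provider supports.
DEFAULT_EMBEDDING_MODEL = "text-embedding-ada-002"
DEFAULT_CHAT_MODEL = "gpt-3.5-turbo"


@dataclass(frozen=True)
class Settings:
    api_key: str
    base_url: str
    embedding_model: str
    chat_model: str


def load_settings() -> Settings:
    """Read connection settings from environment variables instead of hard-coding secrets."""
    return Settings(
        api_key=os.environ["OPENAI_API_KEY"],  # raises KeyError if the key is missing
        # Defaults to the official endpoint; point it at the third-party provider's URL.
        base_url=os.environ.get("OPENAI_BASE_URL", "https://api.openai.com/v1"),
        embedding_model=os.environ.get("EMBEDDING_MODEL", DEFAULT_EMBEDDING_MODEL),
        chat_model=os.environ.get("CHAT_MODEL", DEFAULT_CHAT_MODEL),
    )
```

With the `openai` package installed, `OpenAI(api_key=s.api_key, base_url=s.base_url)` creates a client that sends requests to the configured endpoint, provided the third-party service is OpenAI-compatible.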

Next, I tested the embedding encoding, that is, converting sentences into vectors.

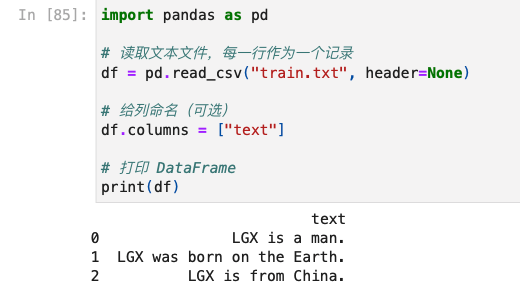
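The embedding call can be wrapped in a small helper. This sketch assumes an `openai.OpenAI` client (SDK v1+) pointed at an OpenAI-compatible endpoint and uses `text-embedding-ada-002` as a placeholder model; `embed_texts` is a hypothetical name. The client is passed in rather than created inside the function, so the helper can be tested without network access.

```python
def embed_texts(client, texts: list[str], model: str = "text-embedding-ada-002") -> list[list[float]]:
    """Convert sentences into vectors via an OpenAI-compatible embeddings endpoint.

    `client` is assumed to behave like `openai.OpenAI` (SDK v1+), which provides
    `client.embeddings.create(model=..., input=...)`.
    """
    response = client.embeddings.create(model=model, input=texts)
    # Each returned item records the position of its input; sort so vectors match `texts`.
    items = sorted(response.data, key=lambda item: item.index)
    return [item.embedding for item in items]
```

For example, `embed_texts(client, ["What is LGX?"])` returns a list containing one vector; with `text-embedding-ada-002`, each vector has 1536 dimensions.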

Next, I prepared the knowledge base: a simple .txt document containing information about LGX.

Then I "trained" the knowledge base. No model is actually trained here: the text is split into pieces and each piece is converted into a vector.

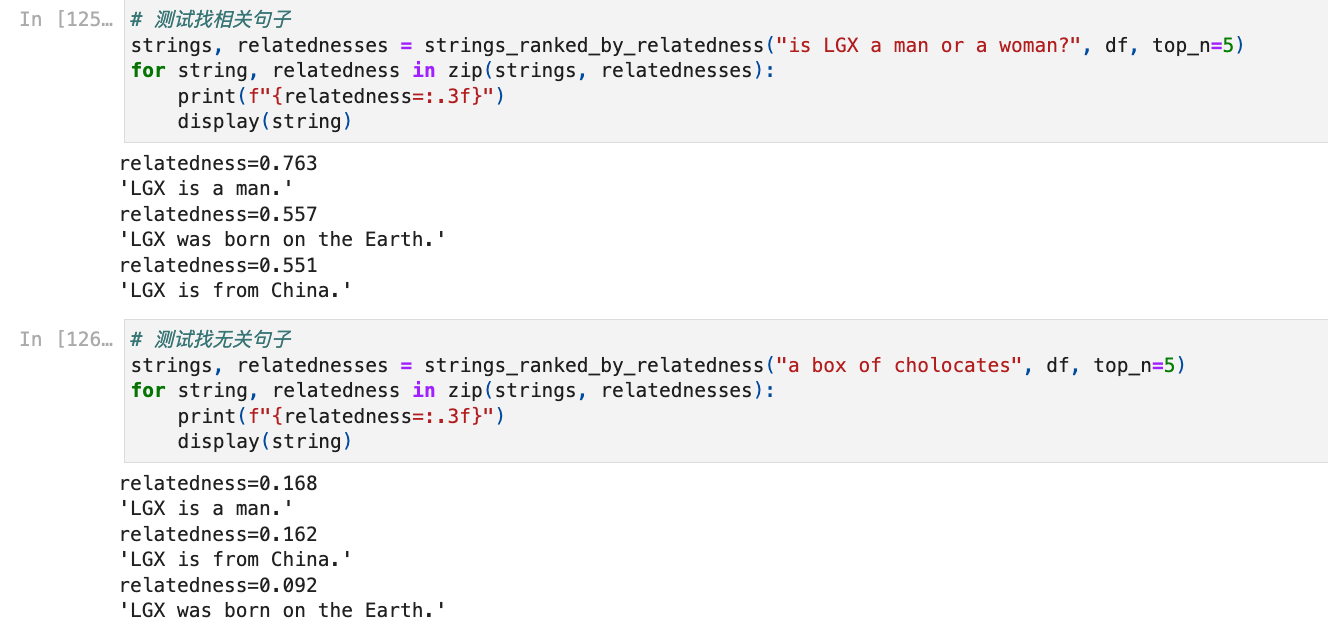
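In an embedding-based setup, "training" the knowledge base amounts to embedding every sentence once and storing the results. A minimal sketch, assuming a pluggable `embed` function (for example, a function that calls the embeddings API) and a JSON file as the storage format; `build_index`, `save_index`, and `load_index` are hypothetical names.

```python
import json
import math
from typing import Callable

Index = list[tuple[str, list[float]]]


def normalize(vec: list[float]) -> list[float]:
    """Scale a vector to unit length (a zero vector is returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else list(vec)


def build_index(sentences: list[str], embed: Callable[[list[str]], list[list[float]]]) -> Index:
    """Embed every sentence once and keep (sentence, unit vector) pairs."""
    vectors = embed(sentences)
    return [(s, normalize(v)) for s, v in zip(sentences, vectors)]


def save_index(index: Index, path: str) -> None:
    """Persist the index as JSON so the embeddings API is not called again on the next run."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump([{"text": s, "vector": v} for s, v in index], f, ensure_ascii=False)


def load_index(path: str) -> Index:
    """Read an index previously written by save_index."""
    with open(path, encoding="utf-8") as f:
        return [(row["text"], row["vector"]) for row in json.load(f)]
```

Normalizing at indexing time means later similarity searches only need a dot product, and a file (or a proper vector database) avoids re-embedding the whole knowledge base each time the bot starts.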

To test retrieval, I entered a few arbitrary sentences, converted them into vectors, and retrieved the top-k most similar sentences from the knowledge base.

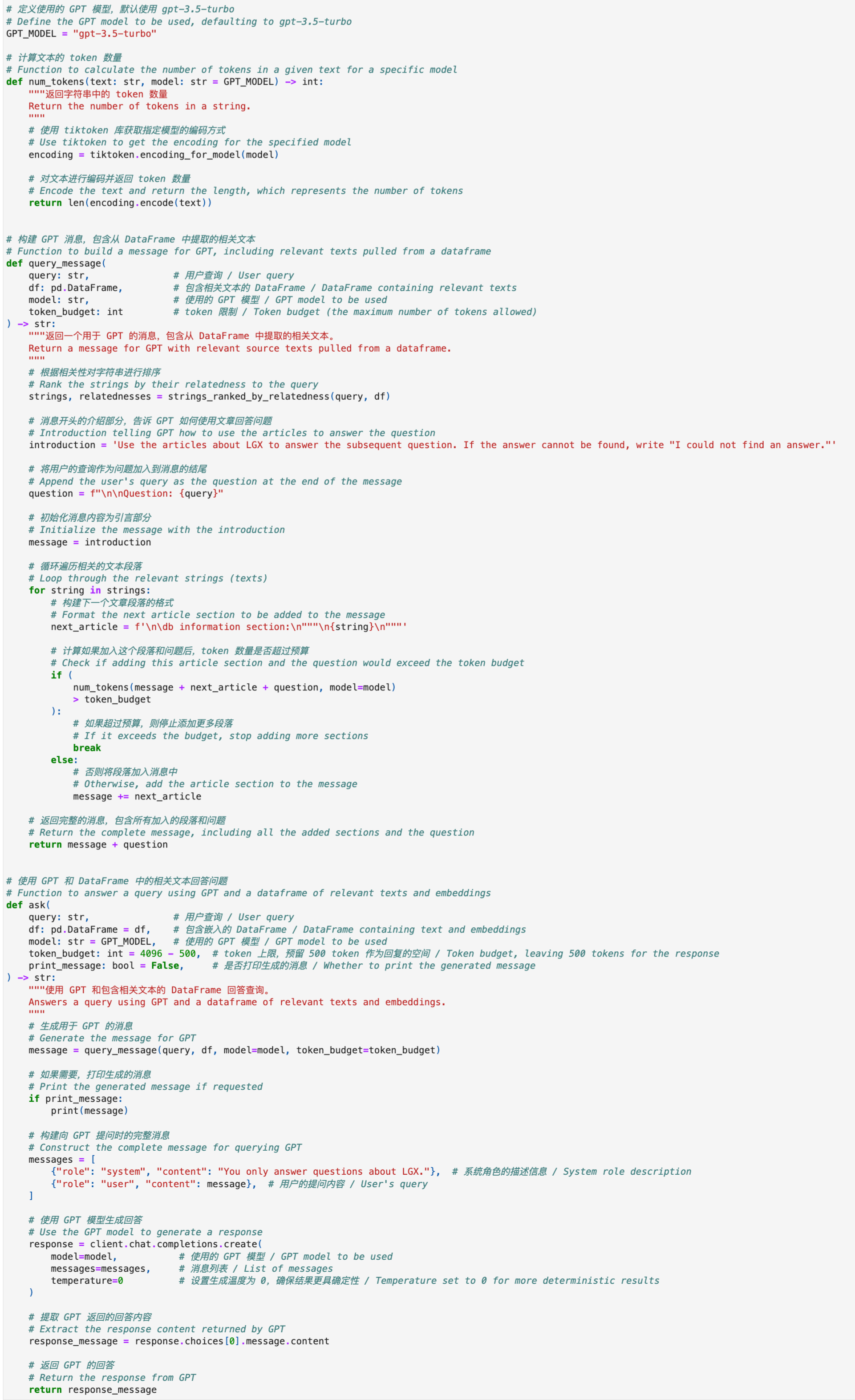
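The retrieval test boils down to scoring every stored sentence against the query vector and keeping the k best. This sketch assumes cosine similarity and a linear scan over an in-memory list of `(sentence, vector)` pairs; for a large knowledge base, a vector database or an approximate nearest-neighbor library would replace the scan. `cosine` and `top_k` are hypothetical names.

```python
import heapq
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query_vec: list[float], index: list[tuple[str, list[float]]], k: int = 3) -> list[tuple[str, float]]:
    """Return the k (sentence, score) pairs most similar to the query, best first."""
    scored = ((text, cosine(query_vec, vec)) for text, vec in index)
    # nlargest avoids sorting the whole index when only k results are needed.
    return heapq.nlargest(k, scored, key=lambda pair: pair[1])
```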

Finally, I cleaned up the code, wrapped it in functions, combined the retrieved sentences with a prompt, and instructed GPT to return an answer.

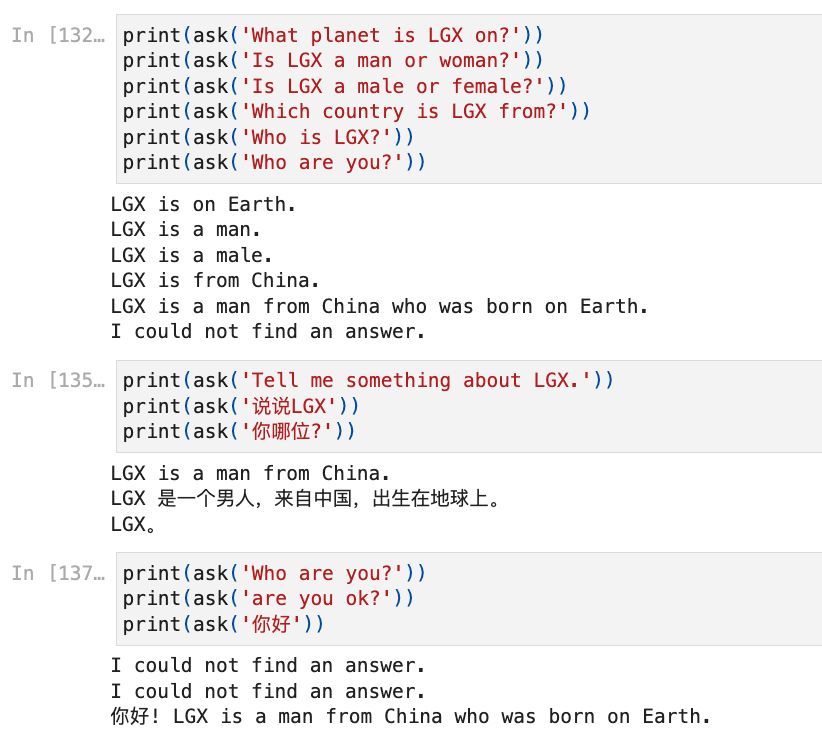
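The final call to GPT can be sketched as below, assuming an `openai.OpenAI` client (SDK v1+) and a chat model such as `gpt-3.5-turbo` (a placeholder, not necessarily the model the author used). The system message and the name `ask_gpt` are illustrative.

```python
def ask_gpt(client, prompt: str, model: str = "gpt-3.5-turbo", temperature: float = 0.0) -> str:
    """Send the assembled prompt to a chat model and return the reply text.

    `client` is assumed to behave like `openai.OpenAI` (SDK v1+), which provides
    `client.chat.completions.create(model=..., messages=..., temperature=...)`.
    """
    response = client.chat.completions.create(
        model=model,
        messages=[
            # Hypothetical system message describing the bot's role.
            {"role": "system", "content": "You are a customer-service assistant for a private knowledge base."},
            {"role": "user", "content": prompt},
        ],
        temperature=temperature,
    )
    return response.choices[0].message.content
```

A low temperature reduces randomness in the output, a common choice when answers should stick to the supplied context.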

I then tested the results.