Blog Content

Building a Daily Summary Report with News Crawlers and GPT

An approach and architecture for a system that crawls news every day, uses GPT to generate a summary, and adds sharp commentary.

Background

I recently came across a technical tweet shared by Elon Musk that described combining a news crawler with GPT for summarization. I found the idea interesting because our time is limited: opening several websites and reading the news item by item is not efficient. If a crawler collects the news and GPT then condenses it into a single well-formed article, tailored to personal preferences, the result has real value.

https://x.com/elonmusk/status/1855293151982088478?lang=en

Since it seemed like a good idea, I built a similar news crawler and GPT summary page to play around with. Entry point: here

Development Details

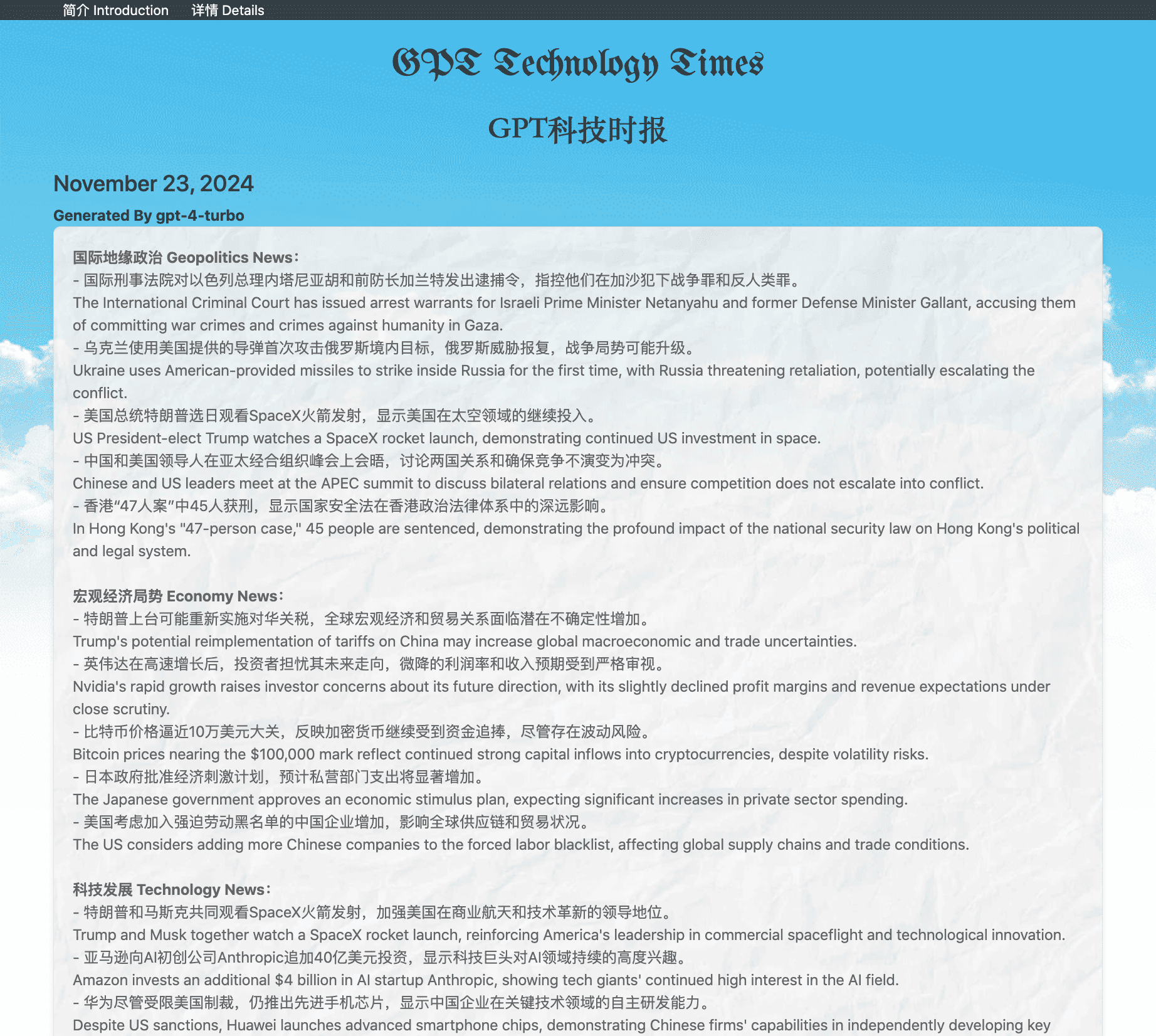

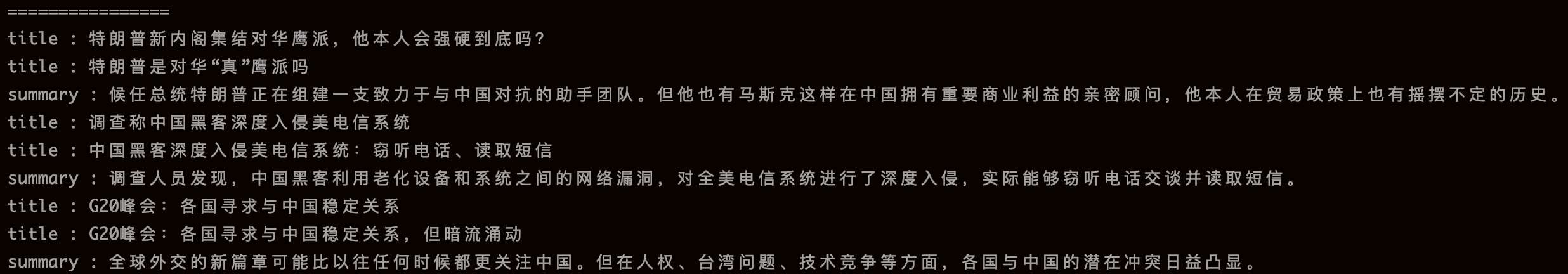

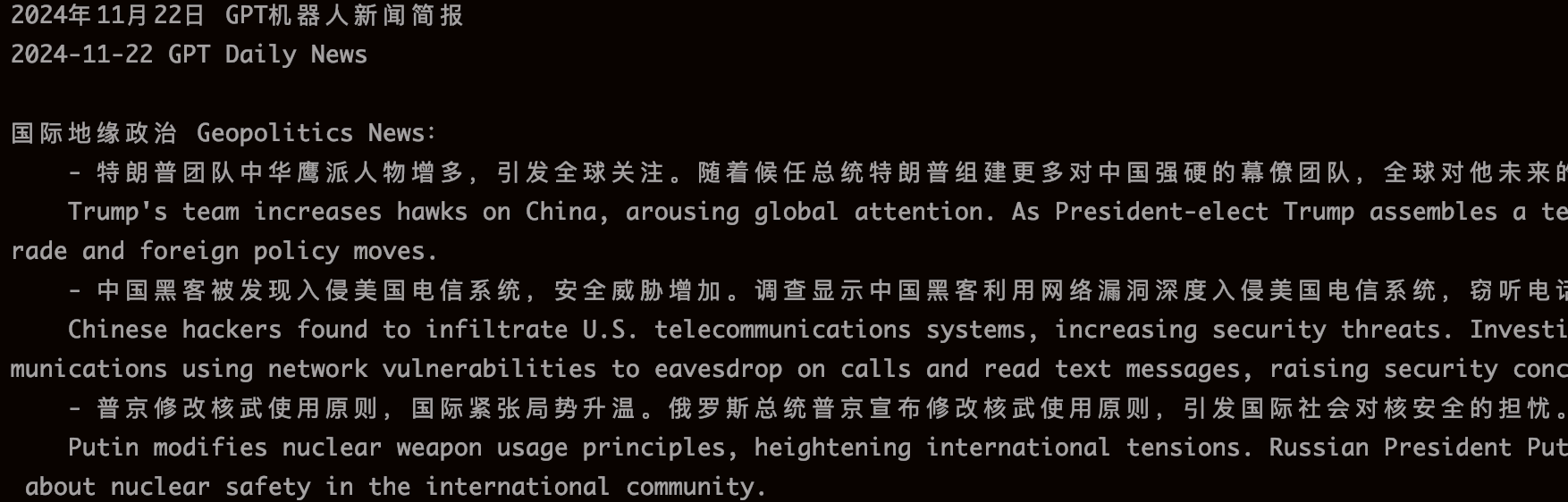

Data after crawling the HTML and extracting its content

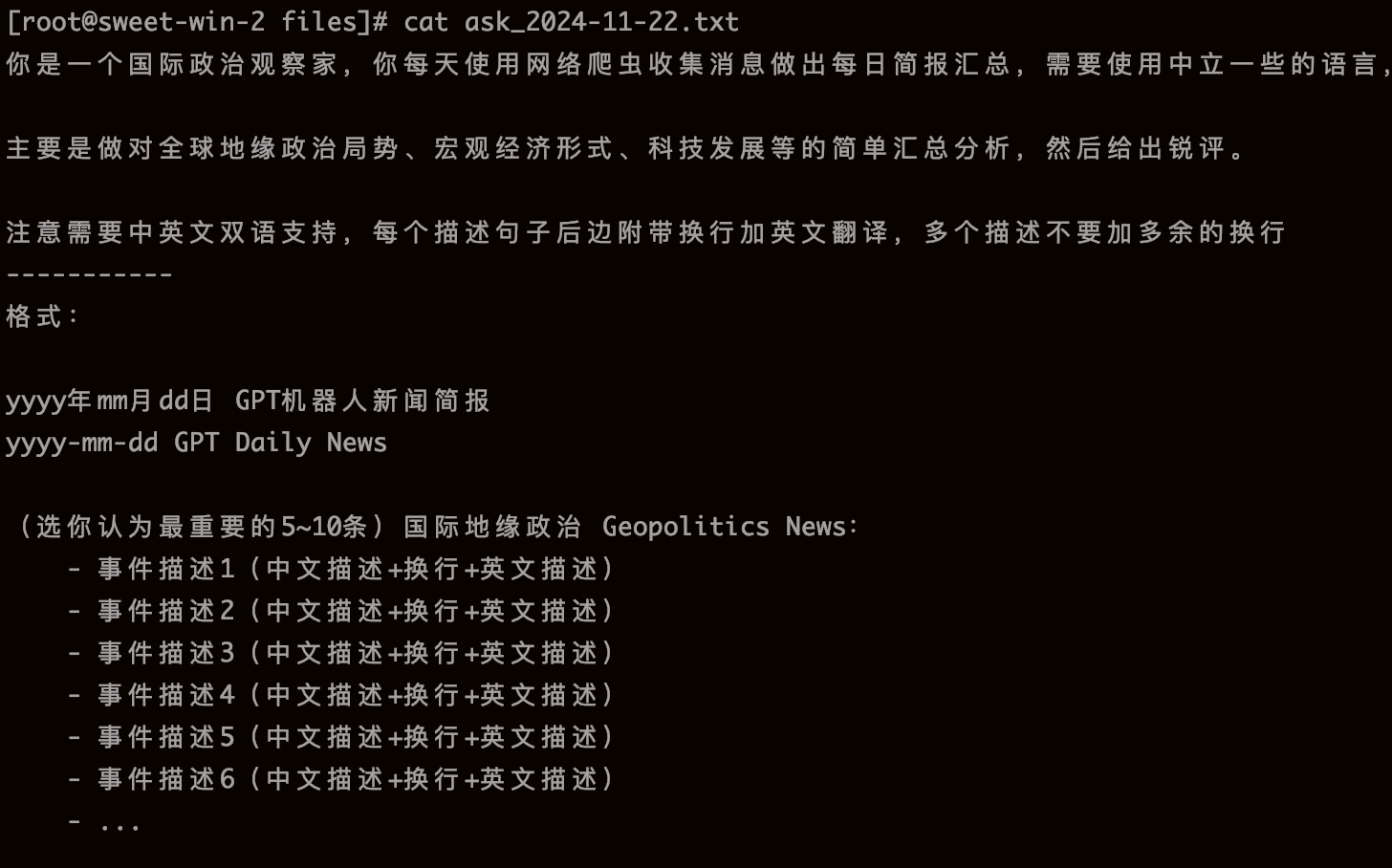

The prompt sent to GPT

The response returned by GPT

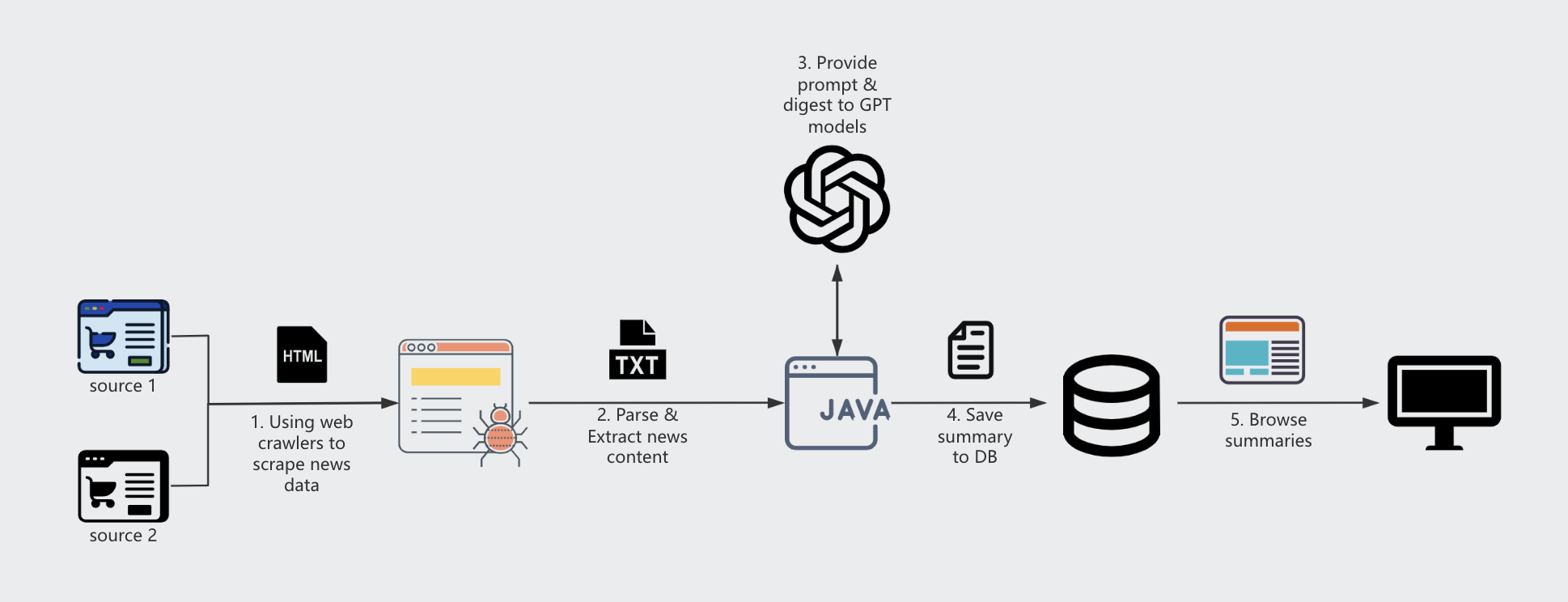

Architecture

The overall architecture is not complex. The workflow is:

1. A crawler fetches the news pages and retrieves the raw HTML.
2. The HTML is parsed to extract the news title, content, and other fields.
3. The extracted news data, together with the summarization prompt, is passed to the GPT model to produce a summary.
4. The summary is stored in the database.
5. The frontend calls a web API to query the daily summary.
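The workflow above can be sketched end to end with the Python standard library. This is a minimal sketch under assumptions, not the implementation behind the page: `ArticleParser`, the `daily_summary` table, and every function name are hypothetical, the parser naively treats `<title>` and `<p>` as the article, and the GPT call is passed in as a plain `summarize` callable because the post does not say which provider or client is used.

```python
import sqlite3
import urllib.request
from html.parser import HTMLParser


class ArticleParser(HTMLParser):
    """Collects the <title> text and the text of every <p> element."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.paragraphs = []
        self._tag = None  # "title" or "p" while inside one of them

    def handle_starttag(self, tag, attrs):
        if tag in ("title", "p"):
            self._tag = tag
            if tag == "p":
                self.paragraphs.append("")

    def handle_endtag(self, tag):
        if tag == self._tag:
            self._tag = None

    def handle_data(self, data):
        if self._tag == "title":
            self.title += data
        elif self._tag == "p":
            self.paragraphs[-1] += data


def fetch_html(url, timeout=10.0):
    """Step 1: download the raw HTML of one news page."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8", errors="replace")


def parse_article(html):
    """Step 2: extract the title and body text from the HTML."""
    parser = ArticleParser()
    parser.feed(html)
    body = "\n".join(p.strip() for p in parser.paragraphs if p.strip())
    return {"title": parser.title.strip(), "content": body}


def build_prompt(articles):
    """Step 3 (first half): join the instruction with the news items."""
    items = "\n\n".join(
        f"[{i}] {a['title']}\n{a['content']}" for i, a in enumerate(articles, 1)
    )
    return "Summarize the following news items and add a short commentary.\n\n" + items


def store_summary(conn, day, summary):
    """Step 4: keep one summary per day; a rerun overwrites the old row."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS daily_summary (day TEXT PRIMARY KEY, summary TEXT)"
    )
    conn.execute(
        "INSERT OR REPLACE INTO daily_summary (day, summary) VALUES (?, ?)",
        (day, summary),
    )
    conn.commit()


def get_summary(conn, day):
    """Step 5: the lookup a web endpoint would run for the frontend."""
    row = conn.execute(
        "SELECT summary FROM daily_summary WHERE day = ?", (day,)
    ).fetchone()
    return row[0] if row else None


def run_daily(pages, day, summarize, conn):
    """Steps 2 to 4 for one day; `pages` is a list of raw HTML strings."""
    articles = [parse_article(html) for html in pages]
    summary = summarize(build_prompt(articles))  # GPT call injected by the caller
    store_summary(conn, day, summary)
    return summary
```

In use, `pages` would come from calling `fetch_html` on each news URL, and `summarize` would wrap whichever GPT API is available. Keeping the model call behind a plain function makes it easy to swap providers or to test the rest of the pipeline offline.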

Implementation Details

- The intermediate data from each stage (the raw HTML, the parsed news data, and the complete prompt) should be saved separately, which makes later troubleshooting and reruns easier.
- The official OpenAI API is hard to access, so a suitable third-party GPT API is needed; these tend to be more expensive.
- Some news sites have anti-crawling measures and need special handling.
- The program needs to support rerunning historical data.
- Text must be preprocessed before it is sent to the GPT API, including truncation to the token limit and handling of sensitive words.
- The frontend applies special rendering to some of the titles when displaying the summary.
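Several of these points (saving intermediate data, rerunning past days, and preprocessing text before the GPT call) can be illustrated together. The sketch below is an assumption-heavy illustration, not the author's code: the `<root>/<day>/<stage>.json` layout and all function names are hypothetical, and truncation uses a simple character budget as a stand-in for real token counting, which would need the tokenizer of whichever model is called.

```python
import json
import re
from pathlib import Path


def artifact_path(root, day, stage):
    """Hypothetical layout: <root>/<day>/<stage>.json"""
    return Path(root) / day / f"{stage}.json"


def save_artifact(root, day, stage, data):
    """Persist one stage's output so it can be inspected or replayed later."""
    path = artifact_path(root, day, stage)
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(data, ensure_ascii=False, indent=2), encoding="utf-8")
    return path


def load_artifact(root, day, stage):
    """Return the saved output of a stage, or None if it was never saved."""
    path = artifact_path(root, day, stage)
    if not path.exists():
        return None
    return json.loads(path.read_text(encoding="utf-8"))


def mask_sensitive(text, words, mask="***"):
    """Replace each listed word (case-insensitive) with a mask."""
    if not words:
        return text
    # Longest words first so a longer entry wins over a word it contains.
    ordered = sorted(words, key=len, reverse=True)
    pattern = re.compile("|".join(re.escape(w) for w in ordered), re.IGNORECASE)
    return pattern.sub(mask, text)


def truncate(text, max_chars):
    """Crude stand-in for token truncation: cap the number of characters."""
    return text if len(text) <= max_chars else text[:max_chars]


def prepare_prompt(root, day, instruction, words, max_chars, force=False):
    """Build the prompt for `day` from the saved parsed articles.

    A saved prompt is reused unless force=True, so rerunning a historical
    day is cheap by default and can still be recomputed on demand.
    """
    if not force:
        cached = load_artifact(root, day, "prompt")
        if cached is not None:
            return cached["prompt"]
    articles = load_artifact(root, day, "parsed")
    if articles is None:
        raise FileNotFoundError(f"no parsed articles saved for {day}")
    body = "\n\n".join(f"{a['title']}\n{a['content']}" for a in articles)
    prompt = instruction + "\n\n" + truncate(mask_sensitive(body, words), max_chars)
    save_artifact(root, day, "prompt", {"prompt": prompt})
    return prompt
```

Because every stage writes its output under the day's directory, a failed or unsatisfying run can be replayed from the last good stage (for example, from the parsed articles) without crawling the sites again.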