Deploying a Real-Time Lakehouse Big Data Environment Using Docker

Using Docker to set up an HDFS + Flink + Paimon unified streaming and batch environment, and adding StarRocks for data querying

Overview

When developing Flink jobs, you often need a reasonably reliable cluster environment. Previously, I installed and ran HDFS NameNode, HDFS DataNode, Flink, and other components separately on my Mac. The dependencies were complex, and reproducing the setup on another machine turned out to be hard. So I switched to Docker Compose (which turned out not to be simple either). This post records how I installed HDFS, Flink, and the other components with Docker and got them talking to each other.

Flink → Paimon → StarRocks forms a modern, high-performance, and stream-batch unified data pipeline. It not only supports real-time data processing but also enables efficient offline analytics, making it an ideal solution for building enterprise-level lakehouse architectures.

Flink (stream processing engine) → Paimon table writes (stored on HDFS in Parquet format) → StarRocks query or ingestion (for high-speed analytics)

Flink: Responsible for stream processing, real-time computation, data cleaning, aggregation, etc.

Paimon on HDFS: Serves as the lake storage format, supporting primary keys, incremental updates, and stream-batch unified writes.

StarRocks: An MPP analytical database for both real-time and batch analytics, with very high query performance.

1. Installing Docker

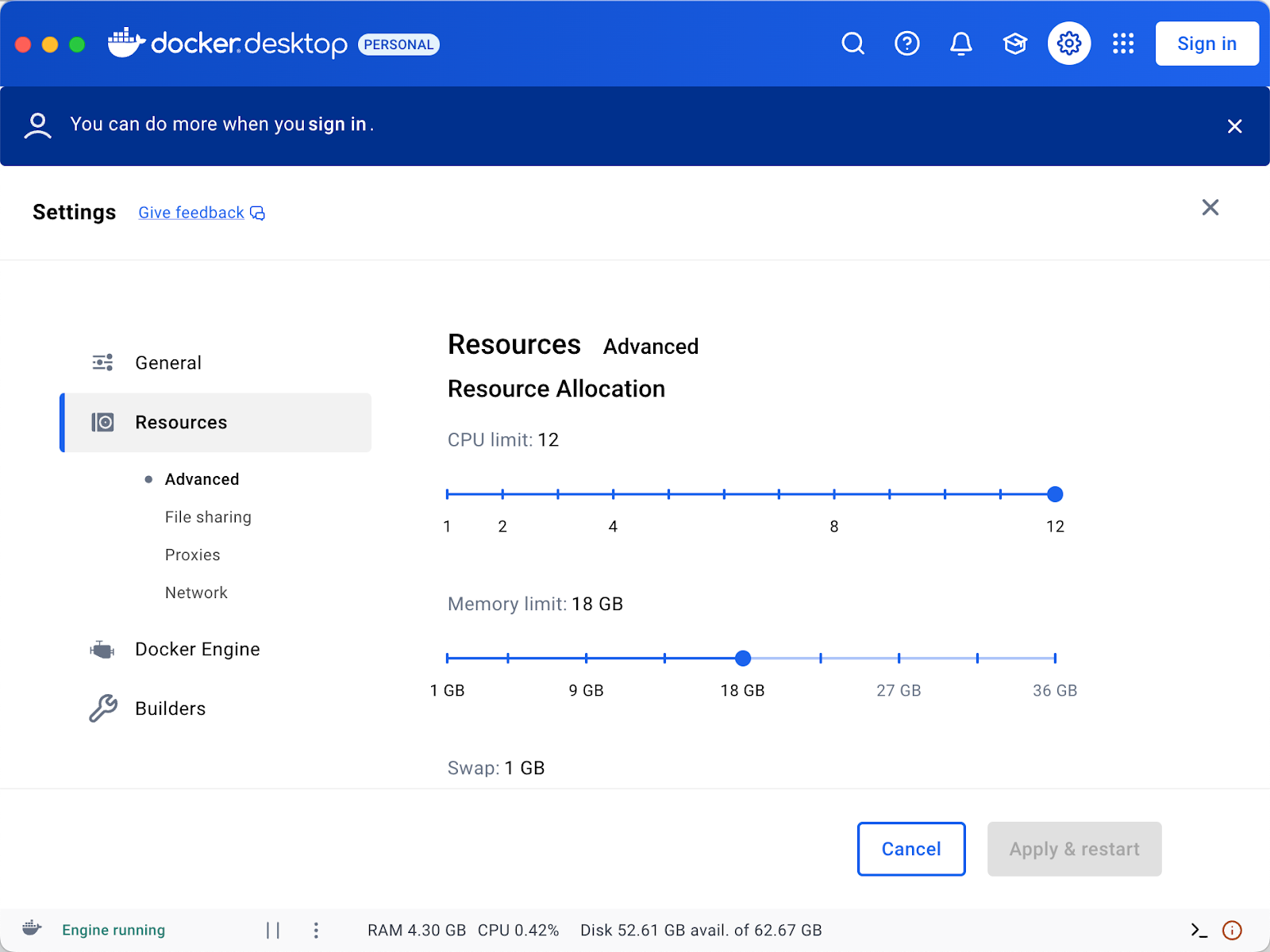

First, install Docker. I chose Docker Desktop, which provides a graphical interface and ships with the Docker CLI. After installing it, allocate some CPU and memory to Docker.

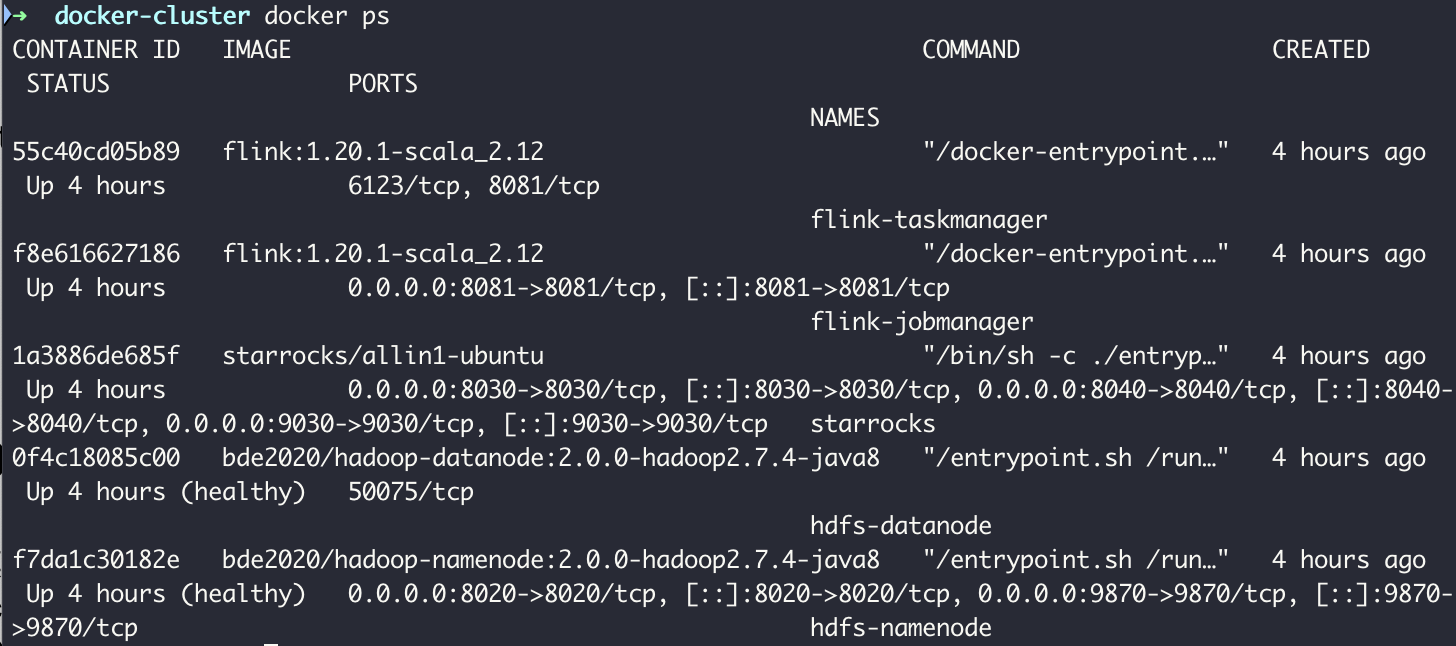

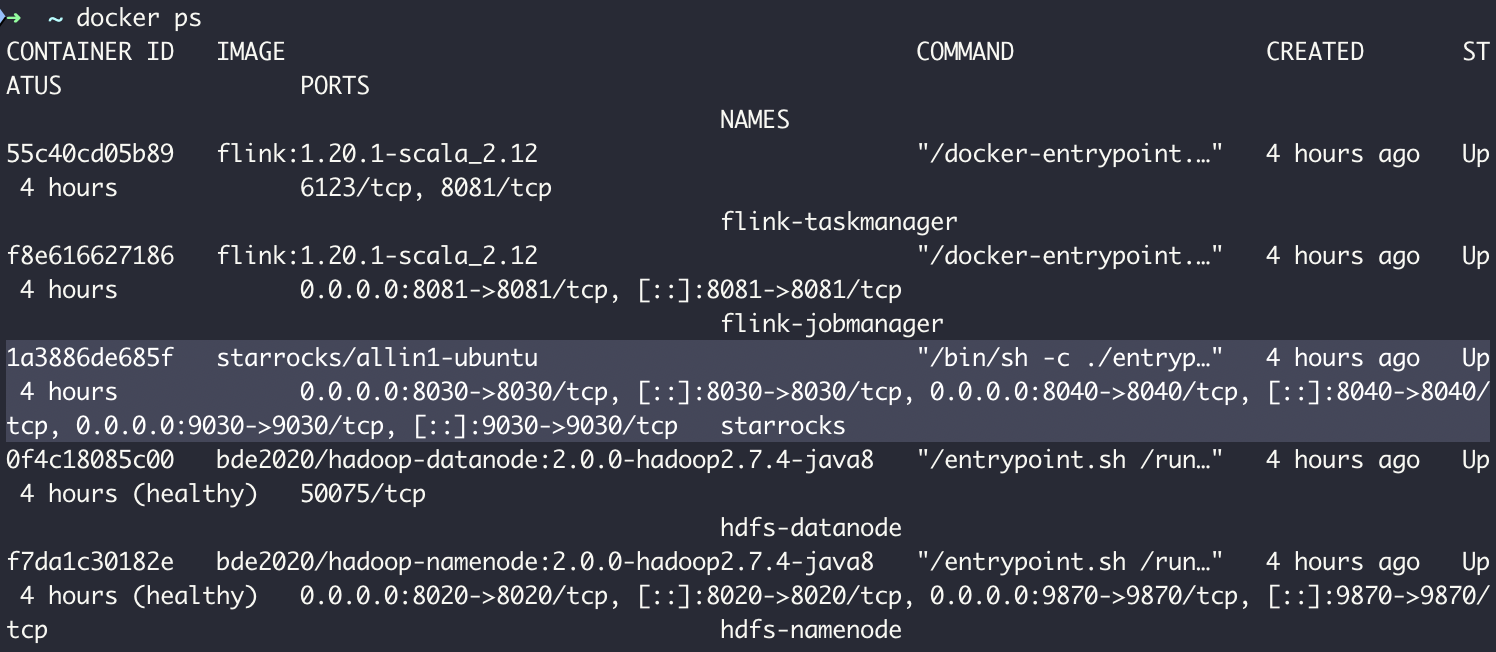

Once everything is installed, run docker ps to list the running containers. The screenshot shows the containers on my machine after installing Flink and HDFS.
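You can also check the whole stack with a small helper instead of reading the `docker ps` table by eye. It reports which of this post's containers are not running. This is a minimal sketch: `missing_containers` is a hypothetical name, and the expected names are assumed to be the `container_name` values used in the compose snippets of this post.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read running container names on stdin (one per line)
# and print every expected name (passed as arguments) that is not among them.
missing_containers() {
  local running name
  running=$(cat)
  for name in "$@"; do
    if ! printf '%s\n' "$running" | grep -qxF -- "$name"; then
      printf '%s\n' "$name"
    fi
  done
}

# Usage (requires Docker); names are the container_name values from docker-compose.yml:
# docker ps --format '{{.Names}}' \
#   | missing_containers hdfs-namenode hdfs-datanode flink-jobmanager flink-taskmanager starrocks
```

Empty output means every expected container is up.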

2. Installing HDFS

Most docker-compose.yml files found online or provided by GPT contain issues, such as mismatched configurations in the config files, or the DataNode failing to start. Here, I provide a correct template.

```yaml
# Named volumes must be declared at the top level; Compose rejects undefined ones.
volumes:
  namenode-data:
  datanode-data:

services:
  # HDFS NameNode
  hdfs-namenode:
    image: bde2020/hadoop-namenode:2.0.0-hadoop2.7.4-java8
    container_name: hdfs-namenode
    ports:
      - "8020:8020"     # HDFS RPC
      - "9870:9870"     # Hadoop 3.x web UI port
      - "50070:50070"   # NameNode web UI on Hadoop 2.x (this image)
    environment:
      - CLUSTER_NAME=test
    volumes:
      - namenode-data:/hadoop/dfs/name

  # HDFS DataNode
  hdfs-datanode:
    image: bde2020/hadoop-datanode:2.0.0-hadoop2.7.4-java8
    container_name: hdfs-datanode
    depends_on:
      - hdfs-namenode
    environment:
      - CLUSTER_NAME=test
      - CORE_CONF_fs_defaultFS=hdfs://hdfs-namenode:8020
    volumes:
      - datanode-data:/hadoop/dfs/data
```

After launching with docker compose up -d, first check whether NameNode and DataNode have started correctly using docker ps. Then, use docker logs hdfs-namenode and docker logs hdfs-datanode to check for errors in the logs.
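`docker logs` sends the container's stderr to your terminal's stderr, so you need `2>&1` to pipe it into `grep`. The sketch below filters the logs for likely problems. It also checks that the DataNode actually registered with the NameNode, using `hdfs dfsadmin -report`. The grep patterns are only a rough heuristic. `live_datanodes` is a hypothetical helper that assumes the report contains a line of the form `Live datanodes (N):`.

```shell
# Print only suspicious log lines (rough heuristic; adjust the patterns as needed).
log_problems() {
  grep -E 'ERROR|FATAL|Exception' || true
}

# Hypothetical helper: extract N from the "Live datanodes (N):" line of
# `hdfs dfsadmin -report`; prints 0 if no such line is found.
live_datanodes() {
  local n
  n=$(sed -n 's/^Live datanodes (\([0-9][0-9]*\)):.*/\1/p' | head -n 1)
  printf '%s\n' "${n:-0}"
}

# Usage (requires the running containers):
# docker logs hdfs-namenode 2>&1 | log_problems
# docker logs hdfs-datanode 2>&1 | log_problems
# docker exec hdfs-namenode hdfs dfsadmin -report | live_datanodes   # one DataNode is defined above
```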

If there are no errors, use docker exec -it hdfs-namenode /bin/bash to enter the NameNode container and perform file operations, such as uploading files.

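```shell
# Inside the NameNode container: create a small CSV file
cat <<EOF > /tmp/sample.csv
id,name
1,Alice
2,Bob
EOF

# Upload it to HDFS (-f overwrites an existing copy, so the step can be rerun) and verify
hdfs dfs -mkdir -p /user/flink/input
hdfs dfs -put -f /tmp/sample.csv /user/flink/input/
hdfs dfs -ls /user/flink/input/
hdfs dfs -cat /user/flink/input/sample.csv
```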

3. Installing Flink

Installing Flink involves configuring both JobManager and TaskManager. Note that they require a significant amount of memory to start. Additionally, Flink often needs extra dependency components. Here, I take Paimon as an example and attempt to add HDFS + Paimon dependencies to Flink.

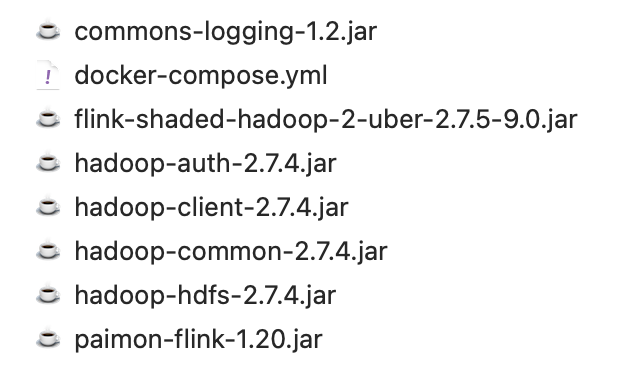

First, choose a suitable Flink version (1.20 at the time of writing). Then download from Maven the Flink/Hadoop dependency JARs matching the HDFS version used above (2.7.4), plus the Paimon JAR. Place all of them in the same directory as docker-compose.yml.
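Maven Central uses a fixed path layout (`group/with/slashes/artifact/version/artifact-version.jar`), so you can fetch the JARs with `curl`. This is a minimal sketch that assumes the coordinates below are the ones you need. `PAIMON_VERSION` is a placeholder you must set to the Paimon release you use. The `-o` file names are chosen to match the mount paths in the compose file.

```shell
# Build a Maven Central download URL from group, artifact and version.
maven_url() {
  local group_path
  group_path=$(printf '%s' "$1" | tr '.' '/')
  printf 'https://repo1.maven.org/maven2/%s/%s/%s/%s-%s.jar\n' "$group_path" "$2" "$3" "$2" "$3"
}

# Download one artifact into the current directory under the given file name.
fetch_jar() {  # fetch_jar <group> <artifact> <version> <output-file>
  curl -fL -o "$4" "$(maven_url "$1" "$2" "$3")"
}

# Usage, next to docker-compose.yml (PAIMON_VERSION is a placeholder to fill in):
# for a in hadoop-common hadoop-client hadoop-hdfs hadoop-auth; do
#   fetch_jar org.apache.hadoop "$a" 2.7.4 "$a-2.7.4.jar"
# done
# fetch_jar commons-logging commons-logging 1.2 commons-logging-1.2.jar
# fetch_jar org.apache.flink flink-shaded-hadoop-2-uber 2.7.5-9.0 flink-shaded-hadoop-2-uber-2.7.5-9.0.jar
# fetch_jar org.apache.paimon paimon-flink-1.20 "$PAIMON_VERSION" paimon-flink-1.20.jar
```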

We need to mount these dependencies into the Docker containers running the JobManager (JM) and TaskManager (TM), so we add the corresponding mount configuration.

```yaml
  # Add these services under the services: key of the same docker-compose.yml.

  # Flink JobManager
  jobmanager:
    image: flink:1.20.1-scala_2.12
    container_name: flink-jobmanager
    ports:
      - "8081:8081"   # Web UI and REST API
    depends_on:
      - hdfs-namenode
    command: jobmanager
    environment:
      - JOB_MANAGER_RPC_ADDRESS=flink-jobmanager
    # Mount the Hadoop and Paimon JARs into Flink's lib directory.
    # The &flink-lib-jars anchor lets the TaskManager reuse the same list.
    volumes: &flink-lib-jars
      - ./hadoop-common-2.7.4.jar:/opt/flink/lib/hadoop-common-2.7.4.jar
      - ./hadoop-client-2.7.4.jar:/opt/flink/lib/hadoop-client-2.7.4.jar
      - ./hadoop-hdfs-2.7.4.jar:/opt/flink/lib/hadoop-hdfs-2.7.4.jar
      - ./commons-logging-1.2.jar:/opt/flink/lib/commons-logging-1.2.jar
      - ./hadoop-auth-2.7.4.jar:/opt/flink/lib/hadoop-auth-2.7.4.jar
      - ./paimon-flink-1.20.jar:/opt/flink/lib/paimon-flink-1.20.jar
      - ./flink-shaded-hadoop-2-uber-2.7.5-9.0.jar:/opt/flink/lib/flink-shaded-hadoop-2-uber-2.7.5-9.0.jar

  # Flink TaskManager
  taskmanager:
    image: flink:1.20.1-scala_2.12
    container_name: flink-taskmanager
    depends_on:
      - jobmanager
    command: taskmanager
    environment:
      - JOB_MANAGER_RPC_ADDRESS=flink-jobmanager
      # Possibly ignored by the official image's entrypoint; if so, set
      # taskmanager.memory.process.size through FLINK_PROPERTIES instead.
      - TASK_MANAGER_MEMORY=2g
      - TASK_MANAGER_NUMBER_OF_TASK_SLOTS=2
    volumes: *flink-lib-jars
```

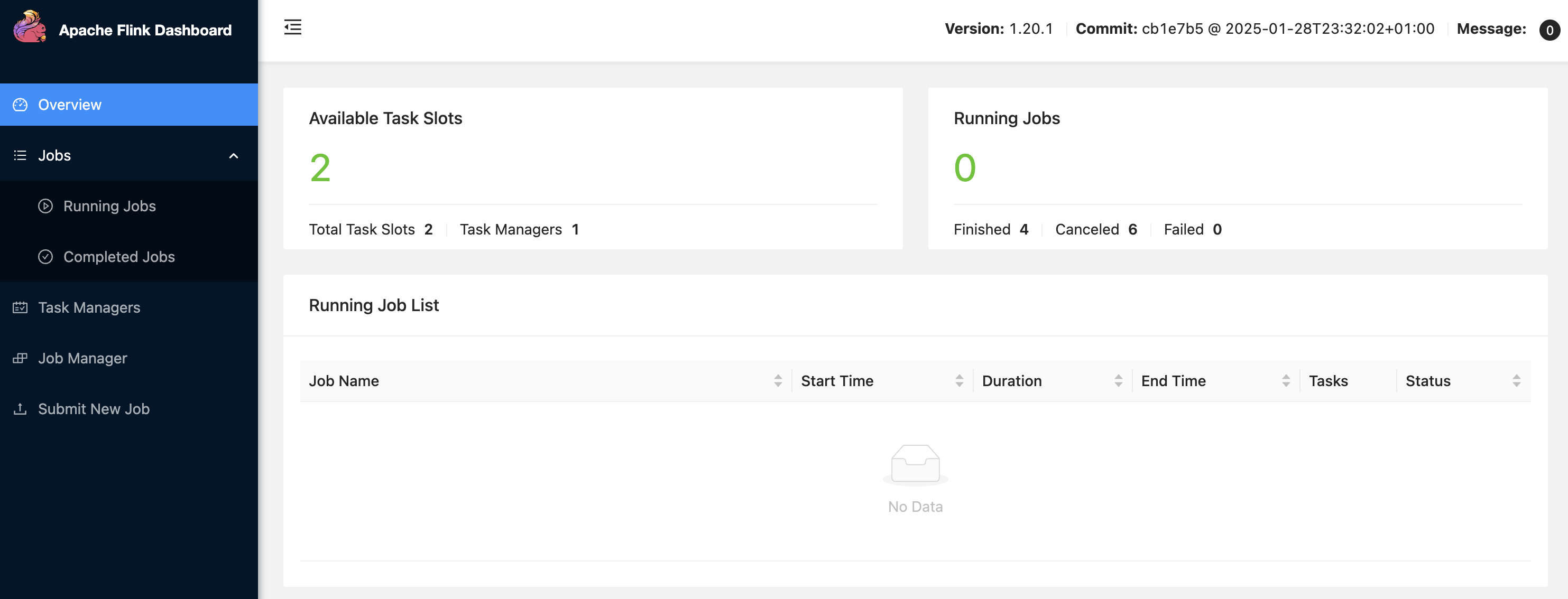
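Suppose a JAR listed under `volumes:` is missing from the directory. Docker's short bind-mount syntax then creates an empty directory with that name on the host instead of failing. Flink ends up with a directory in `/opt/flink/lib` where it expects a JAR. A pre-flight check like the sketch below catches this before `docker compose up -d`. The `missing_files` helper is hypothetical.

```shell
# Hypothetical pre-flight check: print each listed name that is not a regular
# file inside the given directory (a directory with that name also counts as missing).
missing_files() {  # missing_files <dir> <file>...
  local dir=$1 f
  shift
  for f in "$@"; do
    [ -f "$dir/$f" ] || printf '%s\n' "$f"
  done
}

# Usage: run in the directory that contains docker-compose.yml.
# missing_files . hadoop-common-2.7.4.jar hadoop-client-2.7.4.jar hadoop-hdfs-2.7.4.jar \
#   commons-logging-1.2.jar hadoop-auth-2.7.4.jar paimon-flink-1.20.jar \
#   flink-shaded-hadoop-2-uber-2.7.5-9.0.jar
```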

After running docker compose up -d, open http://localhost:8081 in a browser to check that the Flink web UI is up.
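The JobManager serves the same information through its REST API on the same port. `GET /overview` returns JSON that includes a `taskmanagers` count and `slots-total`. The sketch below reads such a field without needing `jq`. `json_int` is a hypothetical helper that only handles flat integer fields and assumes compact JSON (no space after the colon).

```shell
# Hypothetical helper: print the first integer value of "<key>": in JSON read from stdin.
json_int() {
  grep -o "\"$1\":[0-9][0-9]*" | head -n 1 | cut -d: -f2
}

# Usage (requires the running cluster):
# curl -s http://localhost:8081/overview | json_int taskmanagers
# curl -s http://localhost:8081/overview | json_int slots-total
```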

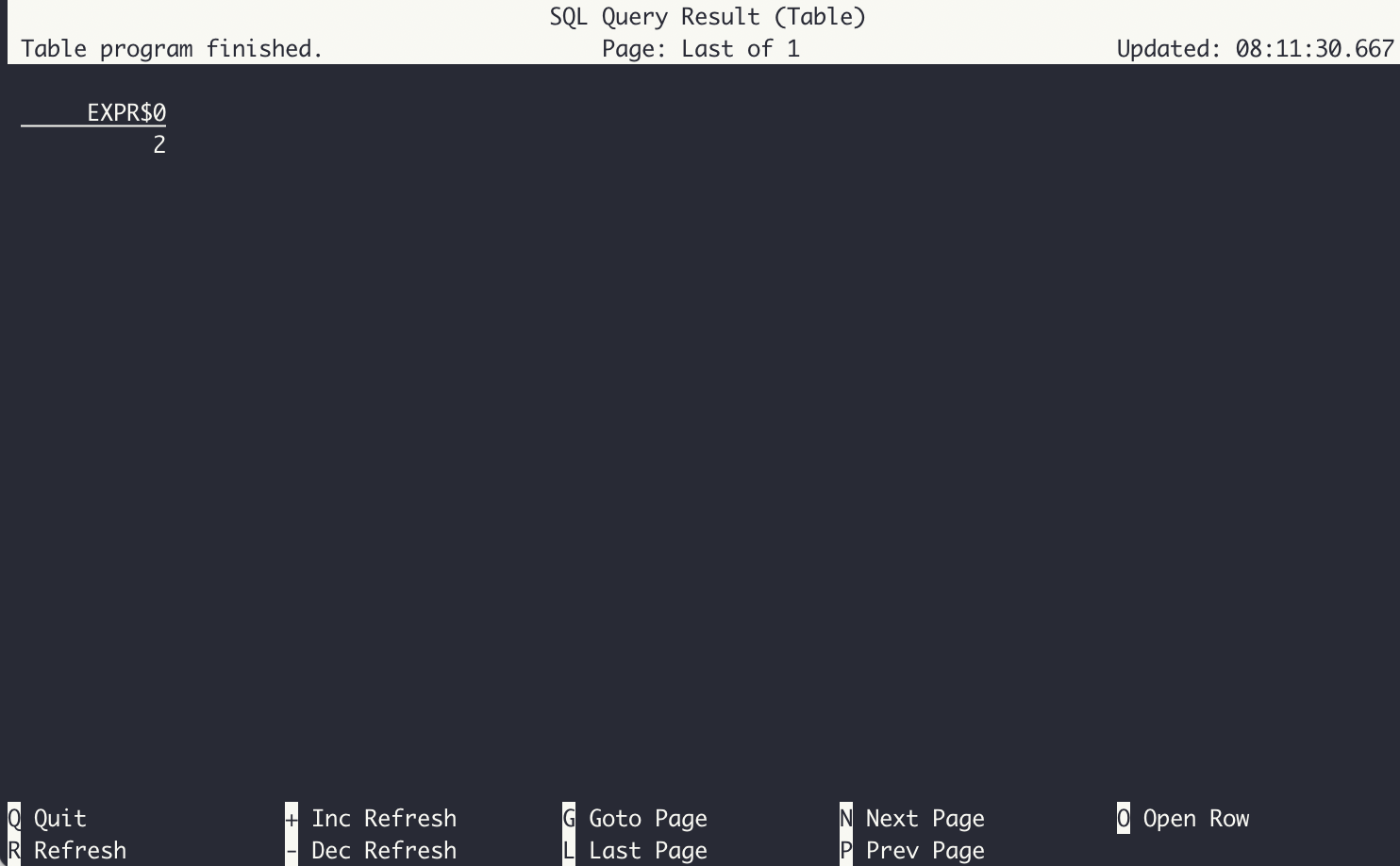

Then, use docker exec -it flink-jobmanager /bin/bash to enter the JobManager container and run the SQL client script with ./bin/sql-client.sh. Execute SELECT 1+1, and if the result is 2, it means the JobManager is working correctly and successfully submitting tasks to the TaskManager.
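You can also run the same smoke test without an interactive session, because the SQL client accepts a script file via `-f`. This sketch assumes the official image's Flink home at `/opt/flink`. `write_smoke_sql` and `run_in_jobmanager` are hypothetical names. The script sets tableau result mode so the result is printed straight to the terminal.

```shell
# Write a small SQL script; tableau mode prints results inline instead of opening the result viewer.
write_smoke_sql() {  # write_smoke_sql <output-file>
  cat > "$1" <<'EOF'
SET 'sql-client.execution.result-mode' = 'tableau';
SELECT 1 + 1;
EOF
}

# Copy the script into the JobManager container and execute it non-interactively.
run_in_jobmanager() {  # run_in_jobmanager <local-sql-file>
  docker cp "$1" flink-jobmanager:/tmp/smoke.sql &&
    docker exec flink-jobmanager /opt/flink/bin/sql-client.sh -f /tmp/smoke.sql
}

# Usage:
# write_smoke_sql /tmp/smoke.sql && run_in_jobmanager /tmp/smoke.sql
```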

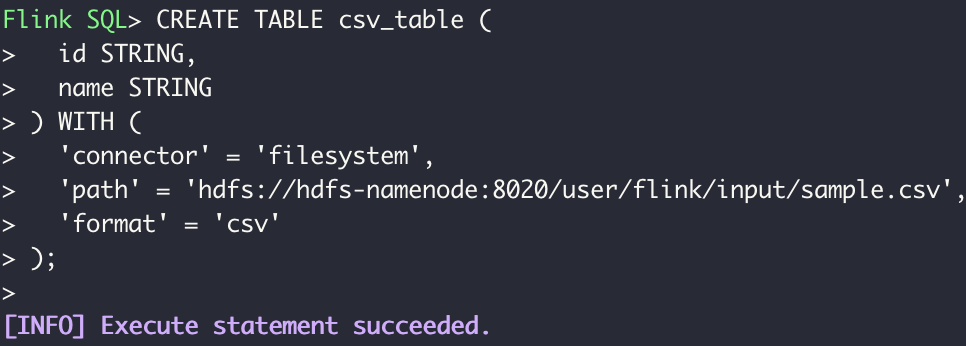

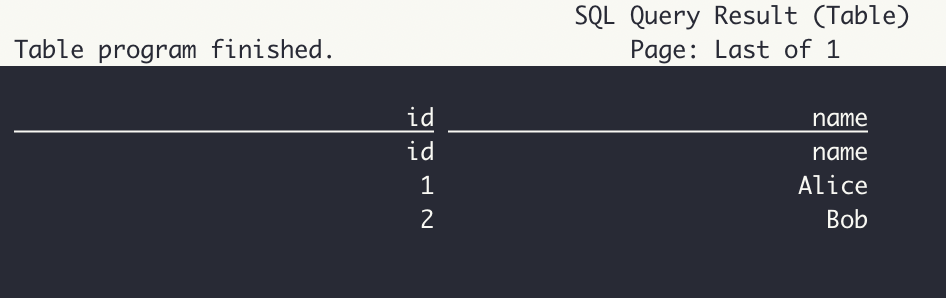

Next, we verify that Flink can access HDFS. In the HDFS step we uploaded a CSV file; now we try to read it with Flink SQL.

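```sql
CREATE TABLE csv_table (
  id STRING,
  name STRING
) WITH (
  'connector' = 'filesystem',
  'path' = 'hdfs://hdfs-namenode:8020/user/flink/input/sample.csv',
  'format' = 'csv'
);

-- The header line "id,name" is not skipped; since both columns are STRING,
-- it is returned as an ordinary row alongside the data.
SELECT * FROM csv_table;
```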

If data appears, it confirms that the Flink nodes can access HDFS correctly.

Then we check that the Paimon dependency works. Following the example in the official Paimon documentation, we create a Paimon table on HDFS and run an insert job. First, the Flink user needs write permission on the HDFS directory:

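```shell
# On the host: open a shell in the NameNode container
docker exec -it hdfs-namenode bash

# Inside the container: create the Paimon warehouse directory and give it to the flink user
hdfs dfs -mkdir -p /tmp/paimon
hdfs dfs -chmod -R 775 /tmp/paimon
hdfs dfs -chown -R flink:flink /tmp/paimon
```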
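The Flink processes in the official image presumably run as the OS user `flink`, and HDFS uses simple authentication here. So `flink` is the user name HDFS sees, and it needs write access to the warehouse directory. You can confirm the owner from the host with `hdfs dfs -ls -d`. `owner_of` is a hypothetical helper that assumes the usual `-ls` column order (permissions, replication, owner, group, size, date, time, path).

```shell
# Hypothetical helper: print "owner:group" from one line of `hdfs dfs -ls -d` output.
owner_of() {
  awk 'NF >= 8 { print $3 ":" $4; exit }'
}

# Usage (from the host):
# docker exec hdfs-namenode hdfs dfs -ls -d /tmp/paimon | owner_of   # expected: flink:flink
```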

```sql
-- Create a Paimon catalog
CREATE CATALOG my_catalog WITH (
  'type' = 'paimon',
  'warehouse' = 'hdfs://hdfs-namenode:8020/tmp/paimon'
);

-- Use the catalog
USE CATALOG my_catalog;

-- Create the word_count table
CREATE TABLE word_count (
  word STRING PRIMARY KEY NOT ENFORCED,
  cnt BIGINT
);

-- Create a temporary input table word_table (datagen connector)
CREATE TEMPORARY TABLE word_table (
  word STRING
) WITH (
  'connector' = 'datagen',
  'fields.word.length' = '1'
);

-- Set the checkpoint interval
SET 'execution.checkpointing.interval' = '10 s';

-- Insert query (word count)
INSERT INTO word_count SELECT word, COUNT(*) FROM word_table GROUP BY word;
```

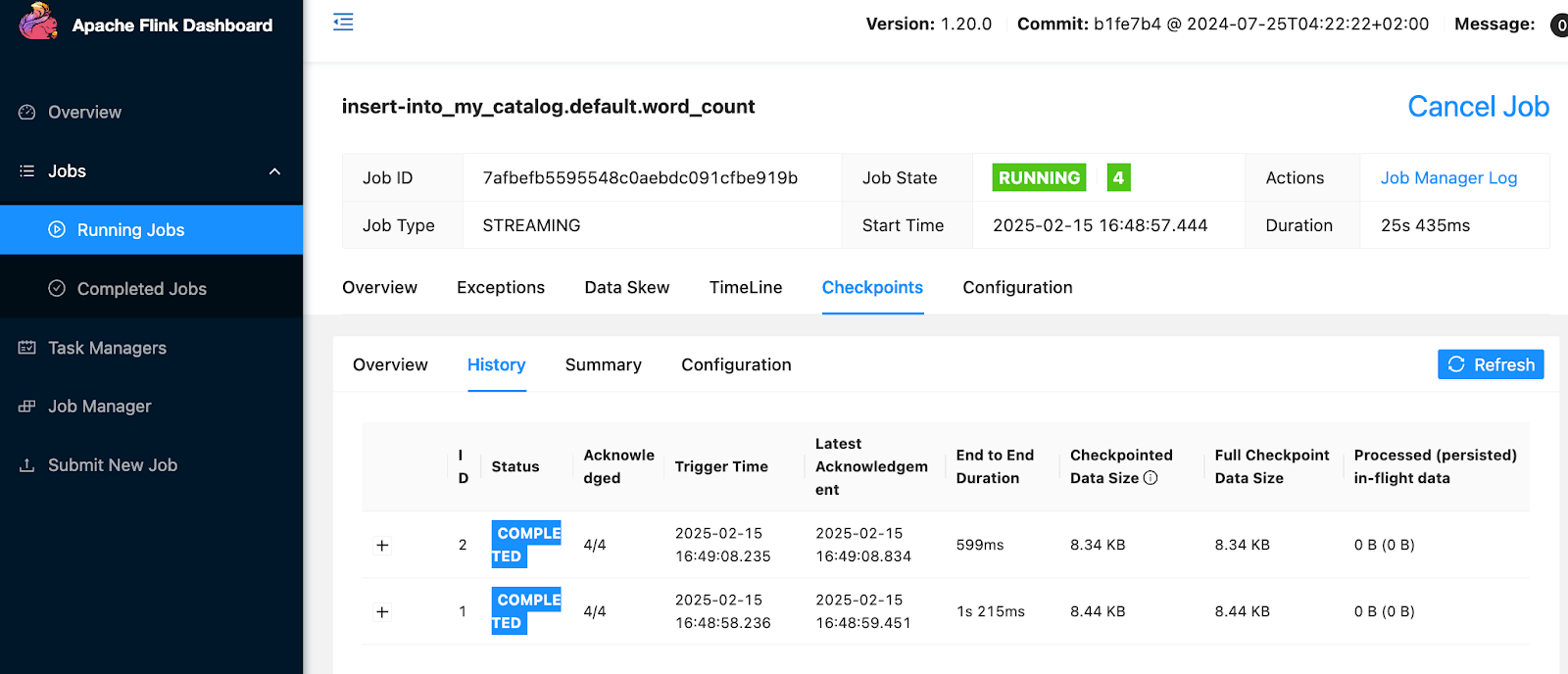

The task is successfully submitted, and checkpoints are working normally.
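You can also read the checkpoint counters from the REST API instead of the web UI. `GET /jobs` lists job IDs and statuses. `GET /jobs/<jobid>/checkpoints` returns a `counts` object with a `completed` field. The sketch below works without `jq`. `first_running_job` and `completed_checkpoints` are hypothetical helpers. They assume Flink's compact JSON and that `id` appears before `status` in each job entry. The finished test queries from earlier are skipped because only a `RUNNING` entry matches.

```shell
# Hypothetical helper: print the ID of the first RUNNING job in a GET /jobs response.
first_running_job() {
  grep -o '"id":"[0-9a-f]*","status":"RUNNING"' | head -n 1 | cut -d'"' -f4
}

# Hypothetical helper: print the completed-checkpoint count from GET /jobs/<id>/checkpoints.
completed_checkpoints() {
  grep -o '"completed":[0-9][0-9]*' | head -n 1 | cut -d: -f2
}

# Usage (requires the running cluster):
# job=$(curl -s http://localhost:8081/jobs | first_running_job)
# curl -s "http://localhost:8081/jobs/$job/checkpoints" | completed_checkpoints
```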

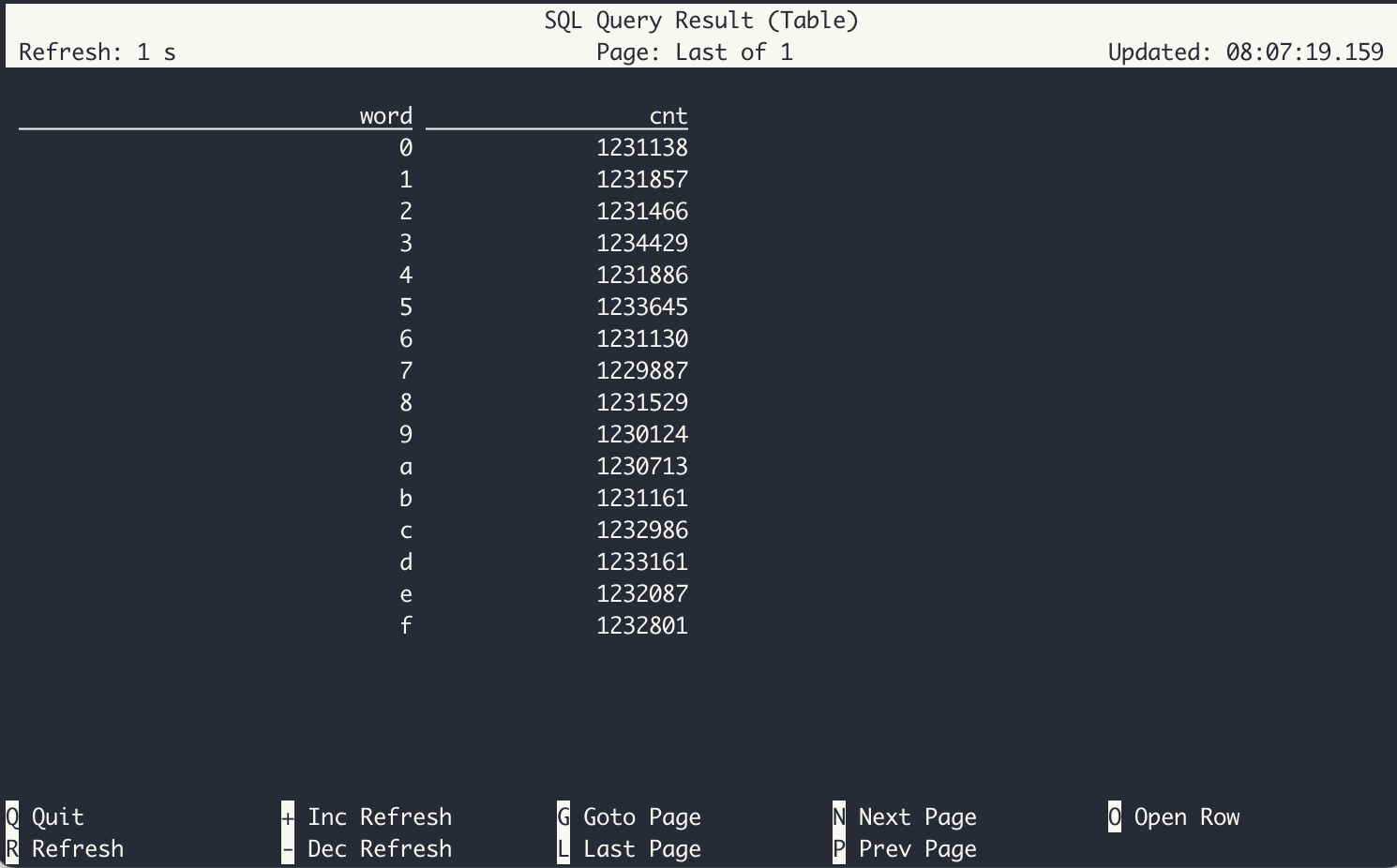

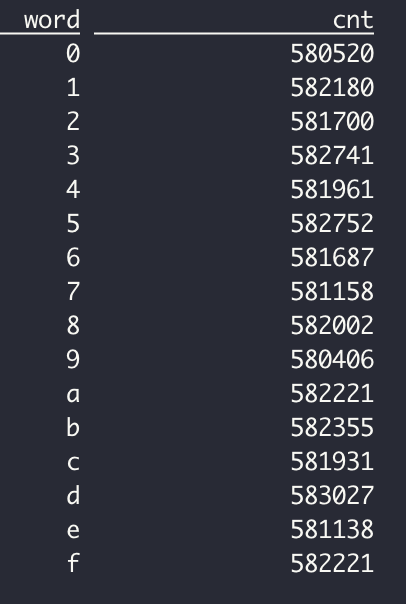

Using Flink SQL, we manually run SELECT * and can see the latest real-time data written into Paimon.

```sql
select * from word_count;
```
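The Paimon sink commits a new snapshot each time a Flink checkpoint completes. That is why the query results change roughly once per checkpoint interval (10 s here). The snapshots appear as files under the table directory in the warehouse. This sketch assumes Paimon's `<warehouse>/<database>.db/<table>/snapshot/` layout and the default database `default`. `count_snapshots` is a hypothetical helper. Old snapshots are eventually expired, so the count need not keep growing.

```shell
# Hypothetical helper: count snapshot-N entries in `hdfs dfs -ls` output
# (the EARLIEST/LATEST hint files in the same directory are ignored).
count_snapshots() {
  grep -c '/snapshot-[0-9][0-9]*$' || true
}

# Usage (from the host):
# docker exec hdfs-namenode hdfs dfs -ls /tmp/paimon/default.db/word_count/snapshot | count_snapshots
```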

4. Installing StarRocks

Add the StarRocks service to docker-compose.yml:

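```yaml
  # StarRocks (all-in-one image: FE and BE in a single container)
  starrocks:
    image: starrocks/allin1-ubuntu
    container_name: starrocks
    ports:
      - "9030:9030"   # FE MySQL protocol port
      - "8030:8030"   # FE HTTP port
      - "8040:8040"   # BE HTTP port
    depends_on:
      - hdfs-namenode
    environment:
      - HDFS_NAMENODE_URL=hdfs://hdfs-namenode:8020
```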

Start it with docker-compose up -d and confirm with docker ps that the container is running.
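After `docker ps` shows the all-in-one StarRocks container as up, the FE may still need a while before it accepts MySQL connections on 9030. A small retry loop saves you from guessing how long to wait. `retry` is a hypothetical helper, and the attempt count and delay in the usage line are arbitrary choices.

```shell
# Hypothetical helper: run a command until it succeeds, up to <attempts> times,
# sleeping <delay> seconds between tries. Returns 0 on success, 1 if every attempt fails.
retry() {  # retry <attempts> <delay-seconds> <command> [args...]
  local attempts=$1 delay=$2 i=1
  shift 2
  until "$@"; do
    if [ "$i" -ge "$attempts" ]; then
      return 1
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 0
}

# Usage: wait up to about 5 minutes for the FE to answer a trivial query.
# retry 30 10 mysql -h127.0.0.1 -P9030 -uroot -e 'SELECT 1' >/dev/null 2>&1 && echo "StarRocks FE is ready"
```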

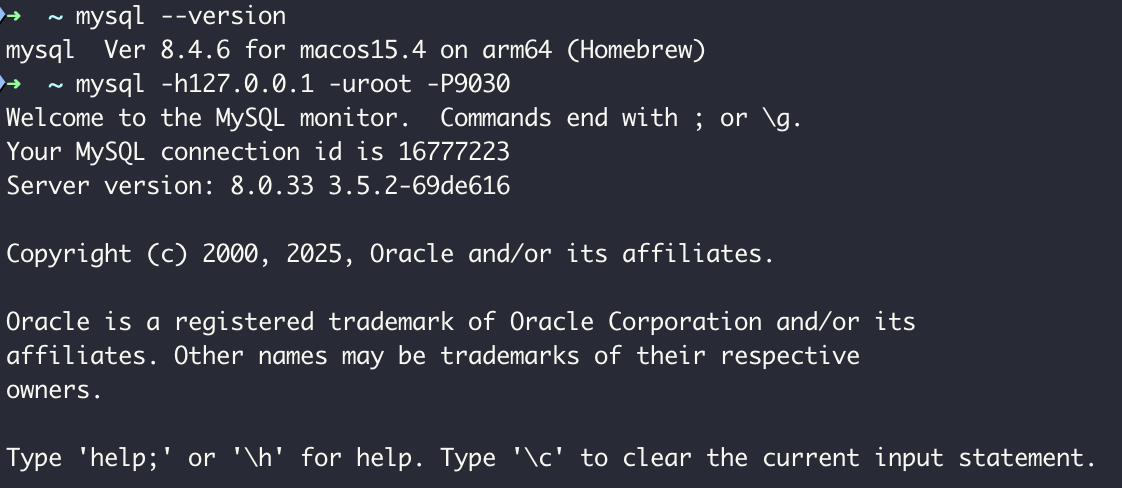

Connect to StarRocks with a local MySQL client (note: on a Mac, install a client older than MySQL 9, for example brew install mysql@8.4):

```shell
mysql -h127.0.0.1 -uroot -P9030
```
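Before connecting, you can confirm which client version is on your `PATH`. `mysql_major` is a hypothetical helper that assumes the `mysql  Ver X.Y.Z ...` banner printed by 8.x and later clients. Homebrew's versioned `mysql@8.4` formula is keg-only, so you may need to add its `bin` directory to `PATH` first.

```shell
# Hypothetical helper: print the major version from a `mysql --version` banner on stdin.
mysql_major() {
  sed -n 's/.*Ver \([0-9][0-9]*\)\..*/\1/p'
}

# Usage:
# export PATH="$(brew --prefix mysql@8.4)/bin:$PATH"   # keg-only formula
# major=$(mysql --version | mysql_major)
# if [ "${major:-0}" -ge 9 ]; then echo "mysql client $major found; install mysql@8.4 instead"; fi
```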

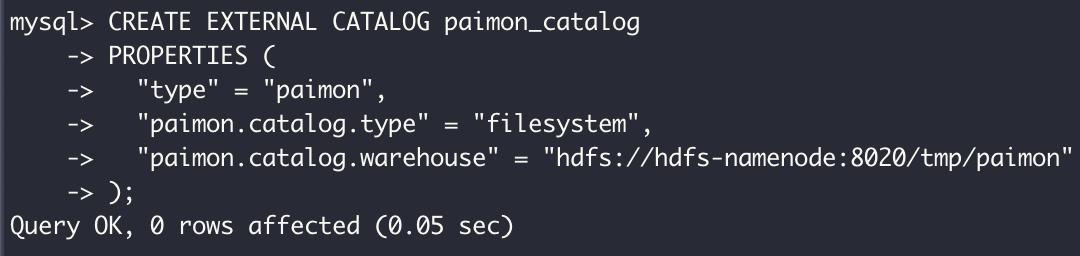

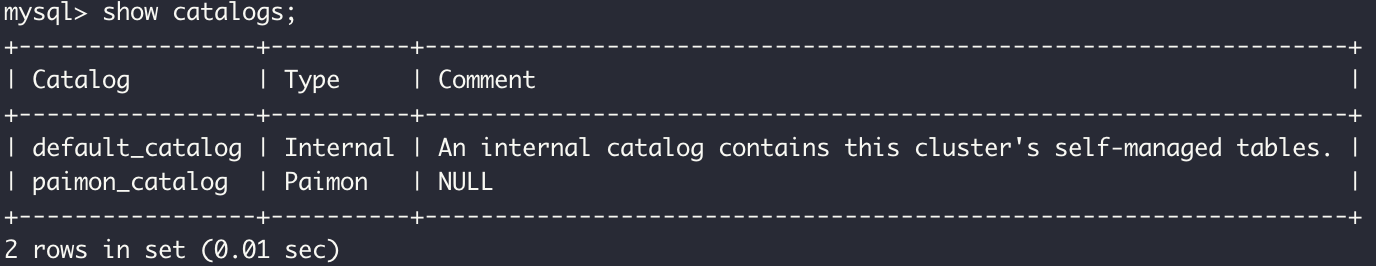

Refer to the official Paimon documentation and create a Paimon catalog.

```sql
-- Points at the same warehouse as the Flink catalog my_catalog
CREATE EXTERNAL CATALOG paimon_catalog
PROPERTIES (
  "type" = "paimon",
  "paimon.catalog.type" = "filesystem",
  "paimon.catalog.warehouse" = "hdfs://hdfs-namenode:8020/tmp/paimon"
);
```

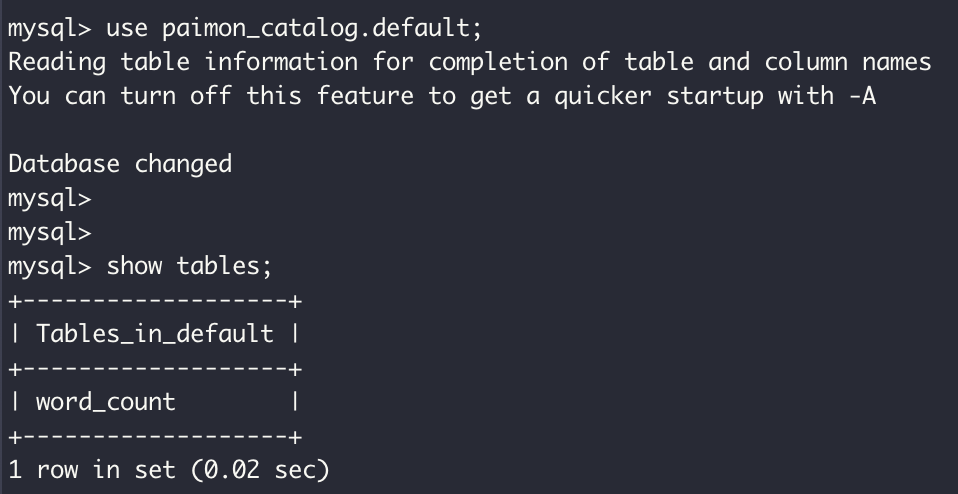

Switch to the catalog and check the metadata.

```sql
use paimon_catalog.default;
```
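You can also script the metadata check from the host. `-N` drops the column header, `-e` runs a single statement, and StarRocks accepts `SHOW TABLES FROM <catalog>.<database>`. This sketch uses hypothetical helper names and assumes the passwordless `root` login used above.

```shell
# Run one statement against the StarRocks FE and print bare result rows.
sr_query() {
  mysql -h127.0.0.1 -P9030 -uroot -N -e "$1"
}

# Hypothetical helper: succeed if <name> appears as a whole line on stdin.
has_line() {
  grep -qxF -- "$1"
}

# Usage:
# sr_query 'SHOW TABLES FROM paimon_catalog.default' | has_line word_count && echo "word_count is visible"
```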

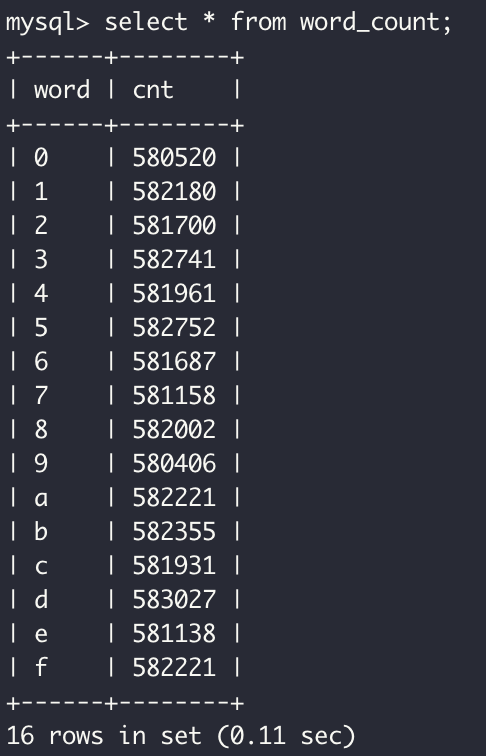

Run a SELECT query: the results match what the Flink SQL client shows in real time.

```sql
SELECT * FROM word_count;
```

In this Flink -> Paimon on HDFS -> StarRocks scenario, StarRocks is the distributed SQL execution engine, so it bears most of the compute cost of each query. HDFS only stores and serves the data and performs no computation. Improving StarRocks' compute capacity and HDFS read throughput can therefore significantly improve query performance.