Blog Content

Pretraining Large Language Models (LLMs) on Unlabeled Data

Construct a loss function to evaluate the GPT model, implement the pre-training process, apply strategies that diversify text generation, and load the open-source GPT-2 weights.

5.0 Overview

5. Pretraining on unlabeled data.ipynb

So far we have built the main structure of the GPT model, but the tokens it predicts are still incoherent because the model has not been trained. It is now time to implement a training function and pre-train the LLM. This chapter also covers basic model evaluation techniques for measuring the quality of generated text, which are a prerequisite for optimizing the training process.

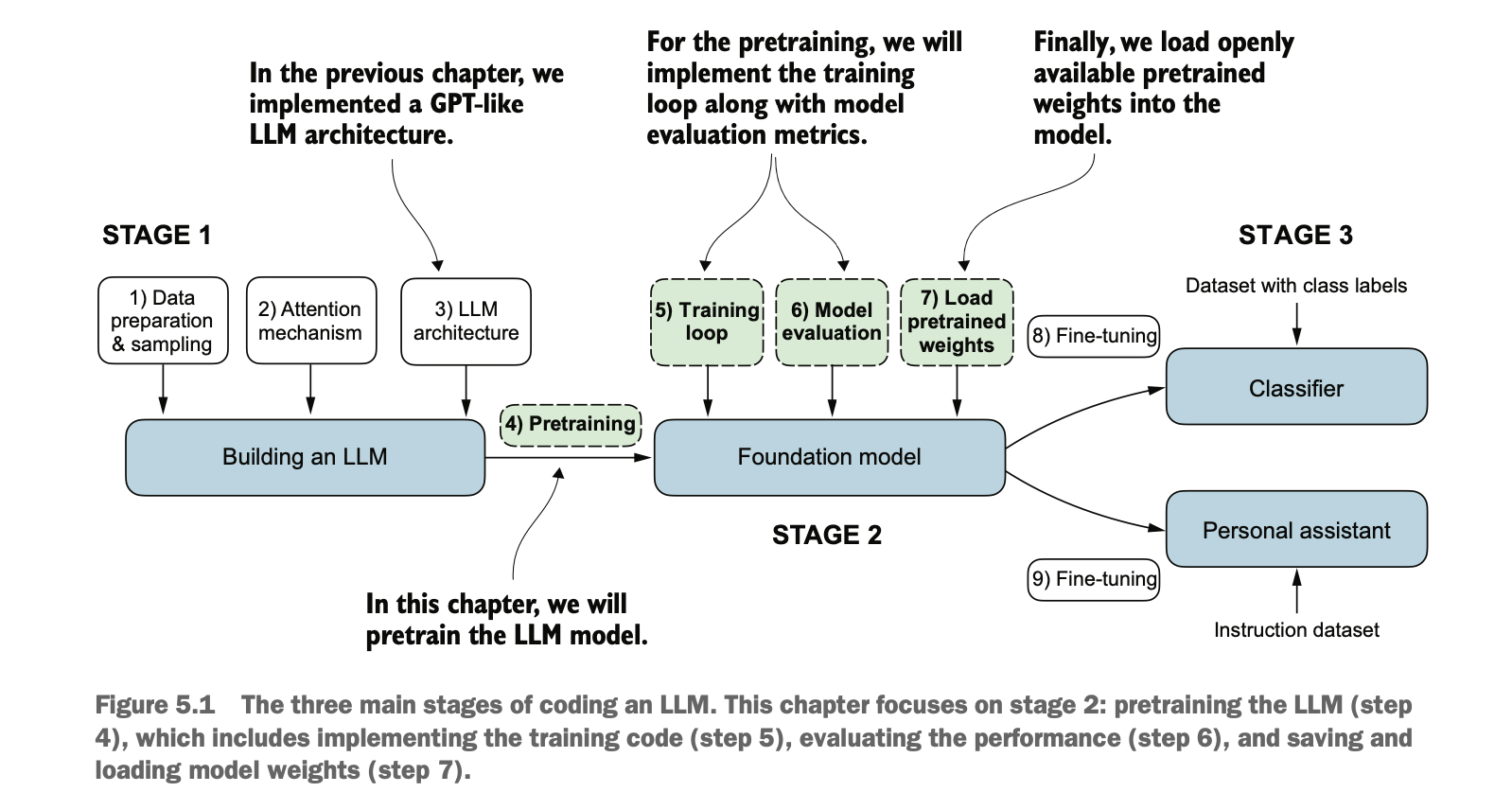

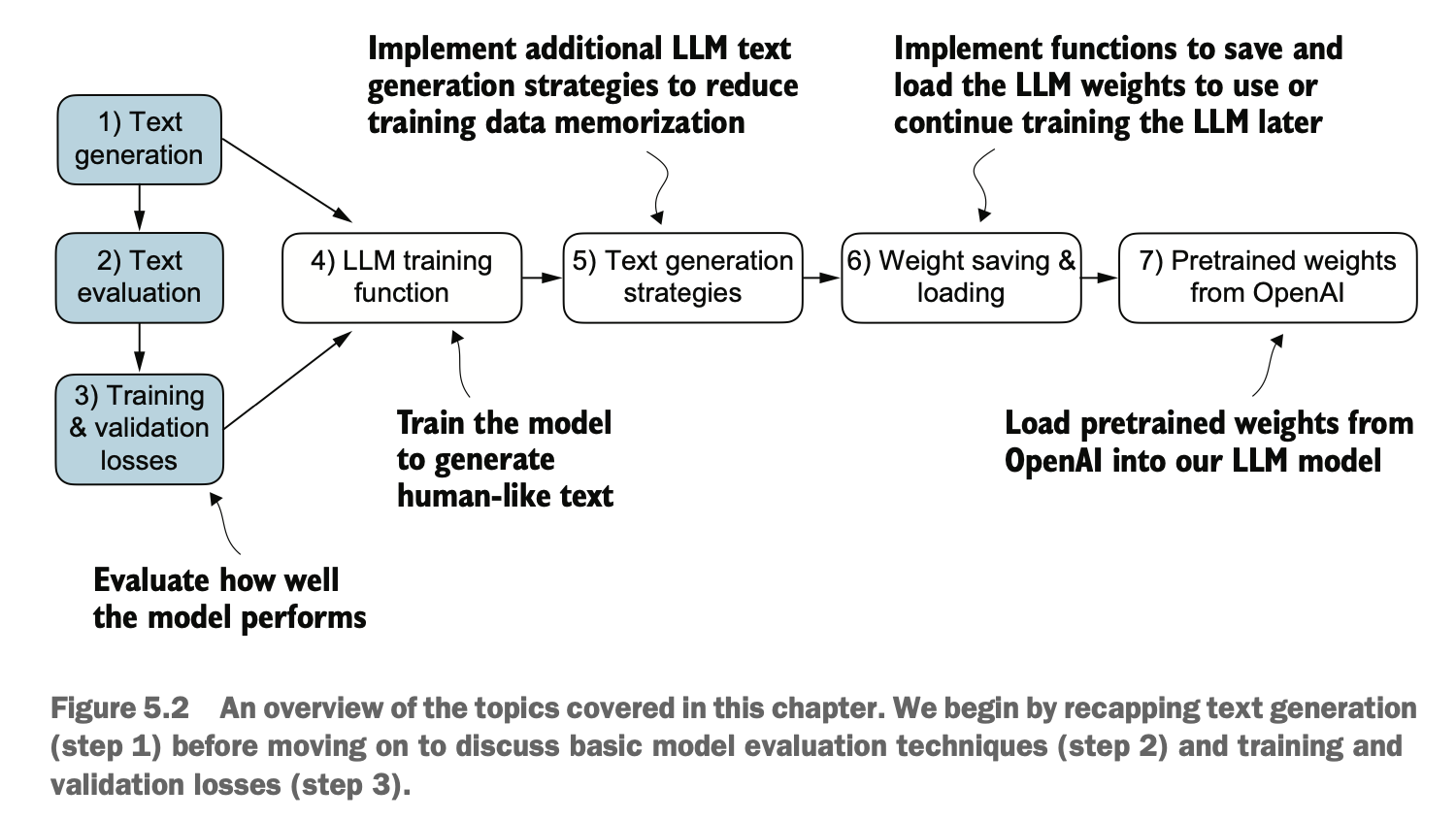

As shown in the figures, this chapter starts with text generation and evaluation, then refines the generation strategy, adds weight saving and loading, and finally improves model performance by loading pre-trained weights. The specific objectives are:

Calculating training and validation losses to evaluate the quality of text generated by the LLM during training.

Implementing a training function and completing the pre-training of the LLM.

Saving and loading model weights to continue training the LLM.

Loading pre-trained weights from OpenAI.

5.1 评估生成文本 Evaluating Generated Text

我们首先需要回顾GPT文本生成,然后研究模型评估的技术以及训练和验证损失

We first need to revisit GPT text generation, followed by exploring model evaluation techniques and calculating training and validation losses.

5.1.1 回顾文本生成 Revisiting Text Generation

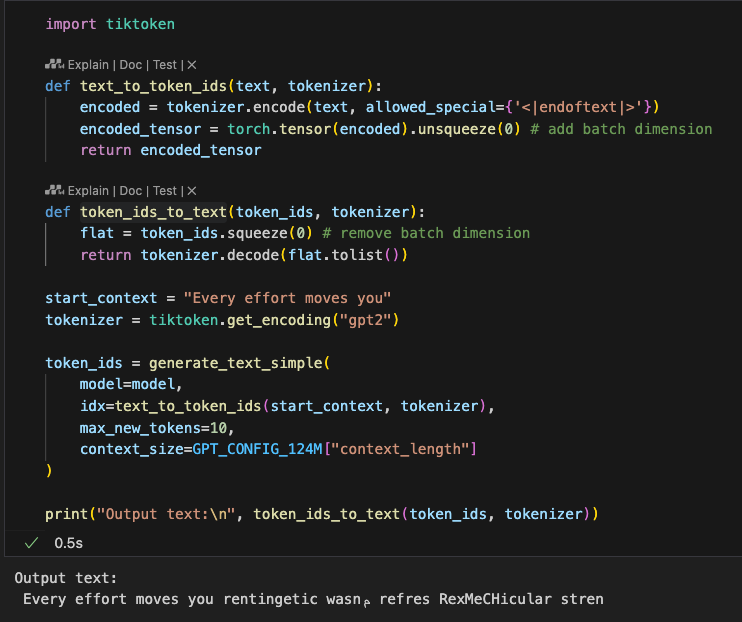

我们这里先简单实现两个函数text_to_token_ ids和token_ids_ to_text用于快速将token串和字符串进行互相转换

Here, we will implement two simple functions, text_to_token_ids and token_ids_to_text, to quickly convert between token sequences and strings.
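A minimal sketch of what these two helpers might look like is shown below. The ToyTokenizer class is a hypothetical whitespace tokenizer used only to keep the example self-contained; the chapter itself works with a real GPT-2 tokenizer, and the exact function bodies here are an assumption.

```python
import torch


class ToyTokenizer:
    """Hypothetical whitespace tokenizer standing in for a real BPE tokenizer."""

    def __init__(self, corpus):
        words = sorted(set(corpus.split()))
        self.word_to_id = {w: i for i, w in enumerate(words)}
        self.id_to_word = {i: w for w, i in self.word_to_id.items()}

    def encode(self, text):
        return [self.word_to_id[w] for w in text.split()]

    def decode(self, ids):
        return " ".join(self.id_to_word[i] for i in ids)


def text_to_token_ids(text, tokenizer):
    encoded = tokenizer.encode(text)
    # Add a batch dimension: shape (1, num_tokens)
    return torch.tensor(encoded).unsqueeze(0)


def token_ids_to_text(token_ids, tokenizer):
    # Remove the batch dimension before decoding
    flat = token_ids.squeeze(0)
    return tokenizer.decode(flat.tolist())


tokenizer = ToyTokenizer("every effort moves you forward")
ids = text_to_token_ids("every effort moves you", tokenizer)
roundtrip = token_ids_to_text(ids, tokenizer)
print(ids.shape, roundtrip)
```

Any tokenizer object that exposes encode and decode methods could be passed in place of the toy one.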

Because the model has not been trained yet, its output is nonsensical. We therefore need an evaluation scheme that turns "does the model produce fluent text" into a numerical metric.

5.1.2 Calculating the Text Generation Loss

To compute a numerical loss, we need two things: the text predicted by the model (the samples) and the text that should have been produced (the target labels).

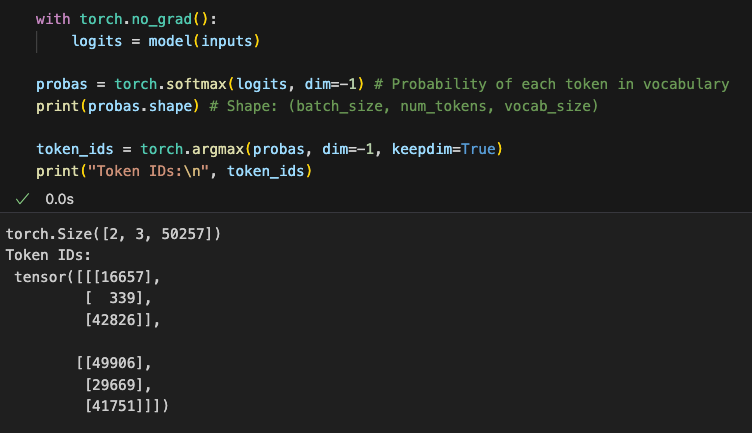

As a reminder, the predicted text is obtained by passing the logits output by the GPT model through softmax to turn them into probabilities, and then picking, at each position, the token ID with the highest probability.

The target labels, on the other hand, are the input token sequence shifted by one position, so the target at each position is the token that actually comes next.
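The one-position shift can be illustrated with a short sketch. The token IDs below are made-up values chosen only for illustration.

```python
import torch

# Made-up token IDs for a short text
token_ids = torch.tensor([10, 11, 12, 13, 14])

inputs = token_ids[:-1]   # everything except the last token
targets = token_ids[1:]   # the same sequence shifted by one position

# At position i, the model sees inputs[i] and should predict targets[i]
for x, y in zip(inputs.tolist(), targets.tolist()):
    print(f"input {x} -> target {y}")
```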

The predictions and the targets have the same structure: one token ID per input position. This alignment is a deliberate design choice, because it allows predictions and targets to be compared position by position.

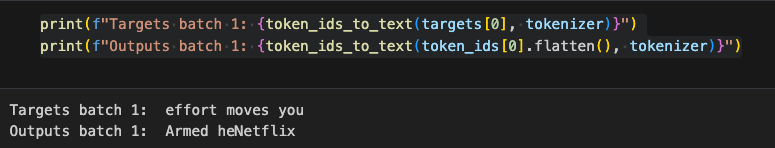

Converting both to text shows that they differ considerably, which is expected because the model has not been trained. We use this difference to compute the loss of the GPT model, and the loss in turn is used to update the model weights so that the model generates better text.

The core of evaluation is measuring how far the predictions are from the actual data. This distance drives the weight updates, so that the model's predictions move closer to the actual text.

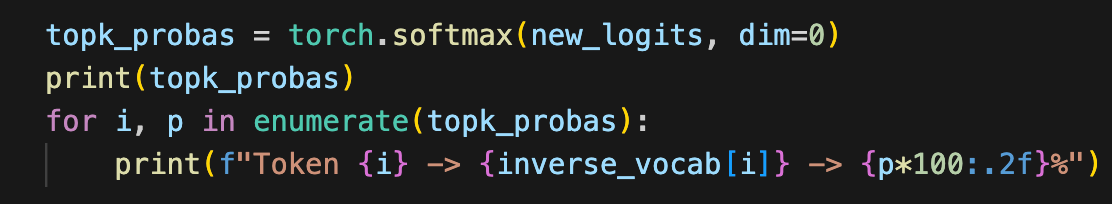

The goal of training is to raise the probability that softmax assigns to the correct token ID: the higher that probability, the better the score, and training tries to maximize it, as illustrated in the figure. (Note that the figure uses a toy vocabulary in which each word starts at a probability of about 1/7; in the real GPT-2, each token starts at approximately 1/50257.)

How do we maximize the softmax probability of the target token? We update the model weights so that the model outputs higher values for the target token IDs. The gradients needed for these updates are computed by backpropagation, a standard technique for training deep neural networks.

Backpropagation requires a loss function that measures the difference between the model's predicted output (here, the probabilities of the target token IDs) and the expected output, that is, how far the predictions deviate from the targets.

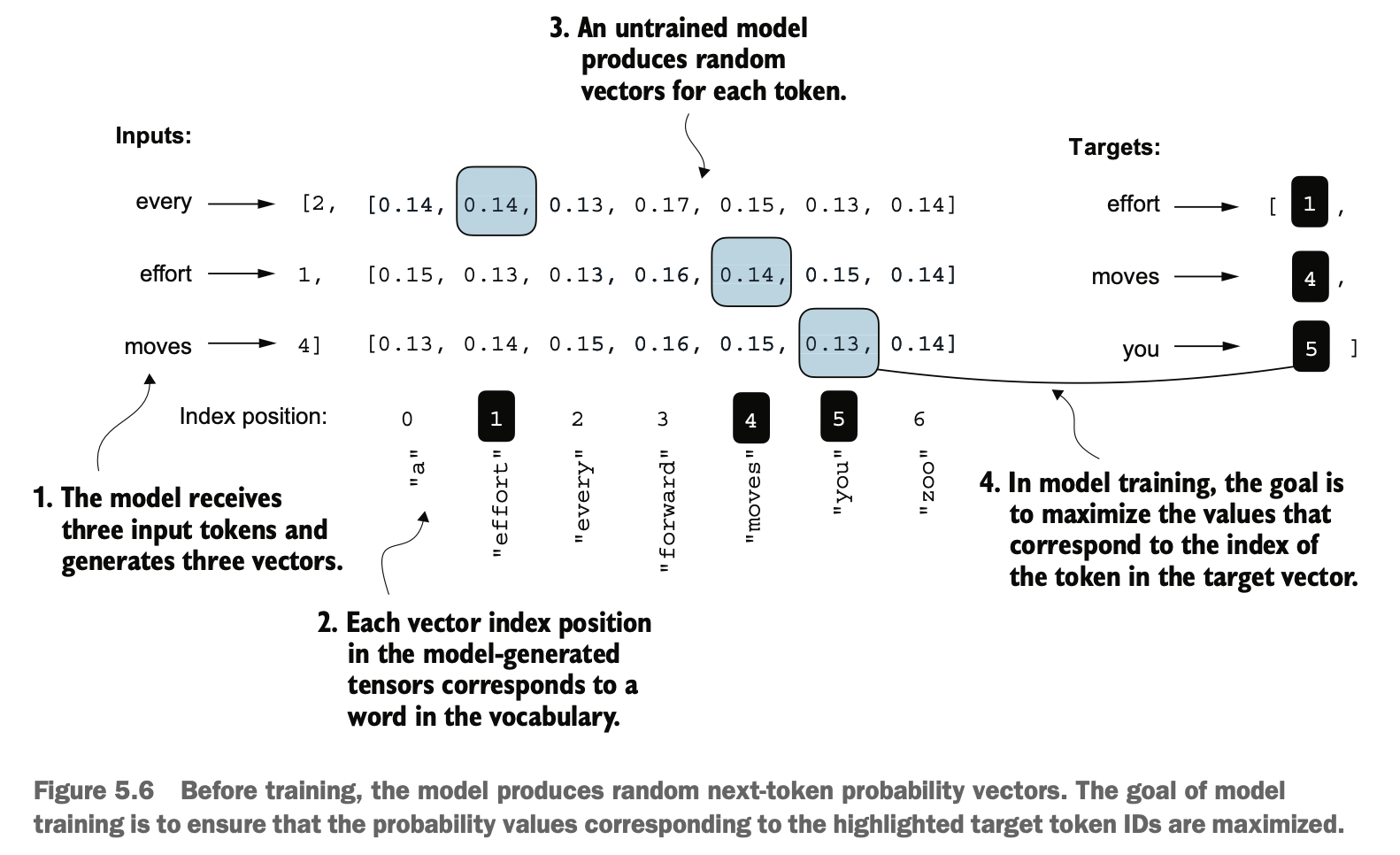

We now compute the loss between the current predictions and the actual results. The steps are:

1. Logits: take the raw, unnormalized scores output by the model.

2. Probabilities: apply softmax to turn the logits into a probability distribution.

3. Target probabilities: extract the probability assigned to each target token. Each value lies in (0, 1); the closer to 1, the more accurate the prediction.

4. Log probabilities: take the logarithm of each target probability. Each value lies in (-∞, 0); the closer to 0, the better.

5. Average log probability: average the log probabilities over all target tokens. The result lies in (-∞, 0); the closer to 0, the better.

6. Negative average log probability: negate the average. This is the final loss (the cross-entropy loss); it lies in (0, +∞), and smaller is better.
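The six steps can be traced by hand on random data. The sketch below assumes a toy vocabulary of 7 tokens and random logits in place of real model output.

```python
import torch

torch.manual_seed(123)
vocab_size = 7  # toy vocabulary size, assumed for illustration

# Step 1: logits with shape (batch, tokens, vocab); random stand-in for model output
logits = torch.randn(2, 3, vocab_size)
targets = torch.randint(0, vocab_size, (2, 3))

# Step 2: softmax turns logits into probabilities
probas = torch.softmax(logits, dim=-1)

# Step 3: pick the probability assigned to each target token
target_probas = torch.gather(probas, -1, targets.unsqueeze(-1)).squeeze(-1)

# Step 4: log of each target probability (always negative)
log_probas = torch.log(target_probas)

# Step 5: average over all target tokens
avg_log_proba = log_probas.mean()

# Step 6: negate to obtain the loss
loss = -avg_log_proba
print(loss)
```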

The goal of training is to update the model weights so that the average log probability gets as close to 0 as possible, since that means the predictions are accurate. In deep learning, however, the convention is to minimize rather than maximize, so instead of pushing the average log probability up to 0 we bring the negative average log probability down toward 0.

PyTorch is convenient here: its built-in cross_entropy function performs all six steps above and returns the loss (the negative average log probability) directly. The smaller this loss, the better.

(Note: in machine learning, and in frameworks like PyTorch in particular, the cross_entropy function measures this difference for discrete outcomes. For hard target labels it equals the negative average log probability of the target tokens under the model's predicted distribution, so in practice the terms "cross-entropy" and "negative average log probability" are closely related and often used interchangeably.)
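As a quick check of this equivalence, the sketch below compares torch.nn.functional.cross_entropy with a hand-computed negative average log probability. The tensor shapes and random values are assumptions for illustration; the batch and token dimensions are flattened because cross_entropy expects one row of logits per prediction.

```python
import torch
import torch.nn.functional as F

torch.manual_seed(123)
logits = torch.randn(2, 3, 7)           # (batch, tokens, vocab), random stand-in
targets = torch.randint(0, 7, (2, 3))   # (batch, tokens)

# Flatten batch and token dimensions: (6, 7) logits and (6,) targets
logits_flat = logits.flatten(0, 1)
targets_flat = targets.flatten()
loss = F.cross_entropy(logits_flat, targets_flat)

# Manual negative average log probability of the target tokens
log_probas = torch.log_softmax(logits, dim=-1)
manual = -log_probas.gather(-1, targets.unsqueeze(-1)).mean()

print(loss, manual)
```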

Besides the loss, perplexity can also be used as a metric. It is computed as perplexity = torch.exp(loss).

Perplexity is often considered easier to interpret than the raw loss because it can be read as the effective number of tokens the model is undecided between at each step. In the given example, the model is about as unsure as if it had to choose among 48,725 tokens in the vocabulary for the next token.
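To make the interpretation concrete, consider a hypothetical model that is maximally unsure and assigns equal logits to every token. Under that assumption its perplexity should come out close to the vocabulary size, as the sketch below illustrates with the GPT-2 vocabulary size of 50257.

```python
import torch
import torch.nn.functional as F

vocab_size = 50257

# Equal logits for every token: a uniform distribution over the vocabulary
logits = torch.zeros(4, vocab_size)
targets = torch.tensor([0, 1, 2, 3])  # arbitrary target IDs

loss = F.cross_entropy(logits, targets)   # equals ln(vocab_size)
perplexity = torch.exp(loss)              # approximately vocab_size
print(loss.item(), perplexity.item())
```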

5.1.3 计算训练集和验证集的损失 Calculating Training and Validation Loss

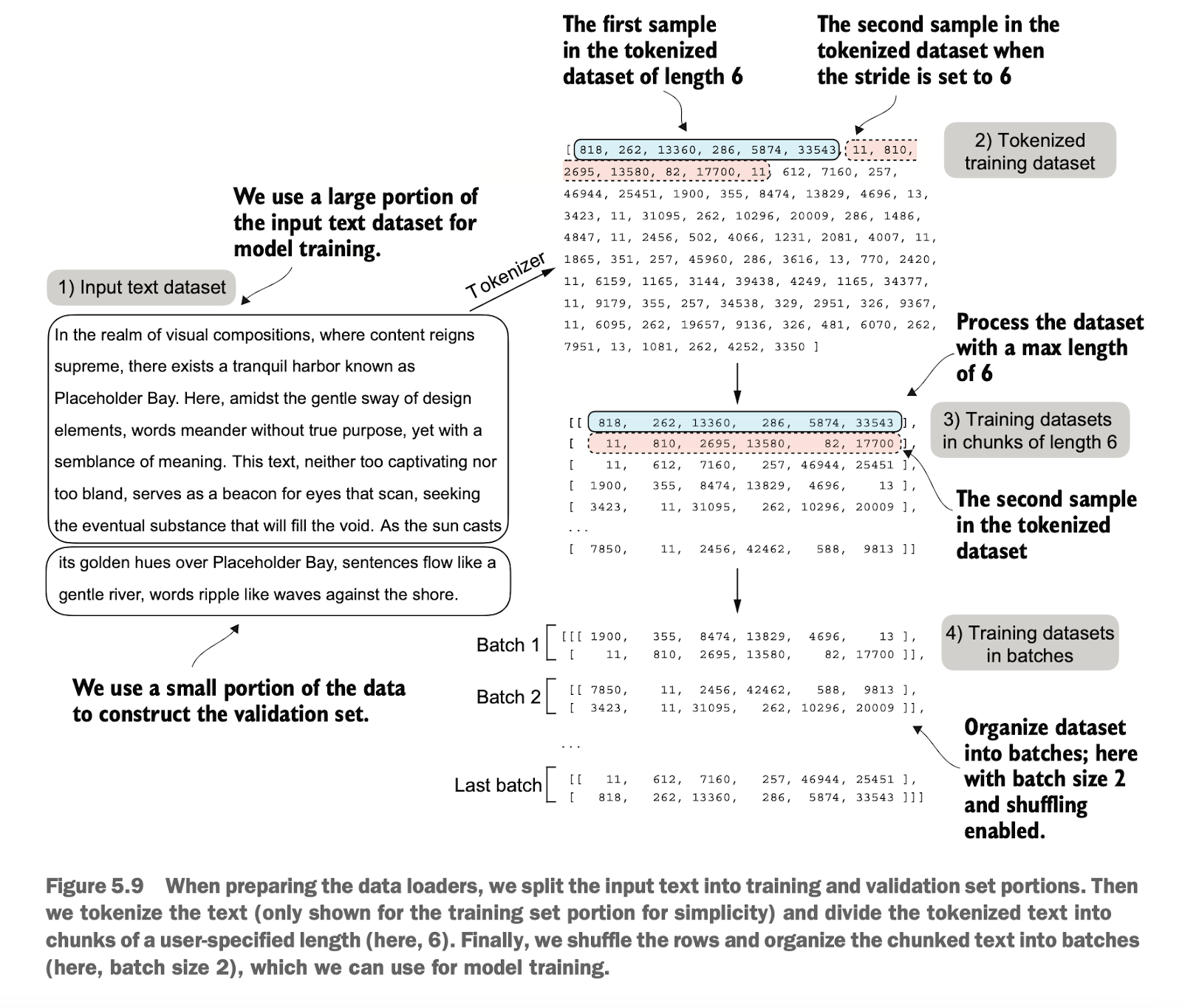

接下来,我们读取训练文本verdict,然后划分训练集和验证集

将输入文本数据划分为训练集和验证集。

对训练集进行分词,并分割为固定长度的块。

将分块后的数据打乱,分批次组织成模型训练所需的格式(如批次大小为 2)。

验证集的处理方式类似。

Next, we read the training text verdict and split it into a training set and a validation set.

Split the input text data into a training set and a validation set.

Tokenize the training set and divide it into fixed-length chunks.

Shuffle the chunked data and organize it into batches required for model training (e.g., batch size of 2).

The validation set is processed in a similar way.

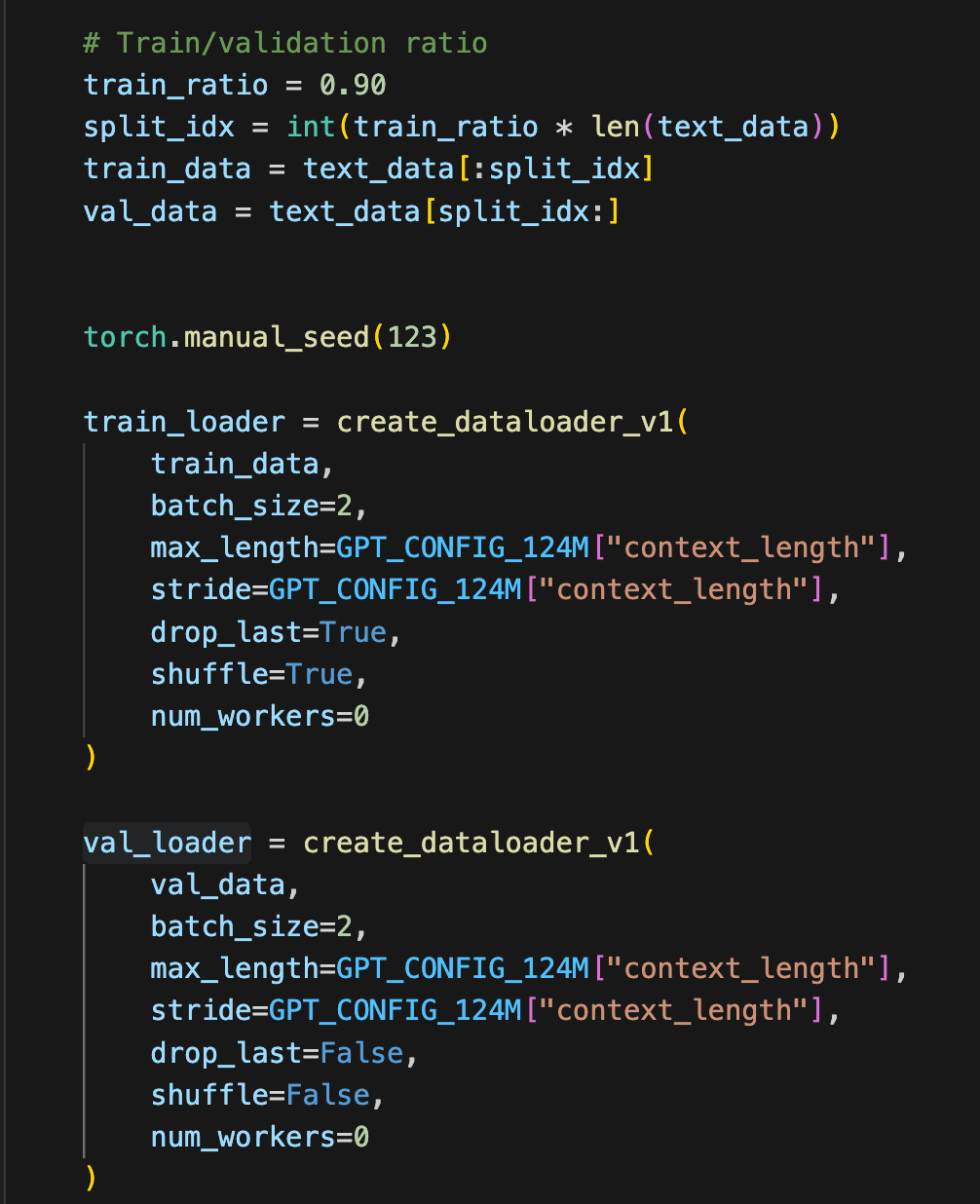

We then load the data with the dataloader developed earlier. Internally, it pairs each input chunk with a target chunk shifted by one token, so a text of N tokens yields N-1 input/target token pairs.
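The split, chunking, and batching can be sketched as follows. ChunkDataset is a hypothetical stand-in for the dataloader developed earlier, the token IDs are synthetic, and the 90/10 split ratio is an assumption, since the text does not state one.

```python
import torch
from torch.utils.data import Dataset, DataLoader


class ChunkDataset(Dataset):
    """Hypothetical dataset: fixed-length input chunks with targets shifted by one."""

    def __init__(self, token_ids, max_length, stride):
        self.inputs, self.targets = [], []
        for i in range(0, len(token_ids) - max_length, stride):
            self.inputs.append(torch.tensor(token_ids[i:i + max_length]))
            self.targets.append(torch.tensor(token_ids[i + 1:i + max_length + 1]))

    def __len__(self):
        return len(self.inputs)

    def __getitem__(self, idx):
        return self.inputs[idx], self.targets[idx]


token_ids = list(range(100))  # synthetic stand-in for a tokenized text
train_ratio = 0.9             # assumed split ratio
split_idx = int(train_ratio * len(token_ids))
train_ids, val_ids = token_ids[:split_idx], token_ids[split_idx:]

torch.manual_seed(123)
train_loader = DataLoader(ChunkDataset(train_ids, max_length=4, stride=4),
                          batch_size=2, shuffle=True, drop_last=True)
val_loader = DataLoader(ChunkDataset(val_ids, max_length=4, stride=4),
                        batch_size=2, shuffle=False, drop_last=False)

x, y = next(iter(train_loader))
print(x, y, sep="\n")
```

Only the training loader is shuffled here; the validation loader keeps a fixed order so that its loss is comparable across evaluations.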

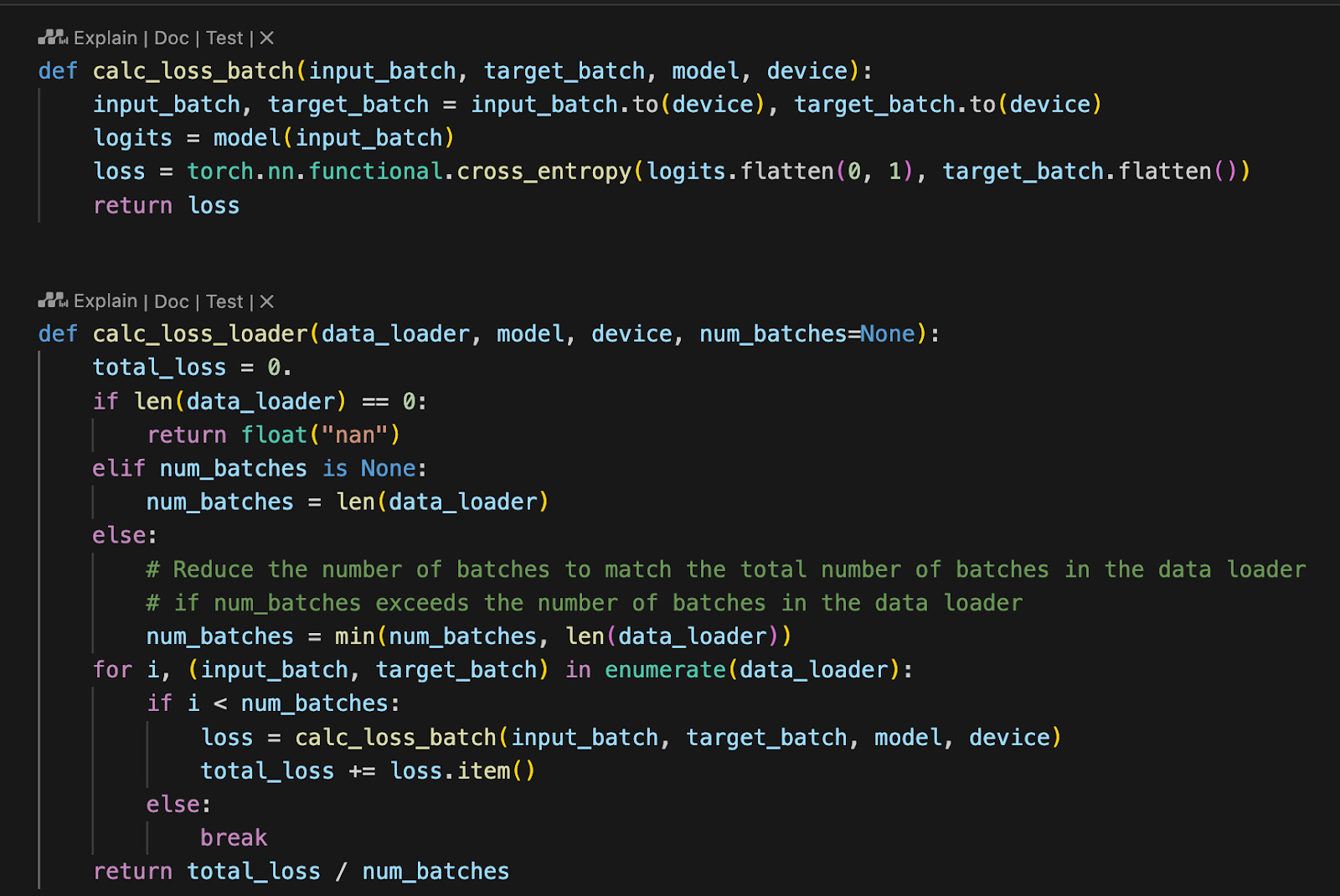

Next, we define the loss function (using cross_entropy) and a helper that computes the loss for a batch of data.

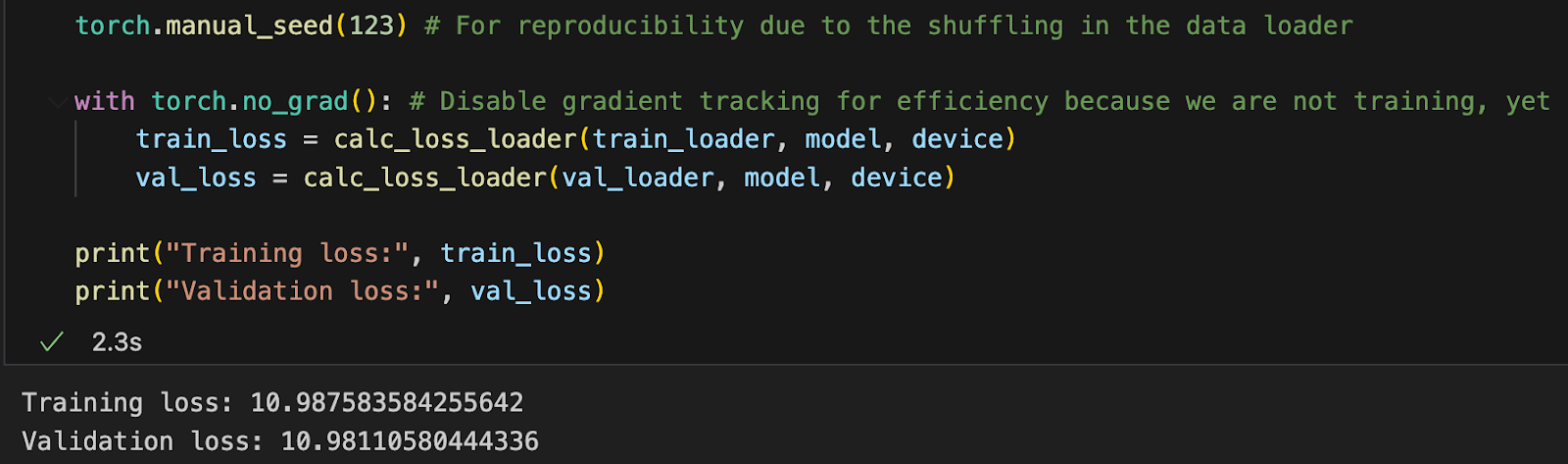

Computing the loss on our batches gives a very large value, which is expected because the model has not been trained yet.
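One way such loss helpers could be written is sketched below. The names calc_loss_batch and calc_loss_loader, as well as the tiny embedding-plus-linear model that stands in for the GPT model, are assumptions made for illustration.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def calc_loss_batch(input_batch, target_batch, model, device):
    input_batch, target_batch = input_batch.to(device), target_batch.to(device)
    logits = model(input_batch)  # (batch, tokens, vocab)
    return F.cross_entropy(logits.flatten(0, 1), target_batch.flatten())


def calc_loss_loader(data_loader, model, device, num_batches=None):
    total, count = 0.0, 0
    for i, (x, y) in enumerate(data_loader):
        if num_batches is not None and i >= num_batches:
            break
        total += calc_loss_batch(x, y, model, device).item()
        count += 1
    # Average loss per batch; NaN if the loader was empty
    return total / count if count else float("nan")


torch.manual_seed(123)
vocab_size = 50
# Toy stand-in for the GPT model: maps token IDs to logits over the vocabulary
toy_model = nn.Sequential(nn.Embedding(vocab_size, 16), nn.Linear(16, vocab_size))
# Any iterable of (input, target) batches works in place of a DataLoader
batches = [(torch.randint(0, vocab_size, (2, 4)), torch.randint(0, vocab_size, (2, 4)))
           for _ in range(3)]

with torch.no_grad():  # no gradients needed for evaluation
    untrained_loss = calc_loss_loader(batches, toy_model, torch.device("cpu"))
print(untrained_loss)
```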

5.2 训练LLM Training LLM

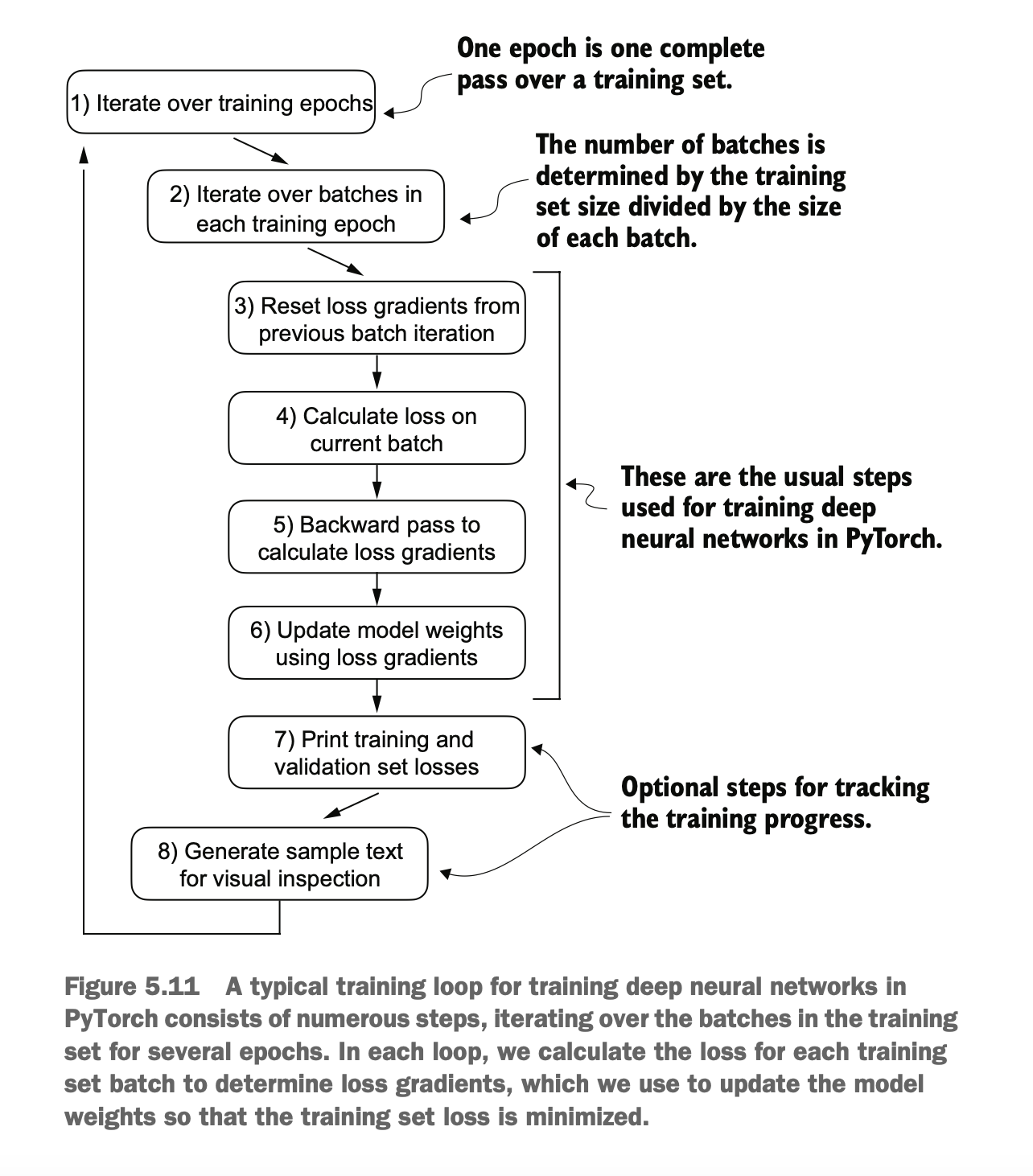

我们开始写代码对模型进行预训练,步骤如下(3-6为训练DNN通用步骤):

遍历训练轮次 (Epochs):对整个训练数据集进行多趟完整遍历。

遍历每个轮次中的批次 (Batches)

将训练数据划分为小批量,逐个批次训练。

批次数量 = 训练集大小 ÷ 批次大小。

清除上一批次的梯度 (Reset Gradients):在每个批次训练前,将之前的梯度清零(optimizer.zero_grad())。

计算当前批次的损失 (Calculate Loss):对当前批次数据进行前向传播,计算预测值与真实值的误差(损失)。

反向传播计算梯度 (Backward Pass):对损失执行反向传播(loss.backward()),计算梯度。

使用梯度更新模型权重 (Update Weights):使用优化器(如 SGD、Adam)根据梯度更新模型参数(optimizer.step())。

打印训练和验证集损失 (Optional):跟踪训练进度,打印训练集和验证集的损失。

生成样本以便可视化检查 (Optional):例如生成样本输出,用于检查模型的实际表现。

We start writing code to pre-train the model. The steps are as follows (steps 3-6 are general steps for training DNNs):

Iterate through training epochs: Perform multiple complete passes over the entire training dataset.

Iterate through batches within each epoch:

Split the training data into small batches and train the model batch by batch.

Number of batches = size of the training set ÷ batch size.

Reset gradients: Before training each batch, clear the previous gradients (optimizer.zero_grad()).

Calculate loss: Perform a forward pass on the current batch data, calculate the error (loss) between predictions and actual values.

Backward pass to calculate gradients: Perform backpropagation on the loss (loss.backward()), calculating the gradients.

Update model weights: Use an optimizer (e.g., SGD, Adam) to update the model parameters based on the gradients (optimizer.step()).

Print training and validation loss (optional): Track training progress by printing the losses for the training and validation sets.

Generate samples for visual inspection (optional): For example, generate sample outputs to check the model's actual performance.
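The loop structure above can be sketched on a toy problem. Everything specific here is an assumption for illustration: the tiny model stands in for the GPT model, the synthetic task is "next token = current token + 1", and the learning rate, weight decay, and epoch count are arbitrary.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(123)
vocab_size = 20
# Toy stand-in for the GPT model
model = nn.Sequential(nn.Embedding(vocab_size, 32), nn.Linear(32, vocab_size))
optimizer = torch.optim.AdamW(model.parameters(), lr=0.05, weight_decay=0.1)

# Synthetic data: the target is always (input + 1) modulo the vocabulary size
inputs = torch.randint(0, vocab_size, (16, 8))
targets = (inputs + 1) % vocab_size
batches = [(inputs[i:i + 4], targets[i:i + 4]) for i in range(0, 16, 4)]

losses = []
for epoch in range(20):                      # step 1: epochs
    model.train()
    for x, y in batches:                     # step 2: batches
        optimizer.zero_grad()                # step 3: reset gradients
        logits = model(x)
        loss = F.cross_entropy(logits.flatten(0, 1), y.flatten())  # step 4: loss
        loss.backward()                      # step 5: gradients
        optimizer.step()                     # step 6: weight update
    losses.append(loss.item())               # step 7: track the last batch loss

print(f"first epoch: {losses[0]:.3f}, last epoch: {losses[-1]:.3f}")
```

Step 8 (sample generation) is omitted to keep the sketch short; a real loop would also evaluate on a validation set at regular intervals.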

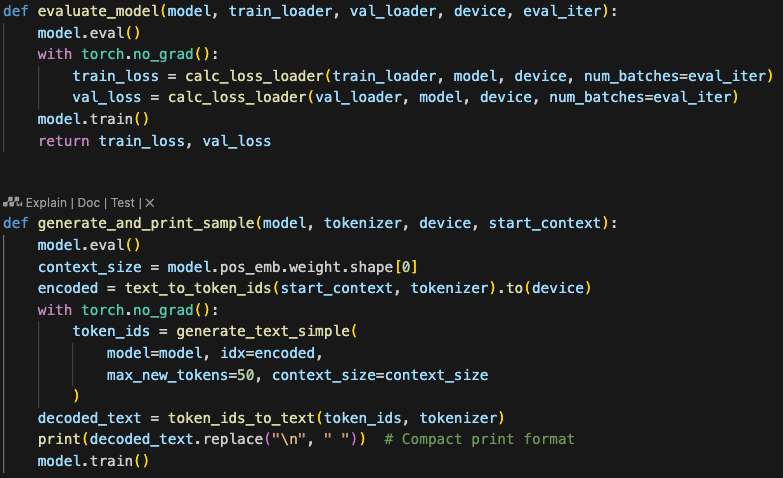

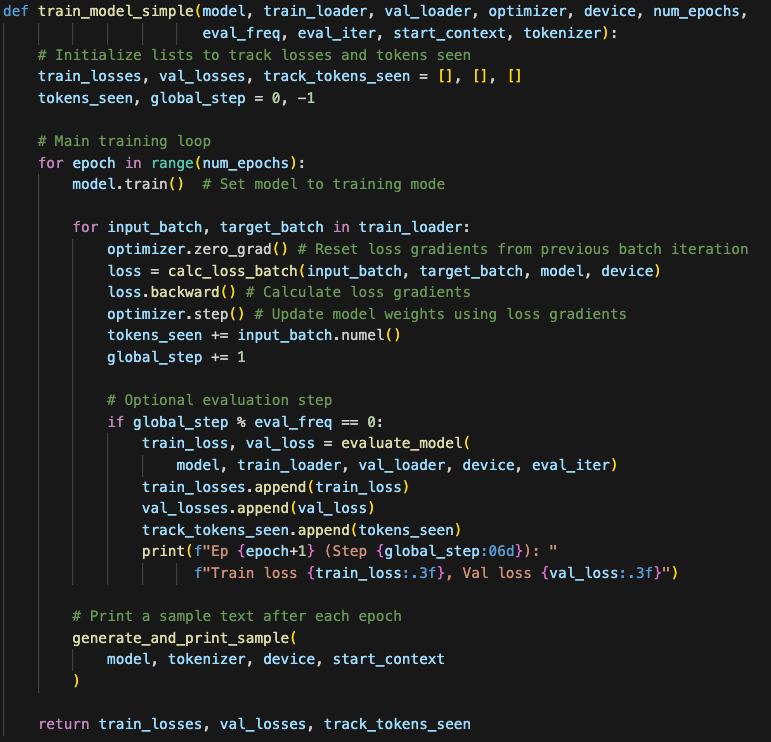

The implementation is shown in the code snippets.

We use the AdamW optimizer and run the training to see what happens.

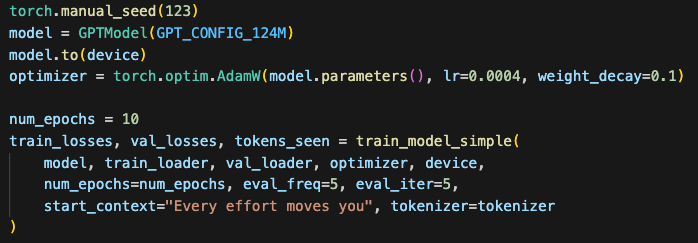

The results are as follows: at first the model produces no meaningful output, and by the end it generates readable text.

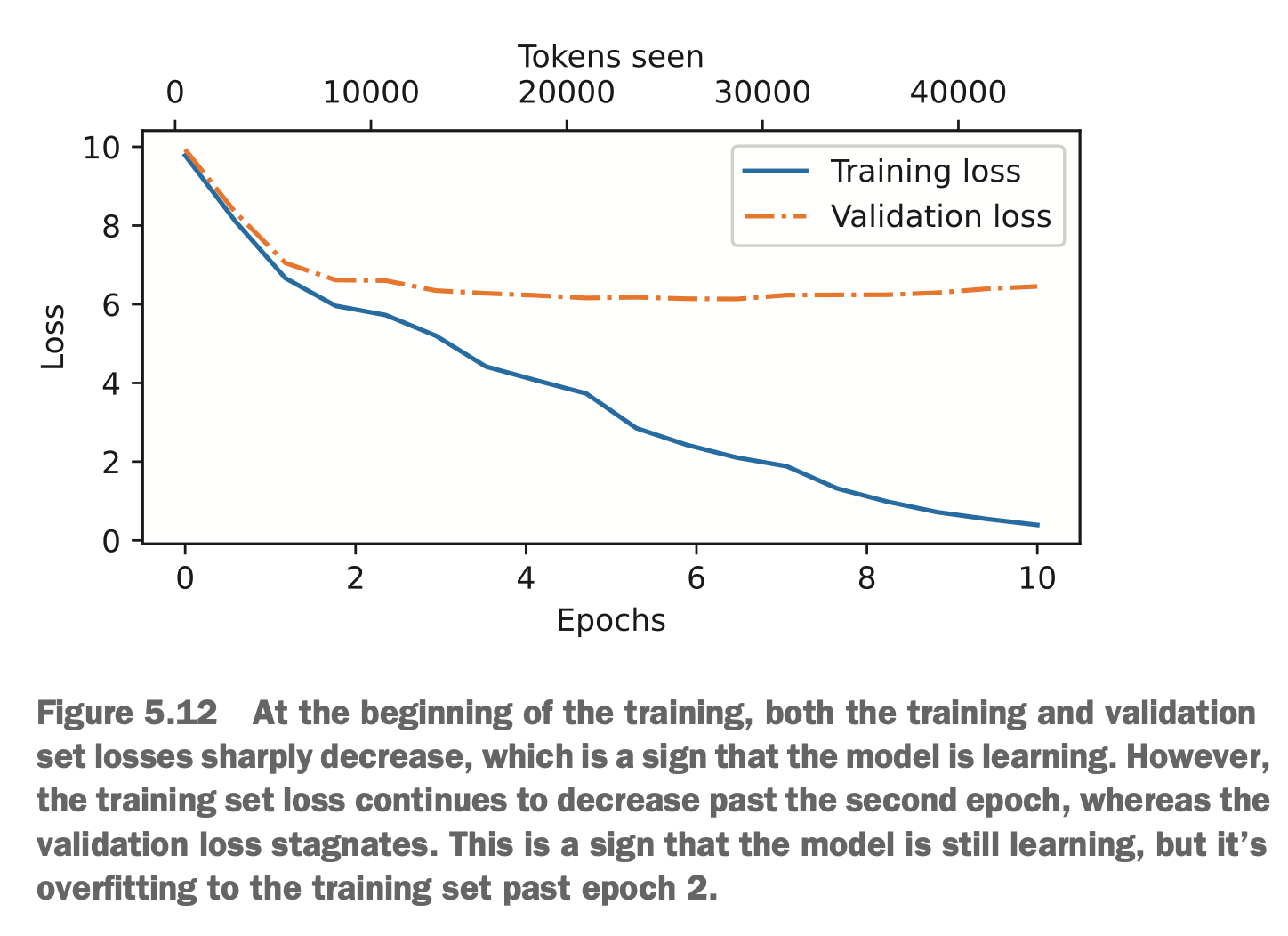

However, when we plot the losses recorded during training, we see that from epoch 2 onward the training loss keeps decreasing while the validation loss stagnates, which indicates overfitting.

This result is expected because we trained on a very small dataset. Training on the roughly 60,000 public files mentioned in the appendix would not overfit like this. In practice, large models are commonly trained for only about one epoch on very large datasets, while small datasets are iterated over for one or more epochs.

5.3 控制文本生成随机性 Controlling Randomness For Text Generation

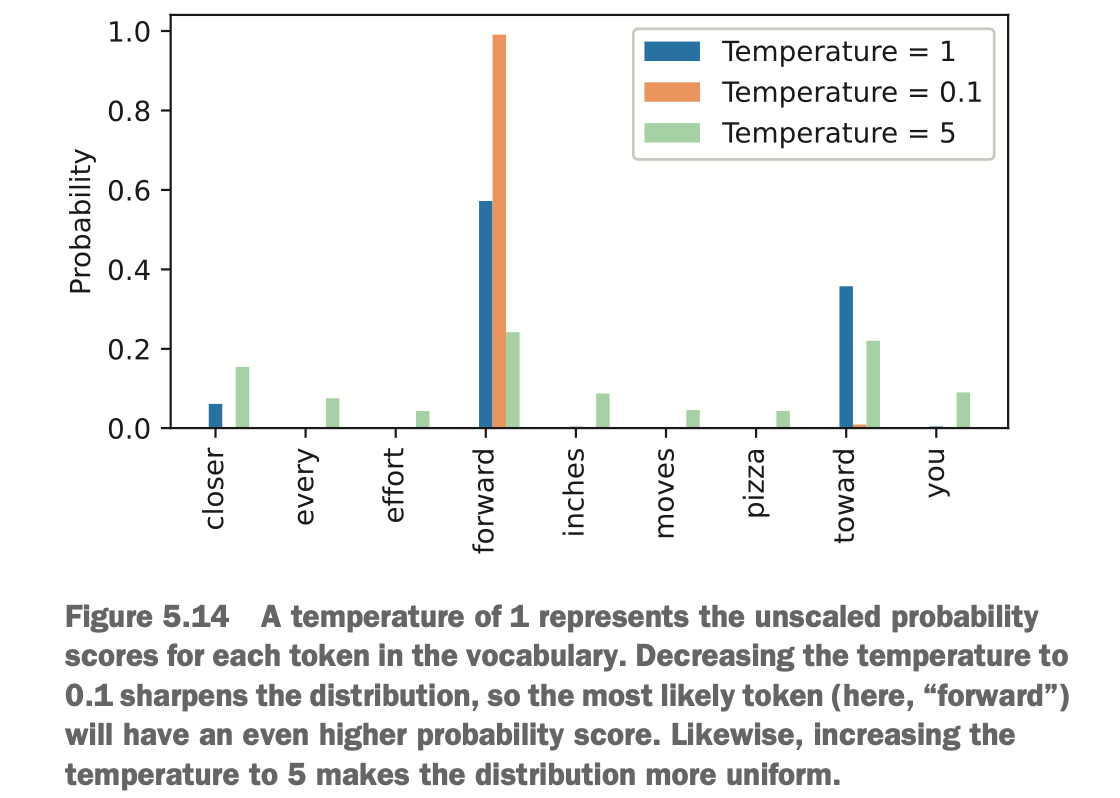

目前我们每次都通过从词汇表中选概率的最大单词作输出,所以我们每次生成的文本都是一样的

,对于文本生成还有不同策略,我们将介绍两种技术:温度缩放(temperature scaling)和 top-k 采样(top-k sampling)

Currently, we always select the word with the highest probability from the vocabulary as the output, so the generated text is always the same.

5.3.1 温度缩放 Temperature Scaling

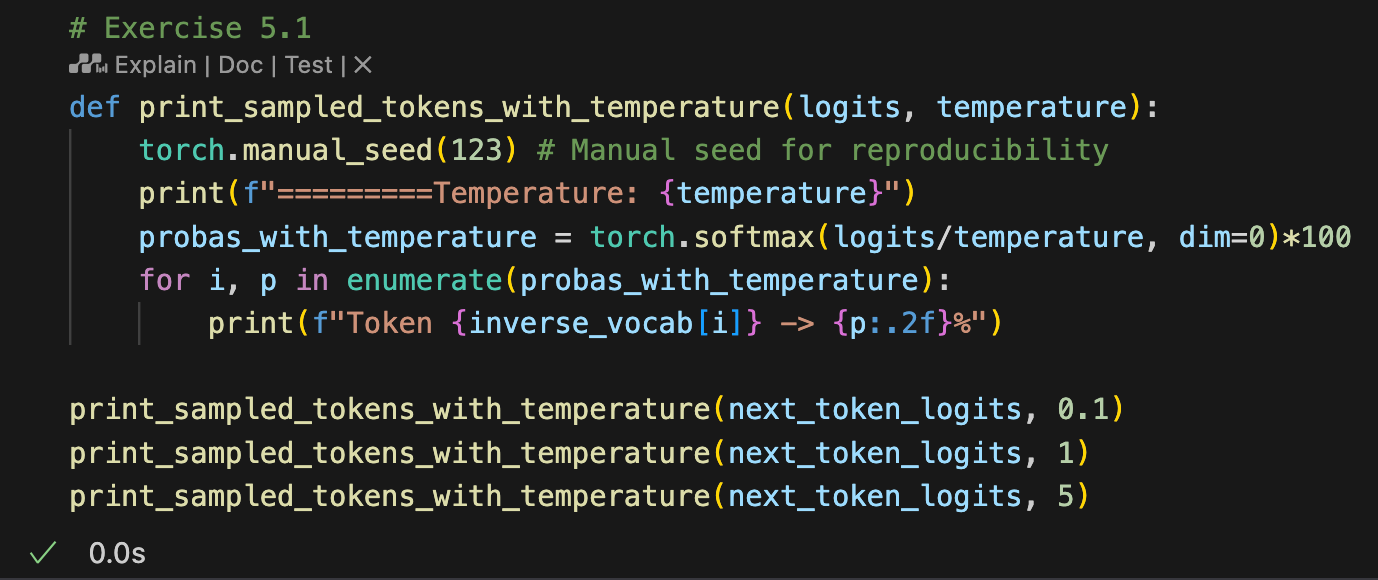

为了生成有随机性的下一个词,我们可以把选词的函数从argmax替换成概率分布的函数,比如使用温度缩放的温度参数

To generate the next word with randomness, we can replace the word selection function from argmax to a probability-based function, such as one using temperature scaling.

温度缩放是一种通过调整 logits(模型输出的未归一化分数)来控制概率分布的方法。具体来说,它通过将 logits 除以一个温度参数(temperature)来缩放分布,然后使用 softmax 将其转换为概率。

高温度:概率分布更加均匀,模型的输出更随机(增加不确定性,容易不理性)。

低温度:概率分布更加集中,模型更倾向于选择概率最大的结果(减少不确定性)。

Temperature scaling is a method to control the probability distribution by adjusting the logits (the unnormalized scores output by the model). Specifically, it scales the logits by dividing them by a temperature parameter and then applies softmax to convert them into probabilities.

High temperature: The probability distribution becomes more uniform, and the model’s output is more random (increased uncertainty, prone to irrational outputs).

Low temperature: The probability distribution becomes more concentrated, and the model tends to choose the result with the highest probability (reduced uncertainty).
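The effect of the temperature can be seen on a handful of made-up logits. The helper name softmax_with_temperature and the example values are assumptions for illustration.

```python
import torch


def softmax_with_temperature(logits, temperature):
    # Divide the logits by the temperature, then normalize with softmax
    return torch.softmax(logits / temperature, dim=-1)


logits = torch.tensor([4.0, 2.0, 1.0, 0.5])  # made-up next-token logits

probs_low = softmax_with_temperature(logits, 0.1)      # sharply peaked
probs_default = softmax_with_temperature(logits, 1.0)  # plain softmax
probs_high = softmax_with_temperature(logits, 5.0)     # close to uniform

# Sampling replaces argmax: tokens are drawn in proportion to their probability
torch.manual_seed(123)
next_token = torch.multinomial(probs_default, num_samples=1)

for name, p in [("T=0.1", probs_low), ("T=1.0", probs_default), ("T=5.0", probs_high)]:
    print(name, p)
```

Note that the ranking of the tokens never changes with temperature; only how strongly the top token dominates does.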

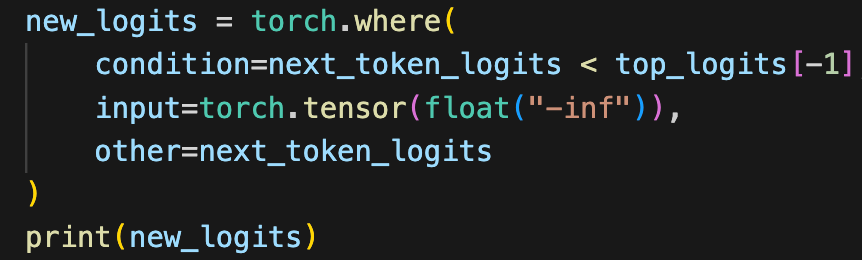

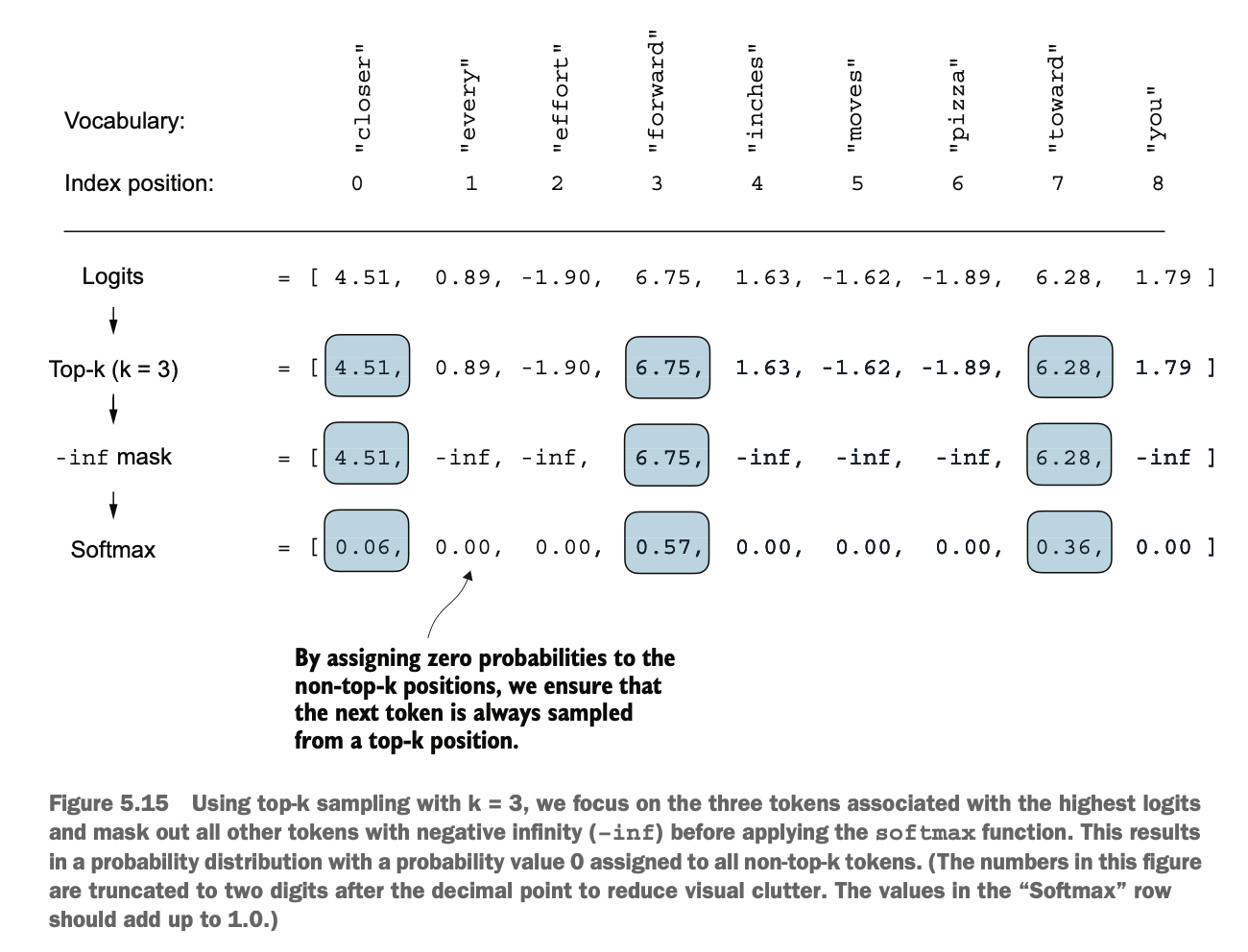

5.3.2 Top-k Sampling

Temperature scaling produces more diverse output, but it also occasionally samples a low-probability token that makes the text ungrammatical or nonsensical.

Top-k sampling addresses this. We keep the K tokens with the largest logits and mask all other logits by setting them to -inf. After softmax, only those K tokens have a positive probability and all others have a probability of zero.
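A minimal sketch of the masking step, using made-up logits and K = 3, might look like this:

```python
import torch

logits = torch.tensor([4.5, 0.9, -1.9, 6.8, 1.8, 2.2])  # made-up next-token logits
k = 3

# Find the k largest logits; the smallest of them is the cutoff
top_logits, top_positions = torch.topk(logits, k)
threshold = top_logits[-1]

# Everything below the cutoff is set to -inf so that softmax maps it to zero
masked = torch.where(logits < threshold, torch.tensor(float("-inf")), logits)
probs = torch.softmax(masked, dim=-1)
print(probs)
```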

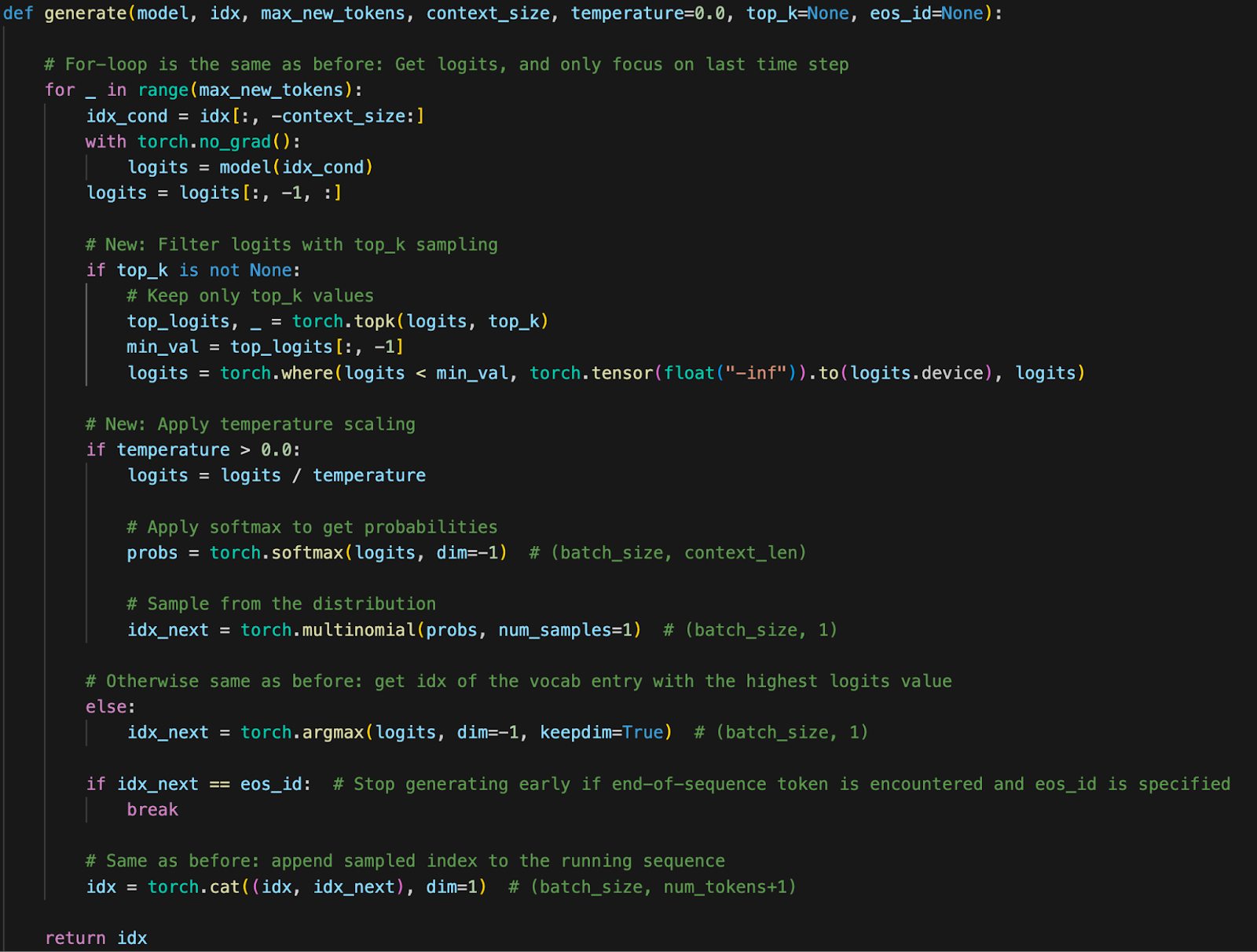

5.3.3 修改文本生成 Modifying Text Generation

现在我们结合温度缩放和top-k采样方法修改我们的文本生成函数

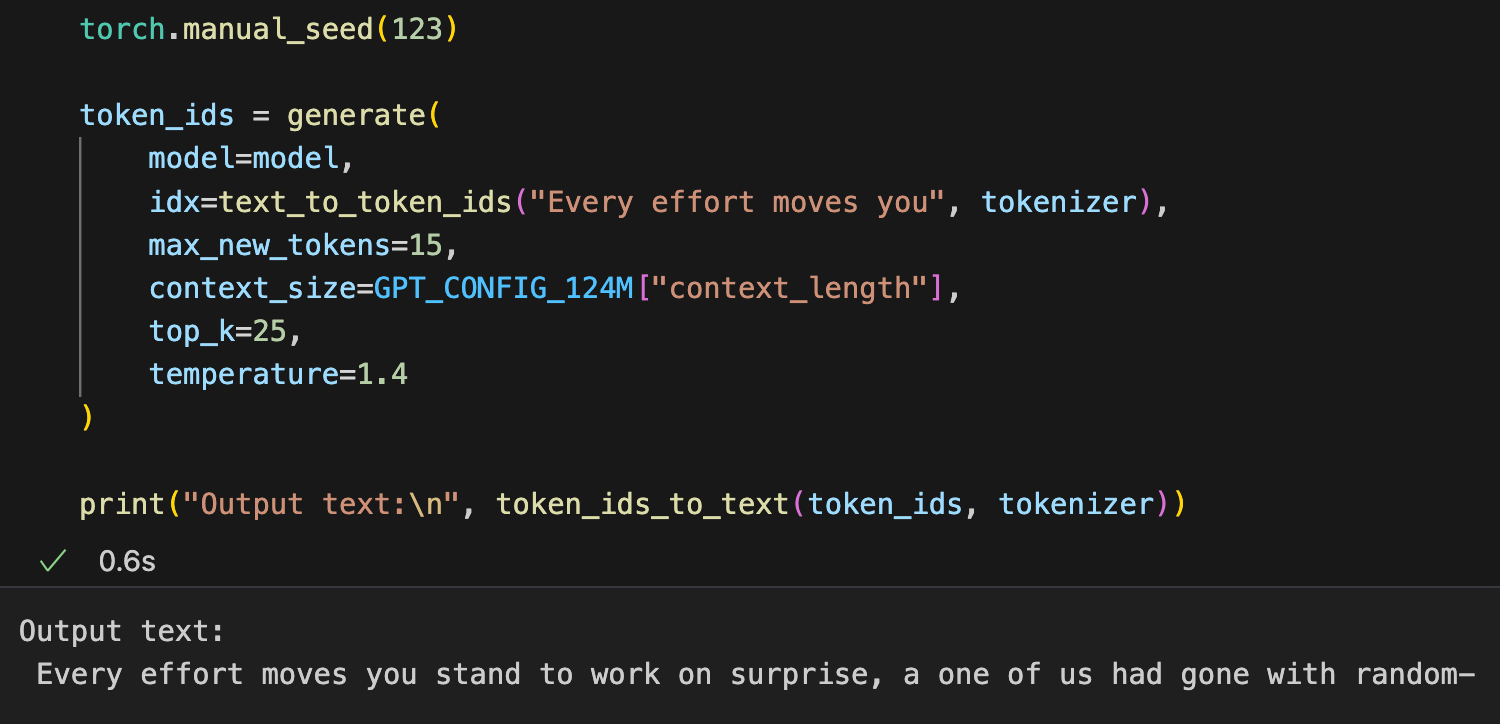

Now we combine temperature scaling and top-k sampling to modify our text generation function.

我们便可以通过设置温度值和k生成不同的输出

By setting the temperature value and K, we can generate different outputs.
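The combination can be sketched as a single function. The signature below (generate with temperature and top_k arguments) and the toy model are assumptions for illustration, not necessarily the exact code shown in the figure; a temperature of 0 is treated here as a switch back to greedy decoding.

```python
import torch
import torch.nn as nn


def generate(model, idx, max_new_tokens, context_size, temperature=0.0, top_k=None):
    for _ in range(max_new_tokens):
        idx_cond = idx[:, -context_size:]           # crop to the supported context
        with torch.no_grad():
            logits = model(idx_cond)[:, -1, :]      # logits of the last position

        if top_k is not None:
            top_logits, _ = torch.topk(logits, top_k)
            min_val = top_logits[:, -1:]            # smallest logit that is kept
            logits = torch.where(logits < min_val,
                                 torch.full_like(logits, float("-inf")), logits)

        if temperature > 0.0:
            probs = torch.softmax(logits / temperature, dim=-1)
            idx_next = torch.multinomial(probs, num_samples=1)    # sample
        else:
            idx_next = torch.argmax(logits, dim=-1, keepdim=True)  # greedy

        idx = torch.cat((idx, idx_next), dim=1)
    return idx


torch.manual_seed(123)
# Toy stand-in for the GPT model with a vocabulary of 30 tokens
toy_model = nn.Sequential(nn.Embedding(30, 16), nn.Linear(16, 30))
toy_model.eval()

start = torch.tensor([[1, 2, 3]])
greedy = generate(toy_model, start, 5, context_size=4)
sampled = generate(toy_model, start, 5, context_size=4, temperature=1.4, top_k=5)
print(greedy, sampled, sep="\n")
```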

5.4 Saving and Loading PyTorch Weights

Because training an LLM is expensive, we need to be able to save and load the model parameters. This lets us restore a model quickly or load open-source weights that someone else has already trained.

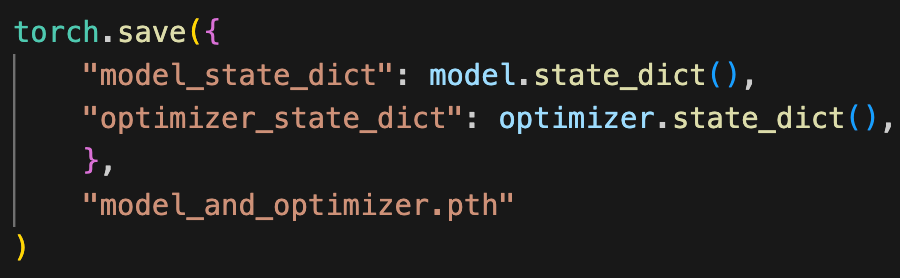

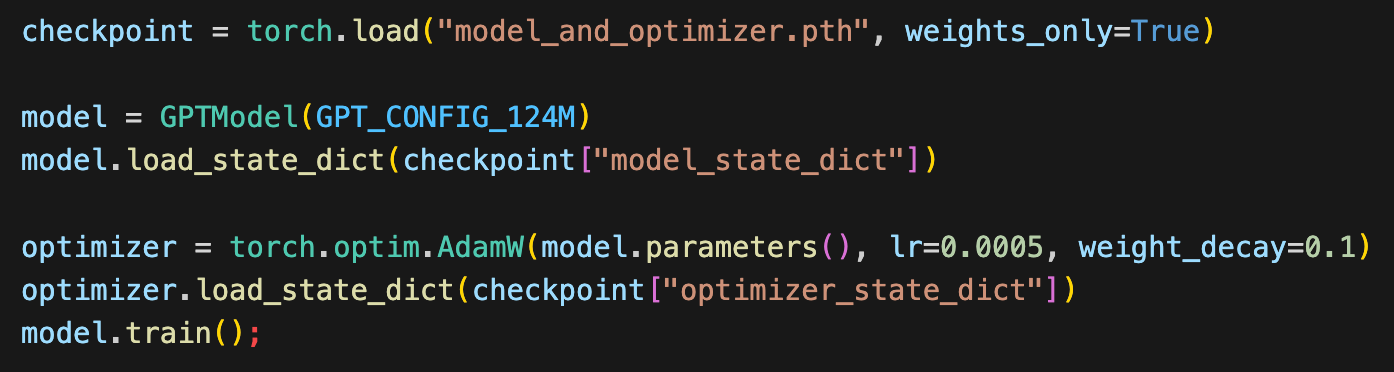

Saving a PyTorch model is straightforward: pass the model's state_dict to torch.save(). By convention, the file name uses the .pth suffix.

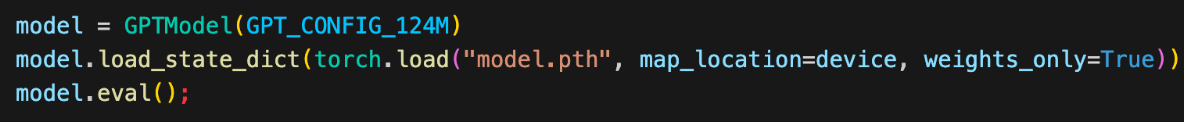

Afterwards, we restore the weights by passing the loaded state_dict to the model's load_state_dict() method.
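A round trip through torch.save and load_state_dict can be sketched as follows. The small model and the temporary file path are assumptions for illustration.

```python
import os
import tempfile

import torch
import torch.nn as nn


def make_model():
    # Toy stand-in for the GPT model; includes dropout to show the eval() switch
    return nn.Sequential(nn.Embedding(10, 4), nn.Dropout(0.1), nn.Linear(4, 10))


torch.manual_seed(123)
model = make_model()

# Save only the parameters (the state_dict), not the whole model object
path = os.path.join(tempfile.mkdtemp(), "model.pth")
torch.save(model.state_dict(), path)

# Restore into a freshly constructed model with the same architecture
restored = make_model()
restored.load_state_dict(torch.load(path, map_location="cpu"))
restored.eval()  # disable dropout for inference
```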

Notes:

Dropout in training and inference: dropout helps prevent overfitting during training but must be disabled during inference (switch to evaluation mode with model.eval()).

Why the optimizer state matters: adaptive optimizers such as AdamW keep historical statistics to adjust the learning rate dynamically. If this state is lost, the optimizer starts from scratch, which can degrade model performance or prevent convergence. It is therefore recommended to save the optimizer state along with the model (using torch.save).

We therefore save and load both the model weights and the optimizer state, each through its own state_dict.
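One possible way to keep both pieces together is a single checkpoint dictionary, sketched below. The dictionary keys, the file name, and the tiny linear model are assumptions for illustration.

```python
import os
import tempfile

import torch
import torch.nn as nn

torch.manual_seed(123)
model = nn.Linear(4, 2)  # toy stand-in for the GPT model
optimizer = torch.optim.AdamW(model.parameters(), lr=1e-3)

# Take one training step so that the optimizer has state worth saving
model(torch.randn(8, 4)).sum().backward()
optimizer.step()

path = os.path.join(tempfile.mkdtemp(), "model_and_optimizer.pth")
torch.save({
    "model_state_dict": model.state_dict(),
    "optimizer_state_dict": optimizer.state_dict(),
}, path)

# Later: rebuild both objects, then restore their states from the checkpoint
checkpoint = torch.load(path, map_location="cpu")
new_model = nn.Linear(4, 2)
new_model.load_state_dict(checkpoint["model_state_dict"])
new_optimizer = torch.optim.AdamW(new_model.parameters(), lr=1e-3)
new_optimizer.load_state_dict(checkpoint["optimizer_state_dict"])
new_model.train()  # back to training mode to continue training
```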

5.5 加载OpenAI的预训练权重 Loading OpenAI's Pre-trained Weights

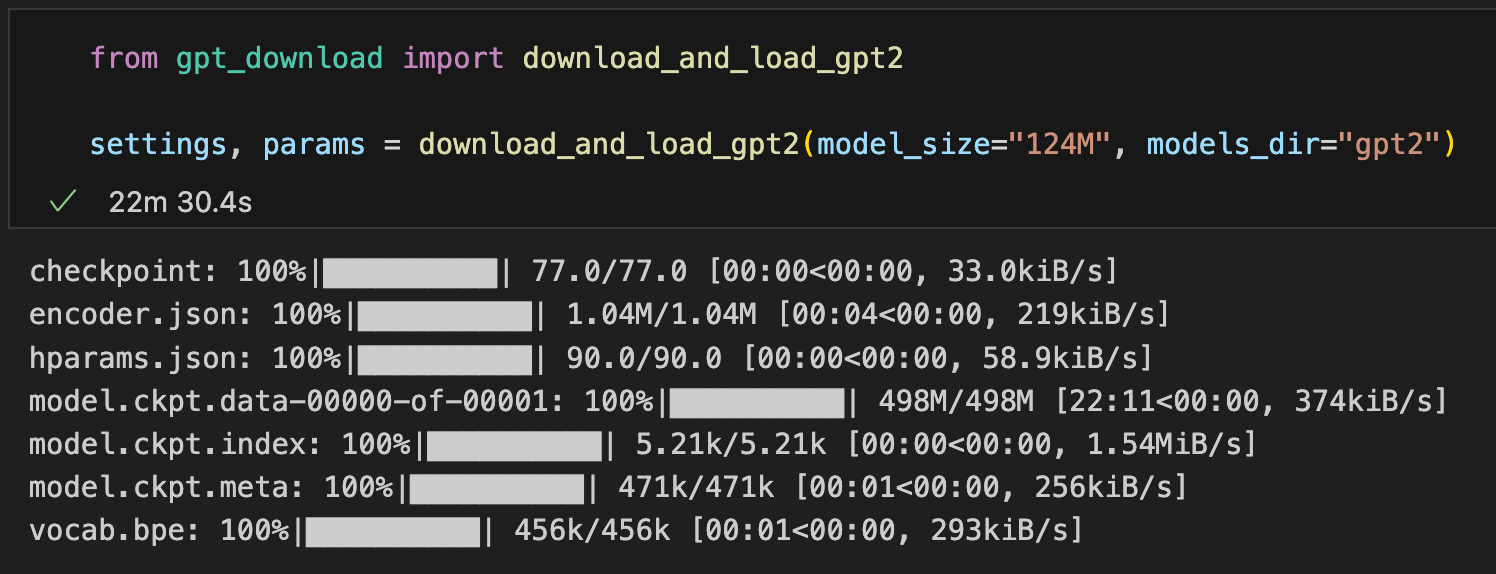

之前我们使用的是一个小文本集训练,以减少时间和计算成本;现在因为GPT2将训练权重做了开源,所以我们可以直接使用训练好的权重,而无需花费时间和计算成本

Previously, we used a small text dataset for training to reduce time and computational costs. Now, since GPT-2's trained weights have been open-sourced, we can directly use the pre-trained weights without spending additional time and computational resources.

由于GPT2算好的权重用的是TensorFlow写的,我们需要安装对应组件已进行加载,我们将下载GPT2架构配置(settings)文件和权重参数文件(params)

Because GPT-2's pre-trained weights were developed using TensorFlow, we need to install the corresponding components to load them. We will download the GPT-2 architecture configuration (settings) file and the weight parameters (params) file.

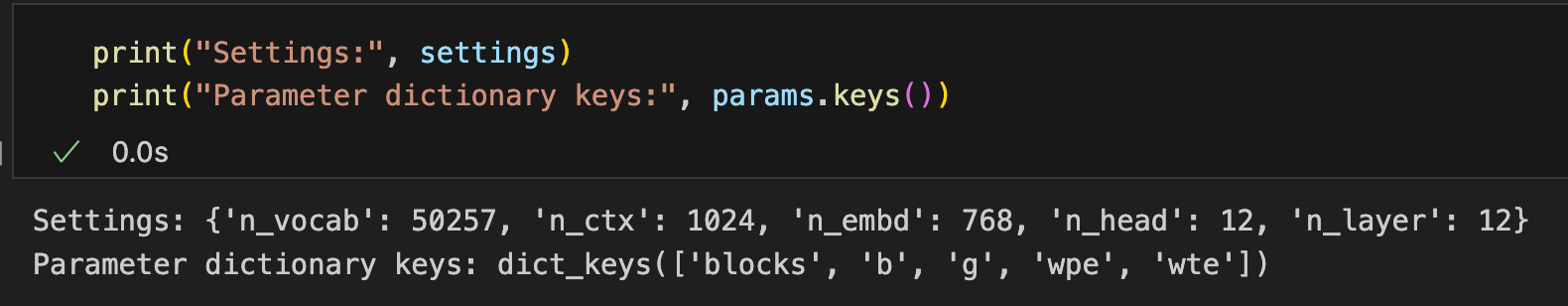

After the download finishes, we inspect the contents of both:

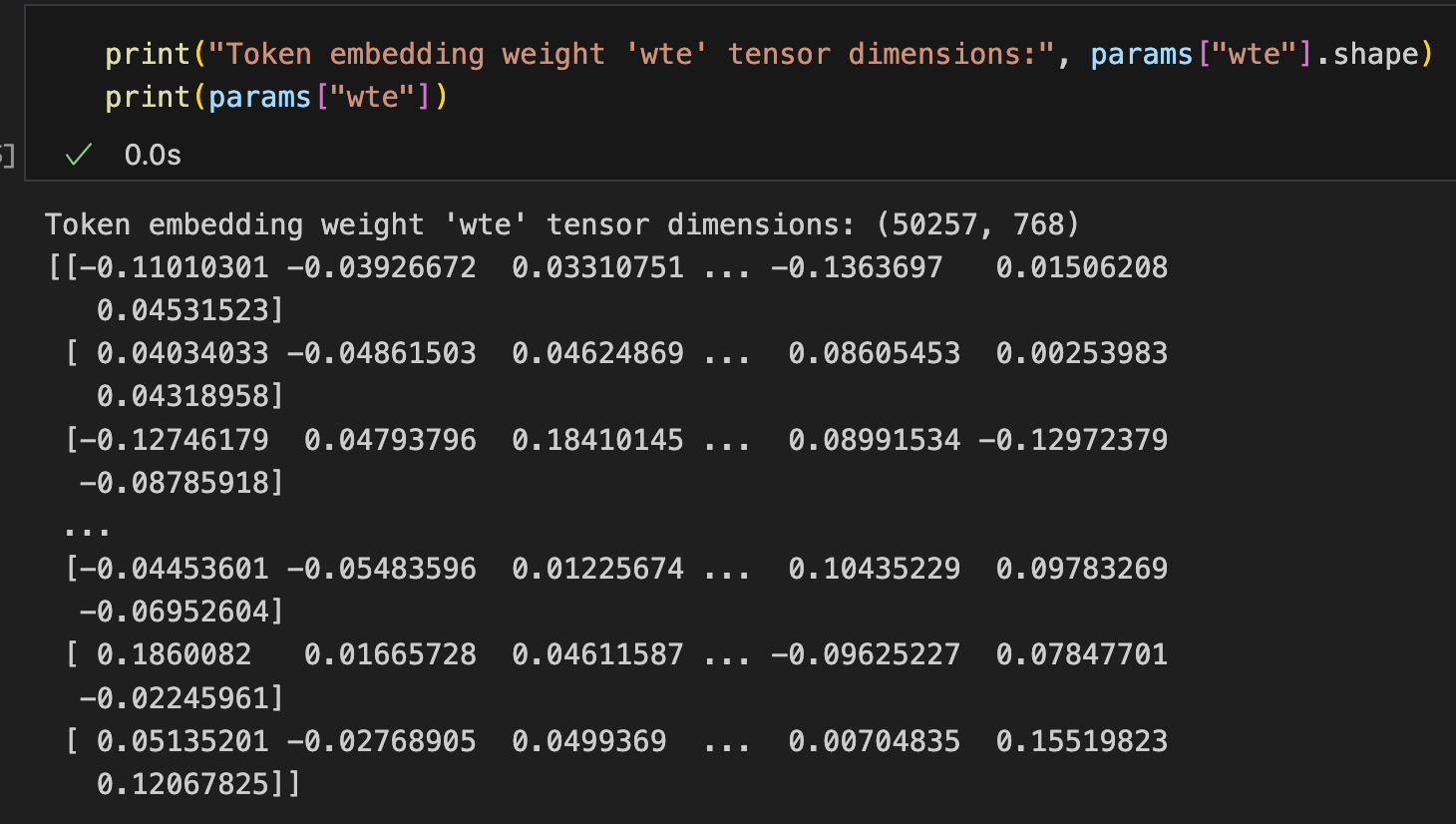

Both are Python dictionaries. settings is similar to our earlier GPT_CONFIG_124M configuration, while params holds the weights, which we can pull out and examine.

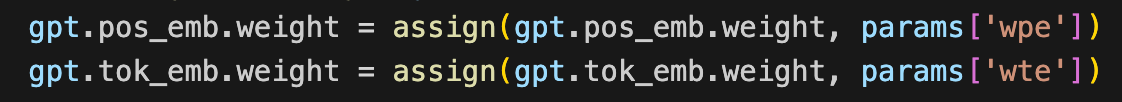

Next, we load the downloaded parameters into our model.

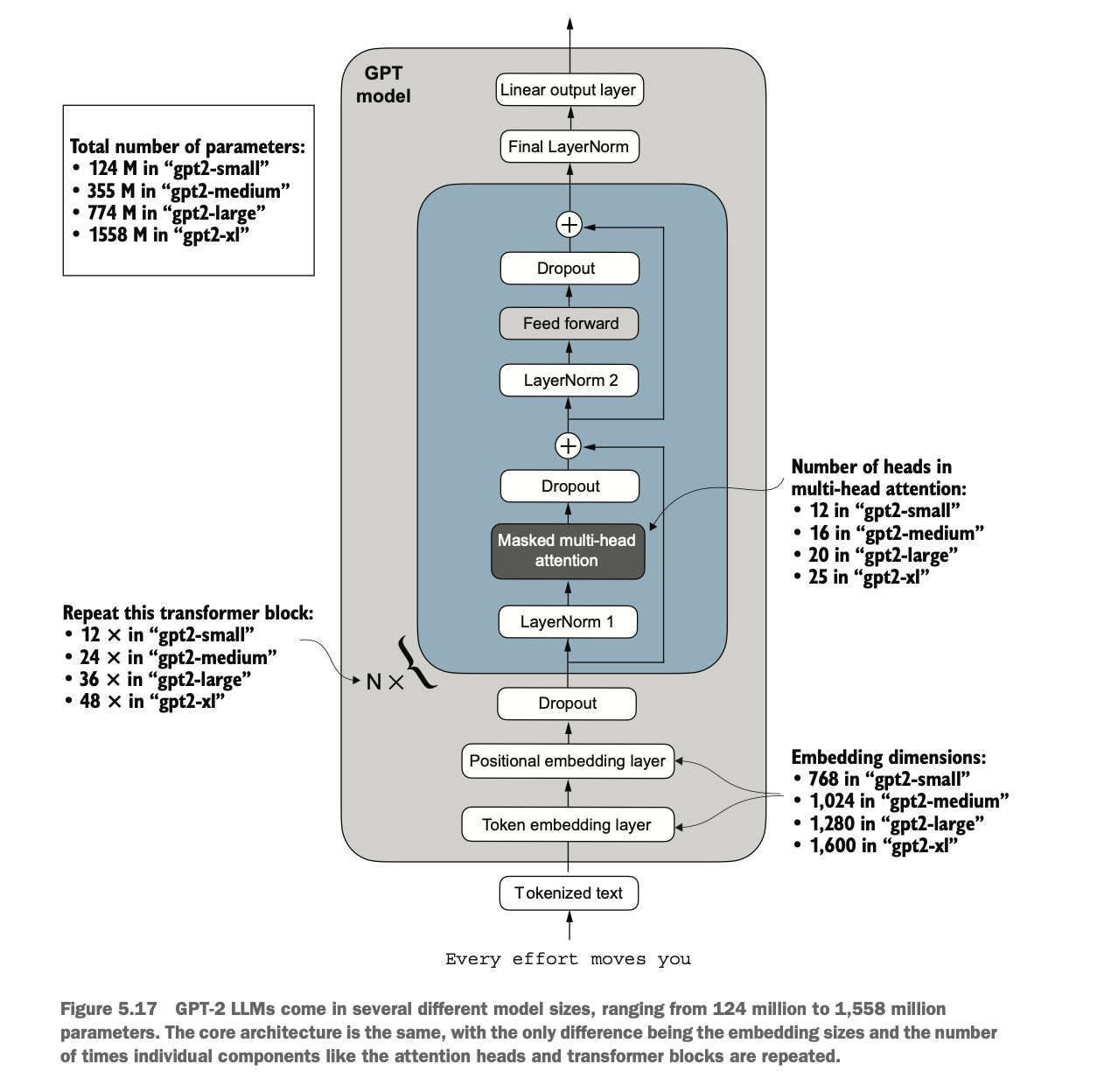

Note that the released GPT-2 weights come in several sizes: small, medium, large, and extra-large. They differ in parameter count, number of transformer blocks, number of attention heads, and embedding dimension, but the overall architecture is the same.

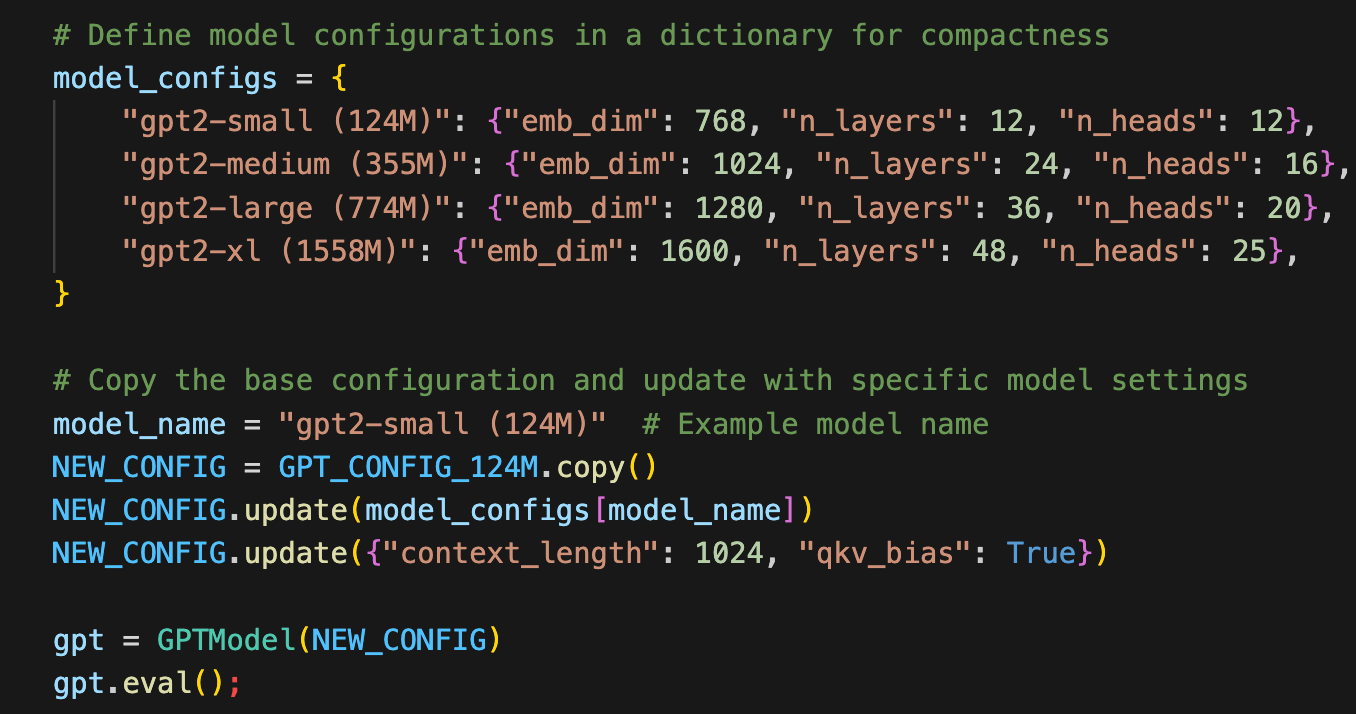

First, we set up the base configuration. To match the released GPT-2 weights, we need to adjust the qkv_bias setting and set context_length=1024.
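A sketch of how such a configuration could be assembled is shown below. The dictionary keys and the base values in GPT_CONFIG_124M (in particular the shorter context_length) are assumptions about how the earlier configuration was written, the build_config helper is hypothetical, and enabling qkv_bias reflects the fact that the released GPT-2 attention layers include bias terms.

```python
# Assumed base configuration from the earlier chapters (values are illustrative)
GPT_CONFIG_124M = {
    "vocab_size": 50257,
    "context_length": 256,   # assumed shorter context used for local training
    "emb_dim": 768,
    "n_heads": 12,
    "n_layers": 12,
    "drop_rate": 0.1,
    "qkv_bias": False,
}

# Size-specific settings of the four released GPT-2 models
model_configs = {
    "gpt2-small (124M)": {"emb_dim": 768, "n_layers": 12, "n_heads": 12},
    "gpt2-medium (355M)": {"emb_dim": 1024, "n_layers": 24, "n_heads": 16},
    "gpt2-large (774M)": {"emb_dim": 1280, "n_layers": 36, "n_heads": 20},
    "gpt2-xl (1558M)": {"emb_dim": 1600, "n_layers": 48, "n_heads": 25},
}


def build_config(model_name):
    """Hypothetical helper: copy the base config and align it with GPT-2."""
    config = dict(GPT_CONFIG_124M)          # copy, so the base stays untouched
    config.update(model_configs[model_name])
    config.update({"context_length": 1024, "qkv_bias": True})
    return config


NEW_CONFIG = build_config("gpt2-small (124M)")
print(NEW_CONFIG)
```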

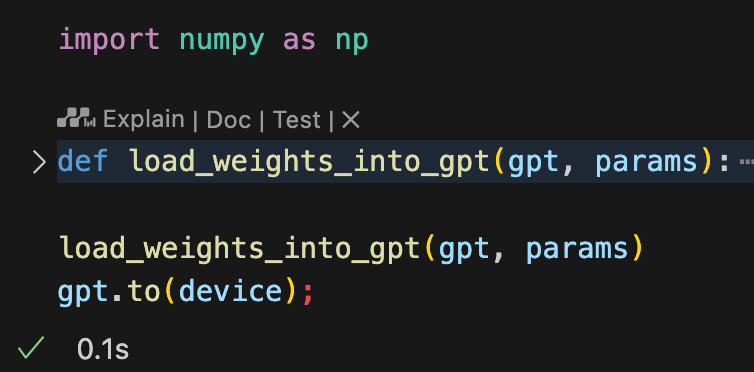

Then we load the weights themselves, mapping each GPT-2 tensor to the corresponding parameter in our model (working out this mapping takes some guesswork).
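Because the mapping involves guesswork, a shape check on every assignment helps catch mistakes early, for example a tensor mapped to the wrong layer. The assign helper below is a hypothetical sketch of that idea, and the weight values are made up.

```python
import torch
import torch.nn as nn


def assign(left, right):
    """Hypothetical helper: return `right` as a parameter if its shape matches `left`."""
    right = torch.as_tensor(right, dtype=left.dtype)
    if left.shape != right.shape:
        raise ValueError(f"Shape mismatch. Left: {left.shape}, Right: {right.shape}")
    return nn.Parameter(right.clone().detach())


layer = nn.Linear(3, 2)  # weight shape (2, 3), bias shape (2,)

# Made-up values standing in for a downloaded weight array
downloaded_weight = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]]
layer.weight = assign(layer.weight, downloaded_weight)

# A wrongly shaped array is rejected instead of silently corrupting the model
try:
    layer.bias = assign(layer.bias, [1.0, 2.0, 3.0])
    mismatch_detected = False
except ValueError:
    mismatch_detected = True
print(mismatch_detected)
```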

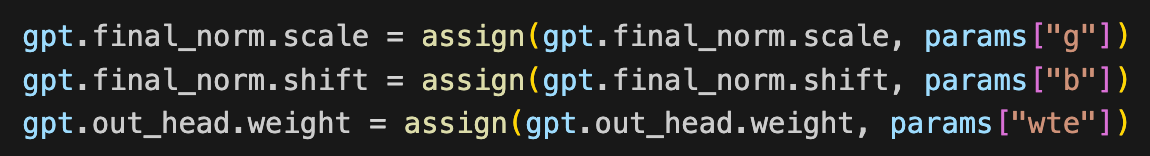

After loading, we use the model to generate text. It now produces coherent sentences, which indicates that the parameters were loaded correctly.

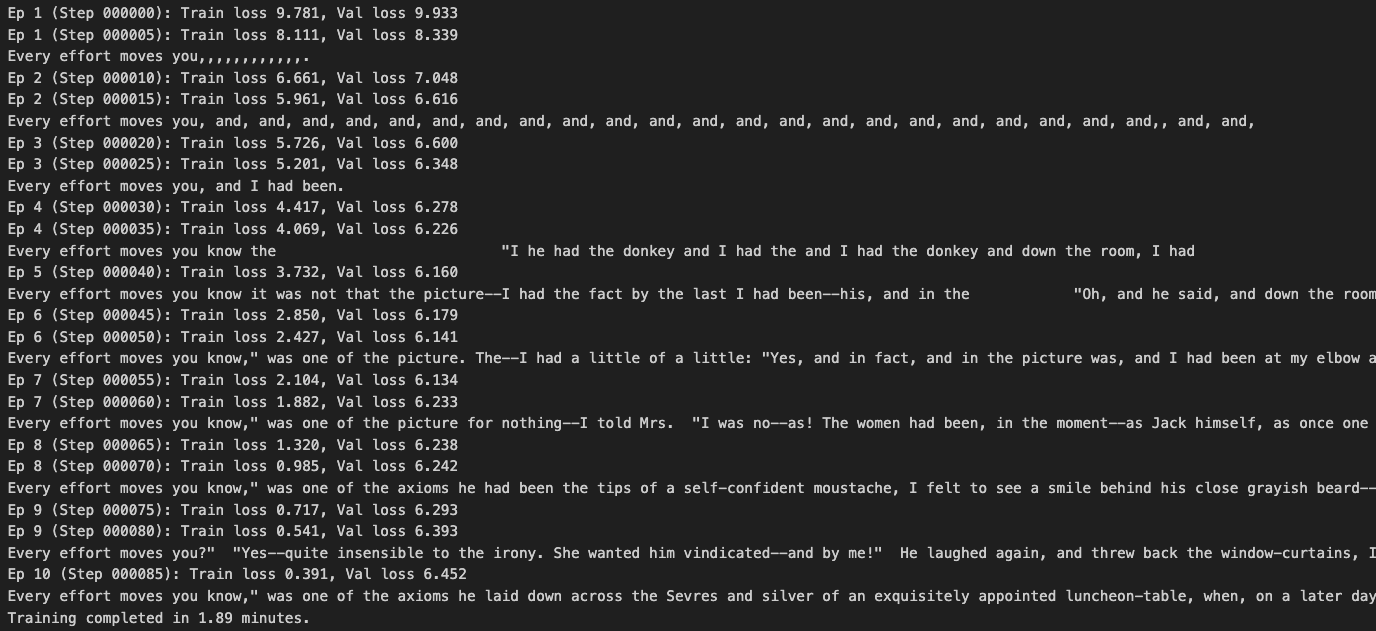

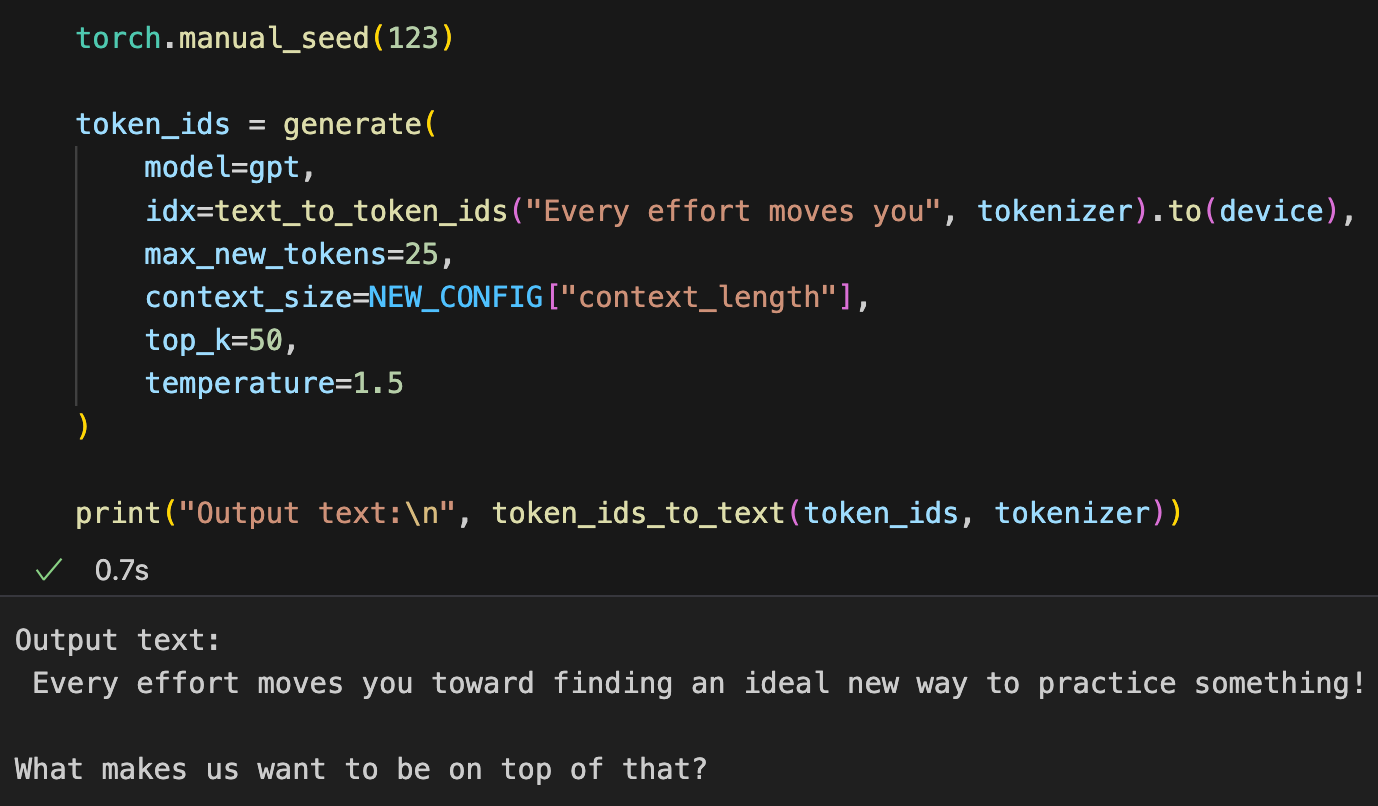

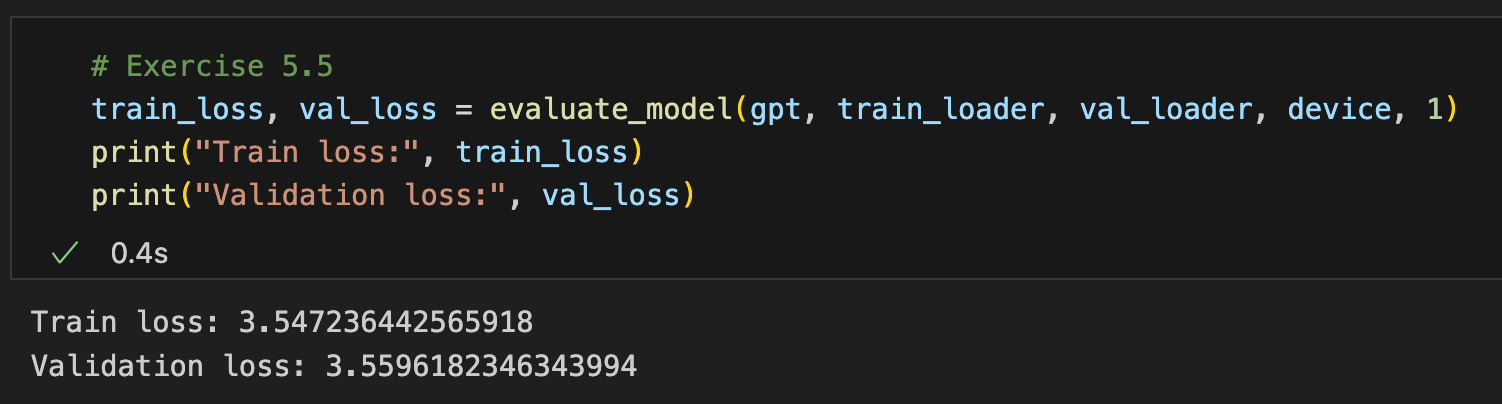

We then use the model with the loaded weights to recompute the loss on the verdict text from before. Both the training and validation losses are around 3.5, a substantial improvement over the validation loss of a little over 6 in section 5.2.

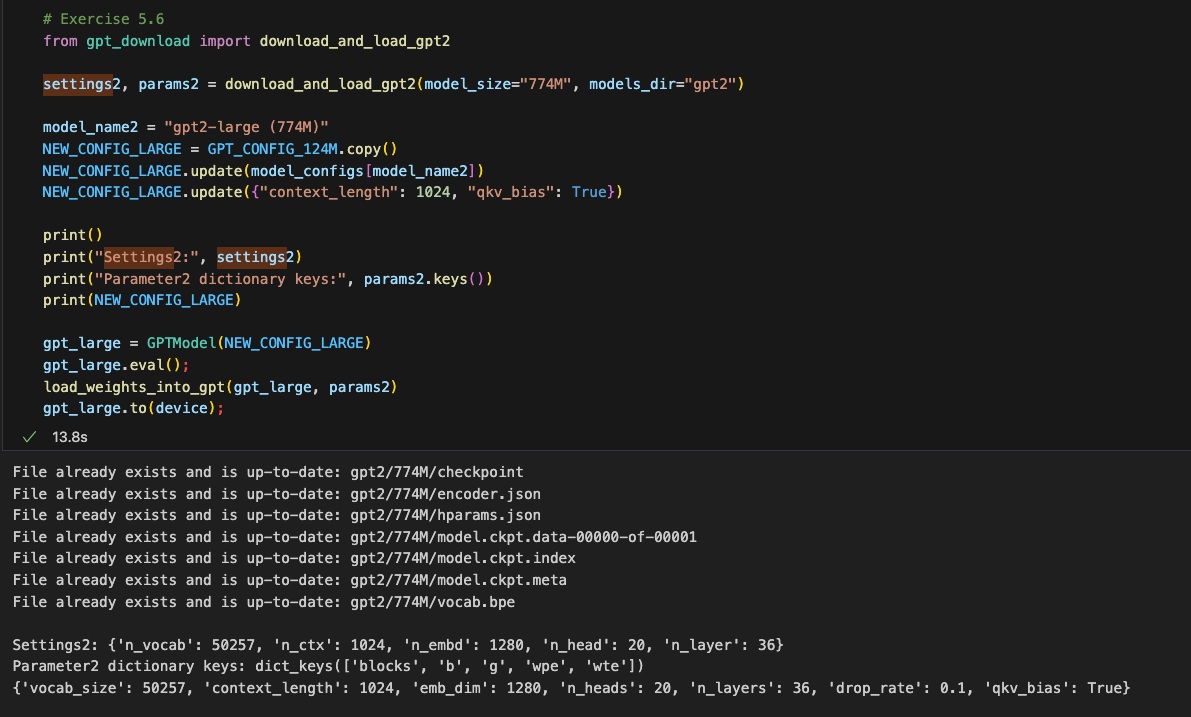

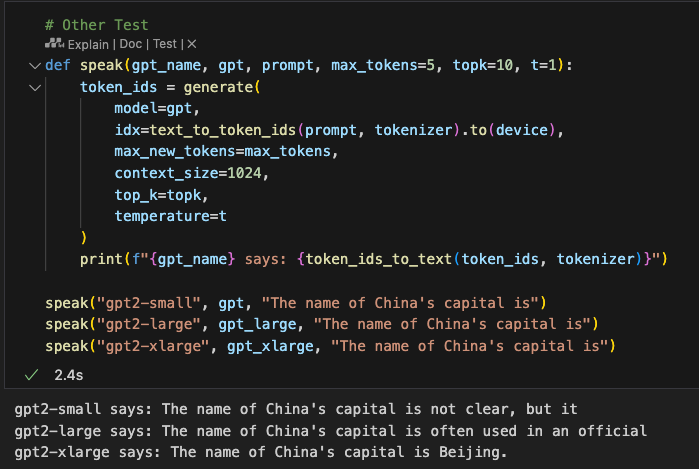

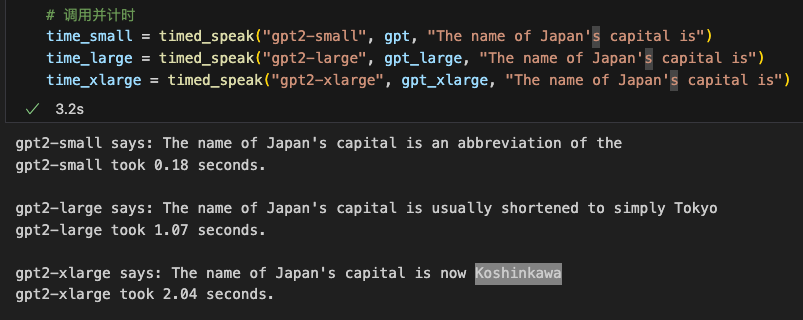

Next, we download and try the GPT-2 large model.

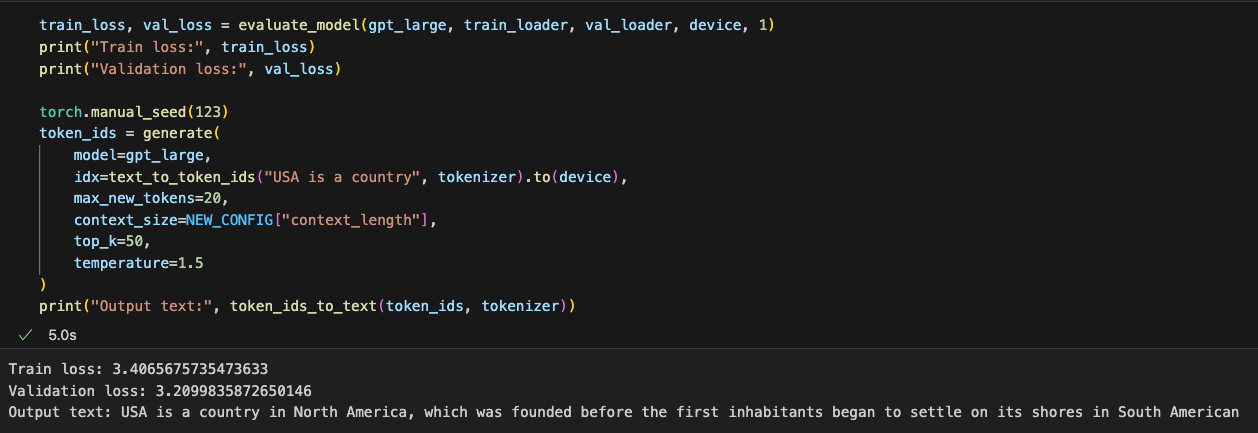

Generating text and computing the validation loss again, we find that the loss drops from 3.5 with GPT-2 small to 3.2.

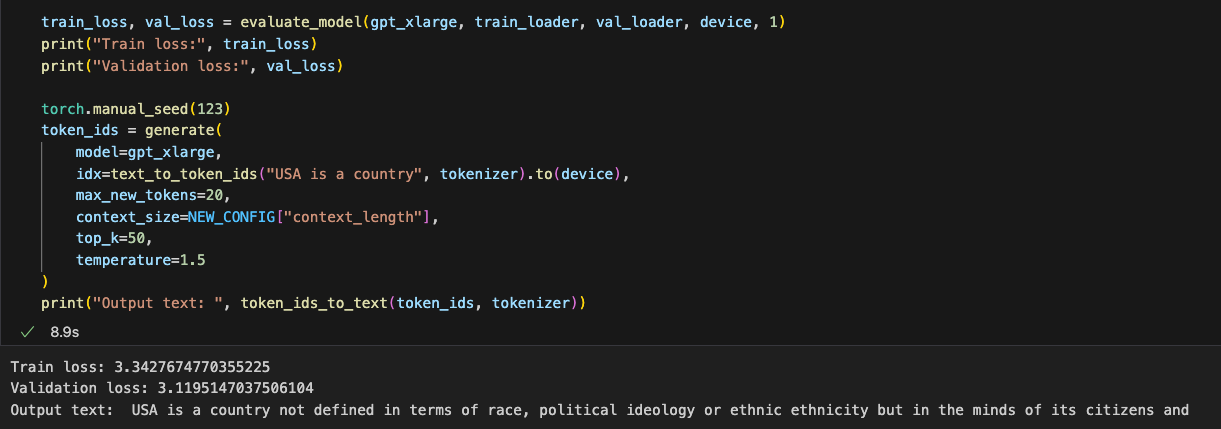

Finally, we download the largest GPT-2 model. It does better still: the validation loss drops to 3.1, and the generated text also appears more fluent.

In a few basic common-sense completion tests, larger models appear to give more reliable output, although they also take longer to run.

Summary

When an LLM generates text, it outputs one token at a time.

By default, the next token is chosen by converting the model's output into probability scores and selecting the token with the highest score from the vocabulary. This is called "greedy decoding."

Probabilistic sampling and temperature scaling let us influence the diversity and coherence of the generated text.

The training and validation losses can be used to evaluate the quality of the text an LLM generates during training.

Pre-training an LLM means adjusting its weights to minimize the training loss.

The training loop for an LLM is a standard deep learning procedure that uses the conventional cross-entropy loss and the AdamW optimizer.

Pre-training an LLM on a large text corpus is time-consuming and resource-intensive, so we can load publicly available weights as an alternative to pre-training the model on a large dataset ourselves.