Blog Content

Concept and Usage of LangChain4j

An introduction to the basic concepts of LangChain4j (its definition, features, and use cases), with example code showing how to use it and a comparison with Spring AI.

Introduction to LangChain4j

Definition

LangChain4j (https://docs.langchain4j.dev/) is an open-source framework designed for Java and the JVM ecosystem. It aims to make it easier for developers to integrate large language models (LLMs) such as OpenAI GPT, Azure OpenAI, ChatGLM, and Wenxin Yiyan (Baidu's ERNIE Bot) into Java applications.

Features

Java (JVM) oriented: Built for Java developers. Its API style fits mainstream Java frameworks and their ecosystems.

Multi-model support: Works with many mainstream LLMs and chat APIs (e.g., OpenAI, Azure OpenAI, ChatGLM, Baidu).

Chaining: Uses a “chain” design to combine multiple LLM calls, tool calls, retrieval, memory, and similar steps into complex AI applications.

Vector retrieval/knowledge bases: Integrates with many vector databases and supports RAG (Retrieval-Augmented Generation).

Tool integration: Supports function calling, agents, and plugin tools.

Easy to extend: Its modular, plugin-style architecture makes it straightforward to add new models and data sources.
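Function calling, listed above under tool integration, comes down to a dispatch step. The model replies with the name of a tool plus its arguments, the application runs the matching Java code, and the result is sent back to the model. The plain-Java sketch below covers only that dispatch step. The `ToolRequest` record, the tool names `add` and `toUpper`, and the registry map are hypothetical and are not LangChain4j's API. In LangChain4j you annotate methods with `@Tool` and the framework handles the dispatching.

```java
import java.util.List;
import java.util.Locale;
import java.util.Map;
import java.util.function.Function;

// Hypothetical shape of a tool request produced by the model: a tool name plus its arguments.
record ToolRequest(String name, List<String> args) {}

class ToolDispatchSketch {
    // Hypothetical tool registry: tool name -> Java function the application exposes to the model.
    static final Map<String, Function<List<String>, String>> TOOLS = Map.of(
            "add", args -> String.valueOf(Integer.parseInt(args.get(0)) + Integer.parseInt(args.get(1))),
            "toUpper", args -> args.get(0).toUpperCase(Locale.ROOT));

    // Executes the requested tool; the returned text would go back to the model as the tool result.
    static String execute(ToolRequest request) {
        Function<List<String>, String> tool = TOOLS.get(request.name());
        if (tool == null) {
            // Reporting the error as text lets the model recover instead of crashing the application.
            return "Error: unknown tool " + request.name();
        }
        return tool.apply(request.args());
    }

    public static void main(String[] argv) {
        System.out.println(execute(new ToolRequest("add", List.of("2", "3"))));       // 5
        System.out.println(execute(new ToolRequest("toUpper", List.of("mcp"))));     // MCP
        System.out.println(execute(new ToolRequest("weather", List.of("Beijing")))); // Error: unknown tool weather
    }
}
```

Returning an error string for unknown tools, instead of throwing an exception, mirrors the common practice of letting the model see what went wrong and try again.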

Application Scenarios

Building intelligent chatbots

Enterprise knowledge base retrieval (RAG)

Intelligent document summarization and generation

Automated text processing and decision support

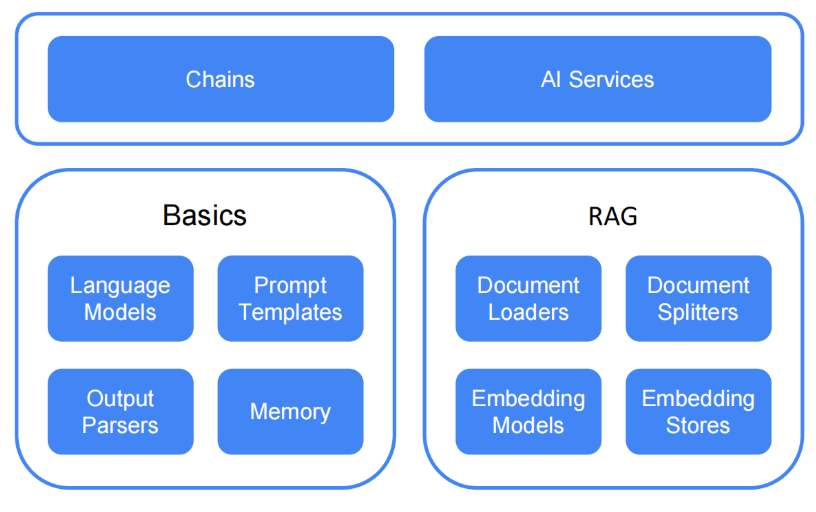

LangChain4j Library Structure

LangChain4j has a modular design, consisting of:

The `langchain4j-core` module, which defines the core abstractions and their APIs. Examples include `ChatModel` (called `ChatLanguageModel` before version 1.0) and `EmbeddingStore`.

The main `langchain4j` module, which contains useful tools such as document loaders and chat memory implementations. It also provides high-level features such as AI Services (`AiServices`).

A wide array of `langchain4j-{integration}` modules, each of which integrates a particular LLM provider or embedding store into LangChain4j. You can use a `langchain4j-{integration}` module on its own. For the other features, add the main `langchain4j` dependency.

Development Example 1 - Injecting a RAG-Enabled AI Service

The core of LangChain4j development is building an AI Service (`AiService`). The design works like this:

1. You declare a plain Java interface.
2. You attach the prompts, tools, and chat memory it should use through annotations and configuration.
3. The framework, or your own code, creates the implementation.

You can then call the AI model as conveniently as you would call an ordinary Spring service.
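You can illustrate the interface-plus-annotation idea with the JDK's dynamic proxies. A generated object implements your interface, reads the annotations on the called method, assembles a prompt, and forwards it to the model. The toy sketch below is only an illustration under that assumption, not LangChain4j's implementation. The `@SystemPrompt` annotation, the `TinyAiServices.create` helper, `DemoHelper`, and the echoing fake model are all hypothetical stand-ins. Their real counterparts in this post are `@SystemMessage` and `AiServices`.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Proxy;
import java.util.function.Function;

// Hypothetical annotation standing in for LangChain4j's @SystemMessage.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface SystemPrompt {
    String value();
}

// A user-declared service interface, analogous to JdHelper.
interface DemoHelper {
    @SystemPrompt("You are a helpful assistant.")
    String chat(String userMessage);
}

class TinyAiServices {
    // Creates an implementation of `type` whose methods send "system prompt + user message" to `model`.
    static <T> T create(Class<T> type, Function<String, String> model) {
        Object proxy = Proxy.newProxyInstance(
                type.getClassLoader(),
                new Class<?>[] {type},
                (self, method, args) -> {
                    if (method.getDeclaringClass() == Object.class) {
                        // equals/hashCode/toString: use identity semantics for the proxy itself.
                        if (method.getName().equals("equals")) return self == args[0];
                        if (method.getName().equals("hashCode")) return System.identityHashCode(self);
                        return type.getSimpleName() + "Proxy";
                    }
                    SystemPrompt prompt = method.getAnnotation(SystemPrompt.class);
                    String system = prompt == null ? "" : prompt.value();
                    String user = (String) args[0]; // the sketch assumes a single String parameter
                    return model.apply("[system] " + system + " [user] " + user);
                });
        return type.cast(proxy);
    }

    public static void main(String[] argv) {
        // Fake "model" that echoes its input, so the demo runs offline.
        DemoHelper helper = create(DemoHelper.class, p -> "echo: " + p);
        System.out.println(helper.chat("Hi"));
        // prints: echo: [system] You are a helpful assistant. [user] Hi
    }
}
```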

For example, the AiService below specifies the model, the chat memory provider, the tools, the RAG content retriever, and the system prompt:

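```java
import dev.langchain4j.service.SystemMessage;
import dev.langchain4j.service.spring.AiService;
import dev.langchain4j.service.spring.AiServiceWiringMode;

@AiService(
        wiringMode = AiServiceWiringMode.EXPLICIT,           // wire only the beans named below
        chatModel = "ChatGpt4oMini",                         // bean name of the chat model
        chatMemoryProvider = "mySeparateChatMemoryProvider", // bean providing per-conversation memory
        tools = "dateTool",                                  // bean containing @Tool methods
        contentRetriever = "jdContentRetriever"              // RAG retriever bean
)
public interface JdHelper {

    // System prompt: "You are a JD.com customer service bot that helps customers with their questions.
    // You speak only Cantonese; answer everything in Cantonese using Traditional Chinese characters."
    @SystemMessage("你是一个京东客服机器人,用于帮助客户回答相关问题,你只会说广东话,所有回答用粤语+繁体字")
    String chat(String userMessage);
}
```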
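The original does not show the `mySeparateChatMemoryProvider` bean referenced above. A chat memory provider keeps a separate conversation history for each memory id (for example, each user), usually trimmed to a window of recent messages. Below is a plain-Java sketch of that idea. `WindowedChatMemories` and its methods are hypothetical, not LangChain4j classes. In LangChain4j itself, the corresponding pieces are `ChatMemoryProvider` and `MessageWindowChatMemory`. The memory id is usually passed to the service method through a parameter annotated with `@MemoryId`.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical stand-in for a chat memory provider: one bounded message window per memory id.
class WindowedChatMemories {
    private final int maxMessages;
    private final Map<Object, Deque<String>> memories = new ConcurrentHashMap<>();

    WindowedChatMemories(int maxMessages) {
        this.maxMessages = maxMessages;
    }

    // Appends a message to the conversation for memoryId, evicting the oldest ones beyond the window.
    void add(Object memoryId, String message) {
        Deque<String> window = memories.computeIfAbsent(memoryId, id -> new ArrayDeque<>());
        synchronized (window) {
            window.addLast(message);
            while (window.size() > maxMessages) {
                window.removeFirst();
            }
        }
    }

    // Returns a snapshot of the messages that would be sent to the model for this memory id.
    List<String> messages(Object memoryId) {
        Deque<String> window = memories.get(memoryId);
        if (window == null) {
            return List.of();
        }
        synchronized (window) {
            return new ArrayList<>(window);
        }
    }

    public static void main(String[] args) {
        WindowedChatMemories memories = new WindowedChatMemories(2);
        memories.add("user-1", "Hello");
        memories.add("user-1", "What is my balance?");
        memories.add("user-1", "And my last order?");
        memories.add("user-2", "Hi");
        System.out.println(memories.messages("user-1")); // [What is my balance?, And my last order?]
        System.out.println(memories.messages("user-2")); // [Hi]
    }
}
```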

Next, configure the RAG content retriever that is injected as `jdContentRetriever` above. It specifies three things:

- the embedding model;
- the embedding store, which is configured separately (e.g., in a `PineconeEmbeddingStoreConfig` class);
- the retrieval parameters.

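```java
import dev.langchain4j.data.segment.TextSegment;
import dev.langchain4j.model.embedding.EmbeddingModel;
import dev.langchain4j.rag.content.retriever.ContentRetriever;
import dev.langchain4j.rag.content.retriever.EmbeddingStoreContentRetriever;
import dev.langchain4j.store.embedding.EmbeddingStore;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

@Configuration
public class AgentConfig {

    @Autowired
    private EmbeddingModel embeddingModel;              // turns text into vectors

    @Autowired
    private EmbeddingStore<TextSegment> embeddingStore; // vector store, e.g. defined in PineconeEmbeddingStoreConfig

    // The bean name matches contentRetriever = "jdContentRetriever" in @AiService
    @Bean
    ContentRetriever jdContentRetriever() {
        return EmbeddingStoreContentRetriever.builder()
                .embeddingModel(embeddingModel) // embeds the user question
                .embeddingStore(embeddingStore) // searches the stored segments
                .maxResults(1)                  // return at most the single best match
                .minScore(0.8)                  // ignore matches with a relevance score below 0.8
                .build();
    }
}
```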
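Here is how `maxResults(1)` and `minScore(0.8)` work together. The retriever embeds the question, scores stored segments by similarity, drops anything that scores below `minScore`, and keeps at most `maxResults` of the best matches. The sketch below reproduces only that filtering logic in plain Java, using cosine similarity on toy 3-dimensional vectors. The `StoredSegment` record and the vectors are made up for illustration. Real embedding stores such as Pinecone may compute or normalize scores differently.

```java
import java.util.Comparator;
import java.util.List;

// Hypothetical stored chunk: its text plus a (toy) embedding vector.
record StoredSegment(String text, double[] vector) {}

class RetrievalSketch {
    // Cosine similarity between two equal-length vectors.
    static double cosine(double[] a, double[] b) {
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    // Scores every segment, drops those below minScore, and keeps the best maxResults texts.
    static List<String> retrieve(double[] query, List<StoredSegment> store, int maxResults, double minScore) {
        return store.stream()
                .filter(s -> cosine(query, s.vector()) >= minScore)
                .sorted(Comparator.comparingDouble((StoredSegment s) -> cosine(query, s.vector())).reversed())
                .limit(maxResults)
                .map(StoredSegment::text)
                .toList();
    }

    public static void main(String[] args) {
        List<StoredSegment> store = List.of(
                new StoredSegment("Withdrawals arrive within 2 hours", new double[] {0.9, 0.1, 0.0}),
                new StoredSegment("Withdrawals may take up to 1 day", new double[] {0.8, 0.3, 0.1}),
                new StoredSegment("How to change your password", new double[] {0.0, 0.2, 0.9}));
        double[] query = {1.0, 0.2, 0.0};
        System.out.println(retrieve(query, store, 1, 0.8)); // [Withdrawals arrive within 2 hours]
    }
}
```

With `maxResults(1)`, only the single closest segment reaches the prompt even though two segments clear the 0.8 threshold. That keeps the context small, but it can drop useful secondary matches.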

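Then inject the service and call it like any other Spring bean:

```java
import org.junit.jupiter.api.Test;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.test.context.SpringBootTest;

@SpringBootTest
class JdHelperTest { // test class name is illustrative

    @Autowired
    private JdHelper jdHelper;

    @Test
    public void testAISearch() {
        // "If I withdraw my balance today, what is the latest time the money will arrive? Please give a specific time."
        String question = "今天余额提现,最晚到账时间是多久?请给出具体时间";
        String answer = jdHelper.chat(question);
        System.out.println(answer);
    }
}
```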

Development Example 2 - Manually Configuring an AI Service with MCP Tools

First, build the MCP tools manually, here for a 12306 MCP server (12306 is China's railway ticketing service). Expose them as a `ToolProvider`, which is used to initialize the AiService that follows.

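```java
import dev.langchain4j.mcp.McpToolProvider;
import dev.langchain4j.mcp.client.DefaultMcpClient;
import dev.langchain4j.mcp.client.McpClient;
import dev.langchain4j.mcp.client.transport.McpTransport;
import dev.langchain4j.mcp.client.transport.stdio.StdioMcpTransport;
import dev.langchain4j.service.tool.ToolProvider;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

import java.time.Duration;
import java.util.List;
import java.util.Map;

@Configuration
public class McpToolProviderConfig {

    @Bean(name = "toolProvider_12306")
    public ToolProvider toolProvider() {
        // 1. Build the MCP transport; this example uses stdio, launching the server as a local process
        McpTransport mcp12306 = new StdioMcpTransport.Builder()
                .command(List.of("npx", "-y", "12306-mcp"))
                .environment(Map.of("KEY", "VALUE")) // placeholder environment variables for the server process
                .build();

        // To connect to an MCP server over HTTP instead, use the transport below,
        // which supports both regular and SSE (streamed) responses:
        // McpTransport transport = new StreamableHttpMcpTransport.Builder()
        //         .url("http://localhost:3001/mcp")
        //         .logRequests(true)
        //         .logResponses(true)
        //         .build();

        // 2. Create the MCP client that connects to the server over this transport
        McpClient mcpClient = new DefaultMcpClient.Builder()
                .transport(mcp12306)
                .toolExecutionTimeout(Duration.ofSeconds(10))
                .build();

        // 3. Create the tool provider; it can aggregate several MCP clients
        return McpToolProvider.builder()
                .mcpClients(mcpClient)
                .build();
    }
}
```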
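With the stdio transport, the MCP client starts the configured command (`npx -y 12306-mcp`) as a child process. It then talks to that process over stdin and stdout using JSON-RPC 2.0 messages, one per line. `DefaultMcpClient` handles this exchange for you. As a rough illustration of what goes over the pipe, the sketch below builds a `tools/call` request line by hand. The tool name `get-tickets` and its arguments are hypothetical; the 12306 server does not necessarily expose them. A real client also performs the `initialize` handshake first.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.stream.Collectors;

class McpStdioSketch {
    // Minimal JSON string escaping: quotes, backslashes, newlines, and other control characters.
    static String quote(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"' -> sb.append("\\\"");
                case '\\' -> sb.append("\\\\");
                case '\n' -> sb.append("\\n");
                default -> {
                    if (c < 0x20) sb.append(String.format("\\u%04x", (int) c));
                    else sb.append(c);
                }
            }
        }
        return sb.append('"').toString();
    }

    // Builds one newline-terminated JSON-RPC 2.0 "tools/call" request with string-valued arguments.
    static String toolsCallLine(int id, String toolName, Map<String, String> arguments) {
        String args = arguments.entrySet().stream()
                .map(e -> quote(e.getKey()) + ":" + quote(e.getValue()))
                .collect(Collectors.joining(",", "{", "}"));
        return "{\"jsonrpc\":\"2.0\",\"id\":" + id
                + ",\"method\":\"tools/call\",\"params\":{\"name\":" + quote(toolName)
                + ",\"arguments\":" + args + "}}\n";
    }

    public static void main(String[] argv) {
        Map<String, String> args = new LinkedHashMap<>(); // keeps argument order stable
        args.put("date", "2025-01-01");
        args.put("from", "Beijing");
        System.out.print(toolsCallLine(1, "get-tickets", args));
    }
}
```

Escaping newlines inside strings matters here: the stdio transport uses the newline as the message delimiter, so a raw newline inside a message would split it in two.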

Then configure the AiService manually. Because this setup is more involved, it is not declared through annotation-based injection:

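```java
// McpAssistantAuto.java
import reactor.core.publisher.Flux;

// @AiService has no toolProvider attribute, so the service is instantiated in a custom @Configuration class.
// Returning Flux<String> (token streaming) requires the langchain4j-reactor module on the classpath.
public interface McpAssistantAuto {
    Flux<String> chat(String message);
}

// McpAssistantAutoConfig.java
import dev.langchain4j.model.openai.OpenAiStreamingChatModel;
import dev.langchain4j.service.AiServices;
import dev.langchain4j.service.tool.ToolProvider;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.beans.factory.annotation.Qualifier;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;

@Configuration
public class McpAssistantAutoConfig {

    @Autowired
    private OpenAiStreamingChatModel openAiStreamingChatModel;

    @Autowired
    @Qualifier("toolProvider_12306") // the ToolProvider bean defined in McpToolProviderConfig
    private ToolProvider toolProvider_12306;

    @Bean(name = "mcpAssistantAuto_12306")
    public McpAssistantAuto mcpAssistantAuto() {
        return AiServices.builder(McpAssistantAuto.class)
                .streamingChatModel(openAiStreamingChatModel) // streaming model, so chat() can return Flux<String>
                .toolProvider(toolProvider_12306)             // exposes the 12306 MCP tools to the model
                .build();
    }
}
```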

Inject the AiService and test it:

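```java
import org.junit.jupiter.api.Test;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.beans.factory.annotation.Qualifier;
import org.springframework.boot.test.context.SpringBootTest;

@SpringBootTest
public class McpAutoTest {

    @Autowired
    @Qualifier("mcpAssistantAuto_12306")
    private McpAssistantAuto mcpAssistantAuto_12306;

    @Test
    public void createStdioMcp() {
        StringBuffer sb = new StringBuffer();
        mcpAssistantAuto_12306.chat("你会什么功能?") // "What can you do?"
                .doOnNext(sb::append)               // append each streamed chunk
                .blockLast();                       // block until the stream completes
        System.out.println(sb);
    }
}
```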
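The test above collects the streamed chunks of a `Flux<String>` into one string and blocks until the stream completes. You can try the same collect-until-complete pattern without Reactor or a live model by letting the JDK's `java.util.concurrent.SubmissionPublisher` stand in for the token stream. The chunks below are made up. The code is only an analogy to what `doOnNext(...).blockLast()` does, not LangChain4j code.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.SubmissionPublisher;

class StreamCollectSketch {
    // Publishes the chunks as an asynchronous stream and gathers them into one string.
    static String collect(List<String> chunks) {
        // StringBuffer is synchronized; the consumer runs on the publisher's executor thread.
        StringBuffer sb = new StringBuffer();
        SubmissionPublisher<String> publisher = new SubmissionPublisher<>();
        // Subscribe before publishing; comparable to doOnNext(sb::append).
        CompletableFuture<Void> done = publisher.consume(sb::append);
        chunks.forEach(publisher::submit); // emit the chunks in order
        publisher.close();                 // signal completion after the submitted chunks
        done.join();                       // comparable to blockLast(): wait until the stream completes
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(collect(List.of("I can ", "query ", "train tickets.")));
        // prints: I can query train tickets.
    }
}
```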

LangChain4j vs. Spring AI

LangChain4j and Spring AI are both popular Java frameworks for integrating large language models (such as OpenAI GPT and Azure OpenAI) and building intelligent applications. They have much in common, but each has its own design philosophy and suits different scenarios.

In summary:

LangChain4j focuses on chain-style AI application development, favoring flexible composition and complex intelligent workflows. Inspired by the Python LangChain project, it emphasizes RAG, agents, and tool calling.

Spring AI is the Spring ecosystem's framework for building AI clients, and it aims for seamless Spring Boot integration. It follows the conventions of Spring projects such as Spring Data and Spring Cloud, emphasizing configuration, dependency injection, and ease of use.