Blog Content

Principles of Recurrent Neural Networks (RNNs)

An introduction to the principles of recurrent neural networks (RNNs) and word embeddings, followed by a practical example: sentiment analysis of movie reviews.

The Principles of Recurrent Neural Networks (RNNs)

Recurrent neural networks (RNNs) and convolutional neural networks (CNNs) are two of the most prominent architectures in deep learning. CNNs are mainly applied in computer vision, while RNNs are widely used in natural language processing (NLP). This section introduces the basic principles of RNNs.

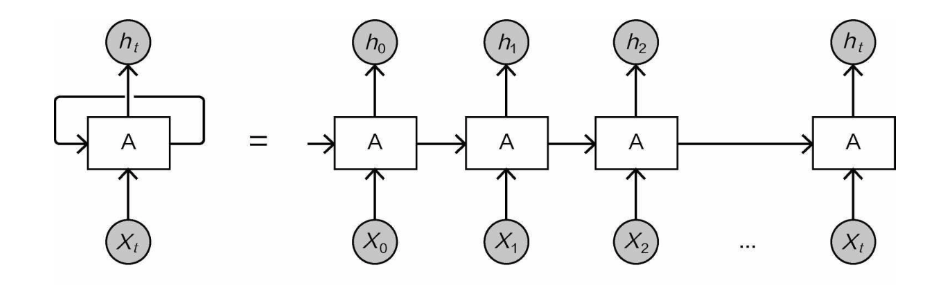

RNNs are used very widely: almost any task connected to natural language processing involves them in some form. Let's first look at the overall architecture, as shown in the figure:

An RNN is easy to understand because it does only one thing more than a traditional neural network: it passes the information obtained from each input on to the next input. Imagine working with time-series data. A traditional network would process the inputs one after another without considering how they relate to each other. An RNN, by contrast, takes the input at the current time step and also retains information from previous time steps, forming a recurrent structure. This recurrence lets the network refer to earlier states while processing the current input. The idea has a lot in common with stateful stream processing: both maintain a state, and both let historical information influence the current computation.

For example, suppose the whole operation needs two fully connected layers to produce the final result. In an RNN, the features produced by the first fully connected layer are saved separately. When the next input sample is processed, the input consists of the current sample plus the intermediate features from the previous step, so the network considers both the current input and the previous intermediate result. In this way the temporal relationships of the sequence are built into the network structure.

As shown in the figure, you can think of the input data as a sentence in which X₀, X₁, ..., Xₜ are the individual words. The task is classification: deciding whether the sentiment of the sentence is positive or negative. First, X₀ is fed into the network, which produces the current output h₀ as well as an intermediate feature. Next, X₁ is fed in together with the intermediate feature from the X₀ step, and so on. Finally the last word, Xₜ, is fed in and combined with the intermediate feature from the previous step. That feature reflects more than Xₜ₋₁ alone, because Xₜ₋₁ already carries the features of Xₜ₋₂, and so on back to the start, so it effectively summarizes everything seen so far. The final output hₜ is the classification result we want.

In an RNN, every step before the last one saves its intermediate result for later steps to use. Each step also produces its own output, but the outputs of the intermediate steps are usually of little use, because not all of the input has been read yet. The output of the final step is normally taken as the output of the whole model, since it takes all of the preceding information into account.
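
The step-by-step process described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the exact network in the figure: the update rule `tanh(W_x x + W_h h + b)` is the common textbook form of a simple RNN, and the names `rnn_forward`, `W_x`, `W_h`, `b` and all sizes are assumptions made for the example.

```python
import numpy as np

def rnn_forward(inputs, W_x, W_h, b):
    """Run a simple RNN over a sequence and return the hidden state of every step."""
    hidden = np.zeros(W_h.shape[0])      # initial state: nothing has been seen yet
    states = []
    for x_t in inputs:                   # one word vector per time step
        # Combine the current input with the state carried over from the previous step
        hidden = np.tanh(W_x @ x_t + W_h @ hidden + b)
        states.append(hidden)
    return np.stack(states)

rng = np.random.default_rng(0)
seq_len, input_dim, hidden_dim = 5, 4, 3          # illustrative sizes
inputs = rng.normal(size=(seq_len, input_dim))    # random stand-in for word vectors
W_x = rng.normal(size=(hidden_dim, input_dim)) * 0.1
W_h = rng.normal(size=(hidden_dim, hidden_dim)) * 0.1
b = np.zeros(hidden_dim)

states = rnn_forward(inputs, W_x, W_h, b)
final_state = states[-1]     # the state a classification layer would consume
print(states.shape)          # (5, 3)
```

Only `final_state` would be passed on to a classifier, which mirrors the point that the last step's output is the one that has seen the whole sequence.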

RNNs look very powerful, but do they have any problems? When a sentence is very long, meaning the input sequence X has many elements, the final input has to take all the earlier intermediate features into account. Keep in mind that in language and text, words that are closer together are usually more strongly related.

For example: "Today I stayed at home all day, mostly playing games, and in the evening I still had nothing to do, so I'm getting ready to go out and play ball." The final phrase, "play ball", is closely related to "nothing to do in the evening" and has little to do with playing games earlier in the day. An RNN, however, takes all of that unrelated information into account. Real NLP tasks show the same pattern: the most relevant content tends to be nearby, and if the network remembers too much in between, the performance of the overall model suffers.

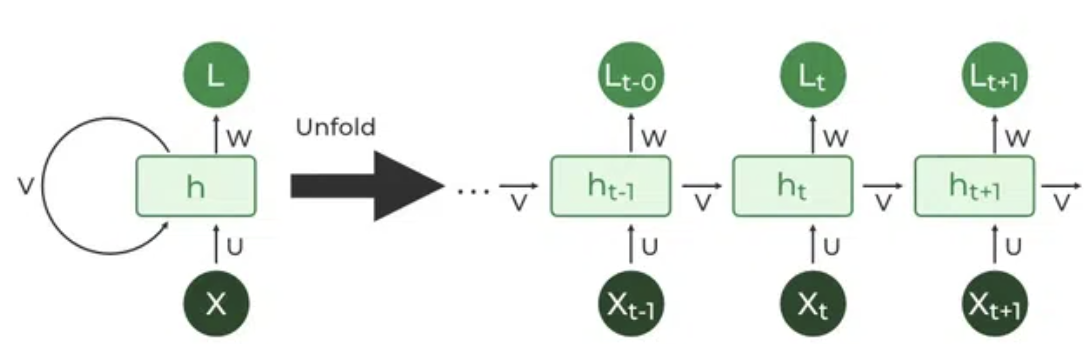

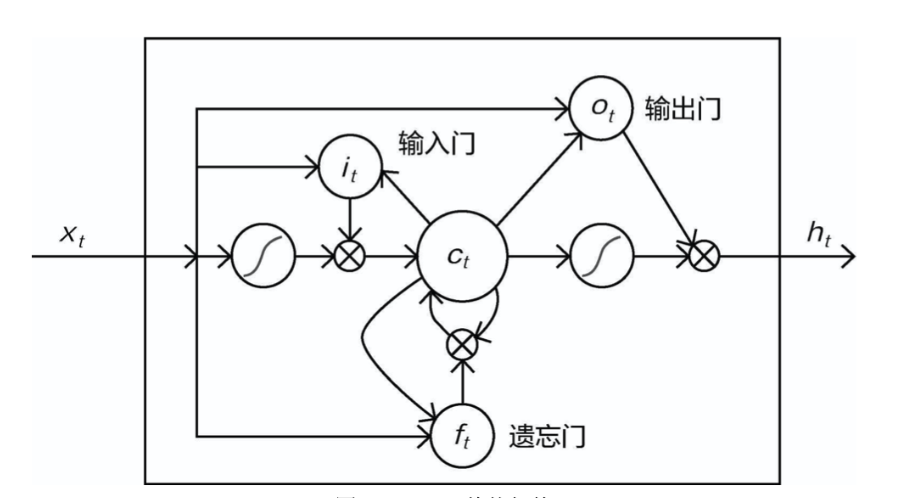

The best remedy is to let the network remember or forget content selectively: important information should be remembered more firmly, and information of little value can be dropped. This is what the widely used Long Short-Term Memory (LSTM) unit does. It adds control units on top of the RNN so that parts of the intermediate results can be selectively kept or forgotten. Its overall architecture is shown in the figure:

The main components of an LSTM are the input gate, the output gate, the forget gate, and a memory cell C. In short, a continuously maintained and updated state Cₜ controls which information is remembered or forgotten at each step. If a sequence is long, the relevant content is kept and the unhelpful descriptions are simply forgotten. An LSTM processes a problem in much the same way as an RNN overall; the difference is that each step adds this selective-memory mechanism.
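
To make the gates concrete, here is a minimal NumPy sketch of a single LSTM step. It follows the standard LSTM formulation, which may differ in detail from the figure, and the names `lstm_step`, `W`, `b` and the sizes are assumptions for illustration only.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM time step; W maps [h_prev, x_t] to four stacked gate pre-activations."""
    n = h_prev.shape[0]
    z = W @ np.concatenate([h_prev, x_t]) + b
    f = sigmoid(z[0 * n:1 * n])    # forget gate: how much old memory to keep
    i = sigmoid(z[1 * n:2 * n])    # input gate: how much new content to write
    o = sigmoid(z[2 * n:3 * n])    # output gate: how much memory to expose
    g = np.tanh(z[3 * n:4 * n])    # candidate memory content
    c_t = f * c_prev + i * g       # updated memory cell C_t
    h_t = o * np.tanh(c_t)         # output of this step
    return h_t, c_t

rng = np.random.default_rng(0)
input_dim, hidden_dim = 4, 3       # illustrative sizes
W = rng.normal(size=(4 * hidden_dim, hidden_dim + input_dim)) * 0.1
b = np.zeros(4 * hidden_dim)

h, c = np.zeros(hidden_dim), np.zeros(hidden_dim)
for x_t in rng.normal(size=(6, input_dim)):   # six random stand-in word vectors
    h, c = lstm_step(x_t, h, c, W, b)
print(h.shape, c.shape)            # (3,) (3,)
```

A forget-gate value near 0 erases the corresponding memory entry and a value near 1 keeps it, which is the selective memory described above.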

Word Embeddings

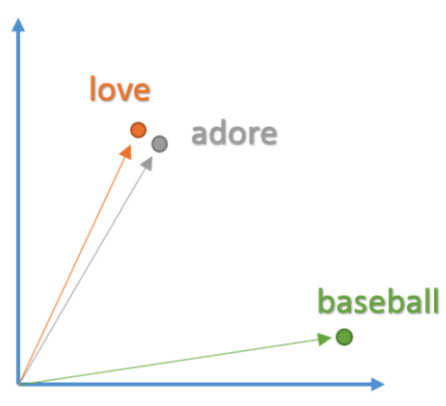

Features have always been a difficult part of machine learning. For the model to perform well, the feature representation of words has to be done well, and that representation is the word embedding (word vector).

The figure below illustrates what word embeddings mean in feature space: similar words end up close together in the vector space, which is exactly the desired outcome. So once you have text data, the first step is to build a word embedding model on the corpus to obtain a vector for each word.
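
Closeness in the vector space is commonly measured with cosine similarity. The sketch below uses three hand-made 3-dimensional vectors, which are invented for illustration and not taken from any trained model, to show the computation.

```python
import numpy as np

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

# Made-up toy vectors; real embeddings typically have 50 to 300 dimensions
vectors = {
    "good":  np.array([0.9, 0.8, 0.1]),
    "great": np.array([0.8, 0.9, 0.2]),
    "car":   np.array([0.1, 0.2, 0.9]),
}

sim_related = cosine_similarity(vectors["good"], vectors["great"])
sim_unrelated = cosine_similarity(vectors["good"], vectors["car"])
print(f"good vs great: {sim_related:.3f}")
print(f"good vs car:   {sim_unrelated:.3f}")
```

With these toy values the first similarity is much higher than the second, which is the behavior a well-trained embedding should show for related and unrelated words.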

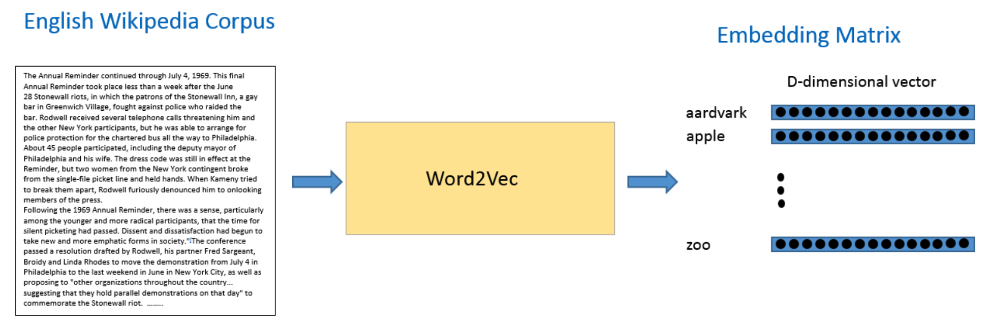

The basic principle behind word embeddings is captured by the Word2Vec model, which is used frequently in natural language processing. Its purpose is to obtain a vector representation for every word.

Word2Vec is trained on each sentence in the dataset: a fixed-size window slides over the sentence, and the model uses the surrounding context to predict the word in the middle of the window (this is the CBOW variant of Word2Vec). The model is trained with a loss function and an optimization method. At every update the input word vectors change as well, so training updates both the network's weight parameters and the input data.
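
The sliding window can be illustrated without any library. The helper below, `context_center_pairs`, is a hypothetical name introduced for this sketch; it only produces the (context, center word) training pairs and does not train anything.

```python
def context_center_pairs(tokens, window=2):
    """Return (context_words, center_word) pairs for one tokenized sentence."""
    pairs = []
    for idx, center in enumerate(tokens):
        left = tokens[max(0, idx - window):idx]      # up to `window` words before
        right = tokens[idx + 1:idx + 1 + window]     # up to `window` words after
        pairs.append((left + right, center))
    return pairs

sentence = ["the", "movie", "was", "really", "good"]
pairs = context_center_pairs(sentence, window=1)
for context, center in pairs:
    print(context, "->", center)
```

Each pair is one training example: the model sees the context words and is asked to predict the center word.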

The Gensim toolkit provides very good documentation and tutorials on using and building word embeddings. If you want to create your own word vectors, you can follow its usage guide: tokenize the data first, then pass the tokenized corpus to the Word2Vec function. The process is quite simple.

The example below loads pretrained GloVe vectors through Gensim's downloader instead of training a model from scratch, then inspects them:

    import gensim.downloader as api

    # Load the smaller pretrained GloVe model (100-dimensional word vectors).
    # The first call downloads the model, so it needs network access.
    model = api.load("glove-wiki-gigaword-100")

    # Retrieve the vector for a single word
    word = "bad"
    vector = model[word]
    print("Vector shape for", repr(word), ":", vector.shape)

    # Size of the model's vocabulary
    vocab_size = len(model.key_to_index)
    print("Number of words in the vocabulary:", vocab_size)

    # Dimensionality of the word vectors
    print("Word vector dimension:", model.vector_size)

    # Words most similar to "bad"
    print("Words most similar to 'bad':")
    print(model.most_similar("bad"))

    # Words most similar to "boy"
    print("\nWords most similar to 'boy':")
    print(model.most_similar("boy"))

Movie Review Sentiment Analysis

The task now is binary classification on a movie review dataset: we build an LSTM network to identify which reviews express positive sentiment and which express negative, critical sentiment.

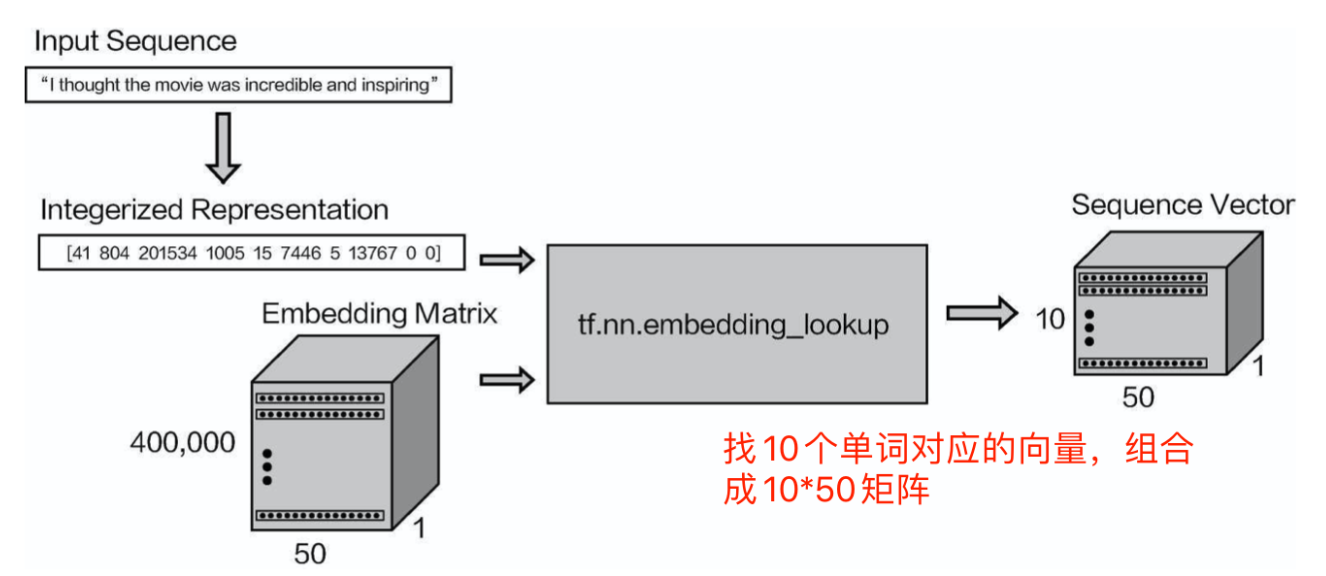

What we start with is a piece of text. We need to look up the vector for each of its words and then combine those vectors. Let's first look at the overall process, as shown in the figure.

As the figure shows, we start with a sentence, map each word to its index in the vocabulary, and then use the word vector table to convert the indices into vectors. For example, with 10 input words and 50-dimensional vectors the result has shape [10, 50]: each word has been converted into its corresponding vector.
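
The lookup from words to indices to vectors can be sketched with NumPy. The tiny vocabulary and the random embedding table below are stand-ins invented for this example; in practice the table would hold pretrained vectors.

```python
import numpy as np

vocab = ["<pad>", "the", "movie", "was", "good", "bad"]   # made-up vocabulary
word_to_index = {word: idx for idx, word in enumerate(vocab)}

embedding_dim = 50
rng = np.random.default_rng(0)
# Random stand-in for a word vector table: one row per vocabulary entry
embedding_table = rng.normal(size=(len(vocab), embedding_dim))

sentence = ["the", "movie", "was", "good"]
indices = np.array([word_to_index[w] for w in sentence])   # words -> indices
sentence_vectors = embedding_table[indices]                 # indices -> vectors

print(indices)                  # [1 2 3 4]
print(sentence_vectors.shape)   # (4, 50)
```

With a 10-word sentence the same lookup would yield the [10, 50] matrix mentioned above.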

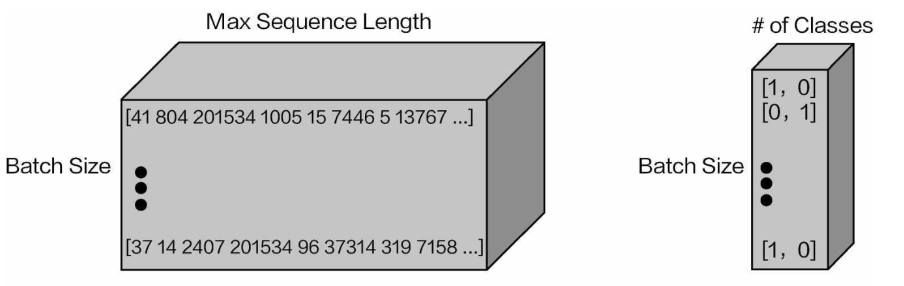

The text data now needs preprocessing. The basic idea is to choose a suitable value that limits the length of each text, for example 250 (the right value depends on the task). If a review has more than 250 words, it is truncated after the 250th word and the rest is discarded. If it has fewer than 250 words, the missing positions are padded with zeros.
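
A minimal sketch of this truncate-or-pad step is shown below. The function name `pad_or_truncate` is hypothetical, and padding at the end of the sequence is an assumption, since the text does not say on which side the zeros go.

```python
import numpy as np

def pad_or_truncate(indices, max_len=250, pad_value=0):
    """Return a fixed-length index array: cut long inputs, zero-pad short ones."""
    fixed = np.full(max_len, pad_value, dtype=np.int64)
    kept = indices[:max_len]          # drop everything after position max_len
    fixed[:len(kept)] = kept          # remaining positions keep the pad value
    return fixed

short_review = list(range(1, 6))      # 5 word indices
long_review = list(range(1, 301))     # 300 word indices

padded = pad_or_truncate(short_review)
truncated = pad_or_truncate(long_review)
print(padded[:8])       # [1 2 3 4 5 0 0 0]
print(truncated[-1])    # 250
```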

The figure shows the data after preprocessing. When the RNN model is built, the word indices still have to be converted into their corresponding vectors. The format of the input deserves emphasis: data passed into the RNN must be three-dimensional, that is, [batch size, text length, word vector dimension]. For example, if each training iteration uses 10 samples, each sample is 250 words long, and each word is a 50-dimensional vector, the input has shape [10, 250, 50].
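
The three-dimensional shape falls out directly when a whole batch of padded index sequences is looked up at once. In this sketch the vocabulary size, the random table, and the random indices are all placeholders.

```python
import numpy as np

batch_size, max_seq_length, embedding_dim = 10, 250, 50
vocab_size = 1000    # made-up vocabulary size for the example

rng = np.random.default_rng(0)
embedding_table = rng.normal(size=(vocab_size, embedding_dim))   # random stand-in
# Each row is one review, already padded or truncated to max_seq_length indices
batch_indices = rng.integers(0, vocab_size, size=(batch_size, max_seq_length))

# Indexing with a 2-D integer array returns one vector per index
batch_vectors = embedding_table[batch_indices]

print(batch_indices.shape)   # (10, 250)
print(batch_vectors.shape)   # (10, 250, 50)
```

In a Keras model this lookup is what the embedding layer performs, so the model itself receives the two-dimensional index batch.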

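    import os

    import tensorflow as tf

    # Hyperparameters
    batchSize = 24
    lstmUnits = 64
    numClasses = 2
    iterations = 50000
    maxSeqLength = 250   # maximum sequence length of each sample
    numDimensions = 50   # word vector dimension

    # Build the model
    model = tf.keras.Sequential([
        # Each sample is a sequence of maxSeqLength word indices
        tf.keras.Input(shape=(maxSeqLength,)),
        # Embedding layer: maps each input word index to its word vector.
        # With trainable=False the layer keeps its initial weights, so pretrained
        # vectors must be loaded into it for the embeddings to be meaningful.
        tf.keras.layers.Embedding(input_dim=400000,
                                  output_dim=numDimensions,
                                  trainable=False),
        # LSTM layer: only the output of the last time step is returned
        tf.keras.layers.LSTM(units=lstmUnits, return_sequences=False),
        # Fully connected layer with numClasses outputs (binary classification)
        tf.keras.layers.Dense(units=numClasses, activation='softmax')
    ])

    # Compile the model; categorical_crossentropy expects one-hot labels
    model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])

    # Print the model summary
    model.summary()

    # Train the model (getTrainBatch must be defined elsewhere)
    os.makedirs("./models", exist_ok=True)
    for i in range(1, iterations + 1):
        # Fetch the next training batch and take one training step
        nextBatch, nextBatchLabels = getTrainBatch()
        model.train_on_batch(nextBatch, nextBatchLabels)

        # Print loss and accuracy every 100 iterations
        if i % 100 == 0:
            loss, acc = model.evaluate(nextBatch, nextBatchLabels, verbose=0)
            print(f"Iteration {i} / {iterations}, loss: {loss}, accuracy: {acc}")

        # Save the model every 10,000 iterations
        if i % 10000 == 0:
            model.save(f"./models/pretrained_lstm_{i}.h5")
            print(f"Model saved to ./models/pretrained_lstm_{i}.h5")

    # Test the model (getTestBatch must be defined elsewhere)
    numTestBatches = 10   # number of test batches
    for i in range(numTestBatches):
        nextBatch, nextBatchLabels = getTestBatch()
        # Accuracy on the current batch
        loss, accuracy = model.evaluate(nextBatch, nextBatchLabels, verbose=0)
        print(f"Batch {i+1} accuracy: {accuracy * 100:.2f}%")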
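
The loops call `getTrainBatch` and `getTestBatch`, which are not defined in this post. The sketch below is one hypothetical way to write them, assuming the reviews are already stored as padded index arrays and that labels are one-hot encoded to match `categorical_crossentropy`. The random data, the 80/20 split, and the helper `sample_batch` are placeholders, not the original implementation, and the functions would need to be defined before the loops run.

```python
import numpy as np

rng = np.random.default_rng(0)
num_reviews, max_seq_length, batch_size = 1000, 250, 24

# Placeholder data: random word indices and random 0/1 sentiment labels
all_ids = rng.integers(0, 400000, size=(num_reviews, max_seq_length))
all_labels = rng.integers(0, 2, size=num_reviews)   # 0 = negative, 1 = positive

split = int(0.8 * num_reviews)   # assumed 80/20 train/test split

def sample_batch(ids, labels, size=batch_size):
    """Draw a random batch and one-hot encode its labels."""
    chosen = rng.integers(0, len(ids), size=size)
    one_hot = np.eye(2, dtype=np.float32)[labels[chosen]]
    return ids[chosen], one_hot

def getTrainBatch():
    return sample_batch(all_ids[:split], all_labels[:split])

def getTestBatch():
    return sample_batch(all_ids[split:], all_labels[split:])

x, y = getTrainBatch()
print(x.shape, y.shape)   # (24, 250) (24, 2)
```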