
Fine-Tuning Large Language Models (LLMs) for Classification Tasks

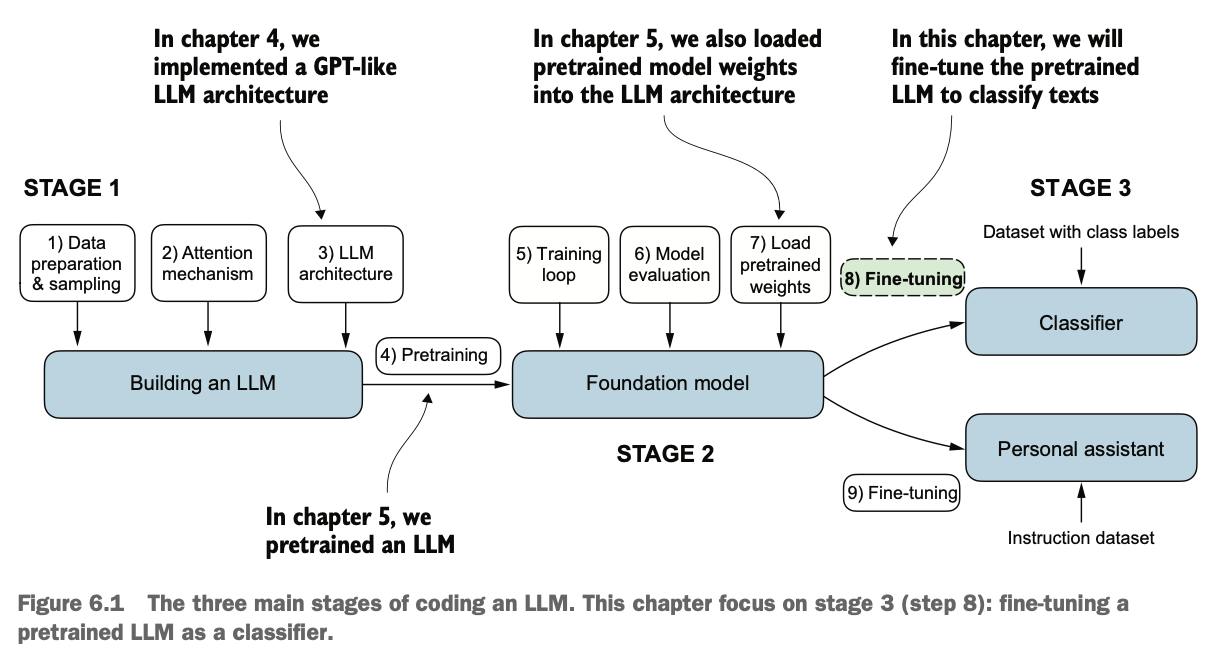

As one application of LLMs, we can further fine-tune a pretrained model so that it performs a specialized task such as spam classification.

6.0 Overview

6. Fine-tuning for classification.ipynb

Introducing different fine-tuning methods for large language models (LLMs)

Preparing a dataset for text classification

Modifying a pretrained LLM for fine-tuning

Fine-tuning an LLM to identify spam

Evaluating the accuracy of the fine-tuned classifier

Using the fine-tuned LLM to classify new data

So far, we have learned how to pretrain an LLM and have loaded open-source weights into the model. Next, we can start building applications on top of it. We begin with one such application: deciding whether a message is spam.

6.1 Types of Fine-Tuning

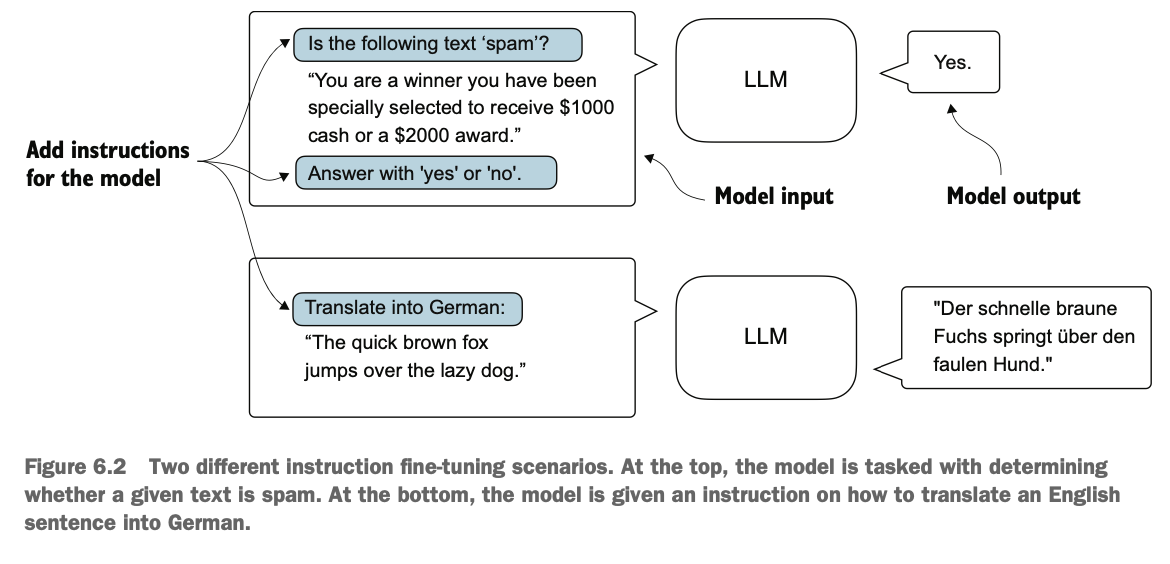

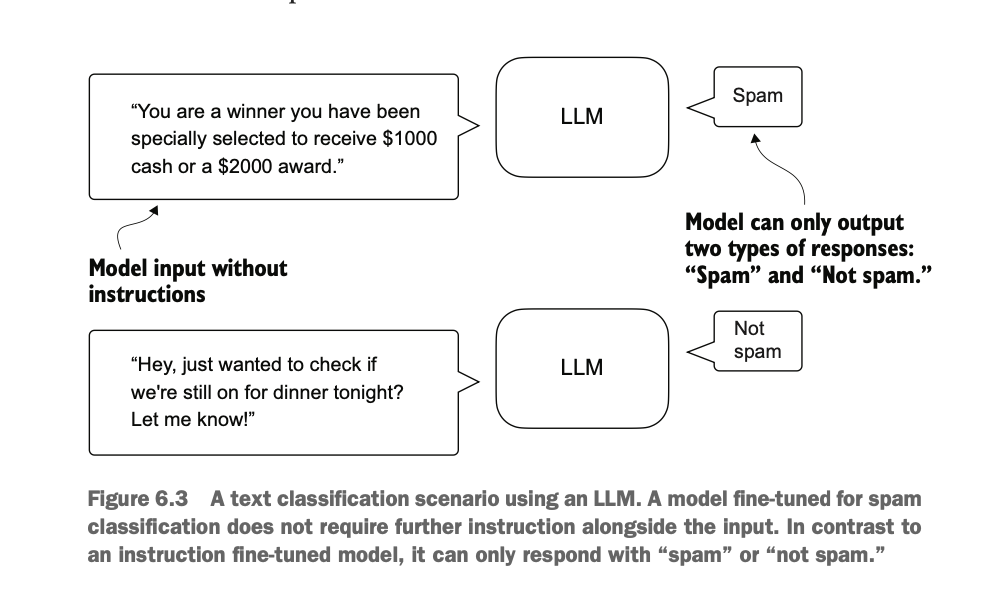

Fine-tuning comes in two main types: instruction fine-tuning and classification fine-tuning.

Instruction fine-tuning trains a language model on a set of tasks described by explicit instructions, improving its ability to understand and carry out tasks given as natural-language prompts. The model accepts an instruction and completes the corresponding task, so it covers a wide range of capabilities.

Classification fine-tuning focuses on one specific classification task. It trains the language model to assign its input to one of a set of predefined categories. The model needs no instruction and only outputs a class label, which makes it more specialized.

Pros and Cons:

Instruction fine-tuning: suitable for models that need to handle many tasks flexibly. It is broadly applicable and gives high-quality interaction, but it requires larger datasets and more compute.

Classification fine-tuning: suitable for a specific classification task (e.g., sentiment analysis, spam detection). It needs less data and compute, but the model can only predict the categories it was trained on.

6.2 Preparing the Dataset

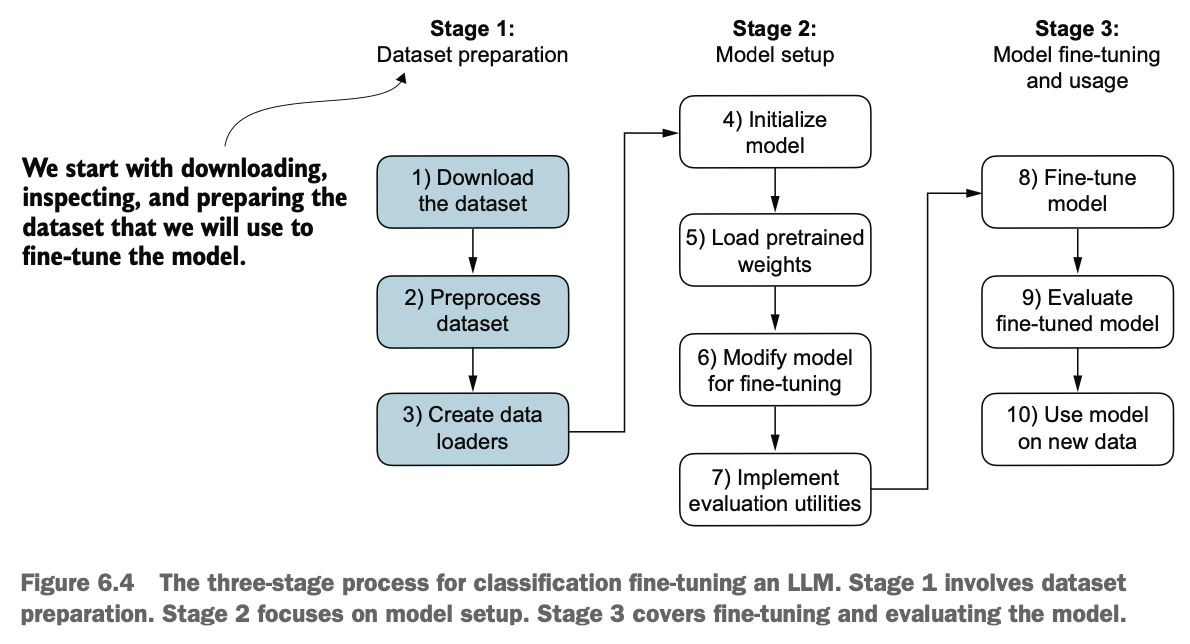

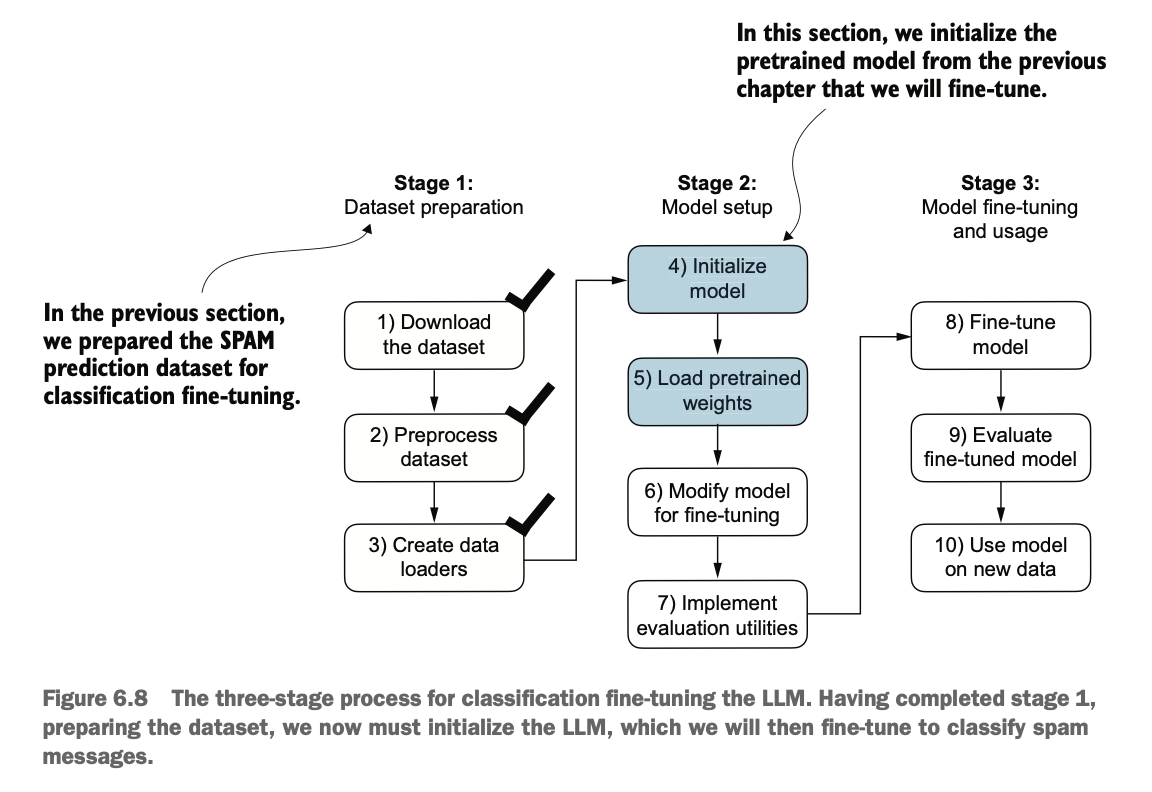

We follow this process to implement classification fine-tuning. The first step is to download the dataset.

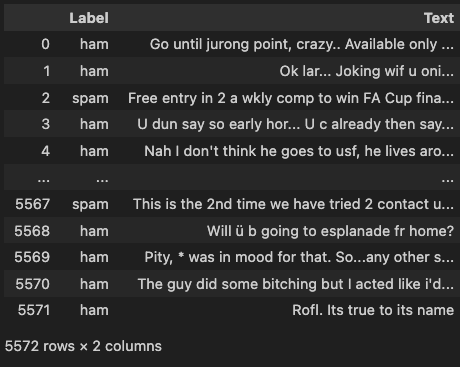

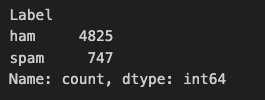

We download an open-source dataset and use pandas to inspect its contents and class distribution.

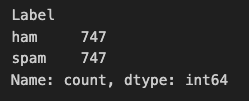

Because the dataset contains many more normal ("ham") messages than spam messages, we downsample the ham class so that both classes have the same number of examples.

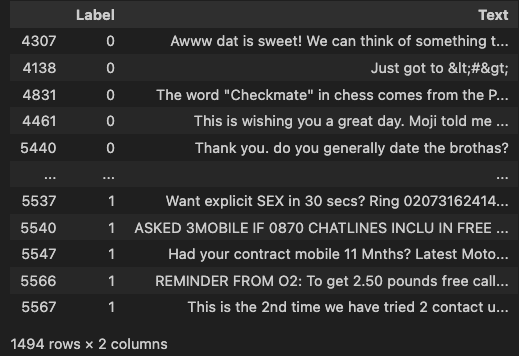

Next, we map the class labels to integers, converting "ham" to 0 and "spam" to 1. This is somewhat similar to converting text into token IDs.
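The downsampling and label-mapping steps can be sketched as follows. This is a minimal illustration, not the notebook's code: the column names `Label` and `Text`, the helper name `create_balanced_dataset`, and the six sample messages are all assumptions made for the example.

```python
import pandas as pd

def create_balanced_dataset(df: pd.DataFrame, seed: int = 123) -> pd.DataFrame:
    """Downsample the majority 'ham' class to the size of the 'spam' class."""
    num_spam = df[df["Label"] == "spam"].shape[0]
    # Randomly keep only as many ham rows as there are spam rows
    ham_subset = df[df["Label"] == "ham"].sample(n=num_spam, random_state=seed)
    spam_subset = df[df["Label"] == "spam"]
    return pd.concat([ham_subset, spam_subset]).reset_index(drop=True)

# Tiny made-up sample standing in for the real dataset (assumption)
df = pd.DataFrame({
    "Label": ["ham", "ham", "ham", "ham", "spam", "spam"],
    "Text": [
        "see you at 5", "ok thanks", "call me later", "lunch?",
        "WIN a prize now", "free entry, reply YES",
    ],
})

balanced_df = create_balanced_dataset(df)
# Map the string labels to integer class IDs: ham -> 0, spam -> 1
balanced_df["Label"] = balanced_df["Label"].map({"ham": 0, "spam": 1})
print(balanced_df["Label"].value_counts())
```

Downsampling discards data, so it is a simple choice rather than the only one; it works here because the goal is a small, balanced dataset.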

After that, we split the dataset into a 70% training set, a 10% validation set, and a 20% test set.

The training set is used to train the model: the model learns features and patterns from it, and its internal weights are updated accordingly.

The validation set is used to monitor overfitting. If validation performance stops improving, training can be ended early (early stopping). Its examples are not used for weight updates, but because decisions such as when to stop or which hyperparameters to use are based on it, it still influences the optimization process indirectly.

The test set is used for the final evaluation and plays no part in the optimization process.
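A 70/10/20 split like the one described above might look like this. It is a sketch under assumptions: the function name `random_split` and the 20-row toy frame are illustrative, and the test fraction is simply whatever remains after the first two slices.

```python
import pandas as pd

def random_split(df: pd.DataFrame, train_frac: float, validation_frac: float, seed: int = 123):
    """Shuffle the rows, then cut them into train / validation / test parts."""
    df = df.sample(frac=1, random_state=seed).reset_index(drop=True)
    train_end = int(len(df) * train_frac)
    validation_end = train_end + int(len(df) * validation_frac)
    train_df = df[:train_end]
    validation_df = df[train_end:validation_end]
    test_df = df[validation_end:]  # the remainder becomes the test set
    return train_df, validation_df, test_df

# Made-up data standing in for the balanced dataset (assumption)
toy_df = pd.DataFrame({
    "Text": [f"message {i}" for i in range(20)],
    "Label": [i % 2 for i in range(20)],
})

train_df, validation_df, test_df = random_split(toy_df, 0.7, 0.1)
print(len(train_df), len(validation_df), len(test_df))
```

Shuffling before slicing matters here because the balanced dataset is typically built by concatenating the two classes, so an unshuffled split would put mostly one class into the training set.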

6.3 Constructing a Data Loader

As before, we load the data in batches. Because the messages have different lengths, there are two ways to bring them to a common length:

Truncate every message to the length of the shortest message. This is computationally cheaper, but longer messages lose information when they are cut, which can hurt performance.

Pad every message to the length of the longest message. This is more expensive, but it preserves all of the information, so we choose this option.

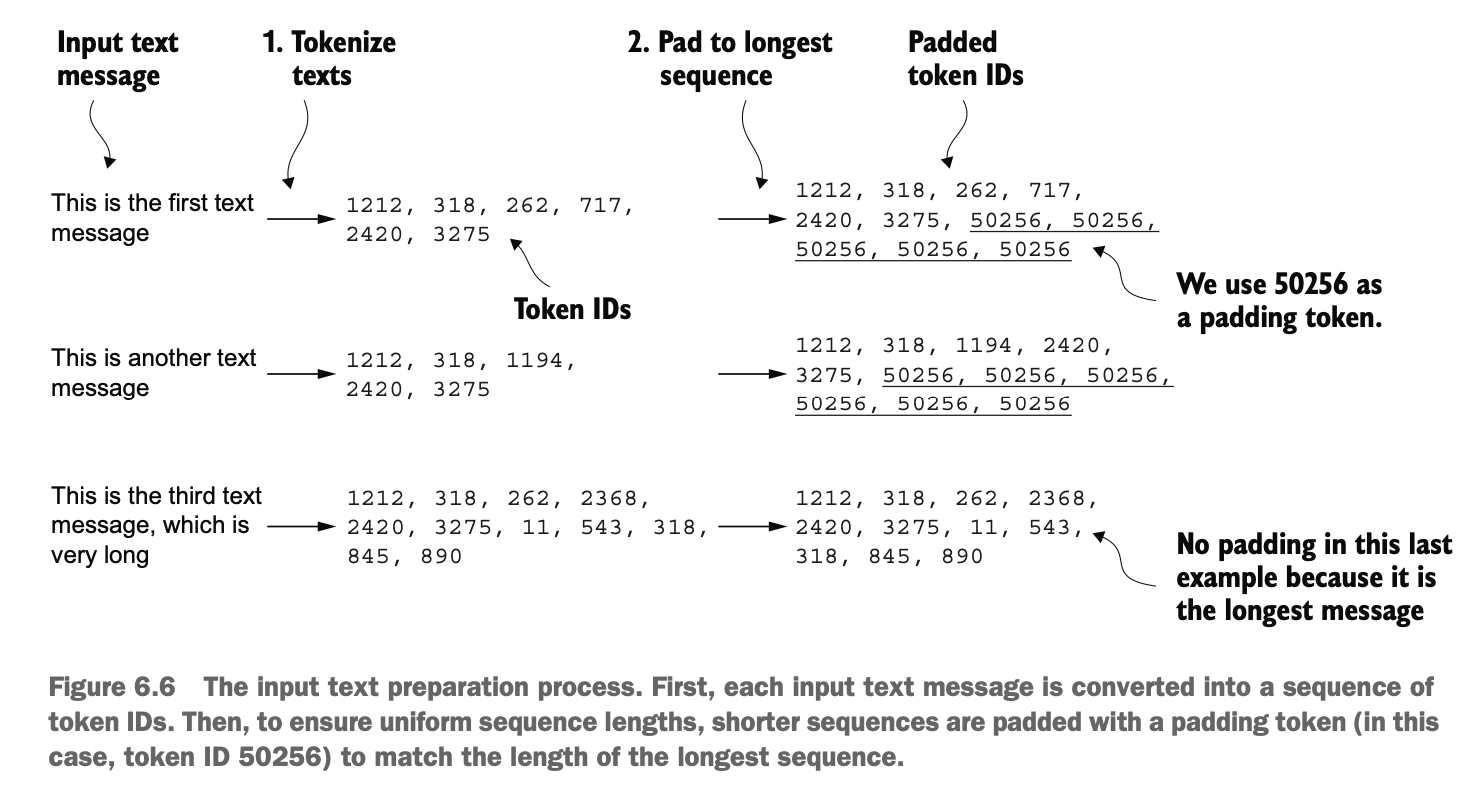

As shown, we use the longest message as the target length and pad every shorter message with <|endoftext|> tokens at the end.

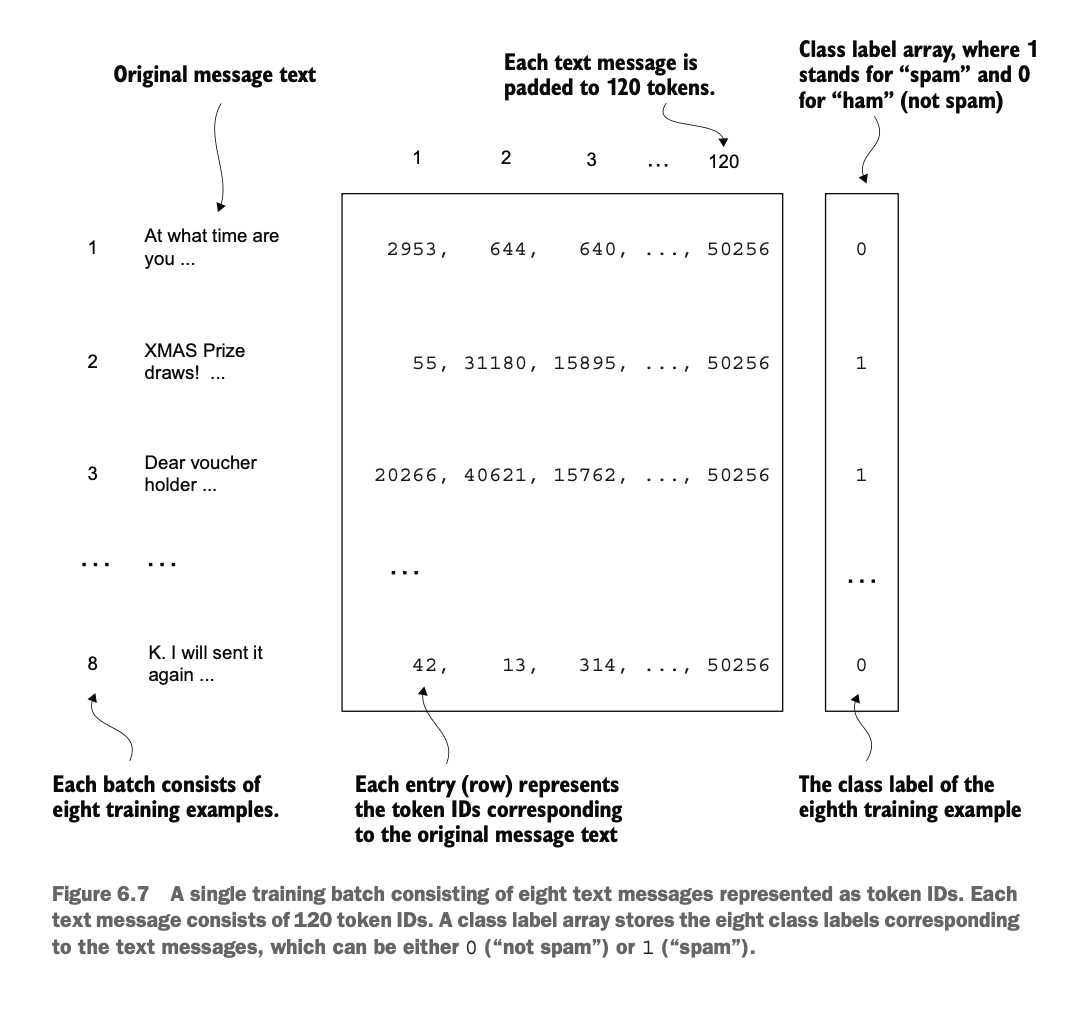

We therefore build a dataset class for the CSV data. It reads the text, uses the length of the longest encoded message as the target length, and provides the usual indexing methods.
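The padding step at the core of such a dataset class can be sketched in plain Python. The helper name `pad_to_longest` and the token IDs in the example are made up; the only fixed value is 50256, the ID of <|endoftext|> in the GPT-2 vocabulary.

```python
PAD_TOKEN_ID = 50256  # ID of <|endoftext|> in the GPT-2 vocabulary

def pad_to_longest(encoded_texts, pad_token_id=PAD_TOKEN_ID, max_length=None):
    """Pad (or, if max_length is given, truncate) token-ID lists to one common length."""
    if max_length is None:
        # Default: the longest sequence in the dataset sets the target length
        max_length = max(len(ids) for ids in encoded_texts)
    truncated = [ids[:max_length] for ids in encoded_texts]
    return [ids + [pad_token_id] * (max_length - len(ids)) for ids in truncated]

# Made-up token IDs standing in for tokenized messages (assumption)
encoded = [[101, 102, 103, 104], [201, 202], [301]]
padded = pad_to_longest(encoded)
for row in padded:
    print(row)
```

Passing an explicit `max_length` is useful for the validation and test sets, which should be padded or truncated to the length derived from the training set so that all inputs have the same shape.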

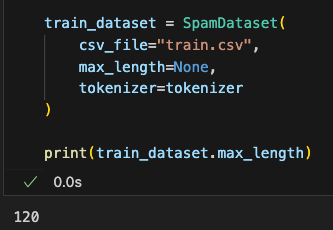

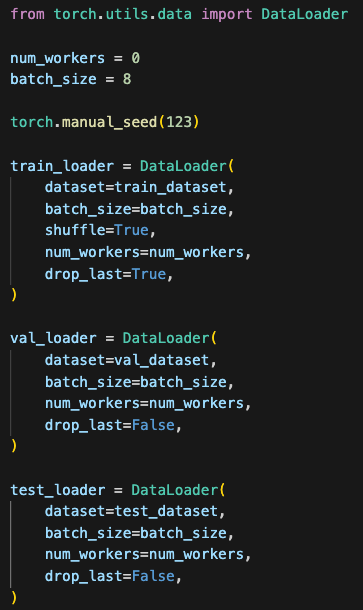

Next, we use this dataset class to initialize the DataLoader provided by PyTorch. Unlike the earlier GPT data loader, the targets here are class labels rather than the next tokens of the text.

6.4 Initializing the Model with Pretrained Weights

Before fine-tuning the model into a classifier, we first initialize the LLM with the pretrained weights from earlier.

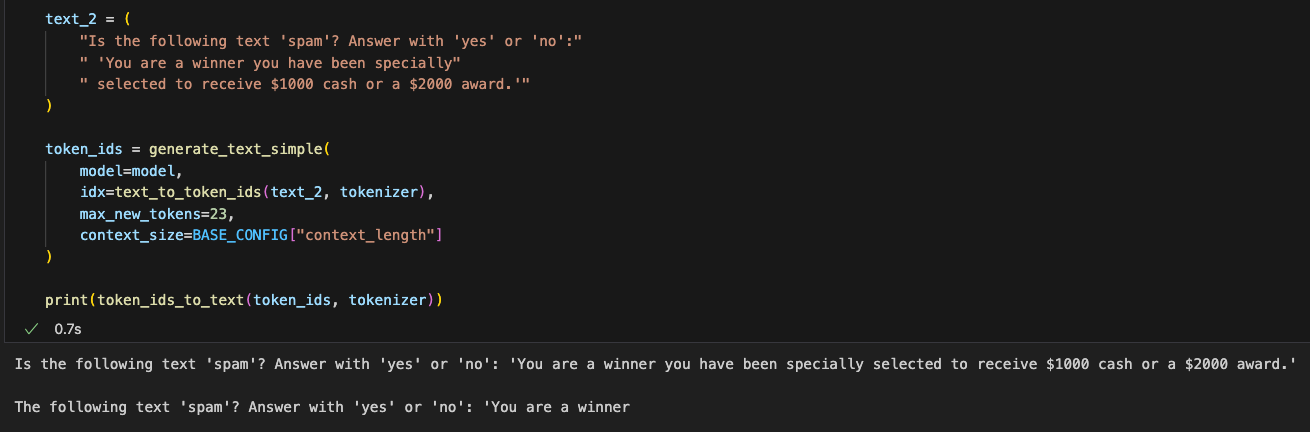

We first try the freshly initialized LLM directly, prompting it with a spam message to see how it responds.

The output merely repeats the instruction and is not meaningful. This is expected: at this stage the LLM has only been pretrained, and neither instruction fine-tuning nor classification fine-tuning has been applied yet.

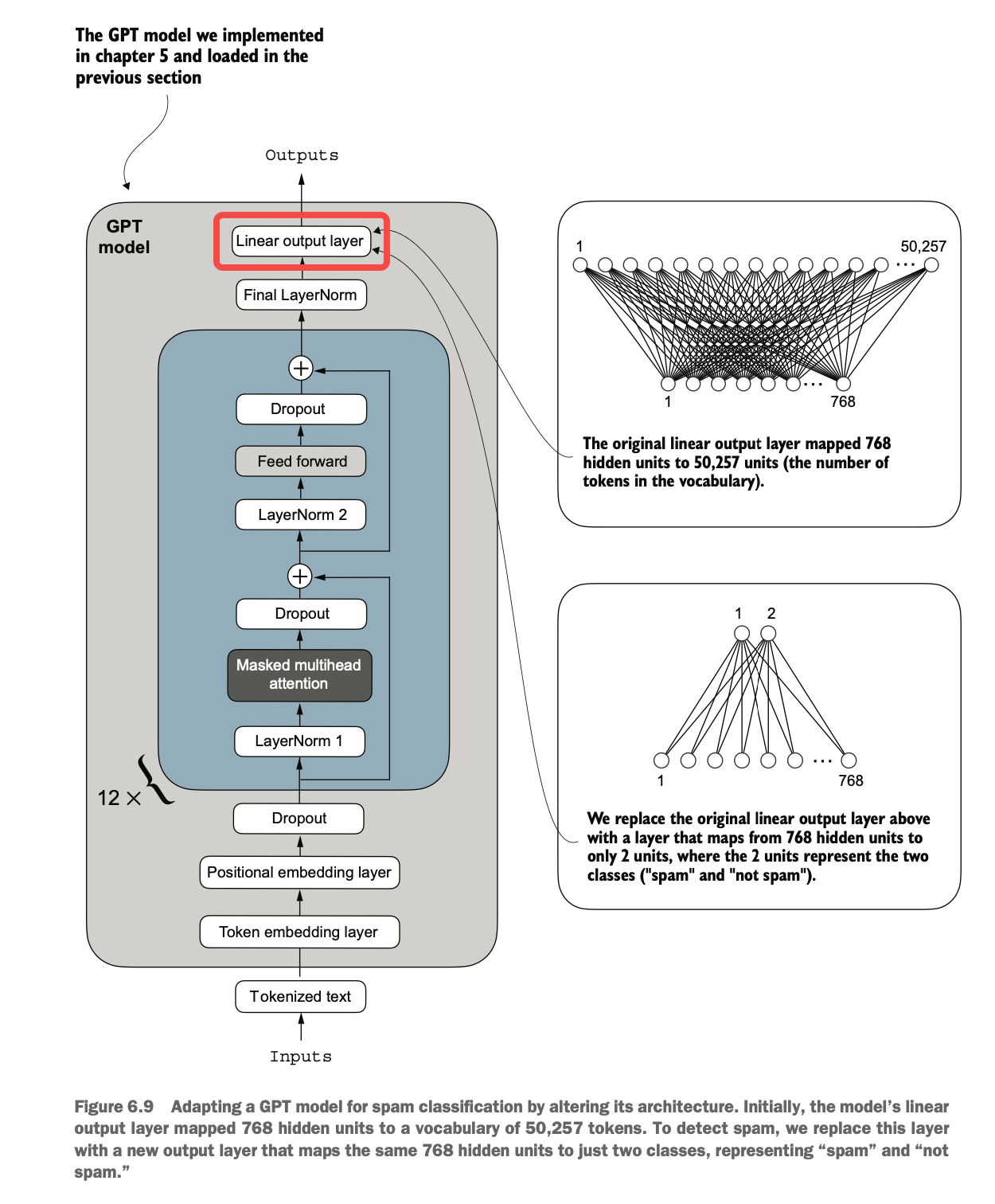

6.5 Modifying the Classification Head of the Output Layer

For classification fine-tuning, we need to modify the output layer. Instead of predicting the next token from a vocabulary of 50,257 tokens, the model should predict 0 (not spam) or 1 (spam).

When fine-tuning a pretrained model, it is not necessary to update all layers. The lower layers capture general language structure that is useful across tasks, so fine-tuning only the last few layers near the output is enough to adapt the model to the new task, and it is also more efficient.

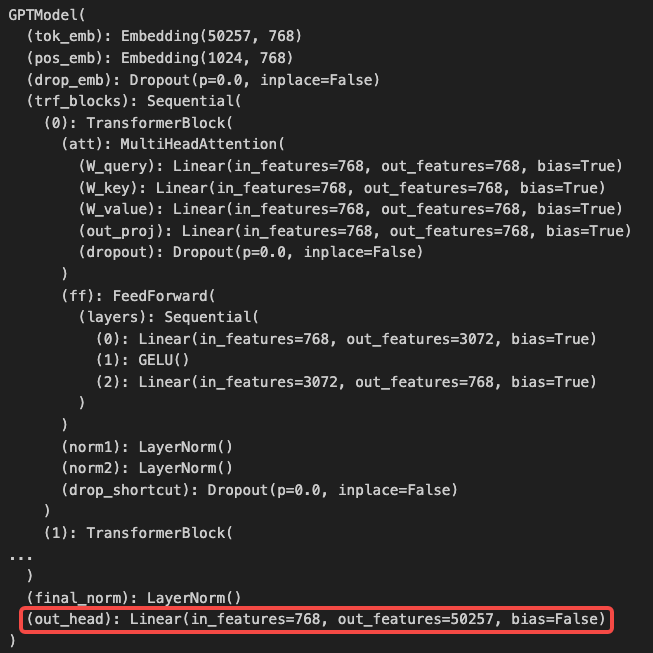

Let's first look at the model before modification by calling print(model) to display its structure.

To prepare for fine-tuning, we first freeze all layers of the model. The layers that need training are unfrozen again afterwards.

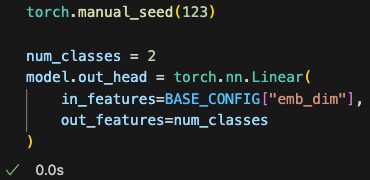

Next, we replace the output layer with a new layer that maps to 2-dimensional logits.

By default, only this newly added output layer is trained. However, additional experiments show that fine-tuning a few more layers (such as the last Transformer block and the final LayerNorm layer) can noticeably improve performance. In practice, you can start by fine-tuning a small subset of layers and extend to more layers as performance requires.

We therefore unfreeze these layers by setting their requires_grad attribute to True, so that they are updated during training and the model's predictive ability improves.
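The freeze, replace, and unfreeze sequence can be sketched on a miniature stand-in model. `TinyGPT` is a hypothetical placeholder, not the real GPT architecture (its "blocks" are plain linear layers), and the attribute names `trf_blocks`, `final_norm`, and `out_head` are assumptions chosen for the example.

```python
import torch
import torch.nn as nn

class TinyGPT(nn.Module):
    """Hypothetical miniature stand-in for the GPT model (not the real architecture)."""
    def __init__(self, vocab_size=50257, emb_dim=16, n_blocks=2):
        super().__init__()
        self.tok_emb = nn.Embedding(vocab_size, emb_dim)
        # Linear layers stand in for Transformer blocks to keep the sketch small
        self.trf_blocks = nn.Sequential(*[nn.Linear(emb_dim, emb_dim) for _ in range(n_blocks)])
        self.final_norm = nn.LayerNorm(emb_dim)
        self.out_head = nn.Linear(emb_dim, vocab_size, bias=False)

    def forward(self, idx):
        x = self.tok_emb(idx)
        x = self.trf_blocks(x)
        x = self.final_norm(x)
        return self.out_head(x)

model = TinyGPT()

# 1. Freeze every parameter
for param in model.parameters():
    param.requires_grad = False

# 2. Replace the vocabulary-sized head with a 2-class head.
#    A newly created layer has requires_grad=True by default.
num_classes = 2
model.out_head = nn.Linear(model.out_head.in_features, num_classes)

# 3. Unfreeze the last block and the final LayerNorm as well
for param in model.trf_blocks[-1].parameters():
    param.requires_grad = True
for param in model.final_norm.parameters():
    param.requires_grad = True

trainable = [name for name, p in model.named_parameters() if p.requires_grad]
print(trainable)
```

Listing the trainable parameter names, as in the last two lines, is a cheap way to confirm that only the intended layers will be updated.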

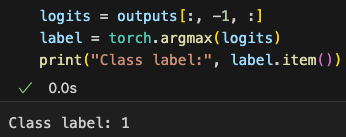

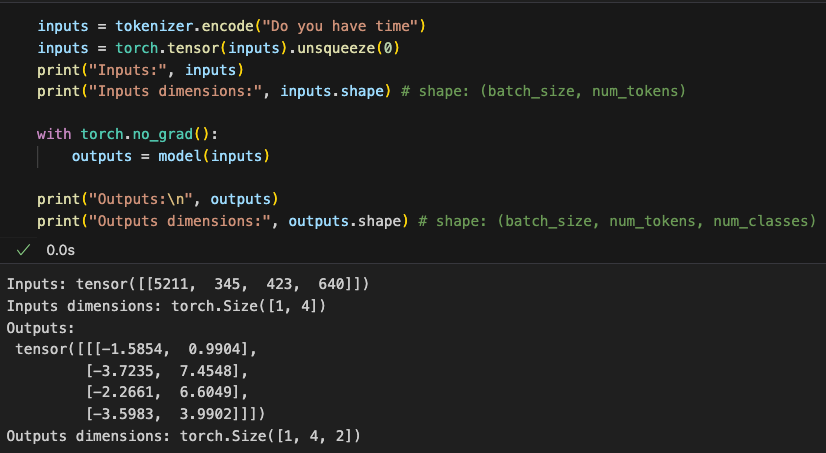

We then pass an example input through the modified model and print both the input and the output.

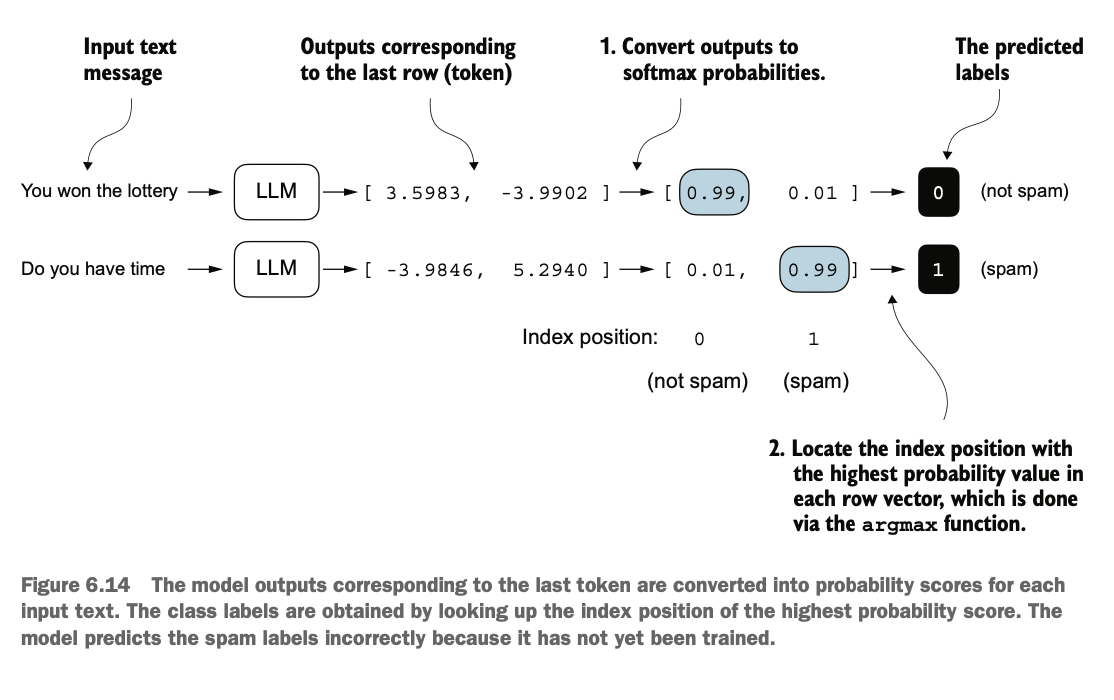

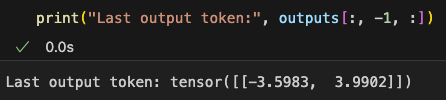

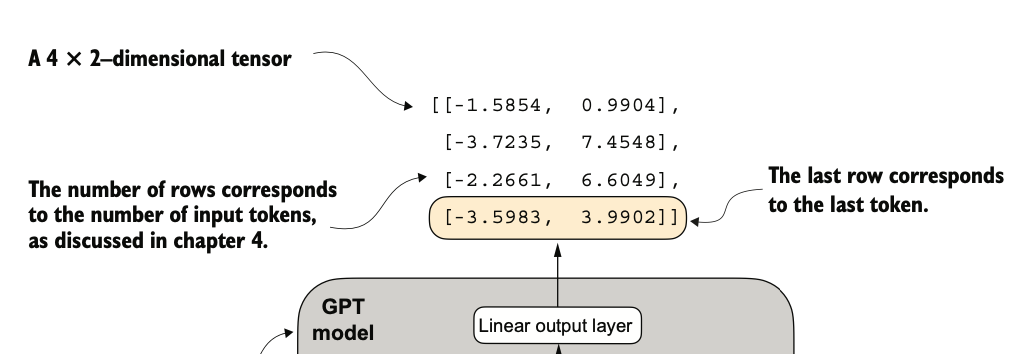

Because we reduced the output dimension from 50,257 to 2, the output contains one 2-dimensional logit vector for each of the 4 input tokens. What we ultimately need, however, is a single classification result, so we look only at the last of these vectors. With causal attention, the last token is the only one that can attend to all preceding tokens, so its output summarizes the whole input and can be used directly as the prediction.
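The following sketch illustrates both points: how the last token's logits are selected, and why the last position is the one that sees the full input. The random tensor stands in for real model outputs, and the lower-triangular matrix is a schematic causal mask rather than the model's actual attention code.

```python
import torch

torch.manual_seed(123)

# Stand-in for model outputs: (batch_size=1, num_tokens=4, num_classes=2)
outputs = torch.randn(1, 4, 2)

# Keep only the logits of the last token in each sequence
last_token_logits = outputs[:, -1, :]
print(last_token_logits.shape)

# Schematic causal mask: row i marks which positions token i may attend to
num_tokens = outputs.shape[1]
causal_mask = torch.tril(torch.ones(num_tokens, num_tokens))
print(causal_mask)
```

In the mask, the first row contains a single 1 (the first token sees only itself), while the last row is all 1s, which is why the last position is the natural place to read off the class prediction.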

6.6 Calculating Classification Loss and Accuracy

Before fine-tuning, one step remains: building the evaluation utilities. We can convert the output logits into probabilities with softmax and pick the class with the highest probability. In practice the softmax can be skipped, because taking the argmax of the logits directly gives the same class.

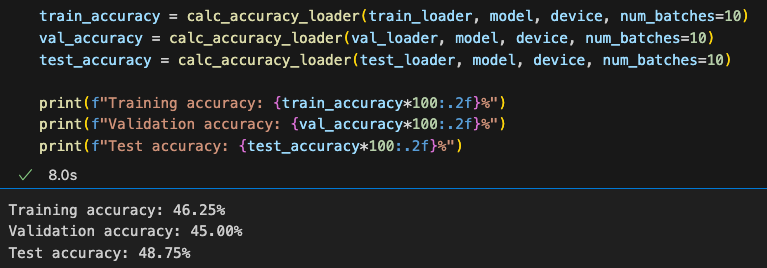

Based on this prediction method, we first write a calc_accuracy_loader function that draws batches from a data loader and computes the accuracy. Before fine-tuning, the accuracy is around 50%, which is what we expect from an untrained classification head on a balanced two-class dataset.

Next, we need a loss for each prediction. Accuracy itself cannot be used as the loss function: it is computed from discrete predictions and is therefore not differentiable, while gradient-based training requires derivatives. We use cross-entropy loss as a differentiable proxy for accuracy instead. There is one adjustment compared with pretraining: the loss is computed only from the last token's output, model(input_batch)[:, -1, :], rather than from the outputs of all tokens, model(input_batch).
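A small numerical sketch of these two metrics is shown below. The logits and targets are made-up values standing in for last-token outputs and labels of a batch of four messages; in the real pipeline they would come from model(input_batch)[:, -1, :] and the data loader.

```python
import torch
import torch.nn.functional as F

# Made-up last-token logits for 4 messages, 2 classes each (assumption)
logits = torch.tensor([[2.0, -1.0],
                       [0.5, 1.5],
                       [-0.3, 0.2],
                       [1.0, 0.0]])
targets = torch.tensor([0, 1, 0, 0])  # 0 = not spam, 1 = spam

# Softmax is monotonic, so argmax over probabilities equals argmax over logits
probs = torch.softmax(logits, dim=-1)
pred_from_probs = torch.argmax(probs, dim=-1)
pred_from_logits = torch.argmax(logits, dim=-1)

# Accuracy: fraction of correct predictions (discrete, not differentiable)
accuracy = (pred_from_logits == targets).float().mean().item()

# Cross-entropy: differentiable proxy that training actually minimizes
loss = F.cross_entropy(logits, targets)

print(pred_from_logits.tolist(), accuracy, loss.item())
```

Here the third message is misclassified, so three of four predictions are correct. The cross-entropy value additionally reflects how confident each prediction was, which is what makes it usable for gradient updates.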

6.7 Fine-Tuning with Labeled Data

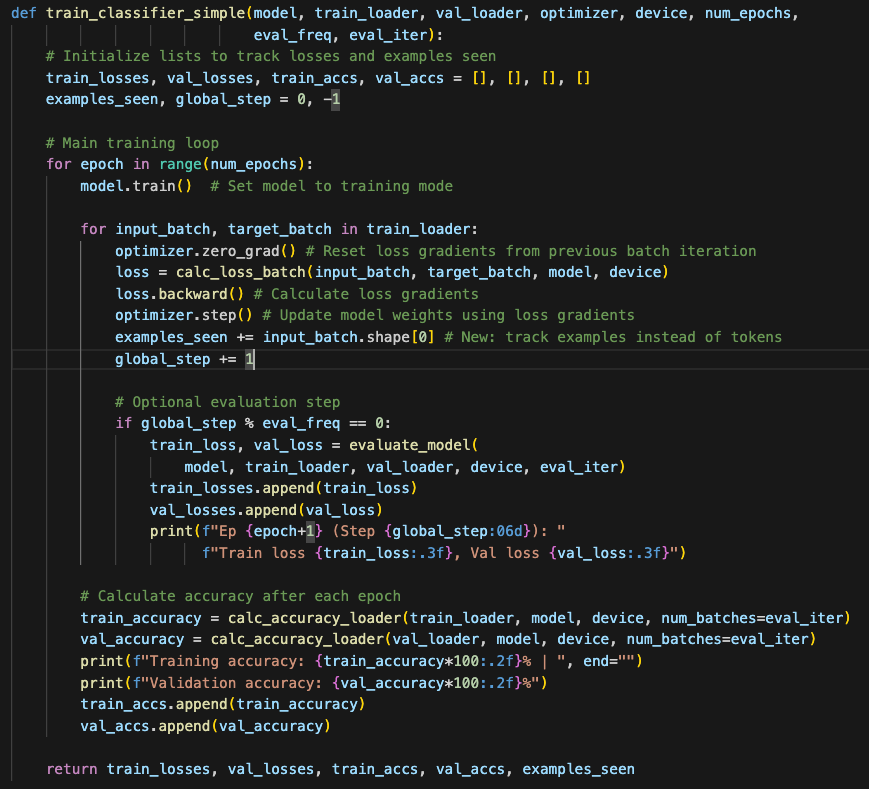

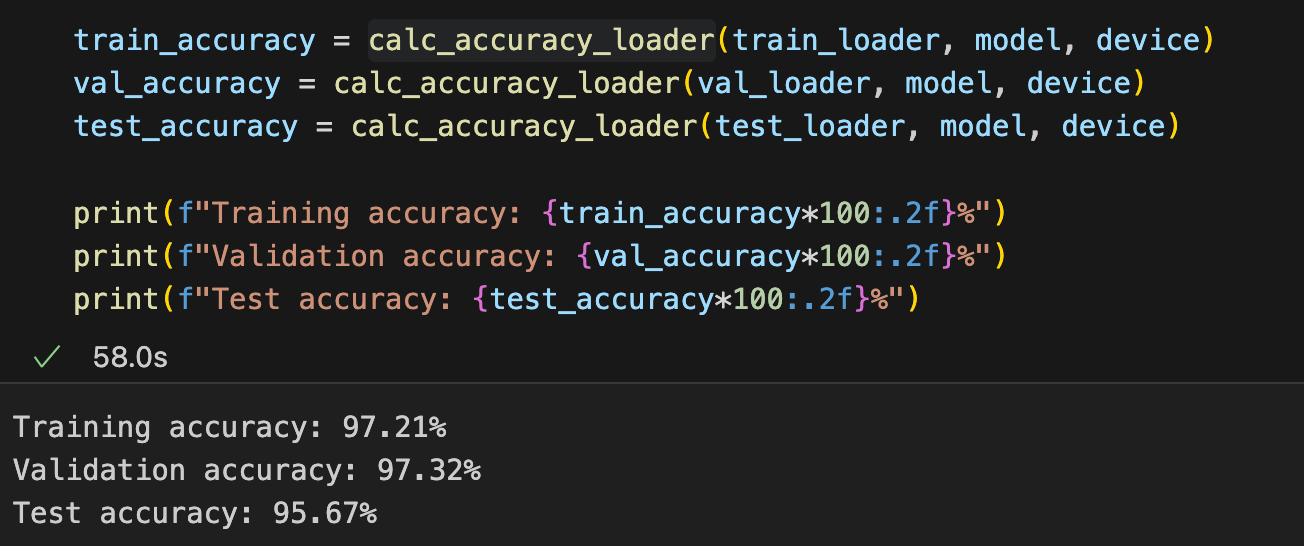

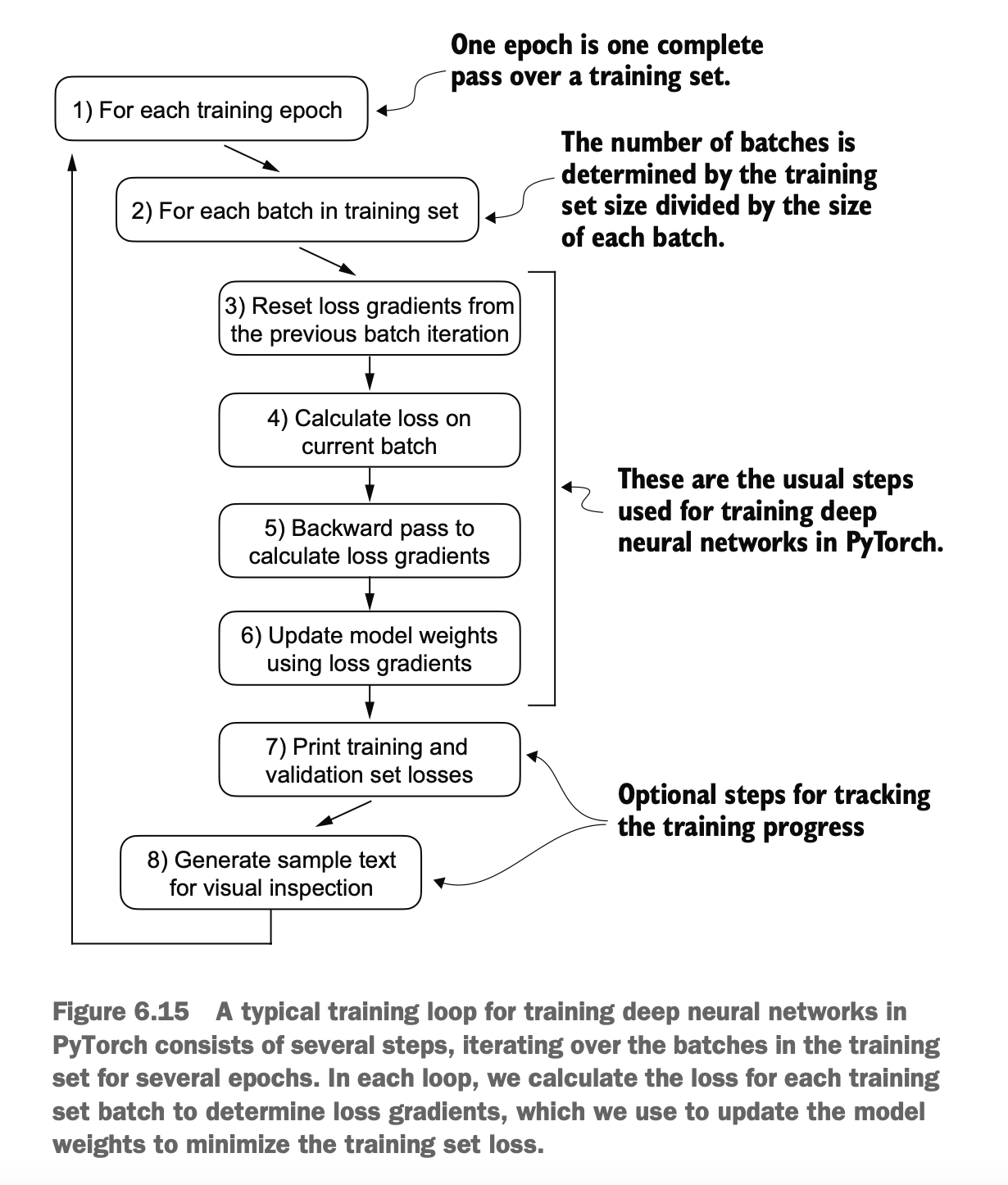

Next, we implement a training function that fine-tunes the model by minimizing the loss, which in turn raises the classification accuracy. The training process is the same as before, except that we evaluate the model by computing accuracy instead of generating sample text and checking its fluency.

The implementation of the training function is almost identical to the one used for pretraining the LLM.
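A stripped-down version of such a training loop is sketched below. Everything here is a placeholder: the toy model, the random data, the function name `train_classifier_simple`, and the optimizer settings are assumptions chosen so the example runs quickly, not the values used in the notebook.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset

def train_classifier_simple(model, train_loader, optimizer, num_epochs):
    """Minimal classification fine-tuning loop; returns the per-batch losses."""
    losses = []
    for _ in range(num_epochs):
        model.train()
        for input_batch, target_batch in train_loader:
            optimizer.zero_grad()                      # reset gradients from the previous step
            logits = model(input_batch)[:, -1, :]      # logits of the last token only
            loss = nn.functional.cross_entropy(logits, target_batch)
            loss.backward()                            # compute gradients
            optimizer.step()                           # update the trainable weights
            losses.append(loss.item())
    return losses

torch.manual_seed(123)

# Toy model mapping (batch, tokens) -> (batch, tokens, 2); stands in for the LLM
toy_model = nn.Sequential(nn.Embedding(100, 8), nn.Linear(8, 2))

# Random token IDs and labels standing in for the spam dataset
inputs = torch.randint(0, 100, (16, 5))
labels = torch.randint(0, 2, (16,))
train_loader = DataLoader(TensorDataset(inputs, labels), batch_size=4, shuffle=True)

optimizer = torch.optim.AdamW(toy_model.parameters(), lr=1e-3)
losses = train_classifier_simple(toy_model, train_loader, optimizer, num_epochs=2)
print(len(losses))
```

A fuller version would also evaluate training and validation loss and accuracy at regular intervals, which is what produces the curves discussed later in this section.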

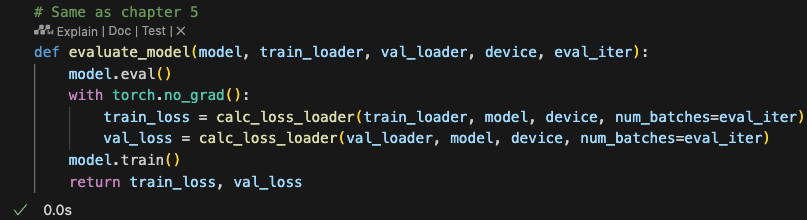

In addition, we wrap the loss computation in a helper function that returns the training-set and validation-set losses.

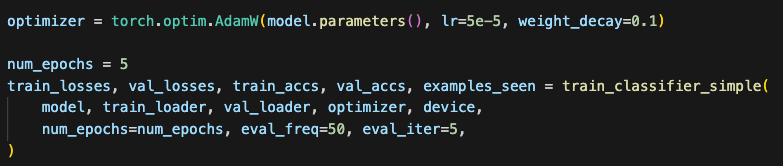

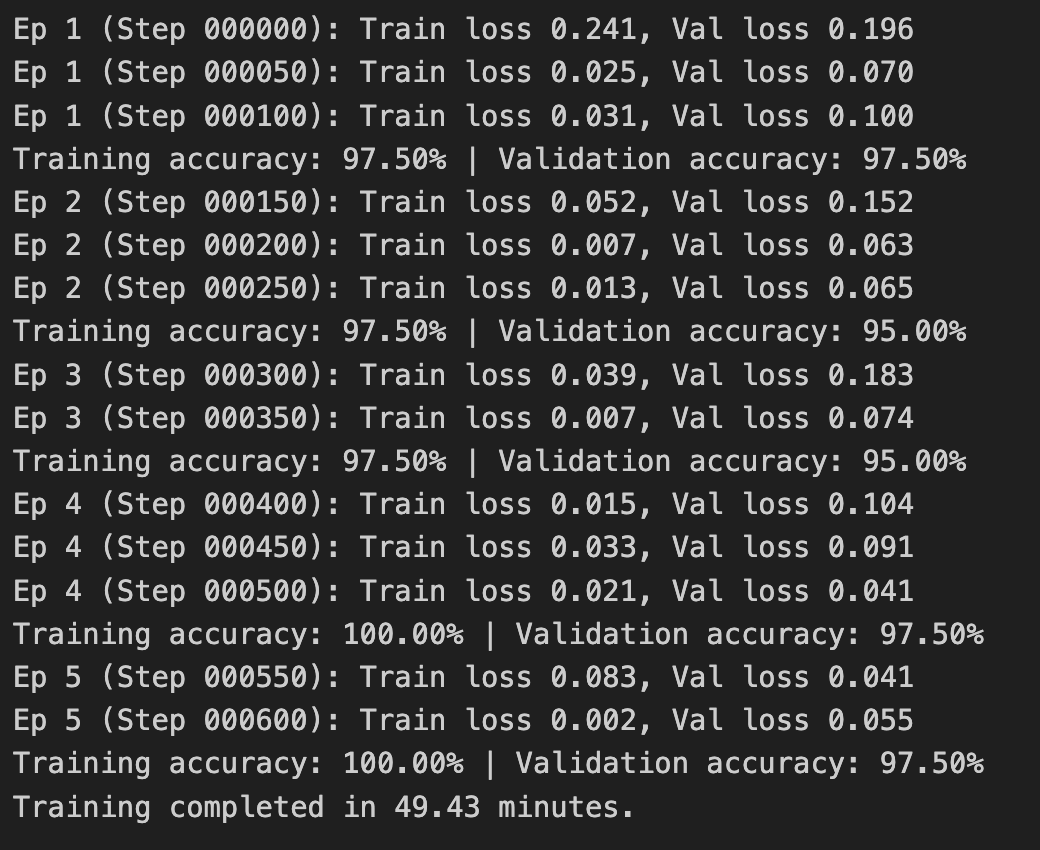

We start by training for 5 epochs to see how the model does.

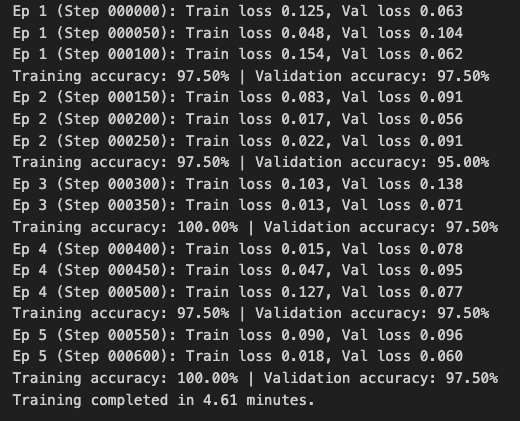

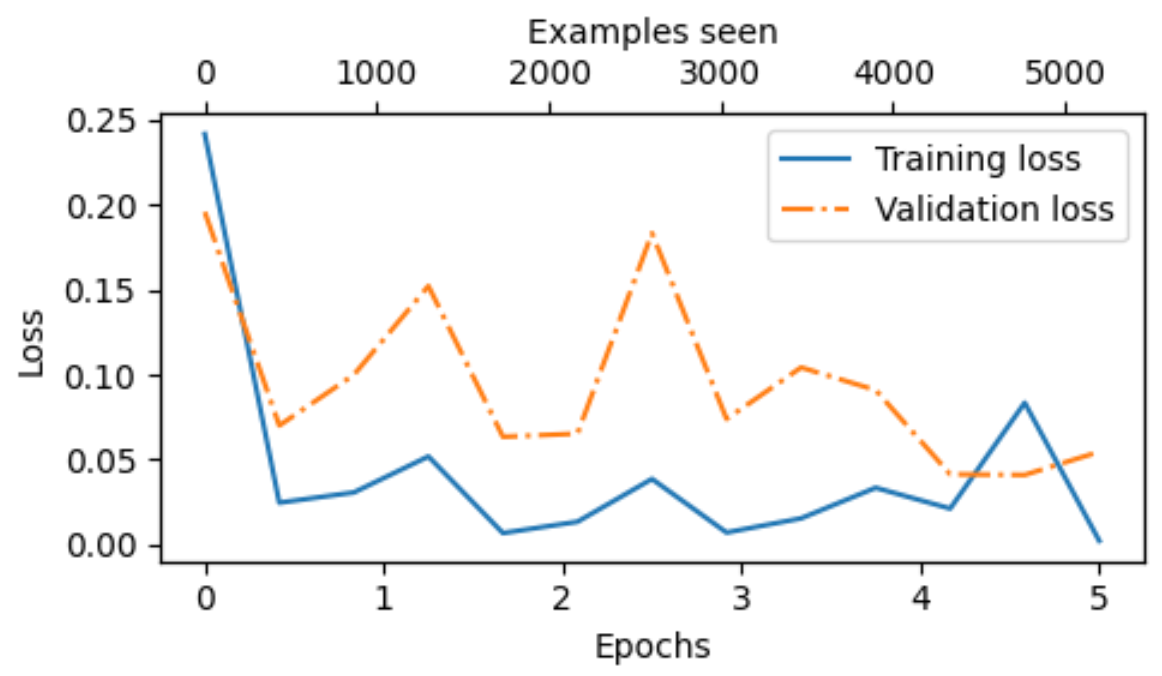

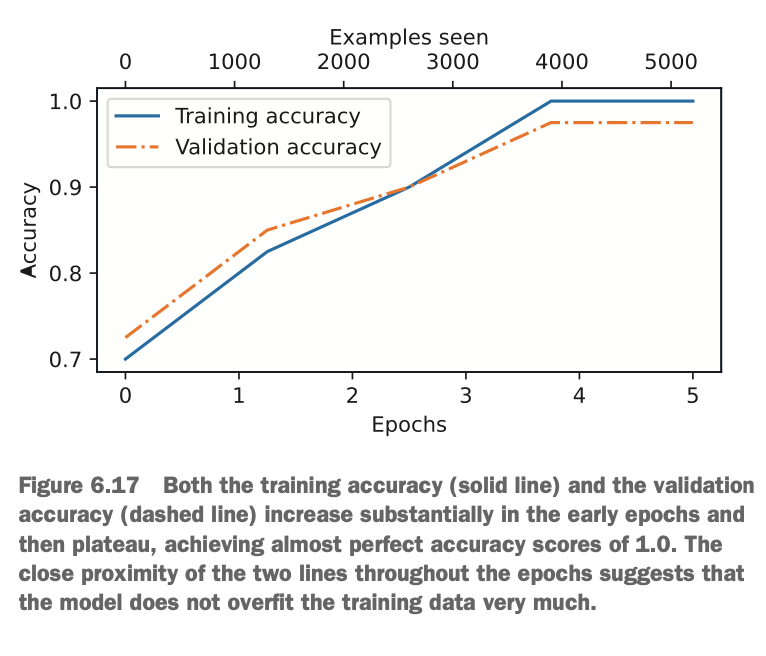

We also visualize the training process to see how the loss and accuracy change over time.

As shown, both the training loss and the validation loss keep decreasing as the epochs progress, which indicates that training is working well. There is almost no gap between the two curves, so there is no sign of overfitting so far.

Note that there is no fixed rule for choosing the number of epochs. Starting with 5 is usually a good choice. If the model overfits early, reduce the number of epochs; if the validation loss is still trending downward, increase it.

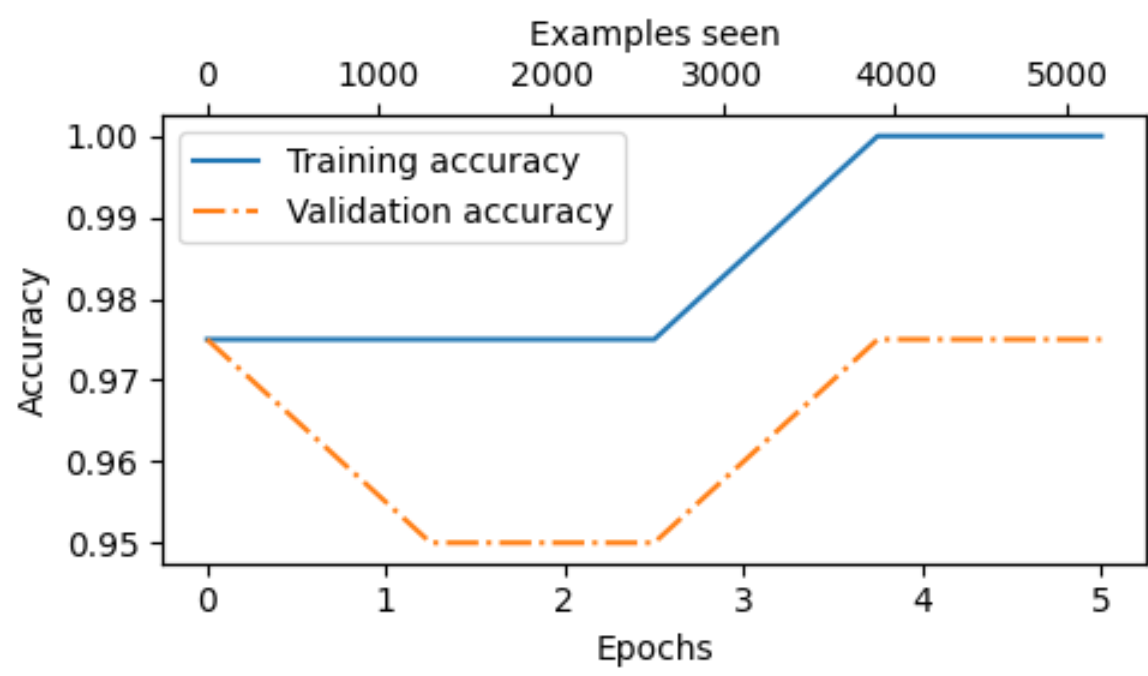

As the loss decreases, the accuracy improves substantially and only levels off in the last two epochs.

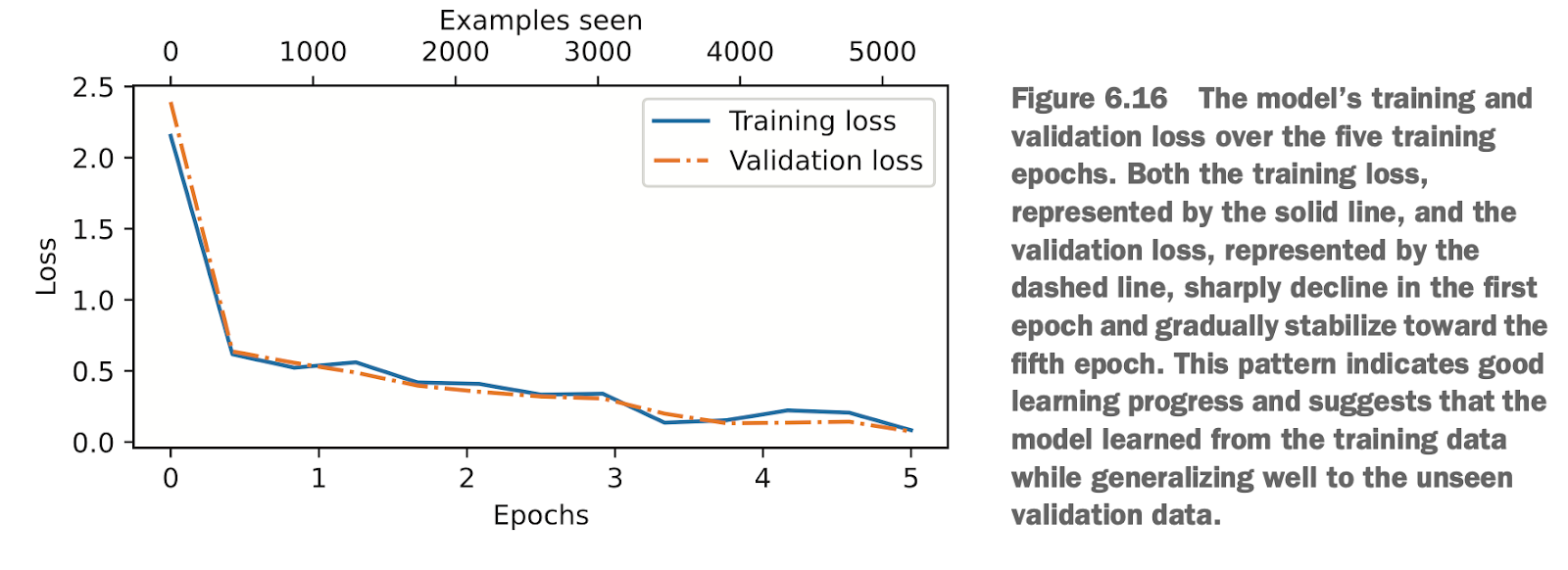

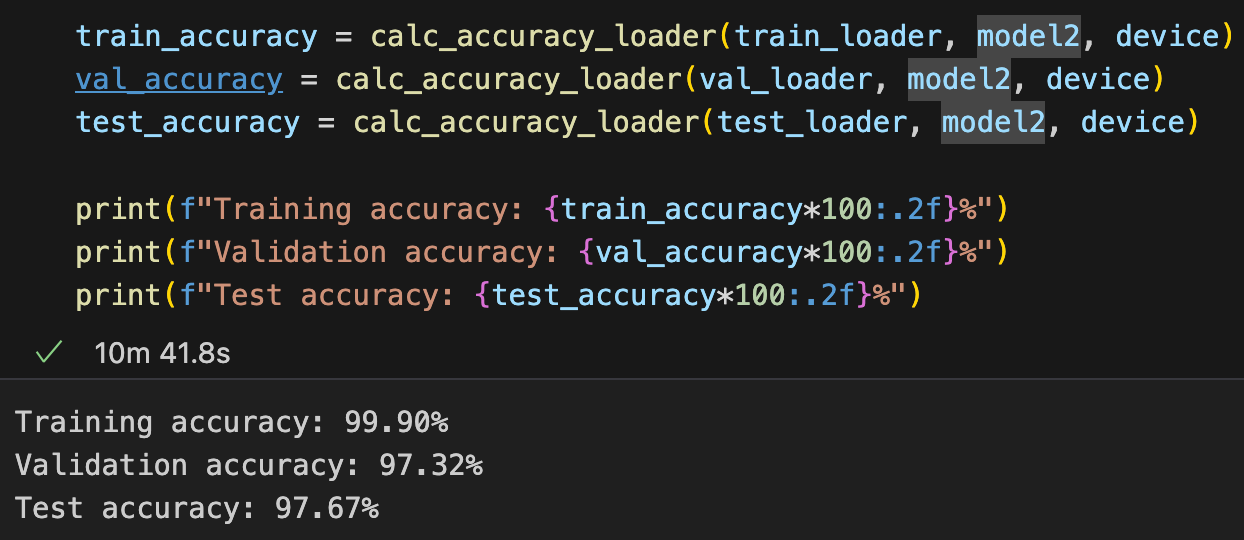

Once training is complete, we print the accuracy on the full training, validation, and test sets to see how much it has improved over the roughly 50% measured earlier on just a few batches.

Conclusion:

Model performance: the training, validation, and test accuracies are very close to each other, which indicates good overall performance with very little overfitting.

Difference between validation and test sets: the validation accuracy is slightly higher than the test accuracy. This comes from a slight bias toward the validation set introduced during hyperparameter tuning, and it is normal.

Possible improvements: to further reduce the gap between validation and test accuracy, you can improve generalization by, for example, increasing the dropout rate or adjusting the weight decay.

6.8 Using the LLM as a Spam Classifier

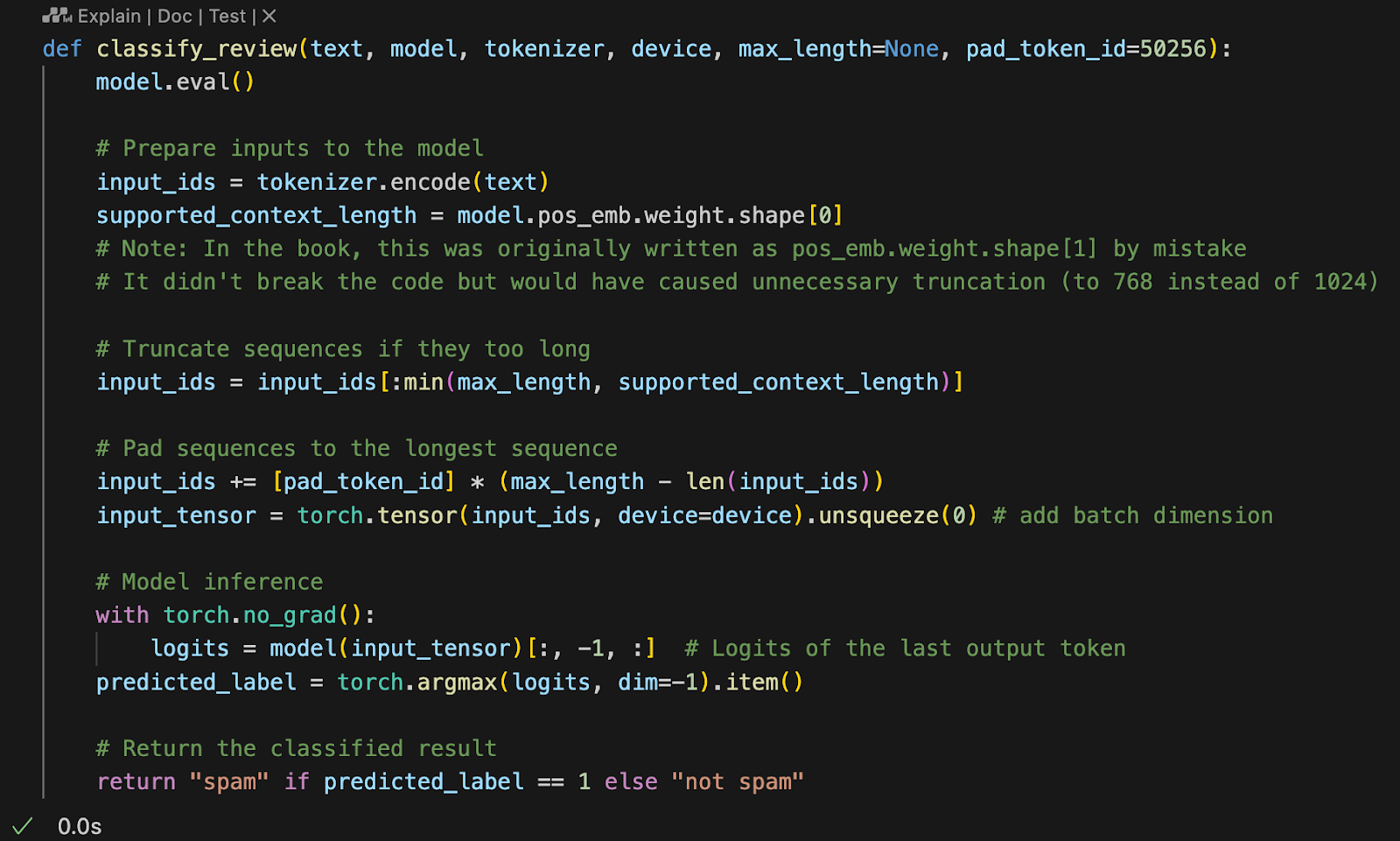

Next, we wrap the fine-tuned model in a simple function that classifies a piece of text:
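In outline, such a wrapper encodes the text, pads or truncates it to the length used during training, runs the model, and maps the predicted class index to a label. The sketch below follows that outline under assumptions: `classify_text`, the character-level `toy_encode` tokenizer, and the untrained toy model are all placeholders, so the predicted label here is arbitrary.

```python
import torch
import torch.nn as nn

def classify_text(text, model, encode, max_length, pad_token_id=50256):
    """Return 'spam' or 'not spam' for a single text (illustrative wrapper)."""
    model.eval()
    # Encode, truncate to the training length, then pad with <|endoftext|>
    input_ids = encode(text)[:max_length]
    input_ids = input_ids + [pad_token_id] * (max_length - len(input_ids))
    input_tensor = torch.tensor(input_ids).unsqueeze(0)  # add a batch dimension
    with torch.no_grad():                                # no gradients needed for inference
        logits = model(input_tensor)[:, -1, :]           # last-token logits
    predicted_label = torch.argmax(logits, dim=-1).item()
    return "spam" if predicted_label == 1 else "not spam"

def toy_encode(text):
    """Hypothetical character-level tokenizer standing in for the real one."""
    return [ord(ch) % 50257 for ch in text]

torch.manual_seed(123)
# Untrained toy model standing in for the fine-tuned LLM (assumption)
toy_model = nn.Sequential(nn.Embedding(50257, 8), nn.Linear(8, 2))

result = classify_text("You are a winner! Claim your prize now.", toy_model, toy_encode, max_length=16)
print(result)
```

With the real fine-tuned model and tokenizer, `max_length` should match the length the training data was padded to, otherwise the inputs differ in shape from what the model saw during fine-tuning.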

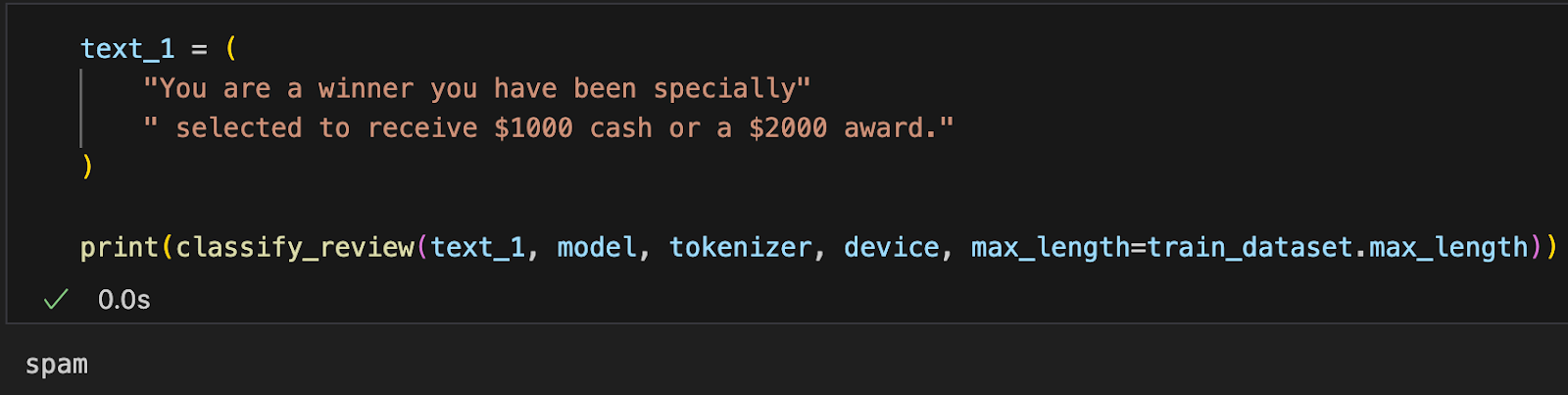

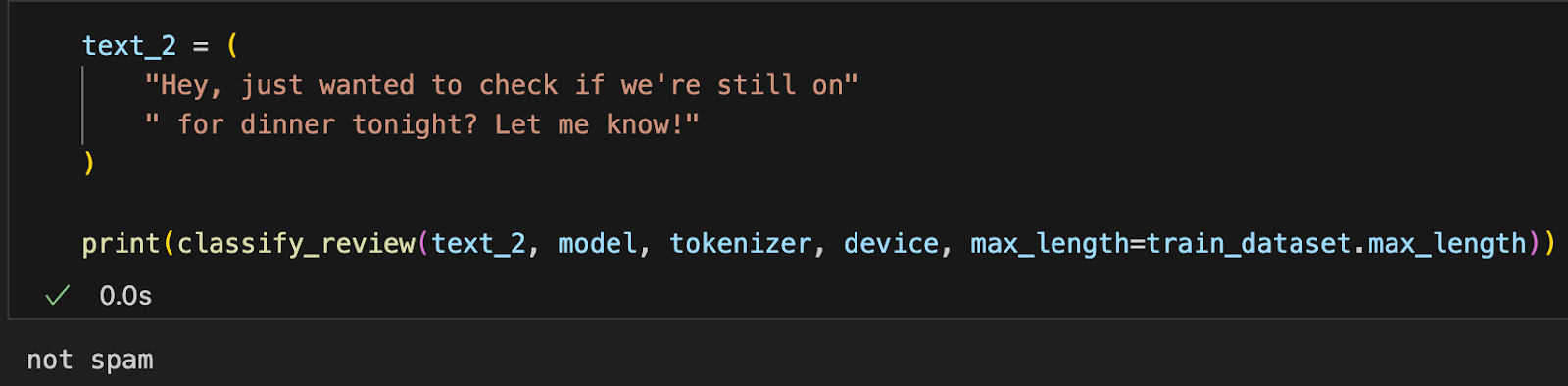

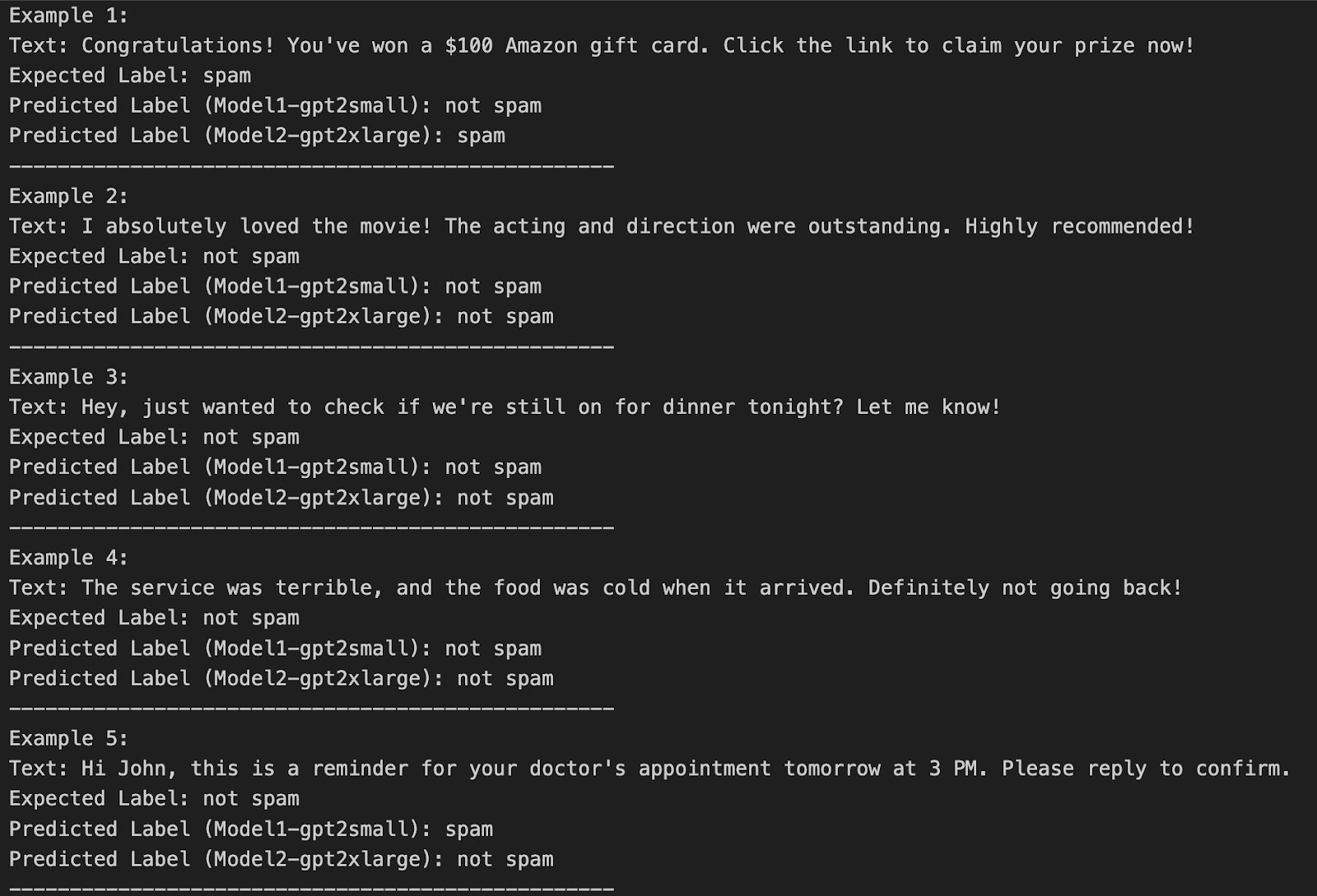

We call this function on a few examples we wrote ourselves to see how well it works.

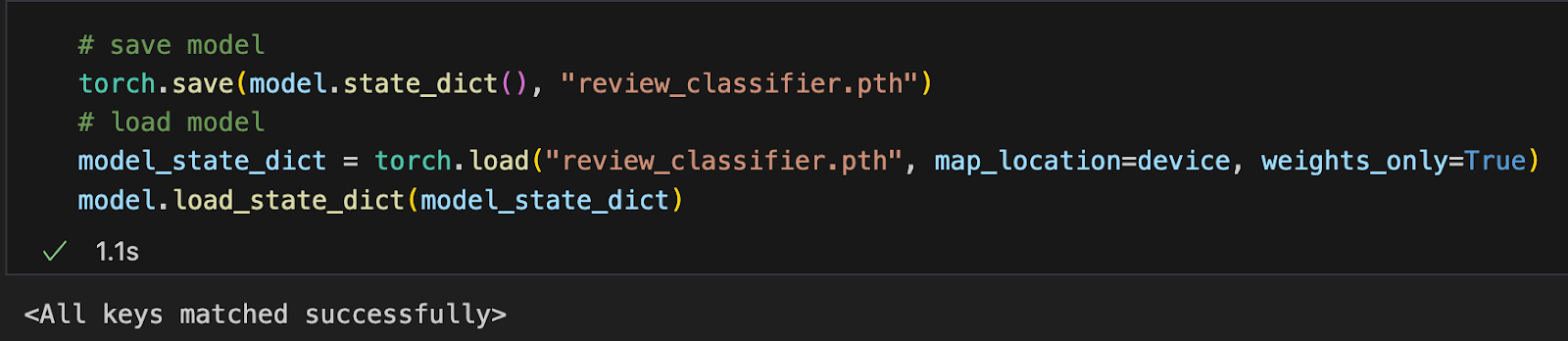

The results look good. Next, we save the model so that it can be loaded and reused later.
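Saving and reloading typically goes through the model's state dict. The sketch below uses a small linear layer as a stand-in for the fine-tuned classifier and a temporary directory so it leaves no files behind; the file name `spam_classifier.pth` is a made-up example.

```python
import os
import tempfile

import torch
import torch.nn as nn

# Small stand-in for the fine-tuned classifier (assumption)
model = nn.Linear(4, 2)

with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "spam_classifier.pth")  # hypothetical file name

    # Save only the weights, not the whole Python object
    torch.save(model.state_dict(), path)

    # Later: rebuild the same architecture, then load the saved weights into it
    restored = nn.Linear(4, 2)
    restored.load_state_dict(torch.load(path, map_location="cpu"))

print(torch.equal(model.weight, restored.weight))
```

For the real model, the restored instance must be constructed with the same configuration, including the replaced 2-class output head, before the state dict is loaded.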

As a further experiment, we try the gpt2-xlarge weights. We first repeat the fine-tuning, which now takes considerably longer: about 50 minutes, compared with 5 minutes for gpt2-small.

Looking at the loss and accuracy during training, the model already performs very well after the first epoch.

Checking the overall accuracy, it appears to be about 2 percentage points higher than before, although the time taken for this step grows from 1 minute to 10 minutes.

On our own hand-written test cases, gpt2-xlarge does seem "smarter" and classifies them more accurately.

Summary

There are several strategies for fine-tuning large language models (LLMs), including classification fine-tuning and instruction fine-tuning.

Classification fine-tuning replaces the output layer of the LLM with a small classification layer.

For classifying text messages as "spam" or "not spam," the new classification layer has only two output nodes. Previously, the number of output nodes equaled the number of unique tokens in the vocabulary (i.e., 50,257).

Instead of predicting the next token in the text, as in pretraining, classification fine-tuning trains the model to output the correct class label, such as "spam" or "not spam."

The input to the fine-tuned model is text converted into token IDs, just as in pretraining.

Before fine-tuning an LLM, we need to load the pretrained model as the base model.

Evaluating a classification model involves computing the classification accuracy (i.e., the proportion or percentage of correct predictions).

Fine-tuning a classification model uses the same cross-entropy loss function as pretraining the LLM.